The IT industry is at the doorstep of the long-awaited exascale era, which promises massive systems that can run at least one exaflops, or a quintillion (a billion billion) calculations per second, at 64-bit floating point precision. These systems will run a lot faster than that at lower precision, and faster still on low-precision integer data pumped through their vector and matrix engines.

The first such supercomputers in the United States – “Frontier” at Oak Ridge National Laboratory, “Aurora” at Argonne National Laboratory, and “El Capitan” at Lawrence Livermore National Laboratory – are expected to come online between this year and next.

It’s an important step forward in computing technology, says Scott Tease, vice president and general manager of HPC at Lenovo. It will enable scientists and researchers to run programs and tackle problems that were too big or complex for current systems. The hope is that those capabilities eventually will become available to a greater number of organizations.

“The worry I have is that type of technology is going to be designed in a way that’s so complicated, so hard to use, so hard to consume that all that will be the purview of only a handful of sites around the world – a couple in China, a couple in the United States, one in Europe, one in Japan, that kind of thing,” Tease tells The Next Platform. “That’s the worst thing that can happen. What we want to see happen is those big sites around the globe forge a path for others to follow and then we make that technology easy to trickle down to everybody in the industry.”

The idea of making such powerful HPC computing more widely available to enterprises and others that traditionally could not access it has been running through the IT industry for years. IBM’s Supercomputing On Demand service launched in 2003, three years before Amazon Web Services exploded onto the IT scene. Since then, computing power has become less expensive, systems have become more efficient, and the cloud has opened up new ways to adopt and pay for it. (Lenovo’s effort on this front is called “Exascale to Everyscale.”) At the same time, the demand for such compute power has grown as the amount of data being generated has skyrocketed and new workloads like data analytics, artificial intelligence and machine learning have become more pervasive.

A couple of years ago, we spoke with Peter Ungaro, who was then senior vice president and general manager of Hewlett Packard Enterprise’s HPC and AI business. Ungaro had been chief executive officer of supercomputer maker Cray and, earlier still, was the IBMer who launched the Supercomputing On Demand effort. He said his goal was to make exascale computing available to enterprises desperate for such compute power. HPE bought Cray for $1.3 billion in 2019, putting itself at the center of the exascale push because Cray had won the contracts for all three exascale systems in the years before the acquisition. (Ungaro retired from HPE last year and has not yet surfaced elsewhere.)

Enabling HPC-level computing to cascade down to mainstream enterprises has been done before. In 2008, when Scott Tease was still with IBM – before Big Blue sold its System x x86 server business, including its HPC unit, to Lenovo in 2014 for $2.1 billion – he was part of the team that built “Roadrunner,” a hybrid-architecture supercomputer that became the first in the world to surpass a petaflops of performance. It was a $100 million monster, with almost 13,000 IBM PowerXCell CPUs and 6,480 dual-core AMD Opteron processors housed in blade servers interconnected with InfiniBand. It comprised hundreds of racks and hundreds of miles of cabling, he says.

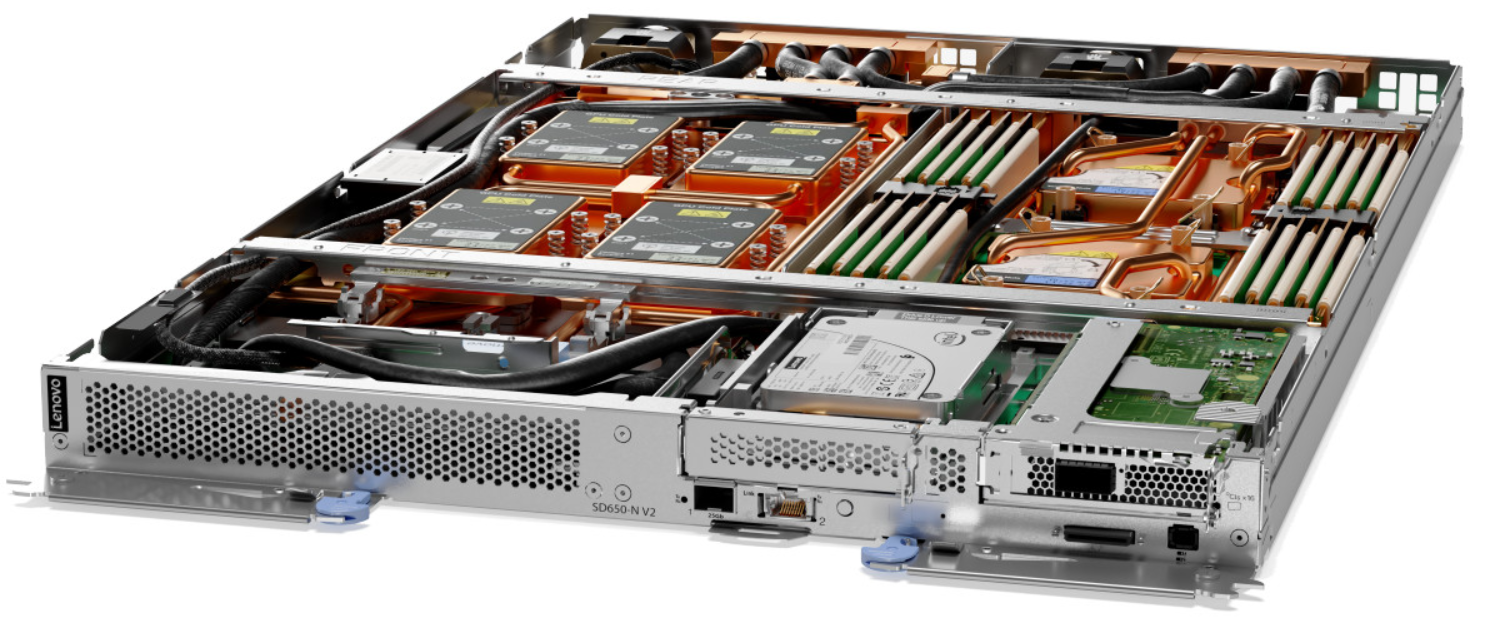

Such numbers were far beyond what the average organization could afford. However, thanks in large part to more powerful chips and accelerators, Lenovo and other OEMs can now pack 2.5 petaflops of performance into a single rack, according to Tease. He points to Lenovo’s ThinkSystem SD650-N, a water-cooled 1U rack server with two “Ice Lake” Xeon SP CPUs and four Nvidia “Ampere” A100 GPUs. The system is designed for AI training and inference workloads and supports more than 700 HPC applications. It is shown below:

“A lot of the complexities and the difficulties that we used to have to reach those really big scales, where you could do interesting research and take on really new modeling and problem-solving, is now condensed down to something more and more people can use,” Tease says, adding that the improvements in CPU and GPU capabilities “allowed us to package so much more into such a small space.”

Lenovo and other system vendors can now deliver more compute power in smaller increments that are less complex because there are fewer building blocks.

“The key thing is that vendors like us or like an Atos or an HPE or Dell have got to design the gear in a way that not only can be consumed by the big sites that have generally greenfield datacenters,” he says. “They’re building everything from scratch and they can do pretty much anything. If they want the code, they write it themselves. But that same exact design point, that same engineering, can also translate down for the everyday user, which we call ‘every-scale users,’ so that it fits into their datacenter on their power through their doors.”

Now Lenovo is opening another avenue for enterprises that need high levels of compute. At its Winterstock event this week, the vendor said it is offering HPC compute power through its TruScale as-a-service initiative. The goal of TruScale HPCaaS is to give enterprises access to Lenovo’s supercomputer portfolio in a cloud-like model, where they can quickly access compute power as needed, consume it on a pay-per-use basis and have it managed by Lenovo rather than managing it themselves.

It’s a model designed with mainstream enterprises in mind. Traditional HPC users are large government or research institutions that keep much of their equipment on premises and have the in-house talent to run and manage it. Smaller organizations aren’t HPC experts and don’t have an administration team, but they still need HPC power.

Both groups, however, face challenges that an HPC-as-a-service model can address. Traditional users need new resources but are hindered by global supply-chain problems that stretch out the time it takes to get new gear into the datacenter. Lenovo can give these organizations faster access to the systems they need to scale their environments.

For mainstream enterprises, one option is to turn to a cloud services provider. Top public cloud providers Amazon Web Services (AWS), Microsoft Azure and Google Cloud all offer HPCaaS, as do vendors like HPE with its GreenLake platform and Penguin Computing with its On-Demand service. Hyperion Research said in a report that about 20 percent of HPC workloads now run in the cloud, double the share seen in 2018. Hyperion also expects HPC applications in the cloud to grow 2.5 times faster than the on-prem HPC server market, driven in large part by the COVID-19 pandemic, which accelerated the shift to remote working and the adoption of cloud services.

Tease says that when running analytics and AI workloads, a key is getting the compute as close to the data as possible to reduce the processing and analysis times and the costs of moving the data to and from the cloud.

“We can co-locate the compute next to the data that they’re actually going to analyze,” he says. “We’ll manage it for them, we’ll run it for them, optimize it for them. We’ll even train them about how to use it properly. It’s basically a cloud-like experience with cloud-like economics, but with on-prem benefits of having the processing close to the data that’s being generated.”

Data is the foundation of AI workloads, and the need to process and analyze that data is high. Moving it to and from the cloud costs time and money that many enterprises don’t have.

“Then when we actually go into production – let’s say we’re in a manufacturing environment where I’m trying to do quality control with the models that I built – it’s even more difficult to send that data to the cloud and then get the answers back in time for these high-speed, real-time applications,” he says. “With those kinds of use cases, people may want the cloud economics, but they can’t. It just doesn’t fit the application because of the real-time nature of it. They’re going to want to put that gear on-prem, but we can give them at least an economic model that resembles the cloud that they may do for other parts of their business.”

In the short term, TruScale HPCaaS is about enabling organizations to have gear running on premises, close to their data and with that cloud-like consumption model. Eventually, however, Lenovo hopes to reach the point where it can share resources across sites.

“I would love to see Rutgers University, New York University, Harvard University, Yale University, or the University of Chicago with their HPC resource that they host themselves, but that can also be made available as a service to the local community,” Tease says. “That is another opportunity. Either we could host that for them somewhere or the university hosts it as part of their outreach to the community. That’s the sort of thing long term that I’d like to be able to see us deliver on and that takes that democratization of HPC much, much further. And that’s really what the end goal is here, to make sure as many people as possible are taking advantage of the capabilities that we’re able to give them with HPC these days.”
