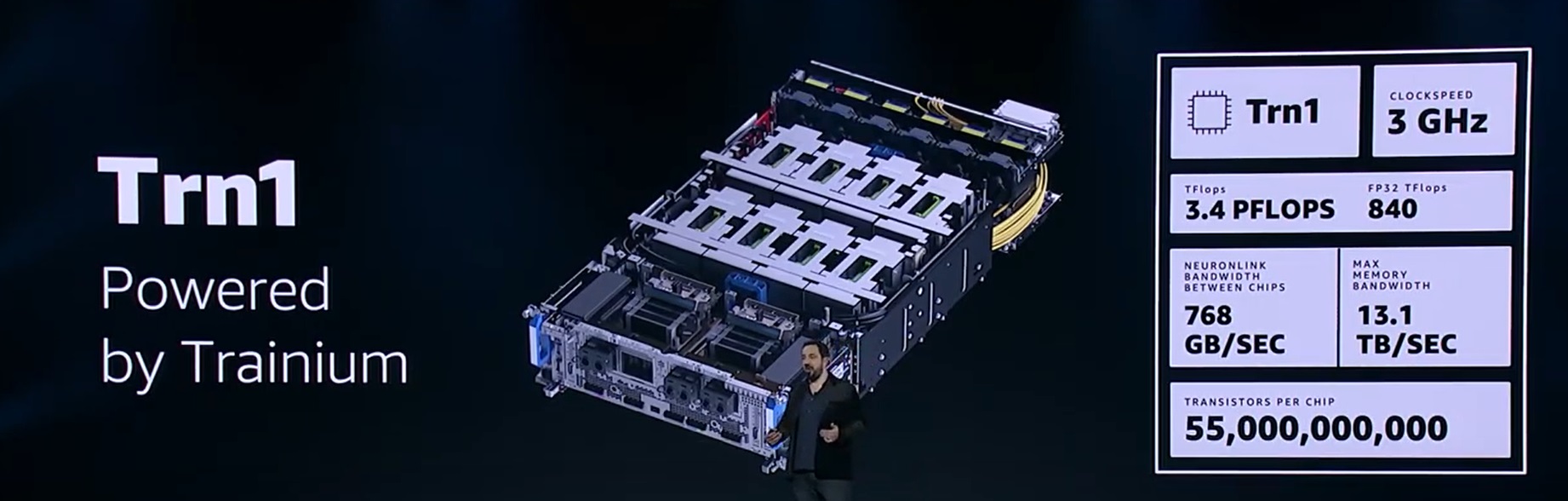

While AWS is making it clear it will continue to innovate around GPUs for cloud-based machine learning, there was little doubt after today that the company’s own Trainium devices are set to outdo what even the top-line Nvidia GPU instances have achieved.

In his keynote at AWS Re:Invent today, EC2 general manager, Peter DeSantis, described solid GPU performance with both the P3dn (Nvidia V100) and P4 (Nvidia A100) instances over time, but contrasted the memory and latency of these instance types with AWS’s own Trainium (Trn1n) option for AI/ML training.

All the way back in 2018 when BERT-Large was the showcase model for large-scale training, AWS’s P3DN instances with 256GB memory and 100Gb/sec networking represented the state of the art in training capabilities. However, with model size (by parameter count) and complexity growing 10X annually, even the P4D when it launched last year with 25 percent more memory and 4X the compute performance would still leave GPT-3 models waiting.

BERT-Large was state of the art in 2018, GPT-3 with its 175 billion parameters is 500X bigger than BERT and now there is talk of emerging models that hit the 10 trillion parameter mark. In this world, GPU limits are being stretched in two directions: memory and latency. Even with GPT-3 models, DeSantis says, it would take decades to train on a single P4D (not that anyone training would use one machine, of course).

“We collaborated with Nvidia to enable ultra-low latency networking using Elastic Fabric Adapter (EFA), which is a specifically optimized EC2 networking stack for HPC but we applied the same techniques to machine learning and the latency and speedups for these tightly coupled tasks made us the best for training on GPUs,” DeSantis explained.

While this was good news for last year’s GPU cloud users, that 10 trillion parameter world needs something different and Trainium is – even if it preserves some familiar elements and features of the GPU, especially in terms of flexibility. Getting around the networking overhead and solving the latency problem is where Trainium steps ahead.

Trainium has 60 percent more memory than the Nvidia A100 based instances and 2X the networking bandwidth. For the highest end of the training customer set, AWS has also created a network-optimized version that will provide 1.6 Tb/sec of networking, all built around that same EFA-2 acceleration development with some other secret sauce to further reduce latency and cost of training.

On the ground, this means training GPT-3 in under two weeks, which is more of a feat than it may sound. But it’s not necessarily just a matter of time to result, it’s number of instances used. The same training run on the P3DN would take 600 instances, P4 would take 200. DeSantis says this training result can be achieved with 130 Trainium instances, which opens the door to scaling ever-larger clusters with reduced training time. The benefit comes from all that tweaking of the networking efficiency. The V100 instances come with nearly 50 percent communication overhead, which was dramatically improved with the P4 instances (14%). DeSantis says the Trn1n has a 7 percent network hit.

The latency is one thing, the flexibility is another – and here’s where GPUs got it right, DeSantis says. “One reason people love GPUs is that they provide a high degree of flexibility, which allows for experimentation. Specialized processors for machine learning forgo this flexibility in exchange for efficiency but in machine learning, this kind of premature optimization is a bad idea.”

What he’s referring here is the systolic array approach to ML processors which, while an old concept, has been revived in AI/ML because it eliminates the need to shuttle data between registers and main memory (hands off intermediate results inside the processor). All of this makes for efficient matrix multiplication. But in non-GPUs, it is not flexible or efficient. With Trainium there are 16 in-line, fully programmable processors, which means the ability to program operands directly in the processor (C or C++ so no funky new language or tooling). This provides the best of all worlds (systolic array flexibility and matrix math efficiency along with programmatic ease).

AWS is trying to see around the curve for what its largest training customers might need. Current, known models are one thing but DeSantis says AWS has been keeping an eye on what new techniques will emerge and building to suit.

For example, he says, “training requires doing a lot of floating point calculations and in various steps there’s a need to round. All processors do this efficiently, but when training, this kind of rounding might not be optimal.” He explains that rounding allows for lower precision without big accuracy loss but this stochastic approach is a lot more work since each time a number is rounded, a random number is generated to round the input. Long story short, this gets hairy at scale and comes with a lot of overhead. To counter this and keep efficiency up and costs down with lower precision, Trainium will have native support for stochastic rounding. “It’s a useful feature but right now no one is using it for models [with non-Trainium instances] because it’s too expensive. We want ML scientists to use this on the largest models.”

“Our software development kit, AWS Neuron, lets people use Trainium as a target for all popular frameworks. This gives cost and performance advantages with little or no changes to your code while still maintaining support for other processors,” DeSantis adds.

By “other processors” there is a heavy nod toward GPUs, which were given honorable mention throughout the talk but were really being pitched as so last year.

It was a nice gesture to Nvidia when DeSantis said, apropos of nothing and in the middle of his exaltations about Trainium, “we are not done working with Nvidia on optimizing for GPU and will keep adding more networking and throughput for GPU instances in the coming year.”

You know, because AWS needs the networking. Well, for now.

Be the first to comment