Artificial intelligence and machine learning are foundational to many of the modernization efforts that enterprises are embracing. Those efforts range from using them to analyze the mountains of data enterprises are generating more quickly and to automate operational processes, to running the advanced applications (such as natural language processing, speech and image recognition, and machine vision) needed by a broad array of industries, including financial services, agriculture, healthcare and automotive.

The challenge with AI, as with most emerging technologies, is making it, and the powerful systems it runs on, available beyond the largest hyperscalers and the academic and research institutions that have the money and skillsets to embrace and take advantage of them. A broad array of OEMs and software makers are pushing to make AI accessible to a wide swath of the mainstream market, not only to help organizations get the tools they need to adapt to a world that continues to expand beyond their core datacenters into the cloud and the edge, but also to grab their share of a fast-growing global AI market that IDC expects to grow on average more than 17 percent a year, from $156.5 billion this year to more than $300 billion in 2024.
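As a quick sanity check on the IDC figures, the sketch below computes the constant yearly growth rate that would take the market from $156.5 billion to $300 billion. It assumes "this year" means 2020, which gives four years of compounding to 2024; the function name `implied_cagr` is illustrative and not part of any IDC methodology.

```python
# Implied compound annual growth rate (CAGR) for the IDC forecast.
# Assumption: "this year" is 2020, so 2020 -> 2024 spans four compounding periods.
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1

start_billion = 156.5  # IDC's figure for this year, in billions of dollars
end_billion = 300.0    # lower bound of IDC's 2024 figure, in billions of dollars

cagr = implied_cagr(start_billion, end_billion, years=4)
print(f"Implied average annual growth: {cagr:.1%}")
```

With those inputs the implied rate comes out at roughly 17.7 percent a year, in line with IDC's "more than 17 percent."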

That has included building systems within their traditional product portfolios that leverage the fastest and newest technologies, such as the latest GPU accelerators from the likes of Nvidia and AMD, and finding new payment models, including subscriptions and pay-as-you-go, to make it all more affordable. Dell EMC this year unveiled a 5 petaflops system at the San Diego Supercomputer Center, based on its PowerEdge servers running AMD Epyc CPUs and Nvidia V100 Tensor Core GPUs, that is being made available to organizations in a wide range of industries. Last month, the company talked about offering HPC and AI systems not only on premises but also through an as-a-service model, as well as expanding its use of technologies such as containers, Kubernetes and various AI-specific frameworks and languages.

Hewlett Packard Enterprise made a significant push into the HPC space when it bought supercomputer expert Cray last year for $1.3 billion. Peter Ungaro, former CEO of Cray and now senior vice president and general manager of HPE’s HPC and AI business, told The Next Platform in May that the development of exascale systems has “spawned a whole new set of technologies, both hardware and software technologies, that are in the process of being developed for these exascale systems that will be leverageable by everyone.” Ungaro added that in the future, “pretty much every company … will have a system in their datacenter that will look and feel very much like a supercomputer and have the same kinds of technologies that a supercomputer today has in it.”

Others like IBM and Cisco also are pushing to put AI and powerful compute in the hands of as many enterprises as possible. In the same vein, Lenovo over the past couple of weeks has made new pushes on multiple fronts to make AI and compute power more accessible. The company's Data Center Group (DCG) at the virtual SC20 supercomputing event last month unveiled new systems and software tools designed to make it easier for organizations to adopt AI and data analytics capabilities, part of its larger Exascale to Everyscale initiative.

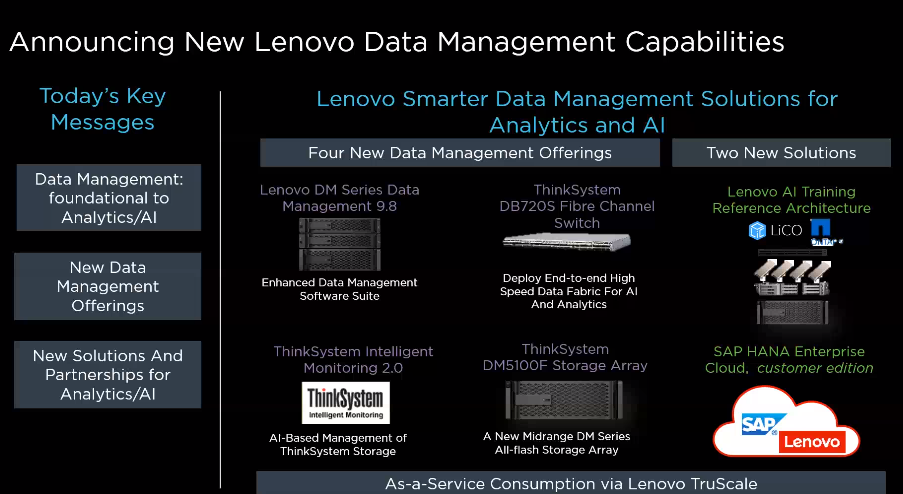

This week Lenovo introduced new data management solutions, including an AI training system reference architecture developed with Nvidia and NetApp that combines Lenovo servers and storage with Nvidia GPUs.

“It sounds like a simple goal: to bring the highest-scale technologies and make them available, usable, deployable, digestible by average customers,” Scott Tease, general manager of HPC and AI at Lenovo DCG, said during a conference call with journalists. “It sounds simple, but it takes quite an engineering commitment to make that happen. It’s really easy to take some of these very high-end building blocks and put them inside of weird-shaped racks and odd types of power delivery or odd types of cooling. It’s much harder to take those building blocks and put them in standard form factors that run standard high-voltage power, that give you a choice of air cooling or water cooling, that are based on open-source software, open networks, things like that. And that’s what we’re doing with all of our designs and making sure that those highest end technologies are not reserved just for the five or six exascale systems that we’ll see in the world the next five or six years, but they’ll be available to users of all sizes from single units, partial racks on up to the biggest in the world.”

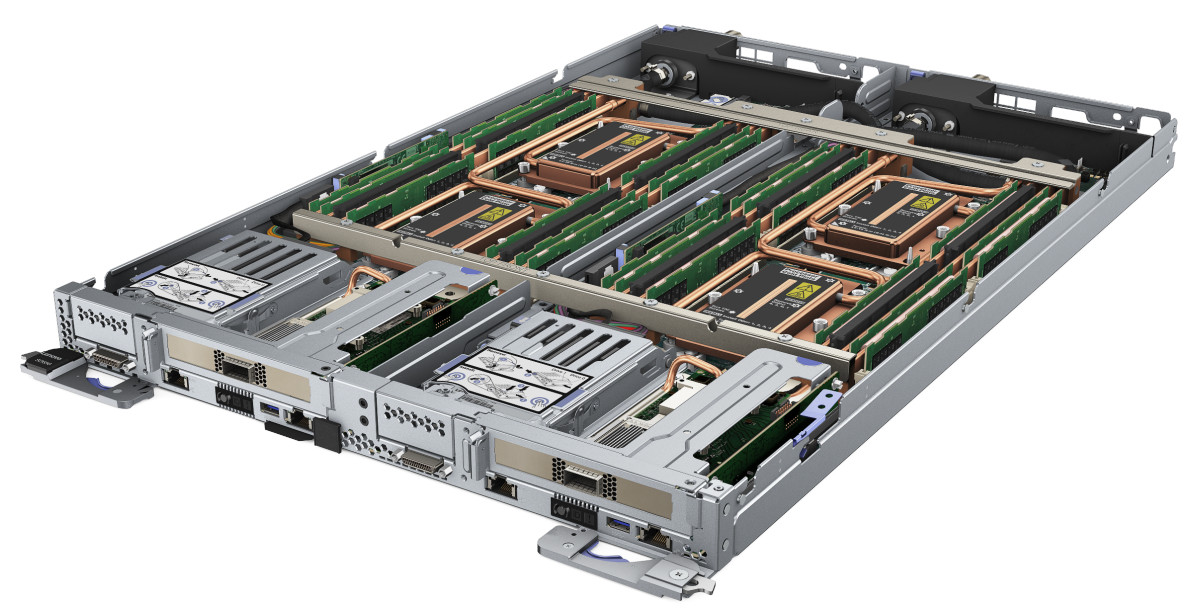

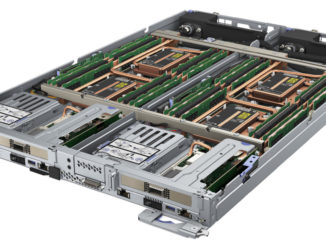

At SC20, Lenovo introduced the ThinkSystem SD650-N V2 server, a Direct-to-Node liquid-cooled system. Each server fits in a 1U form factor, and a 19-inch rack of them delivers up to 3 petaflops of compute power. The system is powered by four Nvidia A100 GPUs interconnected with NVLink and two Intel 3rd Generation Xeon Scalable processors, and it uses Nvidia's InfiniBand networking. Lenovo's Neptune liquid cooling reduces energy consumption by up to 40 percent. Enterprises can get 36 of the systems housed in a single rack, Tease said.

The ThinkSystem SR670 V2 is an air-cooled 3U system that supports up to eight Nvidia A100 Tensor Core or T4 GPUs, delivering up to 160 teraflops of compute power. Lenovo also offers a model that includes liquid-to-air heat exchangers that can cool four board-mounted A100 GPUs. The differences between the two systems show the different directions that air- and liquid-cooled systems are taking in terms of compute power and density, he said.

“Up until now, most of our water-cooling platforms and our air-cooled platforms were the same density. We just chose water cooling or air cooling for the cooling methodology,” Tease said. “In this generation, you start to really see that air is not able to keep up with the high component power and the amount of heat that these systems generate at the density that you can do with water. … It does show how air is going to start mandating a reduction in density or sacrificing some of the highest speed parts to make sure that our customers, whether they’re choosing a water-cooled roadmap to go super dense and really pack stuff in or standard traditional air-cooled datacenter, get choices.”
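The density gap Tease describes can be roughed out with back-of-the-envelope arithmetic. The sketch below assumes, since the article does not say which precision Lenovo's figures use, that each A100 contributes Nvidia's published peak of 19.5 FP64 Tensor Core teraflops and that the CPUs add nothing. Under that assumption the results land close to the roughly 160 teraflops quoted for the SR670 V2 and the roughly 3 petaflops quoted for a rack of SD650-N V2 servers, though that is not confirmed as the basis of Lenovo's numbers.

```python
# Assumption (not from the article): peak per A100 = 19.5 FP64 Tensor Core TFLOPS.
A100_FP64_TENSOR_TFLOPS = 19.5

def peak_tflops(gpus: int) -> float:
    """Peak GPU teraflops for a server holding `gpus` A100s (CPUs ignored)."""
    return gpus * A100_FP64_TENSOR_TFLOPS

# (name, A100 count, height in rack units), as described in the article
servers = [
    ("ThinkSystem SD650-N V2 (liquid-cooled)", 4, 1),
    ("ThinkSystem SR670 V2 (air-cooled)", 8, 3),
]

for name, gpus, rack_units in servers:
    tflops = peak_tflops(gpus)
    # Density = peak teraflops per rack unit of height
    print(f"{name}: {tflops:.0f} TF per server, {tflops / rack_units:.0f} TF per U")

# 36 liquid-cooled servers in one rack, as Tease described
rack_pflops = 36 * peak_tflops(4) / 1000
print(f"36 x SD650-N V2: {rack_pflops:.2f} PF per rack")
```

Per rack unit, the liquid-cooled design packs about 78 teraflops against about 52 for the air-cooled one, which is the kind of density split Tease attributes to the limits of air cooling.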

Also at SC20, Lenovo announced GOAST (Genomics Optimization and Scalability Tool), a validated, pre-configured bioinformatics offering based on standard two-, four- and eight-socket ThinkSystem servers and tuned to accelerate genetic sequencing analysis, cutting the time needed to process a whole genome from as long as 40 hours to less than an hour.

The AI training reference architecture is part of the data management solutions Lenovo rolled out this week. The entry-level cluster architecture includes ThinkSystem SR670 servers, DM5000F all-flash storage and Nvidia GPUs, giving enterprises a right-sized system for getting started with AI training workloads.

“The goal is to integrate all of that best-of-breed capability, provide one point of transaction, one point of support, and to make that efficient and affordable to people who are willing to deploy more analytics and more AI on the edge,” said Stuart McRae, executive director and general manager of storage for Lenovo DCG. “As that evolves for customers, as their skill set in the industry changes and as the demand for AI grows, there’s applications that customers can do, whether it’s loss prevention, recognition, manufacturing AI on the edge.”

The OEM also rolled out the ThinkSystem DM5100F to offer enterprises affordable, low-latency all-NVMe storage for analytics and AI deployments, and said that its DM Series storage systems now support S3 object storage, creating a single platform through which organizations can manage and analyze block, file and object data. Organizations also can reduce costs by tiering cold data from hard drives to the cloud or by replicating data to the cloud.

Other data management announcements included ThinkSystem Intelligent Monitoring 2.0, a cloud-based management platform that leverages AI to automate the care and optimization of ThinkSystem storage environments. Enterprises can manage such aspects as storage capacity and performance and detect and fix problems before they happen. Lenovo’s DB720S Fibre Channel switch offers 32 Gb/s and 64 Gb/s storage networking and 50 percent lower latency than its predecessors.

The solutions in the data management portfolio are all available through the company’s TruScale Infrastructure Services, which were introduced early last year to enable organizations to pay for and use them in a consumption-based, as-a-service model.
