Everyone is in a big hurry to get the latest and greatest GPU accelerators to build generative AI platforms. Those who can’t get GPUs, or have custom devices that are better suited to their workloads than GPUs, deploy other kinds of accelerators.

The companies designing these AI compute engines have two things in common. First, they are all using Taiwan Semiconductor Manufacturing Co as their chip etching foundry, and many are using TSMC as their socket packager. And second, they have not lost their minds. With the devices launched so far this year, AI compute engine designers are hanging back a bit rather than trying to be on the bleeding edge of process and packaging technology so they can make a little money on products and processes that were very expensive to develop.

Nothing shows this better than the fact that the future “Blackwell” B100 and B200 GPU accelerators from Nvidia, which are not even going to start shipping until later this year, are based on the N4P process at Taiwan Semiconductor Manufacturing Co. This is a refined variant of the N4 process that the prior generation of “Hopper” H100 and H200 GPUs used, also a 4 nanometer product.

TSMC has been very clear that the N3 node will be a very important volume product for a long time, and it is interesting that Nvidia chose not to use it when Apple did for its smartphone chips and both AMD and Intel are going to with their future CPUs. Apple is reportedly eating all of TSMC’s N3 capacity for its homegrown, Arm-based smartphone and Mac PC processors. It looks to us like Nvidia is hanging back on the N3 process as Apple helps TSMC work out the kinks in this somewhat troublesome 3 nanometer process, and we assume that Apple will also be in the driver’s seat with TSMC’s N2 2 nanometer nanosheet transistor process, which will be seeing real competition from a credible 18A process from Intel Foundry in 2025. But TSMC has been clear that it thinks its enhanced 3 nanometer process, called N3P, will be able to go up against Intel’s 18A.

The rumor is that most of the $15 billion in advanced bookings over many years that Intel Foundry has on the books are for Arm server chips and AI training and inference chips that Microsoft is building for its own use and for that of partner OpenAI. And with national security flag waving as well as the practicalities of needing a second source for chip suppliers among all chip designers, we think that Intel might be low-balling its aspirations of having $15 billion a year in external foundry revenues by 2030, and we can see the internal Intel product groups for chips in PCs and servers driving maybe $25 billion in additional foundry revenues. That’s $40 billion, and who knows how profitable Intel Foundry will be.

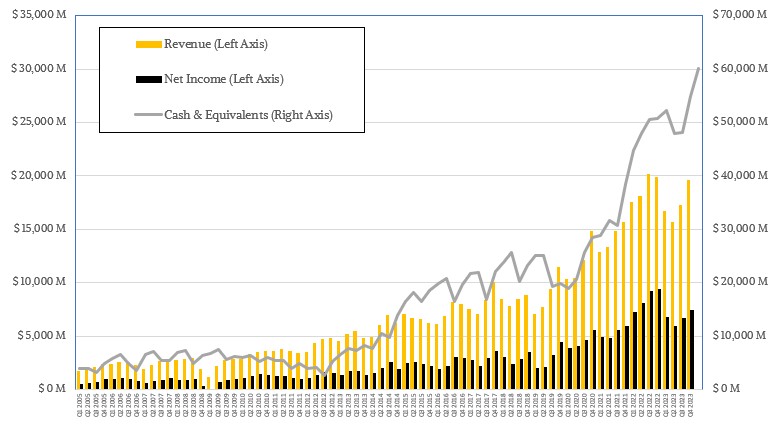

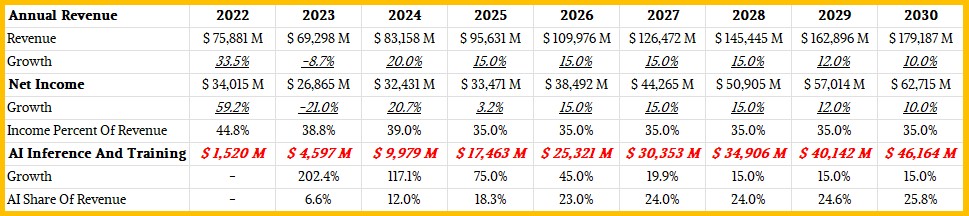

TSMC had $69.3 billion in sales and $26.9 billion in net income in 2023, and that was a year when sales were down 8.7 percent and net income was down a harsh 21 percent. The PC market decline has hurt pretty badly and so has a slump in smartphone sales, but PCs have flattened and smartphones are recovering. AI acceleration represents a new, large, and very profitable source of customers for chip making.

How much? We have some hints from TSMC. In the second quarter financials for 2023, TSMC’s top brass said that 6 percent of revenues, or about $941 million, was driven by the manufacturing of compute engines for AI training and inference together. We think this was a local minimum amongst the quarters in 2023, not a local maximum (tricky tricky there).

Then CC Wei, chief executive officer at TSMC, said this in going over the financial results for the company for the first quarter of 2024:

“Almost all the AI innovators are working with TSMC to address the insatiable AI-related demand for energy-efficient computing power. We forecast the revenue contribution from server AI processors to more than double this year and account for low-teens percent of our total revenue in 2024. For the next five years, we forecast it to grow at 50 percent CAGR and increase to higher than 20 percent of our revenue by 2028. Server AI processors are narrowly defined as GPUs, AI accelerators, and CPUs performing training and inference functions and do not include the networking, edge, or on-device AI. We expect server AI processors to be the strongest driver of our HPC platform growth and the largest contributor in terms of our overall incremental revenue growth in the next several years.”

Well, this is data we can use. So let’s have some fun.

Given that 6 percent hint in Q2 2023, the low-teens percentage for 2024, the doubling in 2024, and the 50 percent CAGR, we can plot out AI revenues as a percent of total revenues between 2022 (with a guess that AI business tripled thanks to the generative AI boom in 2023) and 2030. These are based on the projections of 20 percent overall revenue growth for 2020 and then straight line historical returns for revenue, income, and AI inference and training. We assume the growth will average out and eventually the share of revenues coming from AI accelerators will also level out. (Perhaps sooner than we think.) Take a look:

TSMC’s average annual growth rate between 2005 and 2023 was 13.8 percent, and we are going to bend that curve down on purpose when our first inclination was to just say it was going to average 15 percent per year. We put a 15 percent spike in for 2023. We also assume in this model that net income stays around 35 percent.

This projection shows that TSMC should have $145.4 billion in sales by 2028, and that will drive $34.9 billion in AI chip sales, which is a 50 percent CAGR from 2023 through 2028 with our estimate of $4.6 billion in AI chip sales for TSMC in 2023. (This is not the only way to solve this equation, but one that doesn’t violate our sensibilities.) Not all GPUs are used for AI, so it really comes down to the definition that TSMC is using. Don’t get too crazy about the specifics; this is a thought experiment.
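The compounding behind those figures is easy to check. This is a minimal sketch that takes our $4.6 billion estimate for 2023 and the 50 percent CAGR from Wei's forecast, then compares the result against the $145.4 billion sales figure in the model. The function name `compound` is ours, used only for illustration.

```python
def compound(start: float, rate: float, years: int) -> float:
    """Grow `start` at a constant annual `rate` for `years` years."""
    return start * (1.0 + rate) ** years


ai_2023 = 4.6        # our estimate of TSMC AI chip sales in 2023, $ billions
cagr = 0.50          # growth rate TSMC forecast for AI processors
total_2028 = 145.4   # modeled TSMC sales in 2028, $ billions

ai_2028 = compound(ai_2023, cagr, 2028 - 2023)
ai_share_2028 = ai_2028 / total_2028

print(f"AI chip sales in 2028: ${ai_2028:.1f} billion")
print(f"Share of total sales:  {ai_share_2028:.1%}")
```

Five years of 50 percent growth multiplies the starting figure by about 7.6X, and the resulting share of modeled sales lands above the 20 percent that Wei talked about.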

Here’s the point. If things progress more or less like we think they will in this model – which assumes no glut in the IT chip market and no major recession or world war – then by 2030, TSMC could have something like $180 billion in revenues and around $46 billion of that could be coming from AI chippery.
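To see how much the curve bends between 2028 and 2030 in this model, we can back out the implied annual growth rates from the endpoints. This is a rough sketch: the 2030 figures are round numbers, so the rates are approximate, and the helper name `implied_cagr` is our own.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Model endpoints in $ billions: 2028 values, then the rounded 2030 values.
total_growth = implied_cagr(145.4, 180.0, 2030 - 2028)
ai_growth = implied_cagr(34.9, 46.0, 2030 - 2028)

print(f"Implied total revenue growth, 2028 to 2030: {total_growth:.1%} per year")
print(f"Implied AI revenue growth, 2028 to 2030:    {ai_growth:.1%} per year")
```

Under these assumptions, AI revenue growth cools from the 50 percent pace to something in the mid-teens, only a few points ahead of overall revenue growth, which is the leveling out the model is built to show.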

And that means Intel might indeed be the second largest foundry behind TSMC, but it will be far, far behind it – like, by a factor of 4.5X. And the TSMC AI training and inference part of the chip foundry business will be a little bit larger than Intel’s entire foundry business.
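The arithmetic behind that comparison, as a small sketch. The Intel inputs are the $15 billion external goal and our $25 billion guess for internal business, and the TSMC inputs are the rounded 2030 outputs of our model. All variable names are ours.

```python
# All figures in $ billions, for 2030.
intel_external = 15.0   # Intel's stated external foundry revenue goal
intel_internal = 25.0   # our guess at foundry revenue from Intel's own chips
tsmc_total = 180.0      # modeled TSMC revenue
tsmc_ai = 46.0          # modeled TSMC AI chip revenue

intel_total = intel_external + intel_internal
size_ratio = tsmc_total / intel_total
ai_vs_intel = tsmc_ai / intel_total

print(f"Intel Foundry total: ${intel_total:.0f} billion")
print(f"TSMC is {size_ratio:.1f}X the size of Intel Foundry")
print(f"TSMC AI revenue is {ai_vs_intel:.2f}X Intel Foundry's total")
```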

This model obviously also assumes a healthy amount of consumption of very complex CPUs for servers, PCs, and smartphones. This represents a doubling of spending, and probably something more on the order of a factor of 5X to 10X average increase in the compute capacity of these devices over those seven years.

Crazy, isn’t it?

With that, let’s actually talk about the TSMC numbers for Q1 2024.

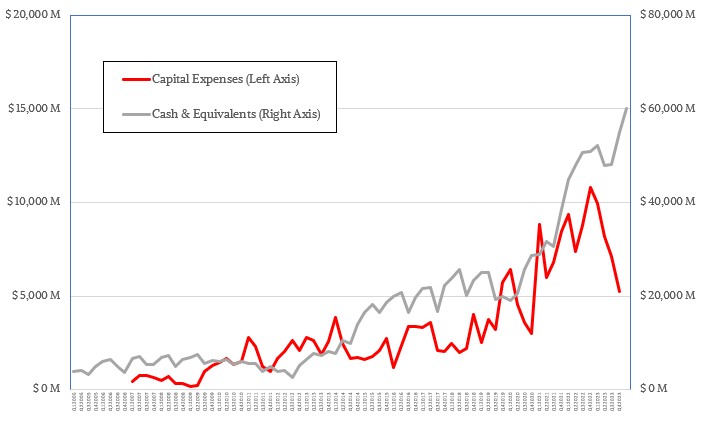

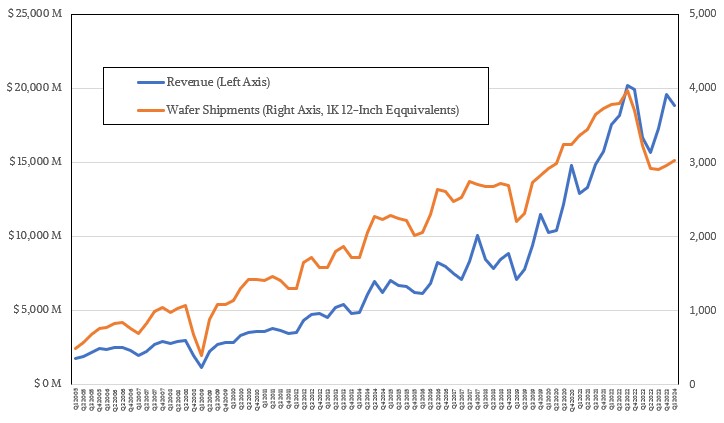

In the quarter ending in March, TSMC posted sales of $18.87 billion, down 3.8 percent sequentially but up 12.9 percent year on year. Net income fell by 4.1 percent sequentially but was up by 5.4 percent year on year to $7.17 billion.
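Those percentage changes let us back out the comparison quarters. This sketch inverts the percent change formula. Because the percentages are rounded to one decimal place, the results are approximations, and the helper name `prior_value` is ours.

```python
def prior_value(current: float, pct_change: float) -> float:
    """Earlier-period value implied by the current value and its percent change."""
    return current / (1.0 + pct_change / 100.0)


sales_q1_2024 = 18.87       # $ billions
net_income_q1_2024 = 7.17   # $ billions

sales_q4_2023 = prior_value(sales_q1_2024, -3.8)        # sequential comparison
sales_q1_2023 = prior_value(sales_q1_2024, 12.9)        # year-on-year comparison
income_q4_2023 = prior_value(net_income_q1_2024, -4.1)
income_q1_2023 = prior_value(net_income_q1_2024, 5.4)

print(f"Implied sales:      Q4 2023 ${sales_q4_2023:.2f}B, Q1 2023 ${sales_q1_2023:.2f}B")
print(f"Implied net income: Q4 2023 ${income_q4_2023:.2f}B, Q1 2023 ${income_q1_2023:.2f}B")
```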

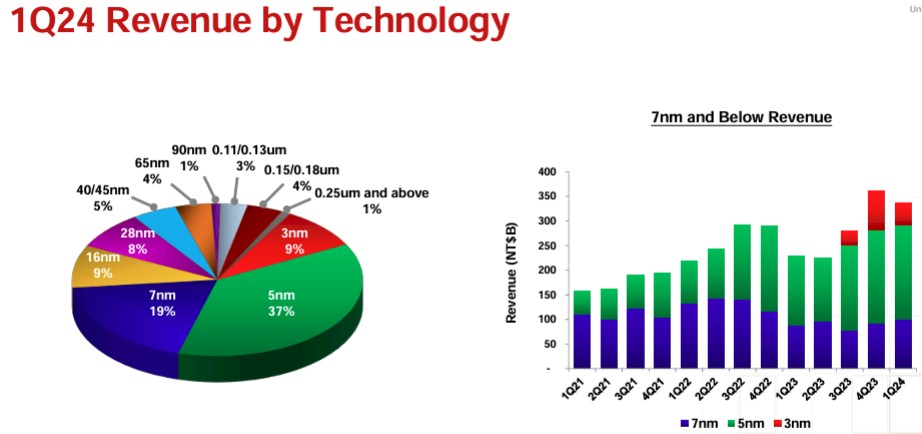

TSMC ended up with just a tad over $60 billion in the bank as the quarter came to a close, and its capital expenses were slashed by 42.1 percent to $5.77 billion as the company invests in future 2 nanometer and beyond technologies but has enough capacity to serve the needs of customers wanting to make chips in 7 nanometer, 5 nanometer, and 4 nanometer technologies. We assume that 3 nanometer is still ramping, and we also think that, given the interest in 2 nanometer, some may jump from N4 to N2 processes while others are jumping from N5 to N3 processes.

As for that N2 process, Wei said that production will start in the final quarter of 2025, with meaningful revenue coming at the end of the first quarter to the beginning of the second quarter of 2026. That seems like a long time away, but it really isn’t. Wei also conceded that it has taken longer for N3 to reach the same yields and margins as the prior N7 and N5 generations. Aside from N3 being a lot more complex, TSMC also set pricing for N3 years ago before the inflation boom, and it has had to absorb some higher costs to bring it to customers even as it has raised its prices. For instance, electricity prices in Taiwan went up by 17 percent last year and went up 25 percent this year.

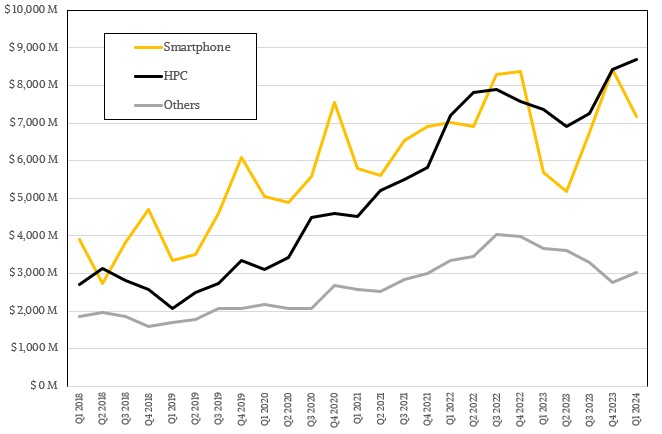

The other neat thing in the Q1 2024 numbers from TSMC is that its HPC segment, by which it does not mean HPC simulation and modeling or AI training or inference but by which it does mean any advanced manufacturing of CPU, GPU, and other high performance compute engines, became its largest segment for the fourth quarter out of the past five. And in the other quarter, HPC tied smartphone chip manufacturing.

The TSMC HPC segment accounted for $8.68 billion in sales, up 18 percent from the year ago quarter. Smartphone chip sales rose by 26.2 percent to $7.17 billion, but were down 16 percent sequentially from Q4 2023, when smartphones and HPC were tied at $8.44 billion in sales apiece. The IoT, automotive, and digital consumer electronics businesses have their issues and don’t add up to much – but for all we know are very profitable.
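Putting the segment figures against the $18.87 billion in total sales for the quarter gives the revenue mix. This is a quick sketch using only the numbers quoted in this article. Lumping everything else into a single remainder is our simplification.

```python
total_sales = 18.87   # Q1 2024 sales, $ billions
hpc = 8.68            # HPC segment, $ billions
smartphone = 7.17     # smartphone segment, $ billions

hpc_share = hpc / total_sales
smartphone_share = smartphone / total_sales
everything_else = total_sales - hpc - smartphone   # IoT, automotive, consumer, other

print(f"HPC:             {hpc_share:.0%} of sales")
print(f"Smartphone:      {smartphone_share:.0%} of sales")
print(f"Everything else: ${everything_else:.2f} billion, "
      f"{everything_else / total_sales:.0%} of sales")
```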

The good news is that wafer starts as measured in 12-inch (300 mm) wafer equivalents were up 2.5 percent sequentially and are more or less holding steady at around 3 million per quarter. That is still a far cry from the 3.82 million wafers per quarter that TSMC averaged in 2022 and the peak it set of 3.97 million wafers in Q3 2022 before the wheels came off of the PC and smartphone businesses. Only a few quarters later, the general purpose server market – meaning not AI servers augmented by GPUs – went into recession.

So it has been a rough couple of years. Perhaps it will be better in the future, as our rosy revenue and income projections for TSMC prognosticate.

The only way to accurately predict the future, however, is to live it. And because Heisenberg has a sense of humor, as you are living it, it is harder to tell what is going on. You can know your position or your velocity, but you can’t know both with equal accuracy.

Heisenburger prognostications can be quite the gut bomb indeed … not sure whether to open one’s mouth or chew (with equal accuracy, at the same time) … the heisencheeseburger’s molten plasma, while yummily delicious, mostly furthers the related indeterminacies. It’s Monte Carlo roulette for the mouth (especially with those drippingly secret sauces!)! Accordingly, and within this rather specific perspective of menu-driven algorithmic gastronomy, could Intel Foundries be on its way to becoming the high NA Burger King to TSMC’s evolutive McWafer (or not!)? “Inquisition minds” … 8^p

A recent video on Intel’s High-NA EUV litho tool installation has an interesting part on the test wafers that ASML created. The smallest line spacing for the regular EUV in production is actually around 13nm. High-NA gets to around 10nm.

https://youtu.be/8i9rs4LNSlI?t=499

SWOT AICU. Get a license. https://labpartnering.org/patents/US11671054

Oscillator for adiabatic computational circuitry (US11671054)

Granted Patent | Granted on: 2023-06-06 “An adiabatic system is one that ideally transfers no heat outside of the system, thereby reducing the required operating power. The adiabatic resonator, which includes a plurality of tank circuits, acts as an energy reservoir, the missing aspect of previously attempted adiabatic computational systems.”

Diabatic Information Burning Engine, DICE, disruption Adiabatic Information Conserving Units, AICU.

“Marketing vs “Real” Performance

This observation that DICE GPUs are unable to sustain their peak clock speed due to power throttling is one of the primary factors that separates “real” matmul performance from Nvidia’s marketed specs.”

https://www.thonking.ai/p/strangely-matrix-multiplications

“DICE On-chip thermal hotspots are becoming one of the primary design concerns for next generation processors. Industry chip design trends coupled with post-Dennard power density has led to a stark increase in localized and application-dependent hotspots. These “advanced” hotspots cause a variety of adverse effects if untreated, ranging from dramatic performance loss, incorrect circuit operation, and reduced device lifespan. In the past, hotspots could be addressed with physical cooling systems and EDA tools; however, the severity of advanced hotspots is prohibitively high for conventional thermal regulation techniques alone.”

https://sites.tufts.edu/tcal/publications/hotgauge/

Now you know. Choose now. Choose wisely.