At every key leap in processing capacity in high performance computing – more than two decades of them, from teraflops through petaflops and now to the verge of exaflops in two years or so – there has been a tension between custom-built systems that break through performance barriers and more general purpose machines that are based on off-the-shelf components, cost less, and tend to be fast followers.

It’s the difference between thoroughbreds and workhorses.

That gap between custom and commodity HPC systems, thinks Lenovo, is getting larger and larger, and the company, which became one of the dominant HPC system suppliers in the wake of acquiring IBM’s System x X86 server division five years ago, is putting a stake in the ground and committing to bring exascale-class technologies to the masses with the kind of machinery they are used to and understand well.

Headquartered in Raleigh, North Carolina, the IBM HPC team that Lenovo acquired as part of the deal included Scott Tease, who was director of high performance computing for 14 years at Big Blue before joining Lenovo in that same role. Lenovo maintained the rights to the Platform Computing HPC middleware (now called Spectrum Computing by IBM) and the GPFS parallel file system (now Spectrum Scale), and has also modernized many of the functions of xCAT in Python and HTML5.

Lenovo doesn’t think we can go back to the good old days when most supercomputers were based on CPUs with some decent interconnects and lots of sophisticated software and storage, and said as much ahead of SC18 last year. While Lenovo has its share of big, capability-class supercomputers – notably the SuperMUC-NG system at Leibniz-Rechenzentrum in Germany, the MareNostrum 4 system at Barcelona Supercomputing Center in Spain, and the “Marconi” system at CINECA in Italy – the company is focused on the middle ground in HPC and expands upwards and downwards from there. And it is unabashedly – and deservedly – proud of that fact. As Tease explained to us at the SC18 conference last year, the bread and butter HPC sale for Lenovo is for clusters that cost somewhere between $600,000 and $800,000, and these are workhorse machines sold into government, academic, research, and commercial accounts. Significantly, Lenovo’s HPC business is growing and profitable.

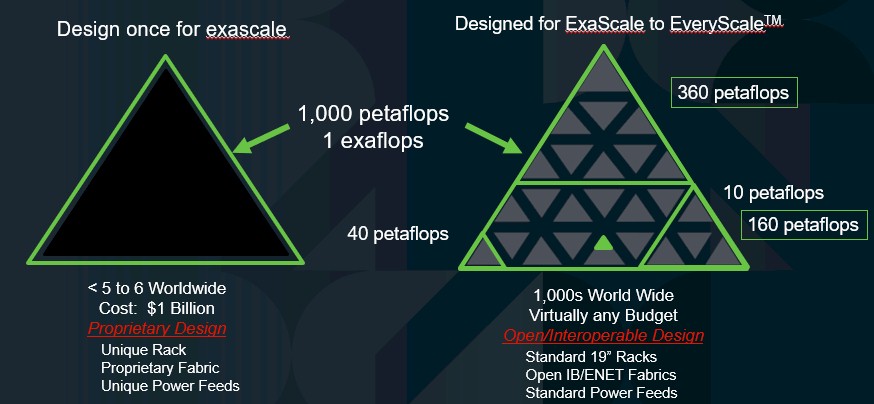

The issue that Lenovo is bringing up with its fractal theory of HPC, as expressed in its “From Exascale to Everyscale” campaign launched this summer, is that most HPC shops cannot afford or even consume some of the exotic systems that are being deployed as the initial exascale systems in the United States, China, Japan, and Europe. Lenovo has nothing against CPUs being equipped with exotic accelerators such as GPUs or FPGAs, and Tease hinted a bit about this fractal theory of HPC when he said last year that building big, hybrid concept machines mashing up CPUs and GPUs to do both traditional HPC simulation and modeling as well as AI machine learning workloads was great, but for any architecture to go truly mainstream in commercial accounts, it has to be used for other workloads, too, such as visualization, virtual desktop infrastructure, accelerated databases, and the like.

Here’s the kernel of the issue, according to Tease: “We were sitting in all of these briefings for exascale systems in the United States, China, and Europe, and we looked at what was being proposed and thought: No regular HPC client is going to be able to install that rack. It probably won’t fit through their door or on their elevator. The racks won’t go on their normal datacenter floor because it’s too heavy. The networking and the power consumption is something that’s totally different than what they have. To us, it looked like the billions of dollars in exascale funding was just going to help the very top tier of the market. In the past, we have always watched leadership technologies come out and then it trickled down to everybody else. But it seems a little different this time to us, so we are making a commit to our customers, big and small, that we are going to design HPC in a way that smaller customers could get the same exact system as an LRZ or Barcelona or CINECA, albeit at a smaller scale.”

The way to bring HPC and AI systems to the masses, says Tease, is to think of a supercomputer architecture as being a fractal, which IBM researcher Benoit Mandelbrot, the discoverer of these wonderful mathemagical constructs, described as “a geometric shape that can be separated into parts, each of which is a reduced-scale version of the whole.”

With fractal HPC, the scale of the system changes, but the basic shape of the system – its core components and how they come together – does not change.

“Our worry is that we are moving away from the tradition that we’ve seen in the past of building commodity clusters in HPC, and making them any size you want,” explains Tease. “Through smart engineering, we want to make commitments to the customer to maintain options that fit into a 19-inch rack with standard height and depth and that run on traditional 200 volt or 240 volt power and do not require three-phase 480 volt or special DC power. We are going to build clusters on open fabrics, whether it is Ethernet or InfiniBand. We don’t want to force any kind of proprietary stuff or unnecessary lock-in with our HPC systems. We are going to do our best to fit into what you already do versus just designing this exotic system and then leaving you to figure out how to get a non-standard supercomputer into your datacenter.”

None of this, by the way, says that Lenovo is not interested in doing large, capability-class machines that are custom engineered if customers want to pay for it – just like Lenovo has volume-produced servers for the mainstream but also does custom server engineering for hyperscalers and cloud builders. For the right price and the right challenge, Lenovo would surely do that, says Tease. But Lenovo is much more interested in advancing the state of the art in the mainstream HPC market.

“The commitment we are making to HPC customers is that we will preserve the capability to exascale even if you don’t do non-standard things,” says Tease.

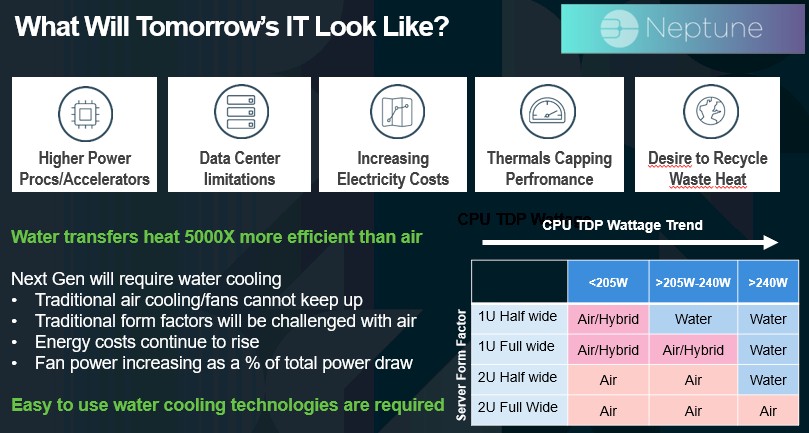

Lenovo’s Neptune water cooling efforts, which we covered in detail here last June, are a case in point. IBM had been using water cooling for its biggest, baddest mainframes for five decades, and there are limits to what air cooling can do, particularly as the thermals of CPUs, accelerators, and memory keep rising and companies want higher and higher densities of components to save datacenter space. Before the System x division was sold off to Lenovo, IBM brought some of these water cooling techniques over from the mainframe to HPC systems with the initial SuperMUC system at LRZ. That technology was then commercialized more broadly and now accounts for over 40,000 nodes shipped to date. (Close to half of them have been installed at LRZ through several generations of the SuperMUC machines.)

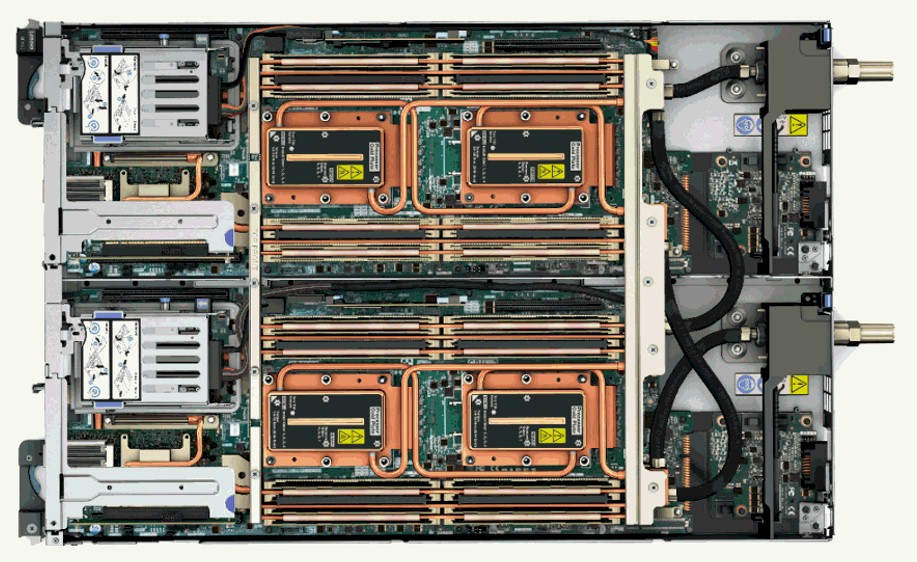

The ThinkSystem SD650 machine is the latest generation of direct water-cooled systems from Lenovo aimed at HPC shops. The system allows for up to two dozen high-bin CPUs, each with up to 240 watts of power draw, to be put into a 6U rack enclosure. The direct water cooling can work with a variety of CPUs, memory DIMMs, storage devices, and network interface cards. Over 90 percent of the heat of the components is pulled out of the system, transferred to the water, and removed from the chassis. The next generations of the SD650 systems will have very dense mixes of CPUs and GPUs as well as all-CPU configurations in the same form factor, allowing up to 2,500 watts (or more) per 1U in the ThinkSystem enclosure. The current SD650 system can do about 40 kilowatts per rack, and do so in a standard 19-inch rack, and the follow-on machine, coming later in 2020, will push it up to just under 90 kilowatts of power and heat dissipation in that standard rack.
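The per-rack figures above can be sanity-checked with some back-of-envelope arithmetic. The Python sketch below is only an illustration: the assumption that a rack holds six 6U enclosures (36U populated in a typical 42U rack) is ours and is not stated by Lenovo, and the function names are hypothetical. The 90 percent water capture figure is treated as a lower bound.

```python
# Back-of-envelope rack power budget using figures quoted in the article.
# ASSUMPTION (not from the article): six 6U enclosures per rack.

CPUS_PER_ENCLOSURE = 24         # "up to two dozen" CPUs per 6U enclosure
WATTS_PER_CPU = 240             # upper bound on per-CPU power draw
ENCLOSURE_HEIGHT_U = 6          # rack units per enclosure
ENCLOSURES_PER_RACK = 6         # assumption, see note above
NEXT_GEN_WATTS_PER_U = 2500     # next-generation figure per 1U
WATER_CAPTURE_FRACTION = 0.90   # "over 90 percent", used as a lower bound


def cpu_power_per_rack_kw(enclosures=ENCLOSURES_PER_RACK):
    """CPU-only power for a rack of current-generation enclosures, in kW."""
    return enclosures * CPUS_PER_ENCLOSURE * WATTS_PER_CPU / 1000


def next_gen_power_per_rack_kw(enclosures=ENCLOSURES_PER_RACK):
    """Total power for a rack at the next-generation per-U figure, in kW."""
    return enclosures * ENCLOSURE_HEIGHT_U * NEXT_GEN_WATTS_PER_U / 1000


def heat_split_kw(rack_kw, water_fraction=WATER_CAPTURE_FRACTION):
    """Split rack heat into (carried off by water, left for air), in kW."""
    to_water = rack_kw * water_fraction
    return to_water, rack_kw - to_water


if __name__ == "__main__":
    print(f"CPU-only power, current rack: {cpu_power_per_rack_kw():.2f} kW")
    print(f"Next-gen rack at 2,500 W/U:   {next_gen_power_per_rack_kw():.1f} kW")
    water, air = heat_split_kw(40.0)
    print(f"40 kW rack: {water:.1f} kW to water, {air:.1f} kW to air")
```

Under these assumptions, the CPUs alone account for roughly 34.6 kilowatts per rack, which is consistent with the quoted figure of about 40 kilowatts once memory, storage, and networking are added. Likewise, 36U at 2,500 watts per 1U works out to 90 kilowatts, in line with the follow-on machine's stated ceiling. At 40 kilowatts per rack, a 90 percent capture rate leaves about 4 kilowatts for the room's air handling to deal with.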

This kind of heat density is done better with water cooling, and it is at the limit of air cooling. When you press up against those limits, you only have three choices: Go higher, go wider, or go water.

Even in cases where direct water cooling is not desirable or possible, Lenovo is adding thermal transfer modules, or TTMs, inspired by some of the technologies that are used by gamers to cool their overclocked systems. This is not getting the heat out of the datacenter, but moving the heat around the inside of the server chassis so traditional air cooling methods in the datacenter can be deployed to remove that heat.

“We’re trying to bring that technology up to a datacenter level, and you will definitely see a lot more of this in our roadmaps as we go forward,” says Tease. “Because we need to be able to move that heat inside the server. You have got to get heat away from the CPU and GPU parts faster than a traditional fan can do, and do it before the parts overheat and cause failure.”

Through a combination of efforts, Lenovo is looking to deliver the capabilities of technologies developed with exascale in mind to a much broader range of customers, though at a smaller, and oftentimes more user-friendly, scale. The future may belong to exascale, but Lenovo’s vision is that it is a future that should be made more inclusive.
