If we could sum up the near-term future of high performance computing in a single phrase, it would be “more of the same, and then some.” Although no “revolution” is on the horizon, the four major trends of the past decade (the expansion of artificial intelligence technology, processor diversification, cloud adoption, and provider consolidation) will continue to play out in 2020, and in some cases will become more pronounced.

Of all the aforementioned trends, the emergence of AI is probably the most significant, not only because it is driving the other three trends, but also because it has become a source of innovation for both HPC suppliers and consumers. That’s not likely to change in the year ahead or even for the foreseeable future.

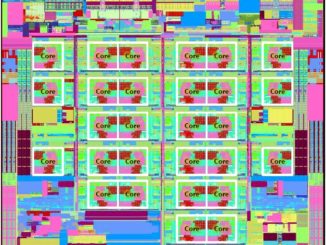

On the supply side, AI has influenced the design of processors destined for high performance servers, as reflected in additional circuitry like the Tensor Cores in Nvidia’s Volta GPUs, the Vector Neural Network Instructions (VNNI) in Intel’s current and future Xeon CPUs, and the support for bfloat16 in future Armv8-A processors. To a lesser extent, AI has also diversified HPC system designs, with deep-learning-optimized supercomputers like ABCI demonstrating the utility of nodes packed with multiple GPUs and high-capacity flash storage.
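bfloat16 keeps float32’s sign bit and 8-bit exponent, and therefore roughly its dynamic range, but stores only 7 explicit mantissa bits (8 bits of precision counting the implicit leading one). That is adequate for much neural network training but far too coarse for traditional 64-bit HPC simulations. The minimal Python sketch below illustrates the format by rounding a value to bfloat16 with round-to-nearest-even. It is an illustrative emulation, not a vendor API, and the function names (`float_to_bf16_bits`, `bf16_bits_to_float`, `to_bf16`) are hypothetical.

```python
import math
import struct


def float_to_bf16_bits(x: float) -> int:
    """Round x to bfloat16 and return the 16-bit pattern (illustrative sketch).

    x is first packed as IEEE 754 float32 (struct raises OverflowError if it
    is outside float32 range); the float32 word is then rounded to its upper
    16 bits using round-to-nearest, ties-to-even.
    """
    if math.isnan(x):
        return 0x7FC0  # canonical quiet NaN; payloads are not preserved
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Adding 0x7FFF plus the lowest retained bit rounds half-way cases to even.
    lsb = (bits >> 16) & 1
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def bf16_bits_to_float(b: int) -> float:
    """Widen a bfloat16 pattern to float32 by appending 16 zero mantissa bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


def to_bf16(x: float) -> float:
    """Return the value x takes after a round trip through bfloat16."""
    return bf16_bits_to_float(float_to_bf16_bits(x))


print(to_bf16(math.pi))    # 3.140625
print(to_bf16(1 + 2**-8))  # 1.0 (a tie, rounded to even)
print(to_bf16(1 + 2**-7))  # 1.0078125
```

Near 1.0 the spacing between adjacent bfloat16 values is 2^-7, so any detail finer than roughly two decimal digits is lost. Keeping the float32 exponent, rather than adding mantissa bits, is what lets bfloat16 cover the same range of magnitudes as float32 at half the storage.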

The introduction of accelerated supercomputers like Summit and Sierra has set the tone for how HPC and AI will commingle in the years ahead. Thanks to one of the most fortuitous accidents in the annals of supercomputing (the V100 GPU that ended up in those two systems was not originally envisioned as a machine learning accelerator), AI became irrevocably linked to supercomputing. Developers wasted no time in taking advantage of this versatility.

It’s no accident that most future exascale and pre-exascale systems, with the notable exceptions of Japan’s Arm-powered “Fugaku” supercomputer and China’s upcoming Sunway machine, will use a hybrid host-accelerator architecture. And in most cases, the accelerator will be the processor of choice for training neural networks.

Ironically, none of the initial exascale systems in the pipeline will use Nvidia parts. Nevertheless, we expect the company to launch its next-generation general-purpose datacenter GPU this year, presumably under the “Ampere” label. If so, the reveal is likely to come at GTC 2020 this spring. Hardware upgrade aside, a bigger question is whether Nvidia will bifurcate its high-end Tesla offering into distinct HPC and AI lines. The GPU maker has had an inference accelerator line for a number of years, the latest offering being the Tesla T4, but has never pursued the same strategy for training.

The motivation to do so now is compelling, given the introduction of competing silicon from Intel/Habana and other AI-specific training chips from startups like Graphcore and Wave Computing. And since the Department of Energy snubbed the Nvidia Tesla GPU for its exascale work, the GPU maker might conclude it’s time to come up with more targeted products.

If that bifurcation does come to pass, we suspect Nvidia will not remove the Tensor Cores from the general-purpose Tesla offering, since, as we suggested, HPC users want both 64-bit floating point horsepower and at least some tensor math capability in the same package. Rather, the company would come up with a new purpose-built GPU optimized for training, jettisoning the 64-bit baggage. The stars appear to be aligning for such a product, if not this year, then next.

In 2020, Intel is expected to ship its Neural Network Processor for Training (NNP-T) and Neural Network Processor for Inference (NNP-I) in volume. But since these chips are not capable of 64-bit floating point computations, they are not likely to find much traction in HPC machinery. Rather, they are primarily geared to cut into Nvidia’s current dominance in the datacenters of hyperscalers and cloud builders. Even here, we don’t expect a dramatic market reversal over the next 12 months; momentum counts for a lot, and Nvidia has a ton of it in the machine learning market.

Intel’s “Cooper Lake” Xeon, and presumably the follow-on “Ice Lake” Xeon, will support both conventional floating point math for traditional HPC and bfloat16 for machine learning number crunching. So, to at least some degree, they will compete with GPU accelerators for dual HPC-AI work. It will be interesting to see to what extent HPC users are willing to forgo GPUs in favor of this kind of ambidextrous capability in CPUs. That will depend on a number of factors, but especially on how much tensor-based application performance can be extracted from these VNNI-enhanced CPUs and how much machine learning work is anticipated at a given HPC facility.
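VNNI’s central trick is fusing several low-precision multiplies and an accumulate into a single instruction. As we understand Intel’s description of the non-saturating `VPDPBUSD` form, each 32-bit lane multiplies four unsigned 8-bit values by four signed 8-bit values, sums the products, and adds the result to a signed 32-bit accumulator, wrapping on overflow. The Python sketch below emulates that arithmetic for a single lane and chains it into a dot product. The helper names `vpdpbusd_lane` and `int8_dot` are hypothetical, and real hardware processes many such lanes (16 in a 512-bit register) in parallel.

```python
from typing import Sequence

INT32_MASK = 0xFFFFFFFF


def _wrap_int32(value: int) -> int:
    """Reduce an arbitrary Python int to a signed 32-bit two's-complement value."""
    value &= INT32_MASK
    return value - (1 << 32) if value & 0x80000000 else value


def vpdpbusd_lane(acc: int, a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Emulate one 32-bit lane of a VPDPBUSD-style u8 x s8 multiply-accumulate."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane consumes exactly four byte pairs")
    if any(not 0 <= a <= 255 for a in a_u8):
        raise ValueError("a_u8 must hold unsigned 8-bit values")
    if any(not -128 <= b <= 127 for b in b_s8):
        raise ValueError("b_s8 must hold signed 8-bit values")
    # The sum of four u8 x s8 products always fits in 32 bits;
    # only adding it to the accumulator can overflow, and that add wraps.
    partial = sum(a * b for a, b in zip(a_u8, b_s8))
    return _wrap_int32(acc + partial)


def int8_dot(a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Dot product of equal-length 8-bit vectors, four byte pairs per step."""
    if len(a_u8) != len(b_s8) or len(a_u8) % 4 != 0:
        raise ValueError("vectors must have equal length divisible by 4")
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc
```

For example, `vpdpbusd_lane(10, [1, 2, 3, 4], [5, -6, 7, -8])` returns 10 + (5 - 12 + 21 - 32) = -8. Packing four multiplies and the accumulate into one step is what lets a CPU raise its 8-bit inference throughput without widening its vector registers.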

On that last criterion, users appear to be increasingly interested in applying machine learning to traditional HPC work, and that is happening across every HPC application domain, from financial services and chemical engineering to cosmology and climatology. That doesn’t mean these techniques will replace conventional physics simulations; rather, they will be used to augment them in ways that significantly reduce the computational load of these applications. As we begin the decade in which Moore’s Law, as it relates to CMOS chip manufacturing, is almost certain to fade into oblivion, devising more efficient methods of computation will become increasingly important: more important than breaking the exascale barrier, adopting the latest processor, or buying an incrementally larger machine.

In that sense, the addition of AI techniques to HPC workloads is probably the most notable paradigm shift in the field since the transition from custom-built vector supercomputers to commodity clusters three decades ago. Certainly, many developers refreshing their HPC codes are looking to inject AI into their workflows. While we are just at the beginning of this transformation, 2020 might be the year when AI and HPC become truly inseparable.
