Ampere Readies 256-Core CPU Beast, Awaits The AI Inference Wave

How many cores is enough for server CPUs? All that we can get, and then some. …

Today is the ribbon-cutting ceremony for the “Venado” supercomputer, which was hinted at back in April 2021 when Nvidia announced its plans for its first datacenter-class Arm server CPU and which was talked about in some detail – but not really enough to suit our taste for speeds and feeds – back in May 2022 by the folks at Los Alamos National Laboratory where Venado is situated. …

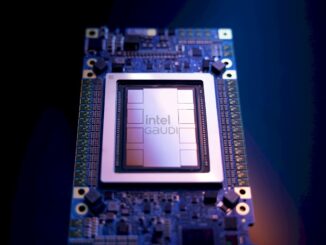

There was a time – and it doesn’t seem like that long ago – that the datacenter chip market was a big-money but relatively simple landscape, with CPUs from Intel and AMD, with Arm looking to muscle its way in, and GPUs mostly from Nvidia, with some from AMD and with Intel looking to muscle its way in. …

We have said it before, and we will say it again right here: If you can make a matrix math engine that runs the PyTorch framework and the Llama large language model, both of which are open source and both of which come out of Meta Platforms and both of which will be widely adopted by enterprises, then you can sell that matrix math engine. …

We have been tracking the financial results for the big players in the datacenter that are public companies for three and a half decades, but last year we started dicing and slicing the numbers for the largest IT suppliers for stuff that goes into datacenters so we can give you a better sense of what is and what is not happening out there. …

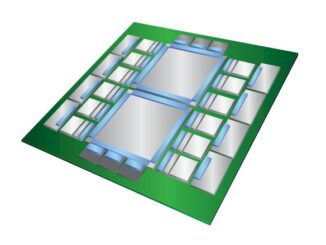

While a lot of people focus on the floating point and integer processing architectures of various kinds of compute engines, we are spending more and more of our time looking at memory hierarchies and interconnect hierarchies. …

If Microsoft has the half of OpenAI that didn’t leave, then Amazon and its Amazon Web Services cloud division need the half of OpenAI that did leave – meaning Anthropic. …

Time is money when it comes to generative AI, as is the case with most technologies. …

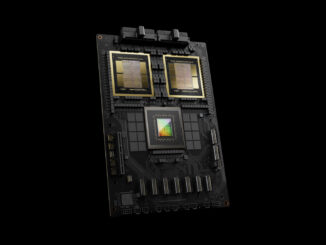

At his company’s GTC 2024 Technical Conference this week, Nvidia co-founder and chief executive officer Jensen Huang unveiled the chip maker’s massive Blackwell GPUs and accompanying NVLink networking systems, promising a future where hyperscale cloud providers, HPC centers, and other organizations of size and means can meet the rapidly increasing compute demands driven by the emergence of generative AI. …

We like datacenter compute engines here at The Next Platform, but as the name implies, what we really like are platforms – how compute, storage, networking, and systems software are brought together to create a platform on which to build applications. …

All Content Copyright The Next Platform