At his company’s GTC 2024 Technical Conference this week, Nvidia co-founder and chief executive officer Jensen Huang unveiled the chip maker’s massive Blackwell GPUs and accompanying NVLink networking systems, promising a future in which hyperscale cloud providers, HPC centers, and other organizations of size and means can meet the rapidly increasing compute demands driven by the emergence of generative AI.

That includes even Nvidia’s own year-old DGX Cloud, officially unveiled at the 2023 iteration of the show, which combines its DGX AI supercomputer hardware with various software pieces – including the expanding AI Enterprise suite of offerings – and can run either on the infrastructure of other major cloud providers or on Nvidia’s own on-premises DGX SuperPod datacenter platform.

Huang alluded to that point during his keynote address on the event’s opening day and reiterated it during a lengthy Q&A session with media and analysts a day later, saying that while Nvidia does have its DGX Cloud, “we are not a cloud company. We are a platform company.”

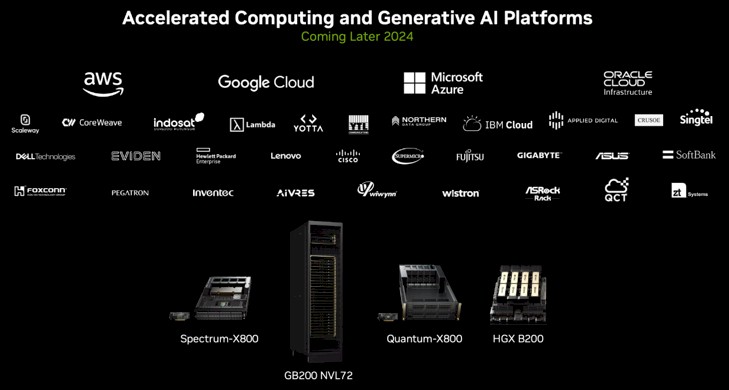

Indeed, when a list of some three dozen tech industry GTC sponsors and supporters popped up on the massive screen behind Huang during the keynote, the four listed at the top were Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle Cloud Infrastructure.

And they came to GTC announcing plans to bring Blackwell into their environments, along with other support for Nvidia and its technologies.

That makes sense. AI workloads require massive amounts of compute power, and the infrastructure needed to run them is well out of the financial range of many organizations that nevertheless need it to compete. The cloud provides the necessary infrastructure and scalability, combined with pay-as-you-go consumption models that can help keep costs within reach, despite the data sovereignty and security challenges that can come with both the cloud and AI.

More than 70 percent of organizations now use managed AI services, self-hosted AI SDKs and tools are in 69 percent of environments, and 42 percent of organizations are self-hosting AI models in the cloud. In the fourth quarter of 2023, spending on cloud infrastructure services jumped $12 billion year-over-year to almost $74 billion, driven in large part by the momentum behind generative AI.

At GTC, the top cloud providers looked to the Blackwell GPUs and NVLink network – and the various permutations that can be realized through combinations of those products with other Nvidia portfolio offerings – as key tools for handling the rapidly rising demand for generative AI compute, with AWS CEO Adam Selipsky saying that “Nvidia’s next-generation Grace-Blackwell processor marks a significant step forward in generative AI and GPU computing.”

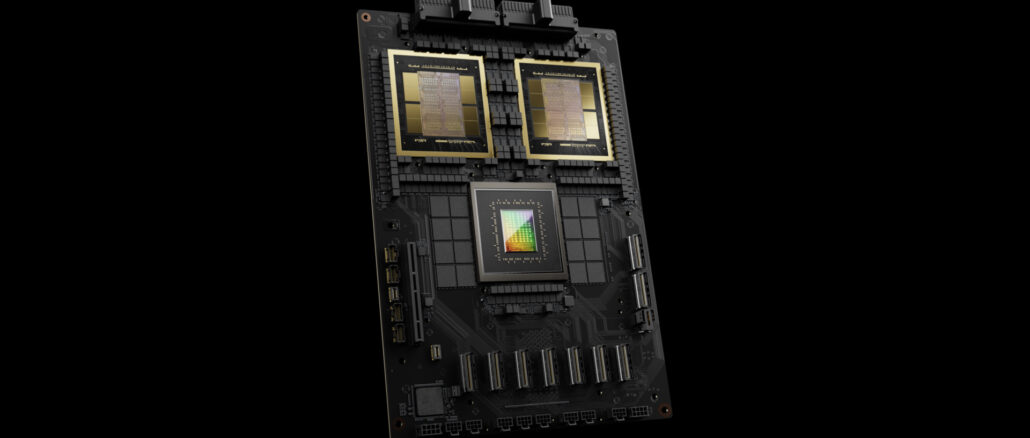

The GB200 Grace-Blackwell Superchip connects two Blackwell GPUs to an Arm-based Grace CPU via the NVLink-C2C interconnect. It also forms the basis of Nvidia’s GB200 NVL72, a liquid-cooled rack system that includes 36 Grace CPUs and 72 Blackwell GPUs, can run trillion-parameter large language models (LLMs) for training jobs that include Mixture of Experts and similarly complex models, and can deliver real-time inferencing.

AWS also is including Blackwell chips in the upcoming AI supercomputer codenamed “Project Ceiba,” a system announced at the cloud provider’s re:Invent show last fall that will be able to process 414 exaflops of AI jobs, will be hosted on AWS, and will be used by Nvidia’s internal researchers and developers for AI work in such areas as digital biology, robotics, and climate prediction.

Beyond Blackwell, AWS said it also is bringing Nvidia’s BioNeMo foundation models to HealthOmics, its service that helps healthcare and life sciences organizations store, search, and analyze genomic, transcriptomic, and other data.

Microsoft also is bringing the Grace-Blackwell Superchip to Azure instances that will be based on GB200 and Nvidia’s Quantum-X800 InfiniBand networking and will target frontier and foundational models for such AI functions as natural language processing, computer vision, and speech recognition. Microsoft has about 53,000 users on its Azure AI infrastructure.

The IT giant also said its Azure NC H100 v5 VM series – based on Nvidia’s H100 NVL platform, which includes one or two H100 Tensor Core GPUs connected via NVLink – is now generally available. The series is aimed at midrange organizations running such jobs as training, inferencing, and HPC simulations. Other joint efforts include integrating DGX Cloud compute with the Microsoft Fabric analytics platform.

Google Cloud is adopting Grace-Blackwell for its cloud environment as well as offering DGX Cloud services on top of that hardware. DGX Cloud also is generally available on Google Cloud A3 VM instances that are powered by H100 Tensor Core GPUs. In addition, Google is bringing Nvidia’s NIM inference microservices to its cloud and to Google Kubernetes Engine. The microservices are designed to make it easier for enterprises to deploy generative AI.

Oracle will bring Grace-Blackwell to its OCI Supercluster and OCI Compute services, and OCI will also take in the Blackwell B200. Like Google, Oracle also is adding Grace-Blackwell to its DGX Cloud on OCI. The entire OCI DGX Cloud cluster will include more than 20,000 Grace-Blackwell accelerators and Nvidia’s CX8 InfiniBand networking, according to Oracle.

Oracle also said the two vendors are enabling governments and enterprises around the world to create “AI factories” that combine Oracle’s cloud and AI infrastructure and generative AI services with Nvidia’s accelerated computing and generative AI infrastructure.

The goal is to help these organizations with their data sovereignty efforts, according to Oracle CEO Safra Catz. The factories can run the cloud services locally, on the organizations’ own premises.

“As AI reshapes business, industry, and policy around the world, countries and organizations need to strengthen their digital sovereignty in order to protect their most valuable data,” Catz said, noting that the combination of the vendors’ technologies and Oracle’s global cloud reach “will ensure societies can take advantage of AI without compromising their security.”

“AMD CEO Adam Selipsky” : AWS not AMD

These fools don’t say a word about their data centers not having the necessary power infrastructure to handle all that AI compute power demand. AI loads aren’t steady state like normal data center loads. It’s fun watching the power infrastructure struggle with the AI transient loads.

Grace is not an Intel-based CPU

No kidding. Fixed.

Interesting! It sounds as though hyperscalers (AWS, MS, Google, and Oracle) are focusing on Grace-Blackwell integrated solutions (ARM-Blackwell) … I guess, in time, we will also hear more about x86-Blackwell, and the advantages and drawbacks of each pairing, possibly in terms of target workload. The NVL72 is so impressive that it seems to really bring the fight way over and into the x86 camp (at this time at least). These are fascinating times for competition …