The datacenter server has been the center of gravity for compute for decades. But in recent years, as relatively heavy compute – meaning more than what is in your PC or smartphone – has been embedded into all kinds of storage and networking devices and has increasingly pushed out to the hyperdistributed computing platform commonly called the edge, that center of gravity has shifted.

And within a matter of years, there could be a lot more server compute embedded in non-server gear, both inside the datacenter and outside its walls, than there is in the server fleets of the world's datacenters. Before long, we may have to revert to telecommunications lingo and start calling any “center of data” a point of presence, or POP.

With the Arm collective taking over a substantial portion of the embedded systems market from the Motorola 68K and its anointed heir, the IBM and Motorola PowerPC, as well as carving out new niches in smartphones and tablets, Intel had to do something to stop the onslaught. In March 2015, at the behest of social networker Facebook, we got the “Broadwell” Xeon D-1500, and in November 2015 the Xeon D-1500 lineup was expanded to blunt the Arm assault. We did the math on Broadwell Xeon E5 versus Broadwell Xeon D platforms at Facebook to show how this was a density and performance per watt play, and it was successful within the confines of the hyperscale datacenter.

By the “Skylake” Xeon D-2100 launch in February 2018, this low-end server chip was fast becoming the motor of choice for scale-out object storage and certain storage arrays, as well as for X86-based network function virtualization workloads, and it had begun pushing out to the edge. There was also a “Hewitt Lake” Xeon D-1600 launch in April 2019 (which we somehow missed, partly because Intel did a soft launch), another rev of the chip with Broadwell cores that was tweaked for lower power and specifically for edge and IoT use cases. We do not think it was a very important chip, because even Intel is comparing the new “Ice Lake” Xeon D-2700 and D-1700 processors to the Skylake Xeon D-2100 series from 2018.

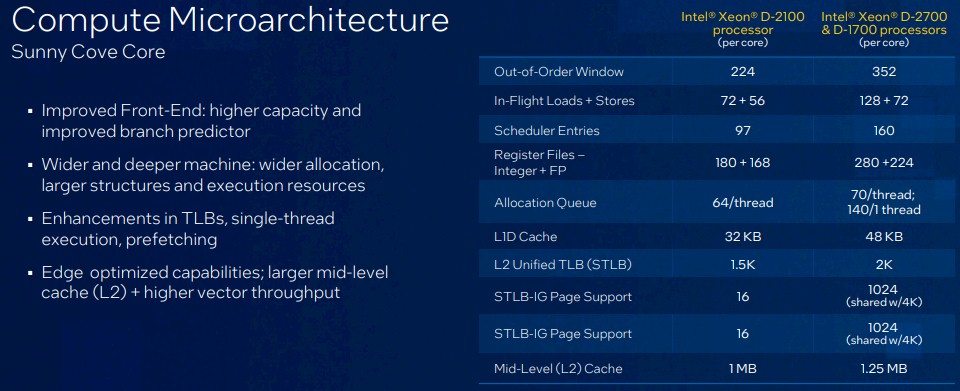

With the Ice Lake Xeon D series, it all starts with the “Sunny Cove” core that is employed in the full-blown Ice Lake Xeon SP processors announced last March; we drilled down into the Sunny Cove core and the Ice Lake architecture shortly after that launch. Everything about the Sunny Cove core is wider, deeper, and better, as you can see below:

The edge, as exemplified best at the moment by OpenRAN 5G base stations, which have a mix of CPUs and FPGAs, is getting more compute capacity as data processing, machine learning, and bulk storage move out to the edge, because there is simply no way to backhaul all of this data to a regional datacenter and process it in real time with anything close to acceptable response times. That is why Intel was showing off the Ice Lake Xeon Ds last year as a technology preview.

What is curious is why the Ice Lake Xeon Ds didn’t come out first, given that the first time we saw a Broadwell core in a server chip was also with the Xeon D, which came out nearly a year ahead of the Broadwell Xeon E5 v4 chips for two-socket servers. This probably had something to do with maximizing the revenue stream from the limited 10 nanometer capacity that Intel could bring to bear across the Xeon lineup. The Xeon D drives a certain amount of volume, but less revenue and profit than the core Xeon SP does. Meanwhile, the Arm server collective has come down to Amazon Web Services and Ampere Computing, both building beefy CPUs far more powerful than any Xeon D, so there is no competitive threat to the Xeon D there. AMD is focused on the high end of serving with the Epyc lineup, too. So Intel could pace itself with the Ice Lake Xeon Ds, and for all we know, key hyperscalers and equipment makers have been getting them since this time last year.

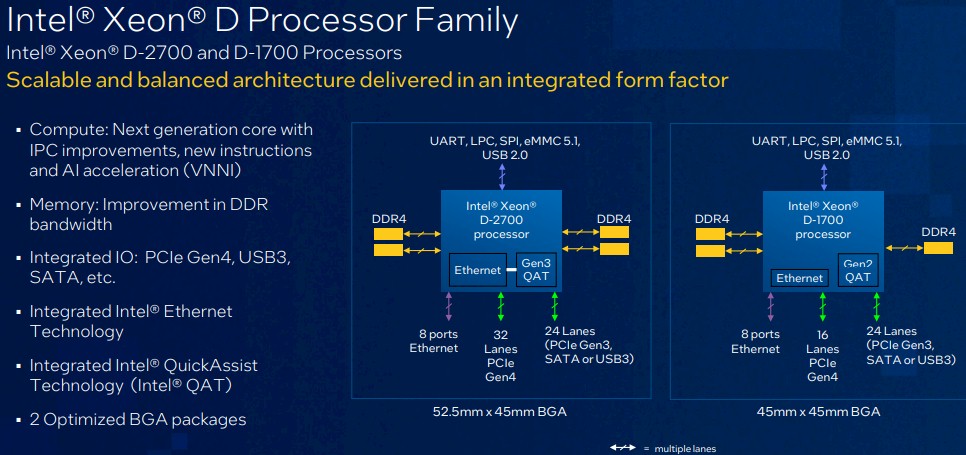

Intel rarely gives out die shots of its Xeon chips these days, so we don’t know exactly how many physical cores are on the Ice Lake Xeon D chips. What we do know is that the Ice Lake Xeon D line spans from 2 to 20 cores, with one package topping out at 10 active cores and another at 20 cores. Both come in ball grid array (BGA) packages that mount directly onto system boards rather than plugging into the more expensive sockets that Xeon SPs use. These BGA parts go as low as 25 watts of power consumption and can handle temperatures down to -40 degrees Celsius. Intel has not released the Ice Lake Xeon D SKU stack as we go to press – but says it will later today – so we can’t analyze it yet. We will talk architecture now and comparative price/performance across the Xeon D generations later.

The past three generations of Xeon D chips have employed a multichip package, with the processor complex on one chip and the I/O southbridge, including peripheral ports and a network controller, on a separate Platform Controller Hub, or PCH. We strongly suspect that Intel is using a chiplet approach this time as well, but it is not clear how many unique chips are inside the Ice Lake Xeon D processor complex.

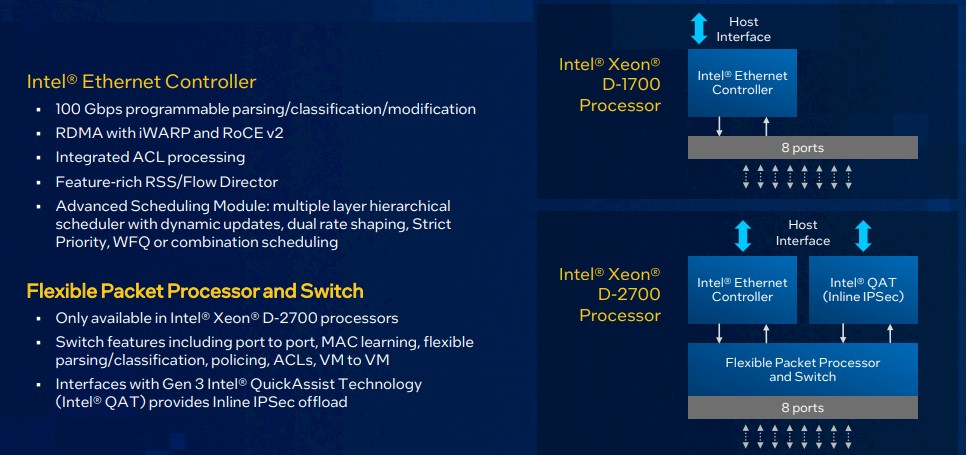

There are some important things to note about the Xeon D-1700 versus the Xeon D-2700. The Xeon D-2700 has everything turned on: 20 Sunny Cove cores, four DDR4 memory controllers, 32 PCI-Express 4.0 lanes for peripheral I/O, an Ethernet controller with eight ports that add up to 100 Gb/sec of throughput, and a QuickAssist Technology (QAT) 3.0 encryption engine that sits beside it. Both the Ethernet controller and the QAT engine are front-ended by a baby switch and packet processor that exposes eight ports to the outside world. Because this is a hard-coded IP block, companies don’t have to burn cores running Open vSwitch software to provide switching for the hypervisors and virtual machines on those cores. Like this:

With the Ice Lake Xeon D-1700, the core count drops to 10, one of the memory controllers is gone, and QAT drops from Gen3 to Gen2. The PCI-Express 4.0 lanes coming off the PCH drop to 16 from 32. Both chips have 24 lanes of legacy I/O, including a mix of PCI-Express 3.0, SATA, and USB3 lanes.
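To keep the package differences straight, here is a small Python sketch that records the maximum configurations described above as plain data and reports which fields differ. The `XeonDPackage` class and the `spec_differences` helper are hypothetical, illustrative names, not anything Intel publishes, and the values are only the ones stated in this article, so details such as the D-1700's Ethernet configuration are deliberately left out.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class XeonDPackage:
    """Package-level maximums for an Ice Lake Xeon D, as stated in this article."""
    name: str
    max_cores: int
    memory_controllers: int
    pcie4_lanes: int
    qat_generation: int
    legacy_io_lanes: int


# Values taken from the text: the D-1700 halves cores and PCIe 4.0 lanes,
# loses one of four memory controllers, and steps QAT down from Gen3 to Gen2.
D2700 = XeonDPackage("Xeon D-2700", max_cores=20, memory_controllers=4,
                     pcie4_lanes=32, qat_generation=3, legacy_io_lanes=24)
D1700 = XeonDPackage("Xeon D-1700", max_cores=10, memory_controllers=3,
                     pcie4_lanes=16, qat_generation=2, legacy_io_lanes=24)


def spec_differences(a: XeonDPackage, b: XeonDPackage) -> dict:
    """Return {field_name: (a_value, b_value)} for every spec field that differs."""
    diffs = {}
    for f in fields(a):
        if f.name == "name":
            continue  # the label is not a spec
        va, vb = getattr(a, f.name), getattr(b, f.name)
        if va != vb:
            diffs[f.name] = (va, vb)
    return diffs


if __name__ == "__main__":
    for field_name, (big, small) in spec_differences(D2700, D1700).items():
        print(f"{field_name}: {big} -> {small}")
```

Legacy I/O does not show up in the output because both packages carry the same 24 lanes.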

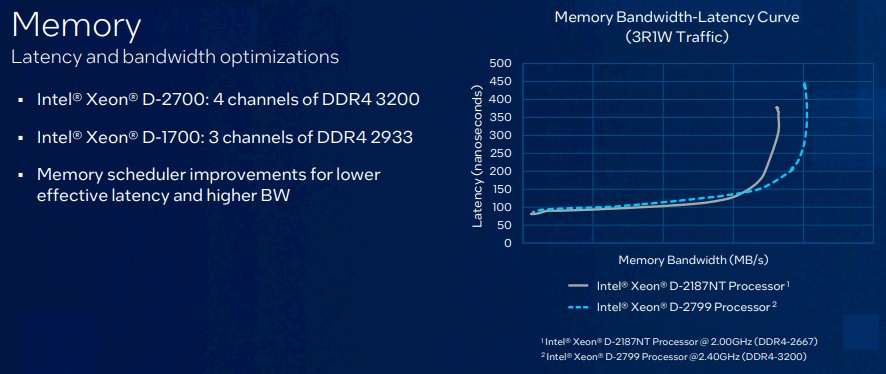

The memory bandwidth on the Xeon D-2700 is a little bit better given that the memory runs at 3.2 GHz compared to the 2.67 GHz of the Xeon D-2100, but don’t get too excited:

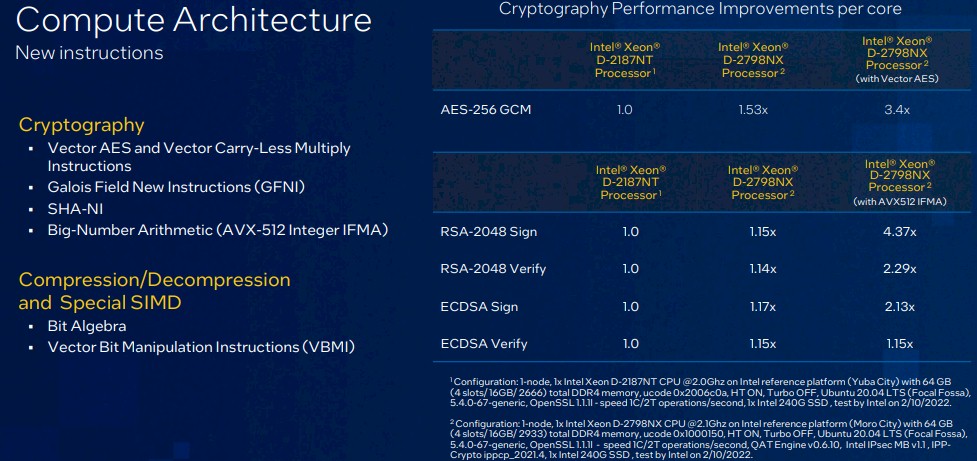
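As a rough check on why the gain is modest, peak DRAM bandwidth is just channels times transfer rate times bytes per transfer. The sketch below assumes four channels on both the Xeon D-2700 and the Xeon D-2100, treats the quoted 3.2 GHz and 2.67 GHz as DDR4-3200 and DDR4-2666 data rates in megatransfers per second, and assumes an 8-byte (64-bit) data path per channel with ECC bits ignored. These are theoretical peaks, not measurements, and `peak_bandwidth_gbs` is a hypothetical helper.

```python
# Assumed: a DDR4 channel moves 8 bytes (64 data bits) per transfer; ECC bits ignored.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, megatransfers_per_sec: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_sec = channels * megatransfers_per_sec * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_sec / 1e9


if __name__ == "__main__":
    d2700 = peak_bandwidth_gbs(4, 3200)  # assumed: four channels of DDR4-3200
    d2100 = peak_bandwidth_gbs(4, 2666)  # assumed: four channels of DDR4-2666
    gain_pct = 100 * (d2700 / d2100 - 1)
    print(f"D-2700 {d2700:.1f} GB/s vs D-2100 {d2100:.1f} GB/s ({gain_pct:.0f}% more)")
```

Under those assumptions it works out to roughly 102.4 GB/sec versus 85.3 GB/sec, about a 20 percent lift in peak bandwidth, which fits “a little bit better” rather than anything dramatic.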

Those deploying Xeon D processors are running applications that are more compute constrained than bandwidth constrained, and they also need to encrypt data in flight and at rest. To that end, the Sunny Cove core has lots of new cryptographic instructions as well as data compression instructions, which will be important for storage appliances as well as for 5G base stations, and of course there are AVX-512 vector math units with mixed precision integer support to do all kinds of things, including running AI inference out at the edge.
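The “mixed precision integer support” here most likely refers to AVX-512 VNNI (which Intel markets as DL Boost), the instructions that speed up 8-bit inference. As an illustration only, the pure-Python model below mimics what one 32-bit lane of the `VPDPBUSD` instruction does: multiply four unsigned 8-bit values by four signed 8-bit values, add up the products, and accumulate into a signed 32-bit integer with wraparound. Real code would use the `_mm512_dpbusd_epi32` intrinsic or a library that calls it; `vpdpbusd_lane` and `int8_dot` are hypothetical names for this sketch.

```python
def _wrap_int32(x: int) -> int:
    """Wrap an integer into the signed 32-bit two's-complement range."""
    return (x + 2**31) % 2**32 - 2**31


def vpdpbusd_lane(acc: int, a_u8: list[int], b_s8: list[int]) -> int:
    """Model one 32-bit lane of VPDPBUSD (the non-saturating form).

    Four u8 x s8 products are summed and added to a signed 32-bit
    accumulator; overflow wraps rather than saturating.
    """
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane takes exactly four 8-bit pairs")
    if not all(0 <= v <= 255 for v in a_u8):
        raise ValueError("a_u8 values must be unsigned 8-bit")
    if not all(-128 <= v <= 127 for v in b_s8):
        raise ValueError("b_s8 values must be signed 8-bit")
    dot = sum(u * s for u, s in zip(a_u8, b_s8))
    return _wrap_int32(acc + dot)


def int8_dot(a_u8: list[int], b_s8: list[int]) -> int:
    """Dot product of two int8 vectors, consumed four elements per VNNI step."""
    if len(a_u8) != len(b_s8) or len(a_u8) % 4:
        raise ValueError("vectors must be equal length and a multiple of 4")
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc


if __name__ == "__main__":
    print(vpdpbusd_lane(0, [1, 2, 3, 4], [1, 1, 1, 1]))  # 10
```

The hardware does this for 16 lanes at once across a 512-bit register, folding what previously took a multi-instruction sequence into a single instruction, which is where the inference speedup comes from.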

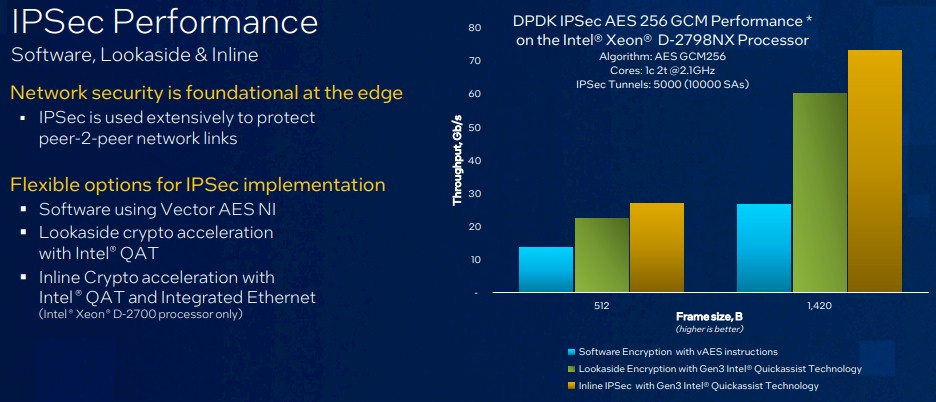

One of the most important accelerations with the Ice Lake Xeon D line, of course, is encryption, and Intel put together this chart to show how various means of doing AES-256 encryption stack up against each other, including running it on the Sunny Cove cores, running it on the QAT unit, and running it inline on the QAT unit behind the virtual switch:

There are many different ways to skin this AES-256 cat, but clearly the inline processing of IPsec encryption is superior and gives a significant boost over plain vanilla QAT acceleration. And both blow away running it on the Sunny Cove cores with their AES-NI instructions. So much better, in fact, that there is hardly any point in having the AES-NI instructions in there except for legacy application support.
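If you want to confirm which of these instruction-set paths a Linux host's cores actually expose before weighing them against QAT, the `flags` line in `/proc/cpuinfo` is the usual place to look. The sketch below parses that text and reports a handful of crypto and inference related flags using the Linux kernel's flag names (`aes`, `vaes`, `vpclmulqdq`, `sha_ni`, `gfni`, `avx512_vnni`). The helper names are hypothetical, and the check says nothing about whether a QAT device is present, which has to be verified separately.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for the crypto and inference extensions of interest.
FLAGS_OF_INTEREST = ("aes", "vaes", "vpclmulqdq", "sha_ni", "gfni", "avx512_vnni")


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no x86 'flags' line (non-x86 host or empty input)


def flag_report(cpuinfo_text: str) -> dict[str, bool]:
    """Map each flag of interest to whether the cores advertise it."""
    flags = cpu_flags(cpuinfo_text)
    return {name: name in flags for name in FLAGS_OF_INTEREST}


if __name__ == "__main__":
    try:
        text = Path("/proc/cpuinfo").read_text()
    except OSError:
        text = ""  # not on Linux, or /proc is unavailable
    for name, present in flag_report(text).items():
        print(f"{name:<12} {'yes' if present else 'no'}")
```

Parsing the text rather than reading the file inside the helpers keeps the logic testable on any machine.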

Up next, we will drill down into the Ice Lake Xeon D SKU stack and see how these chips compare to prior Xeon D generations.
