Whatever is going on with its competitive positioning against revitalized X86 server chip rival AMD, Intel clearly felt that it could not wait for the launch of its 14 nanometer “Cooper Lake” and 10 nanometer “Ice Lake” Xeon SP processors to address it. And so today the chip maker is taking the wraps off a dozen and a half new variants of the “Cascade Lake” Xeon SPs to better chase the opportunities out there.

The updated Cascade Lake processors, based on deep bin sorting and refinements on the 14 nanometer processes that are about as mature as any process Intel has ever had in its factories, are meant to better defend against the “Rome” Epyc 7002s that are beginning to have an impact on Intel’s psyche and that of the server base, even if no one thinks Intel’s financials have been hurt very much by AMD. Intel has shipped over 30 million of the first generation “Skylake” and second generation Cascade Lake Xeon SP processors, and with spending up again at the hyperscalers and cloud builders, Intel’s revenues have risen somewhat on an annual basis despite the earlier slowdown in spending, even if profits at Data Center Group took a hit for the full year.

Competition will do that. Intel either has to sacrifice volumes and let AMD take more business or cut prices and try to hold onto its volumes. When you sell the vast majority of the processors in the worldwide market, there is no way to go but down, although it is possible to maintain and ride revenue waves up and down as they come and go. Intel definitely had a rough Q1 and Q2 in 2019, but the market had cooled quite a bit then, too.

Intel is trying to be clever and use its ever-broadening SKU stack and its volume manufacturing on the well-established 14 nanometer process to cover more specific use cases for server buyers, and therefore blunt at least some of the attack from AMD. In January, the company quietly reworked the premiums it charges on top of its list prices for memory capacity on its Cascade Lake processors. Before January, you had to pay $3,003 extra per socket to have between 1 TB and 2 TB of memory per socket, and $7,897 extra per socket for large memory access between 2 TB and 4.5 TB per socket, which was an impediment to the adoption of fat DRAM and Optane 3D XPoint memory anyway. Intel has now abolished the first premium entirely: the base Cascade Lake processors, including the refreshed ones announced today, top out at 2 TB of main memory per socket at no extra charge. Going from 2 TB to 4.5 TB per socket now costs $3,003, down from $7,897.
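The pricing change is easier to see side by side. The sketch below encodes the two per-socket premium schedules as described in the paragraph above (list prices only; what OEMs and ODMs actually negotiate will differ) and computes what a two-socket buyer saves. The names `BEFORE_JAN_2020`, `AFTER_JAN_2020`, `premium`, and `savings` are hypothetical helpers for illustration.

```python
# Per-socket memory capacity premiums on Cascade Lake Xeon SPs, as described above.
# Keys are (low_TB, high_TB] capacity bands; values are extra list-price dollars per socket.
BEFORE_JAN_2020 = {(1.0, 2.0): 3_003, (2.0, 4.5): 7_897}
AFTER_JAN_2020 = {(1.0, 2.0): 0, (2.0, 4.5): 3_003}


def premium(schedule, capacity_tb):
    """Return the per-socket premium for a capacity in TB under the given schedule."""
    for (low, high), fee in schedule.items():
        if low < capacity_tb <= high:
            return fee
    return 0  # at or below 1 TB no premium applies (above 4.5 TB is not supported at all)


def savings(capacity_tb, sockets=2):
    """Dollars saved across all sockets by the January pricing change."""
    per_socket = premium(BEFORE_JAN_2020, capacity_tb) - premium(AFTER_JAN_2020, capacity_tb)
    return per_socket * sockets


print(savings(4.5))  # 9788: two sockets at 4.5 TB each
print(savings(2.0))  # 6006: two sockets at 2 TB each
```

A two-socket machine fully loaded to 4.5 TB per socket saves $9,788 at list price, while one at 2 TB per socket saves the full $6,006 it used to pay.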

It seems untenable that Intel could charge any premium at all for this, not with AMD offering full memory addressability on all SKUs, and Arm chips from Ampere and Marvell and Power9 chips from IBM also not putting governors on main memory with usage fees on them to remove those governors.

You can’t blame Intel for trying to make the money while it can, because there is no question that competition is going to start to hurt if that competition continues to execute – and execute well. We have to believe that Intel has been slashing prices like crazy on Xeon SP processors and these memory features, plus tossing in aggressive pricing on Optane memory and heaven only knows what else to help ODMs and OEMs close deals and keep customers in the Xeon fold. Luckily, the market is expanding fast enough to cover all of this, unless you look at Intel on an annualized basis. For the full 2019 year, Data Center Group’s revenues were only up 2.1 percent to $23.48 billion, and its operating profits actually fell by 10.9 percent to $10.23 billion.
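The gap between top line and bottom line shows up more clearly as an operating margin. This sketch backs out the 2018 base implied by the percentage changes quoted above; because the published figures are rounded, the back-calculated values are approximations, not reported numbers.

```python
# Data Center Group full-year 2019 figures cited above, in billions of dollars.
REVENUE_2019 = 23.48
OPERATING_PROFIT_2019 = 10.23
REVENUE_GROWTH = 0.021            # revenue up 2.1 percent
OPERATING_PROFIT_CHANGE = -0.109  # operating profit down 10.9 percent

# Back out the 2018 base implied by the year-on-year percentage changes.
revenue_2018 = REVENUE_2019 / (1 + REVENUE_GROWTH)
operating_profit_2018 = OPERATING_PROFIT_2019 / (1 + OPERATING_PROFIT_CHANGE)

margin_2018 = operating_profit_2018 / revenue_2018
margin_2019 = OPERATING_PROFIT_2019 / REVENUE_2019

print(f"2018 revenue ~ ${revenue_2018:.2f}B, operating profit ~ ${operating_profit_2018:.2f}B")
print(f"operating margin: {margin_2018:.1%} (2018) -> {margin_2019:.1%} (2019)")
```

That works out to roughly $23.00 billion in revenue and $11.48 billion in operating profit for 2018, with the operating margin sliding from about 49.9 percent to about 43.6 percent.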

The bottom line is getting affected more than the top line. No question about that. And the refresh of the Cascade Lake Xeon SPs announced today aims to keep that top line as stable, and growing as much, as possible until Cooper Lake, which was expected late last year, and Ice Lake, which has been a long time coming, make their debut. Neither is expected to be a barn burner, and we expect Intel to compete on price as much as on features – and not to cut prices further on the Xeons (at least not officially) until it has to, and then the cuts will come with generational changes that can mask them because the SKUs do not map directly between generations.

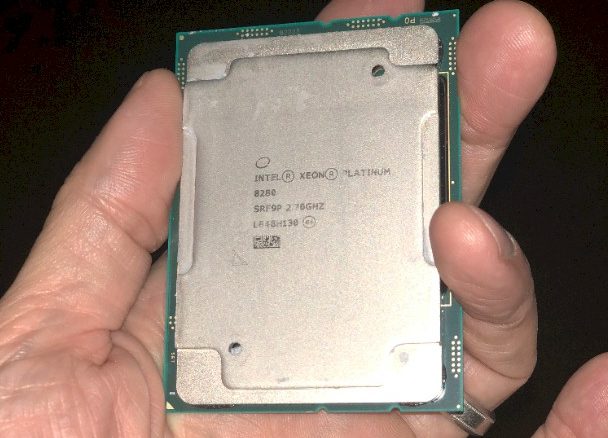

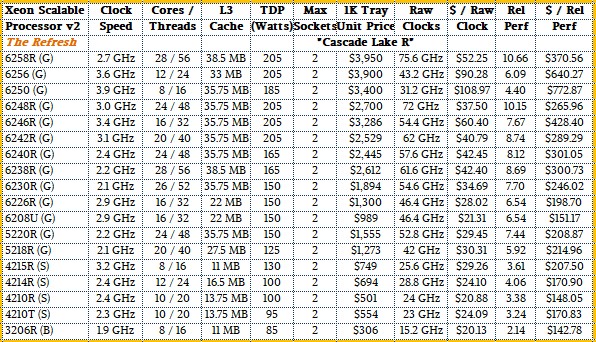

There are eighteen new Cascade Lake Xeons in this refresh, as Intel is calling it. All are aimed at machines with one or two sockets, so they are a bit different in that they cannot be used in four-socket servers like many of the other Gold, Silver, and Bronze class machines in the original Cascade Lake SP lineup announced in April 2019. There is one Bronze variant of the Cascade Lake refresh, four Silver variants, and thirteen Gold variants. The new R SKUs come in Gold and Silver flavors, and the R is short for “Refresh” of course. These R parts include more cores, more L3 cache, or higher frequencies versus the prior Cascade Lake processors with the same (non-R) part number. The T SKU is a refreshed part optimized for extended reliability (the T is short for Telco or Thermally Challenged), and it is aimed at communications equipment, industrial, or IoT uses, where processors tend to run hotter and for longer periods of time than the regular SKUs. The U SKU is a single socket (uniprocessor) part only. And, just to keep us on our toes, two of the new Cascade Lake SKUs do not have an R, T, or U suffix, but they do have higher clock frequencies. (Perhaps an F designation was in order?)
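The suffix scheme is simple enough to capture in a small lookup table. The sketch below is a hypothetical decoder built only from the suffix meanings given in the paragraph above plus the standard Bronze 3xxx, Silver 4xxx, and Gold 5xxx/6xxx numbering; it is not an Intel tool, and the model strings in the usage lines are illustrative.

```python
import re

# Suffix meanings as described in this article for the Cascade Lake refresh.
SUFFIXES = {
    "R": "Refresh: more cores, L3 cache, or clock speed than the same non-R part number",
    "T": "extended reliability for telco, industrial, and IoT gear",
    "U": "single-socket (uniprocessor) only",
    "": "no suffix",
}

# The leading digit of the four-digit model number maps to the brand tier.
TIERS = {"3": "Bronze", "4": "Silver", "5": "Gold", "6": "Gold"}


def decode(model):
    """Split a model string such as '6256' into (tier, digits, suffix meaning)."""
    match = re.fullmatch(r"(\d{4})([A-Z]?)", model.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized model string: {model!r}")
    digits, suffix = match.groups()
    tier = TIERS.get(digits[0], "unknown")
    return tier, digits, SUFFIXES.get(suffix, "suffix not covered here")


print(decode("6256"))   # one of the two higher-clocked parts with no suffix
print(decode("4210R"))  # a Silver R part
```

Note that the decoder cannot tell the two higher-clocked, suffix-free refresh parts apart from ordinary non-R parts, which is exactly the naming gap the paragraph complains about.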

With this refreshed Cascade Lake lineup, Intel is basically reshuffling the SKUs, adding more cores and slightly higher base and Turbo clock frequencies so that the updated Gold variants offer decent price/performance and performance gains over SKUs in the same relative position in the original Cascade Lake lineup.

According to Intel, the refreshed Gold variants of the Cascade Lake Xeon SP chips deliver an average of 1.36X better performance across a range of workloads and a 1.42X better bang for the buck compared to similar SKUs in the Skylake Xeon SP Gold lineup. While that is all very well and good, that doesn’t really help you figure out if the new Cascade Lake chips are better than the old ones, or how they might stack up with the Rome Epycs.
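Intel's two figures can be combined to see what they imply about price. Because bang for the buck is performance divided by price, the price ratio is the performance ratio divided by the price/performance ratio. This is only a rough reading, assuming both averages describe the same set of SKU pairs and workloads, which Intel does not spell out.

```python
PERF_RATIO = 1.36              # refreshed Gold vs. comparable Skylake Gold, per Intel
PERF_PER_DOLLAR_RATIO = 1.42   # same comparison, price/performance

# perf_per_dollar = perf / price, so price_ratio = perf_ratio / perf_per_dollar_ratio
price_ratio = PERF_RATIO / PERF_PER_DOLLAR_RATIO
print(f"implied price ratio ~ {price_ratio:.3f} ({1 - price_ratio:.1%} lower price)")
```

Read this way, the refreshed Gold parts carry list prices roughly 4 percent below their Skylake counterparts on average, so most of the gain comes from performance rather than price.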

We can help a bit with the first comparison. The table below shows the basic feeds and speeds of the new Cascade Lake processors:

As we have done in the past, we have calculated raw aggregate clock speeds across all cores as a rough gauge of performance, and then we have reckoned this relative performance against the midrange “Nehalem” Xeon E5540 processor from March 2009, which had four cores and eight threads running at 2.53 GHz, using IPC figures given out by Intel for each generational improvement. There have been no IPC changes in this Cascade Lake refresh. Adjusted for inflation, the Nehalem E5540 yielded a cost of $878 per unit of performance in 2019 dollars. As you can see, the new and improved Cascade Lake processors yield anywhere from $143 to $773 per unit of relative performance.
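The metric described above reduces to a couple of lines of arithmetic. In this minimal sketch, `ipc_vs_nehalem` stands for the cumulative IPC gain over Nehalem; Intel's per-generation IPC figures are not reproduced here, so that value is an input the caller must supply, and the function names are hypothetical.

```python
# Baseline: "Nehalem" Xeon E5540 (March 2009), 4 cores at 2.53 GHz.
BASELINE_CORES = 4
BASELINE_GHZ = 2.53
BASELINE_COST_2019_DOLLARS = 878.0  # inflation-adjusted cost per unit of performance


def relative_performance(cores, ghz, ipc_vs_nehalem):
    """Aggregate clocks (cores x GHz) scaled by cumulative IPC gain, relative to the E5540."""
    return (cores * ghz * ipc_vs_nehalem) / (BASELINE_CORES * BASELINE_GHZ)


def cost_per_unit(price, rel_perf):
    """Dollars per unit of relative performance; lower is better."""
    return price / rel_perf


# Sanity check: the baseline chip scores exactly 1.0 against itself.
print(relative_performance(BASELINE_CORES, BASELINE_GHZ, 1.0))
print(cost_per_unit(BASELINE_COST_2019_DOLLARS, 1.0))
```

The metric deliberately ignores memory bandwidth, cache size, and turbo behavior, which is why it serves as a rough gauge rather than a benchmark.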

To drill down a bit, those low core count and higher clock speed parts – the Xeon SP-6256 Gold and SP-6250 Gold – have 6.1X and 4.4X the performance of that Nehalem chip, but at the $3,900 and $3,400 that Intel is charging for them, their cost per unit of performance is only modestly lower than the Nehalem baseline and far above the $143 low end of the refreshed lineup. If you want high clocks, a big L3 cache, and a relatively small core count (12 or 8 cores), it still costs big bucks. And there are many uses (such as high frequency trading and certain kinds of HPC and AI) where faster cores matter more than lots of cores. These chips can turbo up to 4.5 GHz with only one core activated, and that is also useful for certain applications.
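Plugging the two high-clock parts into the same metric shows where they land. This sketch uses only the list prices and relative performance figures quoted in the paragraph above, and it compares them against the $878 Nehalem baseline.

```python
# List prices and relative performance (vs. the Nehalem E5540) from the text above.
parts = {
    "Xeon SP-6256 Gold": (3_900, 6.1),
    "Xeon SP-6250 Gold": (3_400, 4.4),
}
NEHALEM_COST_PER_UNIT = 878  # E5540 baseline, 2019 dollars

costs = {}
for name, (price, rel_perf) in parts.items():
    costs[name] = price / rel_perf                        # dollars per unit of performance
    improvement = NEHALEM_COST_PER_UNIT / costs[name]     # >1 means better bang for the buck
    print(f"{name}: ${costs[name]:,.0f} per unit, {improvement:.2f}x the baseline's bang for the buck")
```

The 6256 comes out at about $639 per unit and the 6250 at about $773, which is the top of the range for the refreshed lineup: only about 1.14X better bang for the buck than a chip from 2009.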

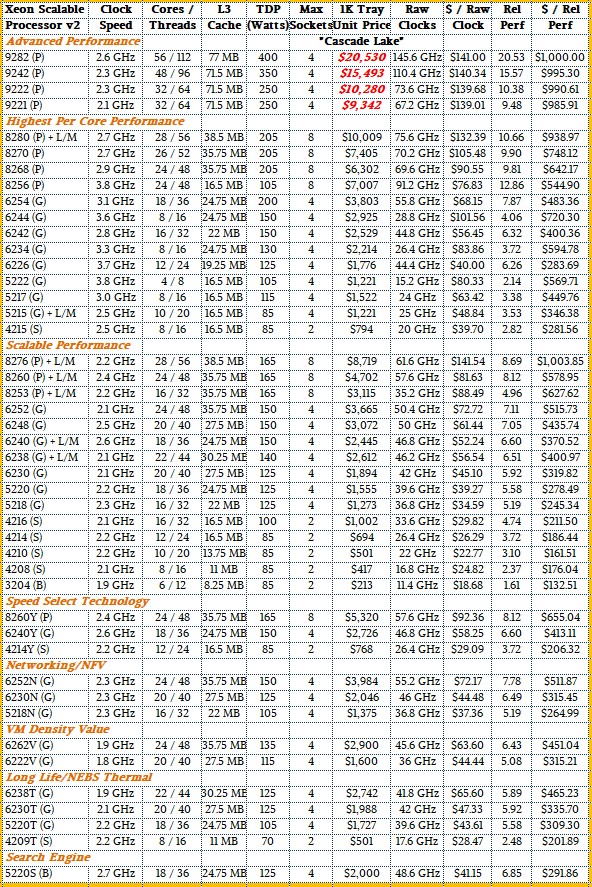

Here is the original Cascade Lake table so you can more easily make comparisons with last year’s lineup:

For some of the SKUs in the belly of the Xeon 6200 Gold series, the incremental cores, clock cycles, and L3 cache are tossed in for free or close to it. For others, the extra cores cost extra money, and the prices rise almost as fast as the feature creep. There is no simple rule of thumb that we can discern from our initial review of the two sets of SKUs. We presume that Intel believes this set of Cascade Lake processors better reflects what it can make and also what it can sell in the current market.

Intel is not providing much in the way of guidance as to how these different new SKUs are meant to be used, the way it did with the original Cascade Lake chips and their Skylake predecessors. That guidance would have been helpful to OEMs, ODMs, and customers alike. There is no reason any of the 6200 Series Gold chips should be restricted to just two sockets – all of the other ones can go to four sockets. It is also not clear if these new Cascade Lake chips are limited to 1 TB or 2 TB per socket, or if selected SKUs can have their memory extended to 4.5 TB per socket with add-ons. We presume they all top out at 1.5 TB with one DIMM per channel, and 2 TB with a mix of one or two DIMMs per channel, and that customers have to pay extra for 4.5 TB large memory access when it is available. This was obviously not a feature that was turned on with all Xeon SP processors, which was no doubt annoying to many customers, particularly those buying Optane memory to extend their main memory. Intel seems to be at cross purposes here, and would do well to just stop nickel and diming – well, thousand dollaring and many tens of thousand dollaring – customers to death. Memory access is a right, not a privilege, at least on other servers, and Intel would be wise to stop making this so complex right about now. It may have helped fill Intel’s pockets in the past two and a half years, but this complexity and creeping pricey featurism is going to backfire. Can you say IBM mainframe?
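The capacity tiers follow from how DIMMs populate a socket. This sketch assumes the Cascade Lake SP layout of six memory channels per socket with up to two DIMMs per channel, and counts 1 TB as 1,024 GB; the specific DIMM mixes are hypothetical examples, and real population rules from server makers may not allow every mix.

```python
# Cascade Lake Xeon SP: six DDR4 memory channels per socket, up to two DIMMs per channel.
CHANNELS_PER_SOCKET = 6
MAX_DIMMS_PER_CHANNEL = 2


def socket_capacity_gb(dimm_sizes_gb):
    """Sum a per-socket DIMM population, given as a list of module sizes in GB."""
    if len(dimm_sizes_gb) > CHANNELS_PER_SOCKET * MAX_DIMMS_PER_CHANNEL:
        raise ValueError("more DIMMs than slots on one socket")
    return sum(dimm_sizes_gb)


one_dpc_dram = [256] * 6                  # one 256 GB DIMM per channel
mixed_dram = [256] * 6 + [128] * 4        # hypothetical mix of one and two DIMMs per channel
dram_plus_optane = [256] * 6 + [512] * 6  # DRAM plus 512 GB Optane modules

for label, config in [("1 DPC DRAM", one_dpc_dram),
                      ("mixed DRAM", mixed_dram),
                      ("DRAM + Optane", dram_plus_optane)]:
    print(label, socket_capacity_gb(config) / 1024, "TB")
```

The three configurations land at 1.5 TB, 2 TB, and 4.5 TB per socket, which is why the 4.5 TB large memory tier matters most to customers pairing DRAM with Optane.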

This all may have immense value that other chip and platform makers are not charging for, but the simpler solution – which the Intel Xeon once represented against proprietary and Unix systems – is the one that often wins at some point once the performance is in the right ballpark. AMD, Ampere, Marvell, and IBM are making cleaner arguments. There is always a chance to simplify with Ice Lake, when the competition will be even more intense than it is now.

A pair of statements in the fourth paragraph seems contradictory to me, or at least needs more explanation if I’m not reading the intent correctly. First, it states that “Since [January], Intel abolished [the premium it was charging on top of its list prices for access to more than 4.5 TB memory per socket], which cost $3,003 per chip”, which implies Intel dropped a charge with those parameters. But then shortly thereafter, it states that “now you have to pay $3,003 additional per socket to have 4.5 TB of memory [same parameters?]… but that is a lot less than the $7,897 Intel was charging before January.” So some number is misprinted in the text, there’s a timing sequence that’s not clear, or there’s a piece of context making it hard to interpret. Could you clarify? Thanks…

You are right. It was badly written. I think it is fixed now.

“Intel is trying to be clever and use it sever-broadening SKU stack…” probably should be “Intel is trying to be clever and use it’s ever-broadening SKU stack…”.

It is actually “its”, but you are correct. So screw this sentence, basically….

Intel’s XEON product portfolio looks a lot like “If you can’t dazzle them with brilliance, baffle them with bullshit”. It used to be sufficient to have a semiaccurate Intel decoder ring to choose a XEON model, but now one needs a multi-dimensional least-action hypersurface fitting reduction algorithm.

Well said, sir. But there is an official price/performance cut in there. Somewhere. They could have just cut price and not confused the crap out of customers.