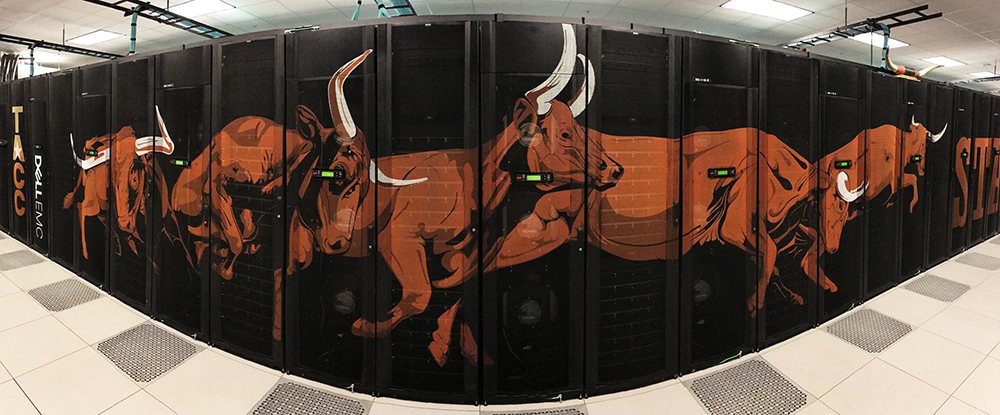

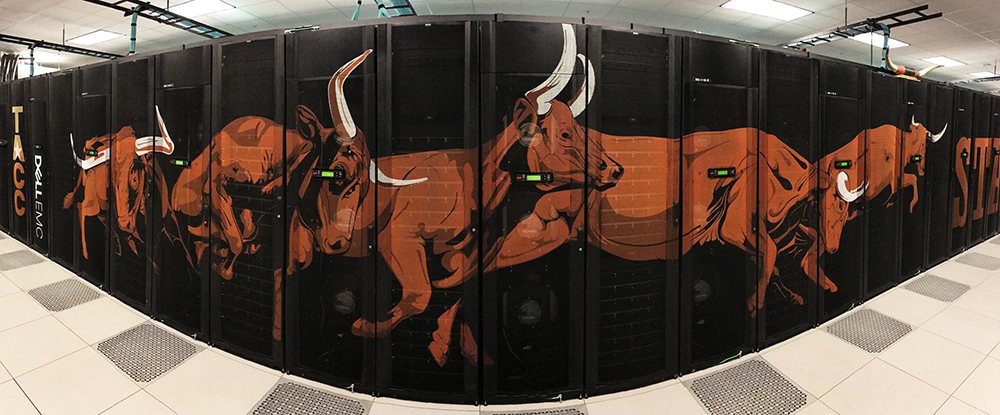

Putting TACC’s “Stampede3” Through The HBM Paces

When you host the workhorse supercomputers of the National Science Foundation, you strive to provide the best possible solutions for your scientists. …

Institutions supporting HPC applications are finding increased demand for heterogeneous infrastructures to support simulation and modeling, machine learning, high-performance data analytics, collaborative computing and analytics, and data federation. …

Digitally prototyping complex designs, such as large physical structures, biological features, and micro-electromechanical systems (MEMS), requires supercomputers running sophisticated multiphysics solvers. …

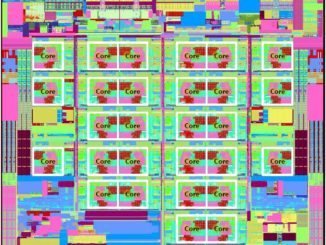

The drive toward exascale computing is giving researchers the largest HPC systems ever built, yet key bottlenecks persist: the need for more memory to accommodate larger datasets, for persistent memory that stores data on the memory bus instead of on drives, and for the lowest possible power consumption. …

With 5.4 petaflops of peak performance crammed into 760 compute nodes, the system packs a lot of computing capability into a small space, and that space generates a considerable amount of heat. …

All Content Copyright The Next Platform