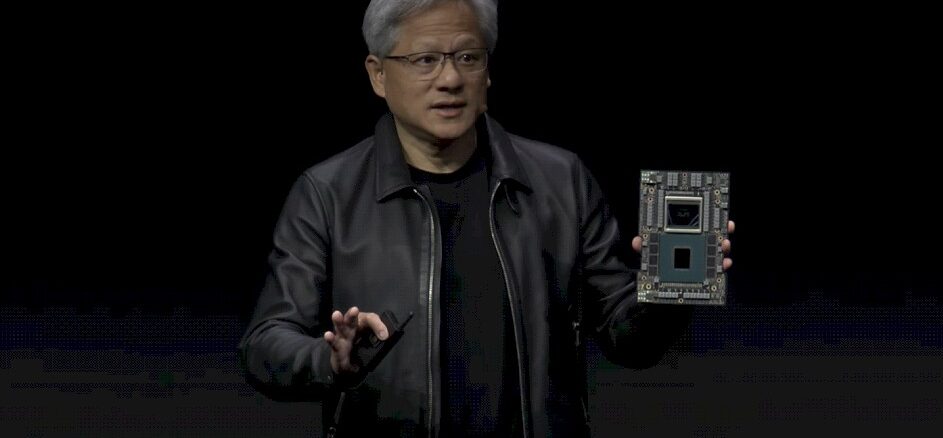

Nvidia Gooses Grace-Hopper GPU Memory, Gangs Them Up For LLM

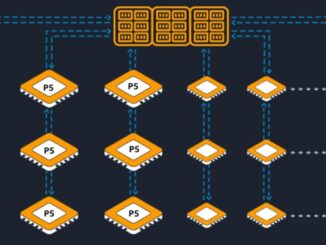

If large language models are the foundation of a new programming model, as Nvidia and many others believe they are, then the hybrid CPU-GPU compute engine is the new general-purpose computing platform. …