There are many good processors out there, but there are few that are excellent and elegant inasmuch as they are co-designed for specific workloads and then do them very well.

The Sparc64-VIIIfx chip and related Tofu 1 interconnect used in the K supercomputer that ran at RIKEN for more than eight years were extremely efficient and ran actual workloads better than many hybrid CPU-GPU machines that have come out since then. The K supercomputer would still be useful today, we think, were it not necessary for the RIKEN lab to unplug it, which it did in August, to make way for the “Fugaku” Post-K machine, which will be based on the A64FX manycore Arm processor developed by Fujitsu. The hopes are high that Fujitsu can, once again, create an elegant machine that works very well on real HPC workloads, and there are customers who want to buy and test the architecture and invest in its advancement. But it is not always politically possible for Fujitsu to sell computers to such customers, or it is sometimes desirable to stick with a known vendor, as Cray certainly is in HPC centers in North America and Europe.

This, in a nutshell, is why Cray, which is now part of Hewlett Packard Enterprise, has inked a partnership deal with Fujitsu that will see Cray put the future A64FX processor into the “Storm” line of CS500 clusters and peddle them in the United States, in Europe, and presumably elsewhere around the globe outside of the Japanese market that Fujitsu can handle by itself.

Cray has been building Arm clusters for HPC customers based on Marvell’s ThunderX2 processors thus far, and this is a big and perhaps surprising addition to the arsenal of Arm engines considering the amount of floating point compute that the A64FX chip is expected to have over the ThunderX2 and the future “Triton” ThunderX3 processors. The deal with Fujitsu doesn’t mean that Cray will not partner with other Arm chip suppliers – mainly Marvell or Ampere at this point – to build systems, but we expect that the ThunderX2 and ThunderX3 will be used mainly in hybrid CPU-GPU systems or more modest CPU-only Arm clusters going forward and that the A64FX will be used in machines that need to do a lot of math all on the CPU. There will no doubt be price and price/performance differences across these various Arm architectures when applied to HPC workloads, and all of these factors will determine the choices that organizations make when they buy. Cray just wants to be able to sell as wide a set of compute and networking options as it can with both the CS500 clusters and its future “Shasta” high-end supercomputers, which will start rolling out at the end of this year and ramp up into the exascale era from 2021 through 2023.

“We are still shipping ThunderX2 systems, and we will continue to do so,” Brian Johnson, director of product management at Cray, tells The Next Platform. “We are bringing the A64FX to market because Fujitsu has brought the Scalable Vector Extensions and HBM memory that people have been really looking forward to, and putting it into the CS500 is the quickest possible way for them to get their hands on them. We have been actively involved with Arm on SVE, as has been Fujitsu, and we are eager to get this into the market.”

Initial customers that Cray has lined up for the CS500 with the A64FX processors include Los Alamos National Laboratory, Oak Ridge National Laboratory, Stony Brook University, University of Bristol, and, believe it or not, RIKEN. No, RIKEN is not building the future Fugaku system out of CS500 racks, but it is going to be putting a Cray cluster next to the custom Fugaku cluster that Fujitsu is building for RIKEN. (We would have thought, based on the intense interest that Sandia National Laboratories has shown in Arm clusters, that it would also be on this list, particularly with HPE building the “Astra” ThunderX2 cluster for that HPC center. Sandia did not wait for the Triton ThunderX3 processor, which still has not launched.)

It is perhaps appropriate to review a few things about the A64FX processor and the Fugaku system as well as its predecessors and their processors before discussing Cray’s plans for the A64FX. We went through the history of the K machine and its commercialized PrimeHPC FX100 server variants from Fujitsu back in June 2016, when the first details about the Post-K machine and its adoption of the Arm architecture with the SVE floating point extensions were divulged. The Sparc64-fx series of chips aimed at HPC workloads progressed from 8 to 16 to 32 cores and from the Tofu 1 to the Tofu 2 interconnect, and interestingly, the 32-core Sparc64-XIfx processor had integrated Hybrid Memory Cube (HMC) memory on the processor package, much as Intel’s “Knights Landing” Xeon Phi processors did. The Sparc64-XIfx processors had about 8X the floating point oomph (4X the cores and 2X the vector width) and the Tofu 2 interconnect had about 2.5X the bandwidth of the processors and interconnect used in the K supercomputer, but it was not deployed in an upgraded K system because it would have been very expensive to do so. The K machine was designed to break through 10 petaflops of floating point performance at double precision, and it accomplished that goal. With denser packaging and more nodes, Fujitsu could have broken the 100 petaflops barrier with a PrimeHPC FX100 system, but the Japanese government did not invest in such a machine; this was understandable considering that the K supercomputer cost on the order of $1.2 billion and that other architectures, notably CPU-GPU hybrid machines, were in vogue and could do double duty as HPC and AI systems.
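The 8X figure above is simply the product of the two ratios quoted in the text. Here is a minimal sketch of that arithmetic; the function name and the assumption that clock speeds are equal between the two chips are ours, not figures from Fujitsu.

```python
# Peak floating point throughput scales with cores x vector width x clock.
# ASSUMPTION: clock_ratio defaults to 1.0 (equal clocks); the article only
# quotes the core and vector-width ratios.

def flops_scaling(core_ratio: float, vector_width_ratio: float,
                  clock_ratio: float = 1.0) -> float:
    """Return the relative peak-flops multiplier between two chips."""
    return core_ratio * vector_width_ratio * clock_ratio

# Core counts from the article: the series went from 8 to 32 cores.
k_cores, fx100_cores = 8, 32
core_ratio = fx100_cores / k_cores          # 4X the cores
vector_width_ratio = 2.0                    # 2X the vector width

speedup = flops_scaling(core_ratio, vector_width_ratio)
print(f"Relative peak floating point: {speedup:.0f}X")
```

Any clock speed difference between the two generations would scale the result further, which is one reason the article says "about" 8X.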

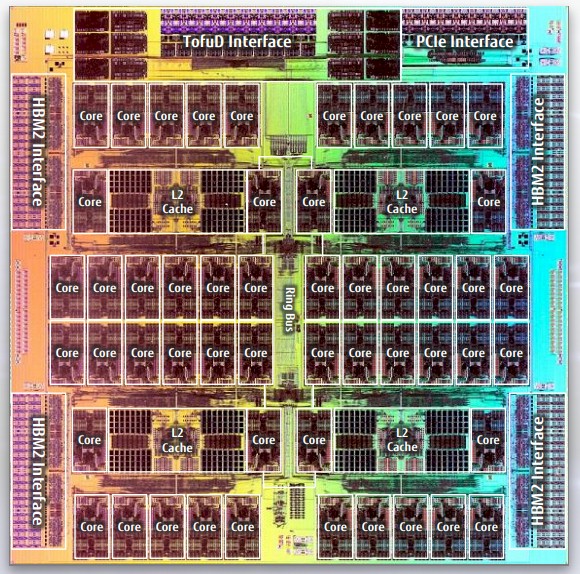

We profiled the Fugaku system prototype back in June 2018, when Satoshi Matsuoka, director of the RIKEN lab, revealed some aspects of the system. More of the architectural details of the A64FX processor were unveiled in August 2018, and Fujitsu and RIKEN decided to switch the Sparc cores for Arm cores and add 512-bit vectors to the cores as well as boosting the core count by 50 percent from 32 cores to 48 cores. (The Sparc64-XIfx had two helper cores for running system work, and the A64FX has four such cores.)

Matsuoka has said that using the A0 stepping of the A64FX processor, a Fugaku node delivers 2.5 teraflops at double precision within a power envelope for the node – including processor, memory, and the controller for the Tofu 3 interconnect – of between 160 watts and 170 watts, or around 14 gigaflops per watt.
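To see where the efficiency figure comes from, the node numbers above can be divided out directly. This is a rough sketch using only the 2.5 teraflops and 160 watt to 170 watt figures quoted in the text; the helper name is hypothetical.

```python
# Energy efficiency of a single node from the figures quoted above.

def gflops_per_watt(teraflops: float, watts: float) -> float:
    """Convert teraflops to gigaflops and divide by power draw."""
    return teraflops * 1000.0 / watts

node_teraflops = 2.5                 # double precision, per node
power_low, power_high = 160.0, 170.0 # node power envelope in watts

worst_case = gflops_per_watt(node_teraflops, power_high)
best_case = gflops_per_watt(node_teraflops, power_low)
print(f"{worst_case:.1f} to {best_case:.1f} gigaflops per watt")
```

On these inputs the result lands between the high 14s and the mid 15s, so the rounded figure in the text corresponds to the upper end of the power envelope.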

One interesting tidbit of information to consider about the A64FX processor: The 32 GB of HBM2 memory on the processor package has more than 1 TB/sec of memory bandwidth. That is significantly more than the memory bandwidth supplied by Nvidia’s “Volta” Tesla V100 GPU accelerator, which has 32 GB of capacity at 900 GB/sec of bandwidth, and it is an order of magnitude more than a Xeon SP socket can deliver.
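The comparison can be put into rough numbers. In the sketch below, the A64FX and V100 figures come from the text (with 1,000 GB/sec used as a lower bound for "more than 1 TB/sec"), while the Xeon SP figure is our own assumption: the theoretical peak of six DDR4-2666 channels at 8 bytes per transfer, which real workloads will not fully reach.

```python
# Rough memory bandwidth comparison, all values in GB/sec.

def ddr_peak_gbs(channels: int, megatransfers_per_sec: int,
                 bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth of a DDR memory subsystem in GB/sec."""
    return channels * megatransfers_per_sec * bytes_per_transfer / 1000.0

a64fx_gbs = 1000.0   # lower bound for "more than 1 TB/sec" (from the text)
v100_gbs = 900.0     # Tesla V100 figure quoted in the text

# ASSUMPTION: a Xeon SP socket with six channels of DDR4-2666.
xeon_sp_gbs = ddr_peak_gbs(channels=6, megatransfers_per_sec=2666)

advantage_over_v100 = a64fx_gbs / v100_gbs - 1.0
ratio_to_xeon = a64fx_gbs / xeon_sp_gbs

print(f"A64FX vs V100: +{advantage_over_v100:.0%}")
print(f"A64FX vs Xeon SP socket: {ratio_to_xeon:.1f}X")
```

Under these assumptions the gap to the V100 is about a ninth, and the gap to the Xeon SP socket is a bit under 8X at theoretical peak, which is roughly, though not quite, an order of magnitude.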

It would have been interesting if Cray had also tapped Fujitsu’s Tofu 3 interconnect, which is also known as Tofu D and which we profiled in detail last year; it is a much more energy efficient interconnect, and a more clever one, too, than its Tofu 1 and Tofu 2 predecessors. But you won’t find the Tofu 3 interconnect being installed on the CS500 systems from Cray, at least not initially. The Sparc64 and A64FX processors have an embedded PCI-Express controller on the die and it is this port that will allow for the A64FX nodes in a CS500 to be clustered together. Johnson says that customers will be able to use InfiniBand or Ethernet interconnects to lash together the Arm server nodes. At this point, Cray’s “Slingshot” Ethernet interconnect, based on its “Rosetta” ASIC, will not be available in the CS500 either, with or without the A64FX processor. And furthermore, at this point, according to Johnson, Cray is not offering customers the ability to put A64FX processors into the Shasta systems where the Slingshot interconnect is available. But nothing is precluding Cray from changing its roadmaps, Johnson adds. It all depends on customer demand.

The A64FX is at the heart of two academic supercomputers, as it turns out. The State University of New York’s campus at Stony Brook on Long Island received a $5 million grant from the National Science Foundation back in August to install clusters that stress test new technologies, and the resulting “Ookami” (Japanese for “wolf”) system will be based on Cray CS500 systems with A64FX processors.

And perhaps more notably, the “Isambard 2” system going into the University of Bristol will also be based on the Cray systems with the Fujitsu A64FX processors. The original Isambard system, which was based on a Cray XC50 system using the “Aries” dragonfly interconnect and 10,496 Arm cores across 164 server nodes, was instrumental in running benchmarks that proved that the ThunderX2 chip could stand toe-to-toe on HPC workloads with Intel “Broadwell” Xeon E5 and “Skylake” Xeon SP processors. It would have been natural enough for Bristol to just wait for the ThunderX3 chip from Marvell and move to the Slingshot interconnect in a Shasta machine, but that didn’t happen. We will circle back and find out what Bristol’s plans are for networking and storage on the Isambard 2 machine, and why it went with A64FX instead of ThunderX3 as the compute engine.

There is always a political angle to supercomputing at the national labs and to a certain extent at academic and other government supercomputing centers, and that is because they are publicly funded. So the Cray and Fujitsu partnership is a more collaborative approach than the two engaged in two decades ago. Back in the late 1990s, when the National Center for Atmospheric Research – the facility that bought the very first Cray-1A supercomputer back in 1976 – wanted to buy an NEC vector supercomputer to do weather modeling, Cray claimed that NEC, Fujitsu, and Hitachi were dumping their supercomputing systems on the market in the United States – meaning selling them at below cost. And Uncle Sam backed Cray up, imposing very large import duties on the gear to level the playing field. (Ironically, it was IBM that benefitted most from those import duties, having won years of contracts peddling Power-based systems to NCAR.) Neither Cray nor Fujitsu wants to go through a trade war again, and hence they are cooperating.

As for RIKEN, it looks like the Japanese HPC center is interested in exploring the differences between the Cray and Fujitsu software stacks and various interconnects when it comes to deploying A64FX processors. RIKEN is at the heart of software development for the A64FX, and now Cray will bring some of its expertise to bear alongside Fujitsu. RIKEN just wants to squeeze every flop out of the Fugaku box, and this is one way to accomplish that goal. Oak Ridge is getting its CS500 so it can see how good the architecture is, and Los Alamos is doing much the same and made no bones about it.

“The most demanding computing work at LANL involves sparse, irregular, multi-physics, multi-length-scale, highly resolved, long running 3D simulations,” explained Gary Grider, deputy division leader for the HPC division at LANL, in the statement announcing the partnership between Cray and Fujitsu. “There are few existing architectures that currently serve this workload well. We are excited to see a potential solution and are happy to be helping prove this Cray and Fujitsu technology is a viable alternative for this need. Having this type of capability will be quite complementary to other resources in the NNSA computing complex.”

Clearly neither Cray nor its customers are ready to go in head first on this architecture. Putting this in the CS500, and not implementing Tofu means that Cray isn’t willing to invest much engineering resources into it, unless they can drum up more interest from customers. A few 200 node clusters isn’t much. Clearly the customers want to get their hands on these quickly, and explore their capabilities, but the big dollars are still being saved for Rome+GPUs. I wonder what this means for Marvell? Does this mean that ThunderX3 is late and getting later? Is this a stop-gap until Marvell gets SVE?

A64FX is a cool design. It gives GPU-like memory bandwidth to a traditional CPU. That makes it much easier to program than a cpu/gpu hybrid system and provides much better scalar performance, though you do run into the issue of having under a GB of ram per cpu core, which is a significant limitation. One wonders if this limit will require a software re-write almost as big as porting to GPUs. All of this speaks to some of the choices Cray is making. HBM2 on the processor, and the tofu3 interconnect are savvy design decisions for Fugaku, which must optimize around huge scale and energy efficiency. However, for small clusters Tofu3 is likely a big step backwards compared to Tofu2, and may not compete well against infiniband. Similarly, the memory characteristics are likely great for custom-built codes, but may require substantial effort to run general purpose workloads. The things that make A64FX great, are maybe the things that make it ill suited to compete with Xeon and Rome for most clusters. It seems as though A64FX is actually an improved Xeon Phi.

Wouldn’t Fugaku be classified as MPP and not really a cluster?

“One interesting tidbit of information to consider about the A64FX processor: The 32 GB of HBM2 memory on the processor package has more than 1 TB/sec of memory bandwidth. That is significantly more than the memory bandwidth supplied by Nvidia’s “Volta” Tesla V100 GPU accelerator, which has 32 GB of capacity at 900 GB/sec of bandwidth”

I wouldn’t say an 11% increase in bandwidth is “significantly more”, especially when a dedicated card can easily move to a 4096-bit bus doubling the bandwidth to 2TB/s. If you have used the word ‘significant’ for 11% what word do you use for a 100% increase?