As expected, Intel will be the prime contractor for the first exascale supercomputer in the United States, which Argonne National Laboratory expects to be operational and capable of sustained exaflops performance by the end of 2021.

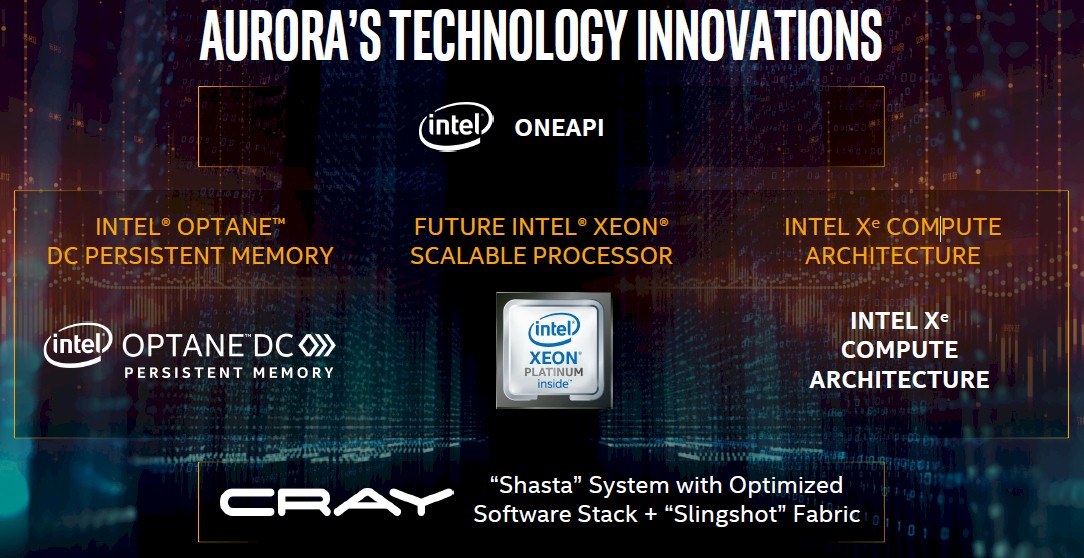

And as was also known, Cray will serve as the subcontractor with its “Shasta” architecture, which will be largely defined by advanced packaging and by the forthcoming “Slingshot” interconnect that Cray created as an alternative to InfiniBand, Omni-Path, and its own previous-generation “Aries” interconnect. Combined with a future generation of Optane 3D XPoint persistent memory, this design aims to cut the energy spent on data movement, which is a major consumer of power in a system of this scale. The actual floating point and integer math performance will come from a future compute platform that pairs future Xeon SP CPUs with the Xe discrete GPUs that Intel's new CPU and GPU architects revealed were under development back in December 2018, an effort that will take on both Nvidia and AMD in graphics display and in GPU compute.

We imagine that the new CXL interconnect that Intel revealed last week, as well as its OneAPI alternative to CUDA for compiling and distributing applications (or portions of them) across CPUs, GPUs, and FPGAs, will also be prominent features of this updated Intel HPC hardware and software stack, and that together they will replicate some of the functionality of leadership-class supercomputers like the Summit system at Oak Ridge National Laboratory or its companion Sierra system at Lawrence Livermore National Laboratory.

Intel had originally proposed, and Cray was to build, a very different kind of Aurora system, with up to 50,000 nodes based on the “Knights Hill” successor to the “Knights Landing” Xeon Phi many-core processor. Intel created the Xeon Phi to take on big HPC jobs and was then tweaking it to do a better job on AI workloads, which are increasingly part of the datacenter and often sit side-by-side with HPC applications or are embedded within them. That original Aurora system was expected to be delivered sometime in 2018 as a pre-exascale system, ahead of the Summit and Sierra machines. Those machines use “Nimbus” Power9 processors and offload the bulk of their math processing to Nvidia Tesla GPU accelerators based on the “Volta” architecture; their nodes are connected by 100 Gb/sec EDR InfiniBand from Mellanox Technologies, which is in the process of being acquired by Nvidia. The new Aurora system uses an architecture that is distinct from, but clearly inspired by, the hybrid CPU-GPU architecture of the pre-exascale Summit and Sierra systems. In this case, the nodes are built from Intel compute engines and Intel's memory hierarchy and are linked by the Slingshot interconnect, which is itself an augmentation of Ethernet with quality of service and congestion management tweaks for HPC and AI workloads.

The details on the revised Aurora system, also sometimes referred to as A21, are very scant, but they match some of the speculation that was going around a year ago, when some details of the system's software architecture were revealed. As expected, it is a mix of processors with different levels and types of math and serial performance, but these are implemented on a single die, as some anticipated and as a number of architectures already do, including the Sunway SW26010 processor used in today's TaihuLight supercomputer and the A64FX processor to be used in the Post-K supercomputer that Fujitsu is building for the RIKEN laboratory as Japan's first exascale system.

The main thrust of today's announcement was to plant a US flag on an exascale supercomputer to be ready by 2021. That means we will have to provide some informed speculation about this machine and what it might mean for the way the most powerful systems are conceived going forward. One thing that was clear during the briefing, however, is that while traditional HPC simulations remain important, data analytics (particularly streaming data analysis) and machine learning are critical as well, and both drove the thinking behind the revised architecture for the Aurora machine. According to Rick Stevens, associate laboratory director at Argonne, the national labs are developing over 100 applications that have an AI hook, and the Exascale Computing Project’s machine learning program is working on more. In a conference call previewing the Aurora machine, Stevens added that both machine learning training and inference workloads are important for this future Argonne system.

The revised Aurora contract comes in at a considerably higher figure than the original machine was expected to cost, with Intel, the prime contractor, receiving more than $500 million. (The original 180 petaflops Aurora was expected to cost around $200 million.) Cray will get more than $100 million of that upwardly revised budget for its Slingshot interconnect, the more than 200 Shasta cabinets that will make up the system, and the Linux software environment that will glue it all together. Intel is also contributing some of the software stack, and it is not clear where the lines between Intel and Cray code will be drawn. Aurora is now expected to be delivered in 2021, with acceptance by Argonne, and therefore revenue recognition by both Intel and Cray, forecast for 2022. Intel and Cray are still working out the details of the subcontract between them, which is expected to be finalized in the middle of this year.

With a whole new team in control of the datacenter business at Intel (new general manager Navin Shenoy, from inside Intel; Murthy Renduchintala, formerly of Qualcomm, who is group president of the Technology, Systems Architecture & Client Group and also chief engineering officer; Raja Koduri, chief architect and GPU designer, formerly of AMD; and Jim Keller, senior vice president of silicon engineering, formerly of Digital Equipment, Apple, and AMD), it is not at all surprising that they have looked at what has succeeded in the market and decided to do something that looks a lot like what the OpenPower collective has created, though no doubt with plenty of Intel twists and tweaks. This is akin to the way AMD’s success with the Opteron processors inspired Intel to completely gut its Xeon server chips and come up with a real competitor to the Opteron (some might say a clone of it) in the “Nehalem” family of Xeons back in 2009. Admitting that the industry weaved when it bobbed is what set Intel up for a decade of unstoppable growth. All it had to do was bob, or in this case, Jim and Raja.

While we know that the future Aurora machine will use the Slingshot interconnect from Cray, we do not know precisely what generation of Xeon SP CPU and Xe GPU will be deployed in the system. There are a couple of different possibilities. The “Ice Lake” Xeon SP processors, Intel’s first 10 nanometer server processors and ones based on the new “Sunny Cove” cores, are due to start shipping in late 2019 with a quick ramp in 2020. The Aurora machine almost certainly does not use this processor. Intel is planning, as we discussed back in December, to update the Xeon line with the “Willow Cove” core sometime next year, with a cache redesign, transistor optimizations, and security enhancements probably relating to the Spectre/Meltdown mitigations, among other things. This kicker to Ice Lake in the Xeon family seems more likely. But it is more likely still that a successor to this Xeon chip, based on the future “Golden Cove” cores due in 2021, will be used, given that it will have boosted single-threaded performance as well as improved performance on AI workloads, presumably aimed mostly at inference.

On the GPU side, Intel is already working on a Gen11 GPU for delivery this year in mobile and workstation computers, which has a total of 72 execution units with 64 of them usable and delivering more than 1 teraflops of single precision performance; divide by two for FP64 double precision and quadruple that for FP16 half precision rates. The first true Xe GPU is expected in 2020, and Intel has been deliberately vague about what to expect here, considering its “Larrabee” GPU effort from a decade ago failed, leaving the door wide open for Nvidia to dominate GPU compute. (The Larrabee effort was revitalized as the Knights family of many-core offload accelerators and eventually free-standing compute engines, but Intel officially mothballed them last summer.) All that Intel has said about the Xe GPUs is that they will range in performance from “teraflops to petaflops” and that they will range from client devices to datacenter devices with broad differentiation across the portfolio of products. We are guessing that Intel will use the first generation Xe chip in the Aurora machine if it has to, and push to a more powerful second-generation Xe if it can, which would cut down on the node count.
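To show where a "more than 1 teraflops" figure for a 64-EU part can come from, here is a back-of-the-envelope sketch of the peak-rate arithmetic. It assumes 16 FP32 FLOPs per execution unit per clock and a hypothetical 1.0 GHz clock; neither number comes from the article, and real clocks vary by SKU. The FP64 figure simply applies the article's divide-by-two rule.

```python
# Back-of-the-envelope peak throughput for an integrated GPU such as Gen11.
# ASSUMPTIONS (not from the article): each execution unit (EU) retires
# 16 FP32 FLOPs per clock (two 4-wide ALUs, each issuing a fused
# multiply-add counted as 2 FLOPs), and the clock is a hypothetical 1.0 GHz.

FP32_FLOPS_PER_EU_PER_CLOCK = 16  # assumed: 2 ALUs x 4 lanes x 2 FLOPs (FMA)


def peak_fp32_tflops(usable_eus: int, clock_ghz: float) -> float:
    """Peak single-precision teraflops = EUs x FLOPs/clock x clock (GHz)."""
    gflops = usable_eus * FP32_FLOPS_PER_EU_PER_CLOCK * clock_ghz
    return gflops / 1000.0


def peak_fp64_tflops(fp32_tflops: float) -> float:
    """The article's rule of thumb: FP64 runs at half the FP32 rate."""
    return fp32_tflops / 2


if __name__ == "__main__":
    fp32 = peak_fp32_tflops(usable_eus=64, clock_ghz=1.0)
    print(f"FP32 peak: {fp32:.3f} TFLOPS")  # prints FP32 peak: 1.024 TFLOPS
    print(f"FP64 peak: {peak_fp64_tflops(fp32):.3f} TFLOPS")  # prints FP64 peak: 0.512 TFLOPS
```

Only the 64 usable EUs matter here; the disabled EUs contribute nothing to the peak, which is why the calculation uses 64 rather than 72.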

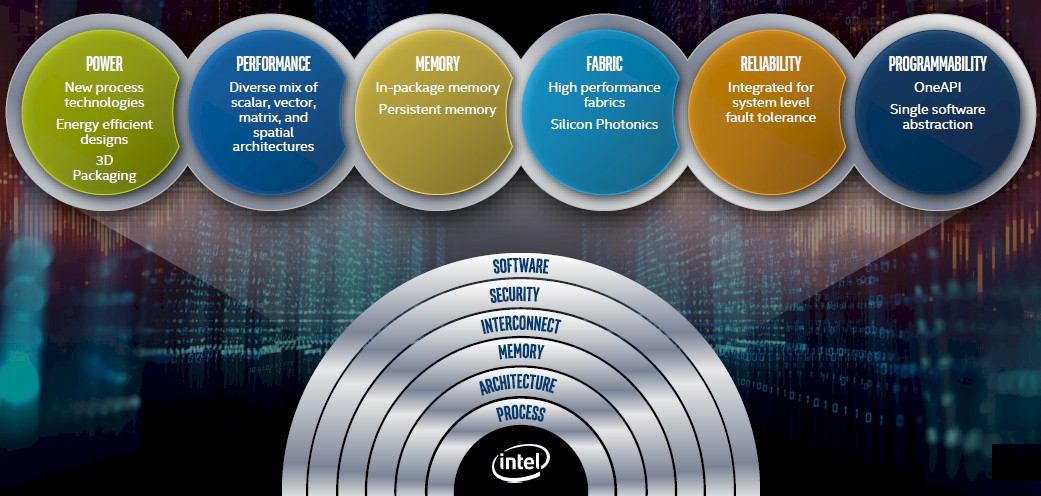

What we can say for sure is that Aurora is the first system Intel has talked about that reflects the six pillars of compute, storage, and networking around which the company has organized all datacenter development since the management of its core teams was shaken up last year. Rajeeb Hazra, now general manager of the Enterprise and Government Group at Intel and formerly in charge of just the HPC business, explained these pillars as part of the Aurora preview. They will be central to all Intel launches going forward, and you can expect that Intel will never again talk about a particular processor in isolation, but rather about the whole stack and how it all fits together.

“We have been talking quite a bit recently about six pillars of innovation that must come together to create systems and capabilities of this scale,” explained Hazra. “Innovations in power and energy efficiency, everywhere from new process technologies to designing new silicon to new ways to put together silicon with packaging innovations.”

Interestingly, the second pillar, as you can see, is performance, and it includes a “diverse mix of scalar, vector, matrix, and spatial architectures.” We think the last of these includes the Configurable Spatial Accelerator, a non-von Neumann configurable compute engine that we told you Intel was working on last summer. We would not be surprised to see a bunch of these CSA chips sprinkled around Aurora, but we don’t think they will be central to the system.

No offence to Raja, but if that’s who Intel is basing its entire strategy on (or even 50% of it), it needs to seriously rethink its approach. Intel has a more fundamental issue than not having a GPU, and the GPUs whose architecture Raja led at AMD were an absolute embarrassment (disgraceful GPUs, really!!).

Intel needs to stop abandoning things, double down (perhaps even on a GPU), and build credibility. Are they sticking with their AP CPU? Are they sticking with OPA? Are they sticking with Lustre? Are they sticking with HPC Orchestrator? Are they sticking with FPGAs?

I cannot recall the last time I saw an entity abandon things at the pace Intel does…