We have spent the past several years speculating about what the “Summit” supercomputer built by IBM, Nvidia, and Mellanox Technologies for the US Department of Energy and installed at Oak Ridge National Laboratory might be. And after its architecture was revealed, we mulled it over and thought about how it might extend our knowledge.

Since the summer, when Summit was first fired up, researchers have been testing some of the applications that they have spent years developing and extending to reach across its 4,608 compute nodes, which have a total of 202,752 cores across its 9,216 IBM “Nimbus” Power9 processors and a total of 2.21 million streaming multiprocessors across its 27,648 Nvidia “Volta” Tesla GPU accelerators. The cores and the SMs are the basic units of compute parallelism in the machine.
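The totals above can be cross-checked with a few lines of arithmetic. This is only a sanity-check sketch: the node, processor, and GPU counts come from the figures quoted here, while the per-chip values (22 cores per Power9 and 80 SMs per Volta) are assumptions chosen because they are consistent with those totals.

```python
# Figures quoted in the article.
NODES = 4608
CPUS_PER_NODE = 2    # 9,216 processors / 4,608 nodes
GPUS_PER_NODE = 6    # 27,648 GPUs / 4,608 nodes

# Assumed per-chip values, consistent with the quoted totals.
CORES_PER_CPU = 22
SMS_PER_GPU = 80

cpus = NODES * CPUS_PER_NODE
gpus = NODES * GPUS_PER_NODE
cores = cpus * CORES_PER_CPU
sms = gpus * SMS_PER_GPU

print(f"{cpus:,} CPUs, {cores:,} cores")
print(f"{gpus:,} GPUs, {sms:,} SMs")
```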

Lashing together these pieces of hardware is the easy part; getting the funds to build machines like Summit can be trying, but the real challenge is creating software that can span this infrastructure. And researchers at Oak Ridge as well as the many scientists who collaborate with them have the added task of trying to integrate new machine learning techniques into the workflow of traditional HPC simulation and modeling workloads. The good news is that hardware that is good at HPC is also good for AI, which is a happy coincidence indeed.

We thought that it was time to talk about the applications that run on this machine and the important science that will get done. This is, after all is said and built, the point of supercomputing. So we sat down with Jack Wells, director of science for the Oak Ridge Leadership Computing Facility (OLCF), which houses the Summit supercomputer and its predecessor, “Titan,” which is also a GPU-accelerated system, to get a feel for what is happening with software on Summit.

Prior to the job of steering the scientific outcomes of the OLCF’s applications, Wells led both the Computational Materials Sciences group in the Computer Science and Mathematics Division and the Nanomaterials Theory Institute in the Center for Nanophase Materials Sciences at the lab. Before joining Oak Ridge in 1997, Wells was a postdoctoral fellow within the Institute for Theoretical Atomic and Molecular Physics at the Harvard-Smithsonian Center for Astrophysics; he has a PhD in physics from Vanderbilt University.

Timothy Prickett Morgan: The hot topic of discussion in HPC circles these days is the mixing of traditional HPC simulation and modeling with various kinds of machine learning, and our guess is that Summit can do both, and do both well.

Jack Wells: It is so early, and it is really going to be challenging to integrate these things. It is not fully mature yet.

Perhaps the best place to start to talk about this is an application in genomics called Combinatorial Metrics, or CoMet for short, that did mixed precision at the exaops rate. This is actually an INCITE project on Titan, Summit’s predecessor, run by Dan Jacobson, chief scientist for computational systems biology division here at Oak Ridge. In this case, the genomics is part of a workflow that includes machine learning, data analytics, and molecular dynamics simulation all in the same project, and they won a one-year INCITE award to execute that. The early science work on this application workflow was done in June, and they really need Summit.

TPM: I saw that when the Summit machine was rolled out back in June. They achieved 1.88 exaops using mixed precision on 4,000 of the IBM Power AC922 nodes, which was most of them. This was a 25X speedup compared to Titan, and 4.5X of that speedup was a result of moving parts of the code to the mixed-precision Tensor Core units on the “Volta” Tesla GPU accelerators in the servers, which do matrix math at FP16 or half precision and output FP32 or single precision results.
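To see why accumulating FP16 products into an FP32 result matters, here is a minimal sketch in plain Python. It is not how CoMet or the Tensor Cores are implemented (the hardware works on small matrix tiles). It is a scalar dot product that emulates the two rounding schemes, using the standard `struct` module to round values to half and single precision. The helper names and the test vectors are made up for illustration.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def dot_fp16(a, b):
    """FP16 inputs, FP16 products, FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc

def dot_mixed(a, b):
    """FP16 inputs, running sum rounded to FP32 after every add."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp32(acc + to_fp16(x) * to_fp16(y))
    return acc

n = 4096
a = [0.1] * n
b = [0.1] * n

# Reference: same FP16-rounded inputs, summed in double precision.
reference = sum(to_fp16(x) * to_fp16(y) for x, y in zip(a, b))
half_result = dot_fp16(a, b)
mixed_result = dot_mixed(a, b)

print("reference :", reference)
print("fp16 sum  :", half_result, "error", abs(half_result - reference))
print("mixed sum :", mixed_result, "error", abs(mixed_result - reference))
```

In the all-FP16 version the running sum eventually grows so large that each new product is smaller than half the spacing between representable values, and the sum stops moving. Keeping the accumulator in FP32 avoids that stall while still taking the inputs at half precision.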

[Editor’s Note: We did this interview before the ACM Gordon Bell finalists and prizes for 2018 were announced, but the work by Jacobson to try to identify the factors that might give rise to opioid addiction using CoMet was one of the winners for demonstrating sustained performance. The other winner was for an application at Lawrence Berkeley National Laboratory that, in collaboration with Oak Ridge National Laboratory, merged machine learning and HPC to do exascale-class weather modeling on Summit, which we covered in detail here.]

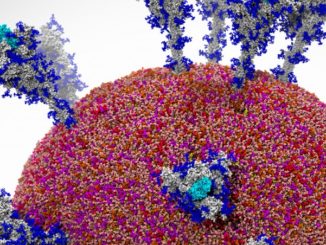

Jack Wells: This CoMet workflow begins with a genetic variation in genomes, and then finding associations, and then using information that is available from other sources to actually come up with molecular structures that can be tested to try to show what these interactions are at the protein level; they will do molecular simulations as well as small molecule docking. It is a very ambitious, scale-spanning approach, and the fact that it is going to be on a supercomputer with the data present and with a familiar software stack and tools gave it a good chance to be successful.

TPM: What is the software stack that CoMet is using for the simulation, analytics, and machine learning?

Jack Wells: The machine learning is performed in PyTorch, and the molecular dynamics code they are going to use escapes me right now. Right now, these things are going to be coupled through the file system. It is not that they are all wired together and streaming data from one to the other, but there is the potential to do that somewhere down the road.

TPM: This is a theory question, but do you think a lot of the HPC codes that are out there have datasets that can be used for machine learning and simulation to augment each other and have them be used in this fashion? There is a lot of text data and patient medical record data and other sources that could be brought to bear in this particular situation. But are there always cases where this is possible, and therefore this type of hybrid HPC-AI approach becomes commonplace?

Jack Wells: I don’t know if it will always work, but I do know that a lot of smart teams are widening their aperture and applying these techniques at many different levels. I can give you another example from our INCITE application readiness program.

It is a seismology simulation, called SPECFEM, done by Jeroen Tromp of Princeton University. The big goal is to create a global tomograph of the Earth’s mantle by basically inverting earthquake data, solving an inverse problem iteratively where you successively refine the model, making it more accurate, by propagating earthquakes through the mantle and comparing the simulated seismograms to the observed seismograms. That creates an error function at each seismogram that can serve as a source for a back-propagation through an adjoint equation that takes you one step in an iterative refinement to get a more accurate representation of the Earth’s mantle.
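The loop described here (forward simulation, misfit, adjoint back-propagation, model update) can be sketched on a toy problem. The example below is not SPECFEM, which solves the wave equation in three dimensions. It swaps in a small linear forward operator `G` so that the adjoint is simply the transpose, and it uses plain gradient descent. The matrix, step size, and function names are all hypothetical choices for illustration.

```python
def forward(G, m):
    """Forward simulation: predict data from model m (here a matrix-vector product)."""
    return [sum(g * x for g, x in zip(row, m)) for row in G]

def adjoint(G, residual):
    """Adjoint step: back-propagate data residuals into model space (G transposed)."""
    return [sum(G[i][j] * residual[i] for i in range(len(G)))
            for j in range(len(G[0]))]

def misfit(G, m, d_obs):
    """Least-squares error function between simulated and observed data."""
    return 0.5 * sum((s - o) ** 2 for s, o in zip(forward(G, m), d_obs))

def invert(G, d_obs, m0, step, iterations):
    """Iteratively refine the model by stepping against the misfit gradient."""
    m = list(m0)
    history = [misfit(G, m, d_obs)]
    for _ in range(iterations):
        residual = [s - o for s, o in zip(forward(G, m), d_obs)]  # adjoint source
        gradient = adjoint(G, residual)
        m = [x - step * g for x, g in zip(m, gradient)]
        history.append(misfit(G, m, d_obs))
    return m, history

# Toy setup: three "seismograms" constraining a two-parameter "mantle" model.
G = [[1.0, 0.5], [0.2, 1.0], [0.7, 0.3]]
m_true = [2.0, -1.0]
d_obs = forward(G, m_true)  # stand-in for recorded data

m_est, history = invert(G, d_obs, m0=[0.0, 0.0], step=0.3, iterations=500)
print("estimated model:", m_est)
print("misfit: first", history[0], "last", history[-1])
```

The residual between simulated and observed data plays the role of the adjoint source mentioned above, and each pass through the loop is one step of the refinement. Real tomography adds many sources, a nonlinear forward model, and more sophisticated update rules.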

On Titan, they started with a couple of hundred earthquakes, and each earthquake has thousands of seismograms, and they have moved up to about a thousand earthquakes and maxed out Titan. But the database has over 6,000 earthquakes in it and they can’t use them on Titan, but on Summit, they should be able to do it. Also, they have to filter the information in the seismograms to certain frequency ranges, and now they can open up that aperture and get more detail.

So, where are they using machine learning? Historically, the most labor-intensive part of this workflow has been in data pre-processing and cleaning because when they are bringing the data in, they have to make sure that all of the filtering is done appropriately and that any weird errors in the data are removed. A postdoctoral researcher who has a faculty job now, Yangkang Chen, who was here as part of our application readiness program working with Professor Tromp at Princeton, has automated that data preparation step using machine learning algorithms.

That is just one type of problem, and they identified the opportunity to use machine learning and it needs to be done in an integrated way because this whole workflow is integrated – you are doing forward simulations, creating this error function, and then doing backwards simulations, and at scale on Titan and soon on Summit.

Another application comes from the University of Tokyo Earthquake Research Institute. This is a team that is usually a finalist in the Gordon Bell Prize competition. I don’t think this work is published yet [it wasn’t at the time of the interview], so I am hesitant to talk too much about it, but they do finite element analysis for earthquakes. The unstructured meshes can become entangled and can lead to slowdowns in convergence. There are some difficulties and historically they would insert a human in the loop and smooth out some of these entangled meshes so the iteration can proceed. Machine learning is learning to untangle the knots in these meshes. I am aware that Fred Streitz, chief computational scientist at Lawrence Livermore National Laboratory, has given talks where the same kind of phenomena are described, with machine learning being used to overcome these algorithmic challenges.

[We discuss the earthquake work at the University of Tokyo, including something called AI preconditioning, with Ian Buck, the general manager of accelerated computing at Nvidia, in this interview from the SC18 supercomputing conference.]

The use of machine learning is coming to traditional simulation science areas to overcome a particular challenge. There is going to be, at least for us at Oak Ridge, non-traditional or experimental or observational data problems where simulation is going to augment the machine learning approach.

I think the no-brainer there is with training data. There are so many areas where people want to use machine learning to characterize images – it is essentially the same problem as finding cats, but the cats are images created by, for instance, X-ray scattering from a polymer or other kinds of images that need to be classified and processed. In these latter physics-based examples, we have wonderfully predictive models for what should be going on based on physics. So we can augment the data for training a neural network using simulation data plus the training data.

Of course, one of the big challenges is getting enough data that you can accurately train the networks. People are going to put these together in careful ways, with the assumption being that the simulation tells you what to expect and then add additional training data to maybe include information that may be new or novel or unexpected.
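The idea of padding a scarce measured dataset with physics-based simulation output can be sketched as follows. Everything here is a stand-in: the "physics model" is a made-up one-dimensional feature generator, the measured samples are invented, and a nearest-centroid rule takes the place of a neural network so the example stays short and uses only the standard library.

```python
import random

def simulate(label, n, rng):
    """Hypothetical physics model: class 0 features cluster near 1.0, class 1 near 3.0."""
    center = 1.0 if label == 0 else 3.0
    return [(center + rng.gauss(0.0, 0.2), label) for _ in range(n)]

def fit_centroids(samples):
    """Train a nearest-centroid classifier: one mean feature value per label."""
    sums, counts = {}, {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# A handful of (feature, label) measurements; invented values for illustration.
measured = [(1.1, 0), (2.9, 1), (3.6, 1)]

# Simulation supplies the bulk of the training set.
simulated = simulate(0, 200, rng) + simulate(1, 200, rng)

training_set = simulated + measured
model = fit_centroids(training_set)

print("training samples:", len(training_set))
print("prediction for 0.9:", predict(model, 0.9))
print("prediction for 3.2:", predict(model, 3.2))
```

The measured points carry whatever the simulation does not capture, which is why they are kept in the training set rather than replaced. How to weight the two sources against each other is a design question this sketch does not address.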

TPM: This is precisely what is happening with self-driving cars right now. They can’t get the billions of hours of road time with the experimental vehicles to gather enough varied data to fully train the neural networks, so researchers are starting to use simulated worlds running at a much higher speed to build up the training set library and therefore improve the effectiveness of the neural networks.

Jack Wells: If I look at this just from the point of view of my own career, when I was a post-doc studying theoretical physics, we wanted to ignore the data in order to have an accurate prediction that was unadulterated and uninfluenced by experiment and write our paper so we could compare it to the experimental paper. Or maybe if it was complicated, we would write a joint paper and work out all of this stuff together. Just fitting data had a bad reputation. We had to come up with something that we perceived as being pure and more elegant and more predictive using more and more accurate many body theory, for instance.

But the problems are really hard. And the problems we were trying to solve in nuclear structure physics or excitations of crystal materials for photovoltaics – these are really hard. And so progress has been slow. It is not fast. Supercomputers get faster, but they don’t get faster fast enough. We go through all of this effort and we get a factor of 10X, when a factor of 10X compared to the challenge is not that much. But there was all of this data out there that wasn’t integrated. Likewise, you can turn it around. Experimentalists trying to work on these things have models, but how are they integrating the data?

This is like trying to work on a task with one arm tied behind your back. What is happening is that people are taking a broader view of what all available resources are there in order to really try to make significant steps forward to try to solve these really hard problems. I think it is refreshing. We do not have to – and I will use a strange word for science – have doctrinal chains on to attack these problems. Now we can bring everything we have to the workbench and try to make progress. It ends up with us trying to integrate the data we have with the models that we have.
