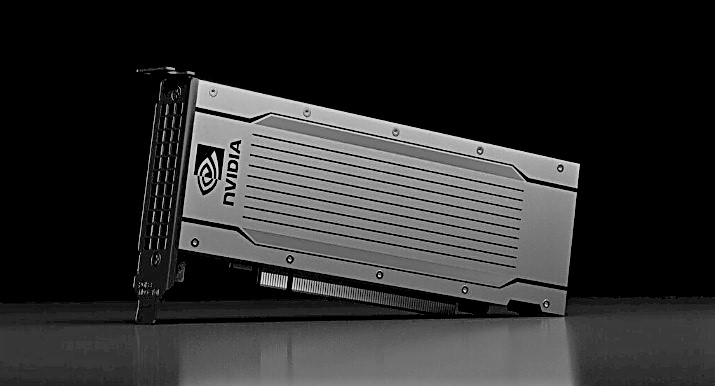

The Still Expanding Market For GPU Compute

At this point in the history of the IT business, it is a foregone conclusion that accelerated computing, perhaps in a more diverse manner than many of us anticipated, is the future both in the datacenter and at the edge. …