Moore’s Law is effectively boosting compute capability by a factor of ten over a five-year span, as Nvidia co-founder and chief executive officer Jensen Huang reminded Wall Street this week when talking about the graphics chip maker’s financial results for the second quarter of fiscal 2019.
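To put that pace in more familiar terms, the tenfold-in-five-years figure can be converted into a per-year multiplier and a doubling time. The short Python sketch below does that arithmetic, assuming smooth compound growth; the function names are ours and purely illustrative.

```python
import math

def annual_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier that compounds to total_factor over the given years."""
    return total_factor ** (1.0 / years)

def doubling_time(total_factor: float, years: float) -> float:
    """Years needed to double at the same compound rate."""
    return years * math.log(2) / math.log(total_factor)

# Figure quoted above: 10X over five years (assumes smooth compounding).
per_year = annual_factor(10, 5)
years_to_double = doubling_time(10, 5)

print(f"Per-year multiplier: {per_year:.2f}X")
print(f"Doubling time: {years_to_double:.2f} years")
```

Under that assumption, the pace works out to roughly 1.58X per year, or a doubling about every 18 months.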

Moore’s Law has really been utilized to drive down the cost of a unit of compute and then drive up utilization at the same time, a perfect example of the elasticity of demand to cost. And with Moore’s Law increases in performance slowing down on CPUs, it was inevitable, perhaps, that Nvidia – or some other company peddling parallel processors – would drive a new type of computing architecture that delivered the kind of performance and price/performance that would stay on the Moore’s Law track or beat it.

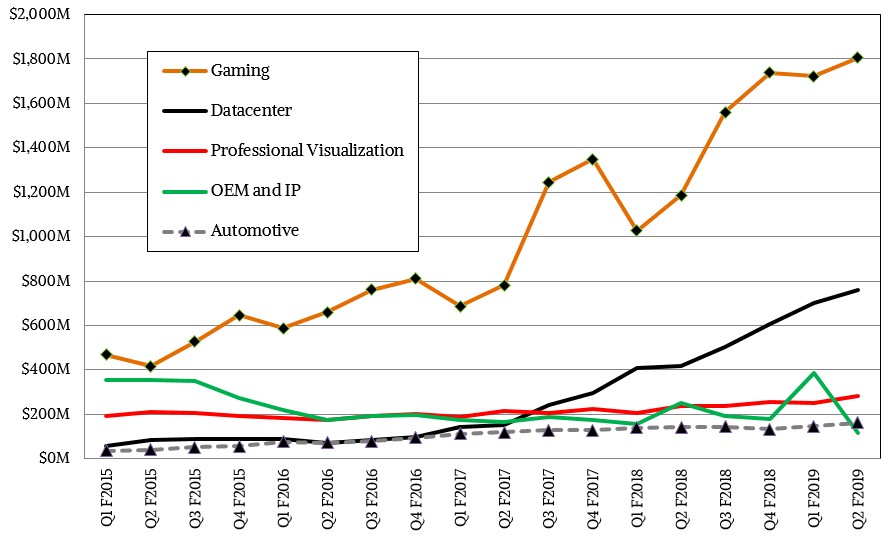

Something had to give. HPC made it break, machine learning then widened the gap a whole lot further, and now Nvidia’s datacenter business is itself growing faster than Moore’s Law, rising from just north of nothing about a decade ago to more than a $3 billion annualized run rate as the second quarter ended in July. As fiscal 2015 was getting underway, Nvidia’s datacenter business was a mere $57 million a quarter, and a little more than four years later, it has brought in $760 million in a quarter and probably has something on the order of 80 percent gross margins. There is no question that the growth rate in this datacenter business is slowing, as inevitably happens with all new waves of computing and the systems that deploy them. But over those four years the revenue stream from the glass house, dominated by machine learning and HPC, has grown by a factor of 13X. Even if you assume a slowing of growth as Nvidia rides down the Moore’s Law curves and provides ever-larger chunks of compute for the same money, by the time 2024 rolls around the datacenter business at Nvidia could be as large as $25 billion a year. There are a lot of assumptions in that projection, mainly that all kinds of compute become accelerated, interpreted by machine learning, and visualized on the fly, but that would be about a factor of 80X growth in a decade.

We are setting the bar pretty high for Nvidia here, but no higher than Huang and his team at Nvidia have set for themselves.
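As a rough check on the datacenter growth figures quoted above, the following Python sketch recomputes the growth multiple, the annualized run rate, and the implied per-year growth, and compares the last of these with the Moore’s Law pace of 10X over five years cited at the top of this story. The variable names are illustrative, and treating "a little more than four years" as exactly four years is our simplifying assumption.

```python
# Figures quoted in the article, in millions of dollars per quarter.
start_quarter = 57.0     # as fiscal 2015 was getting underway
latest_quarter = 760.0   # quarter ended in July
years_elapsed = 4.0      # assumption: "a little more than four years" rounded down

growth_multiple = latest_quarter / start_quarter
annualized_run_rate = latest_quarter * 4  # four quarters at the latest pace
datacenter_per_year = growth_multiple ** (1.0 / years_elapsed)

# Moore's Law pace as cited in the article: 10X over five years.
moore_per_year = 10 ** (1.0 / 5)

print(f"Growth multiple: {growth_multiple:.1f}X")
print(f"Annualized run rate: ${annualized_run_rate / 1000:.2f} billion")
print(f"Datacenter growth per year: {datacenter_per_year:.2f}X")
print(f"Moore's Law pace per year: {moore_per_year:.2f}X")
```

Rounding the elapsed time down to four years slightly overstates the annual rate, but the datacenter business still comes out well ahead of the Moore’s Law pace.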

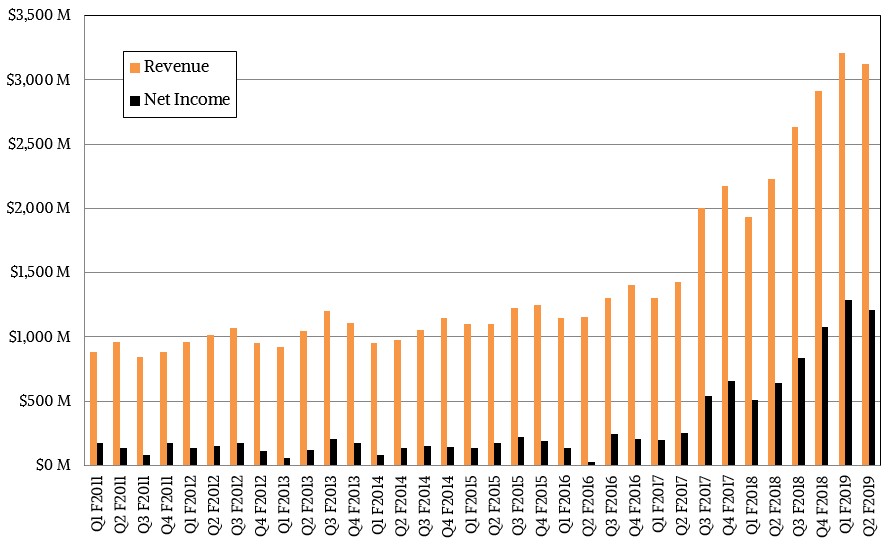

With that far-off future in mind, let’s return to the present and take a look at what happened in the second quarter. In the quarter ended in July, Nvidia’s overall revenues rose by 40 percent to $3.12 billion, and net income rose at an even faster rate of 88.9 percent to $1.1 billion, representing 35.3 percent of revenues. That is twice the profit rate that you might expect from a healthy Fortune 500 or Global 2000 company, to put it into perspective. And it is an amazing transformation of what used to simply be a maker of graphics chips for gamers on PCs.
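The quarterly figures above can be cross-checked, and the year-ago quarter backed out, with a few lines of arithmetic. This Python sketch uses only the numbers quoted in the paragraph; because the $1.1 billion net income figure is rounded, the derived values are approximate, and the variable names are ours.

```python
# Figures quoted above, in billions of dollars.
revenue = 3.12
revenue_growth = 0.40        # up 40 percent year on year
net_income = 1.1             # rounded in the article
net_income_growth = 0.889    # up 88.9 percent year on year

# Share of revenue that dropped to the bottom line this quarter.
net_margin = net_income / revenue

# Implied year-ago figures, obtained by undoing the growth rates.
prior_revenue = revenue / (1 + revenue_growth)
prior_net_income = net_income / (1 + net_income_growth)
prior_margin = prior_net_income / prior_revenue

print(f"Net margin this quarter: {net_margin:.1%}")
print(f"Implied year-ago revenue: ${prior_revenue:.2f} billion")
print(f"Implied year-ago net income: ${prior_net_income:.2f} billion")
print(f"Implied year-ago net margin: {prior_margin:.1%}")
```

The net margin reproduces the 35.3 percent cited, and the implied year-ago margin is in the mid-20s, which shows how quickly profits have outrun sales.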

Nvidia’s datacenter business is dominated by the Tesla GPU accelerators, but it is also now seeing contributions from DGX system sales as well as HGX hyperscale component sales based on NVLink and, soon, the new NVSwitch. We think that DGX system sales comprise probably somewhere between 10 percent and 15 percent of Nvidia’s datacenter revenues, and thus far all that the company has confirmed is that DGX drives “hundreds of millions of dollars” in sales per year at this point. We think that DGX plus HGX will eventually drive somewhere around a third of sales in the near term, which explains why Nvidia started selling systems and components in the first place. Yes, Nvidia wants to control more of its platform. But it also wants to boost its revenues to tell a better story.

That’s what we would do, and that is what Intel has done and what AMD is trying to do.
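Our DGX estimate above can be sanity-checked against Nvidia’s "hundreds of millions of dollars" statement. The Python sketch below applies the 10 percent to 15 percent share to the $760 million datacenter quarter cited earlier; multiplying one quarter by four to get a yearly figure is our simplifying assumption, and the variable names are illustrative.

```python
# Datacenter revenue for the quarter, in millions of dollars (from the article).
datacenter_quarter = 760.0

# Our estimated DGX share of datacenter revenue.
low_share, high_share = 0.10, 0.15

# Quarterly DGX revenue implied by that share.
dgx_quarter_low = datacenter_quarter * low_share
dgx_quarter_high = datacenter_quarter * high_share

# Assumption: annualize by multiplying the latest quarter by four.
dgx_year_low = dgx_quarter_low * 4
dgx_year_high = dgx_quarter_high * 4

print(f"DGX per quarter: ${dgx_quarter_low:.0f}M to ${dgx_quarter_high:.0f}M")
print(f"DGX per year: ${dgx_year_low:.0f}M to ${dgx_year_high:.0f}M")
```

That lands in the low-to-mid hundreds of millions of dollars a year, which is consistent with what the company has confirmed.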

The one thing that is not growing is the cryptocurrency market, which was expected to drive $100 million in sales in fiscal Q2 but only managed to bring in $18 million. Nvidia says it will be basically zero looking ahead, and this is ironic given the fact that the just-announced “Turing” architecture, a derivative of the Volta architecture with real-time ray tracing engines built in and lots of oomph from modified Tensor Cores to do machine learning inference on the fly, was supposed to be something that would be appealing to the mining crowd. But that party seems to be over for Nvidia now, and without much explanation above and beyond some serious miners investing in ASICs and the hobby miners using the Pascal GPUs they have already bought. No matter. Mining was always just a few extra blobs of icing on the cake for both AMD and Nvidia. It was never supposed to be the cake.

In any event, once we get back from vacation – why do companies always try to do important things on our vacation? – we will be drilling down into the Turing architecture and its implications in the datacenter. Nvidia is set to give a deep dive on Turing at the Hot Chips symposium next week in Silicon Valley, and we will be there.

Here is the important thing about Nvidia: It has $7.94 billion in cash in the bank, it has widely respected parallel computing engines and now whole platforms, and it has perhaps the most sophisticated and widely adopted parallel computing software stack in history.

Moore’s Law is only about the number of transistors that can be placed in a given space. It has nothing to do with CPU “speed” or “performance”, company performance, or any other metric.