If there is anything the hyperscalers have taught us, it is the value of homogeneity and scale in an enterprise. Because their infrastructure was largely general purpose as well as the same, it was possible to buy, deploy, and manage in bulk, and most assuredly the buying is the cheapest part. Because of their purchasing power, they are able to build infrastructure at a much lower cost than anyone else on the planet, and they can sell it – along with their expertise and advanced software – at a tremendous profit to us as a service.

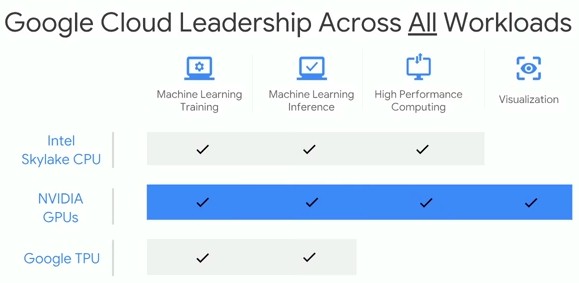

But there is such a thing as taking homogeneity too far, since one size of compute does not fit all workloads. And as a major cloud provider that wants enterprises to buy its raw infrastructure or deploy its machine learning services, Google can’t just rely on its own TensorFlow Processing Unit (TPU) accelerators to backend its machine learning services or the raw compute that it sells to companies on Google Cloud Platform.

This is why, despite the fact that Google is working on getting its TPU 3.0 machine learning coprocessors into the field behind services offered on Cloud Platform, the search engine giant that aspires to catch up and beat Amazon Web Services at its own game, has bought a slew of “Pascal” Tesla P4 GPU accelerators to make them available on its public cloud.

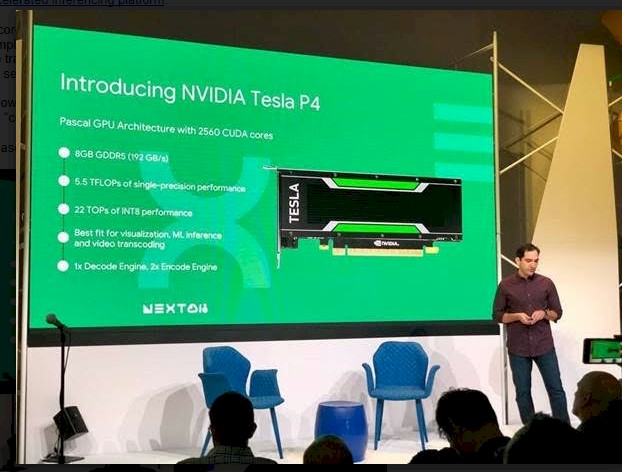

The Tesla P4s are based on the GP104 version of the Pascal family of GPUs and they include support for a low precision a four-way 8-bit integer dot product with a 32-but accumulate, which is a low precision math unit that delivers 21.8 teraops of performance in a power envelope of 75 watts. (Think of this as an early prototype of the Tensor Core units in the “Volta” GV100 GPUs that the current Tesla V100 accelerators use. The special math unit in the GP104 chip used a 2D array of four 8-bit integer chunks of data that accumulate to a 32-bit integer result, while the tensor core does a 3D array of four by four by four 16-bit floating point data that accumulate to a 32-bit floating point result.)

There is a 50 watt version of the Tesla P4 accelerator, too, which has some of the 2,560 CUDA cores turned off to push the power consumption down faster than the compute drops. The Tesla P4 accelerator has 8 GB of GDDR5 frame buffer memory and 192 GB/sec of memory bandwidth, which is on par with what a two-socket server based on Intel’s “Skylake” Xeon SP processors can deliver. With prices rising on the top-end Pascal P100 and Volta V100 Tesla accelerators, the Tesla P4s can still find their place. (It is not clear if there will ever be a Volta-based Tesla V4, but that would be interesting.)

The Tesla P4s were most enthusiastically adopted by social network Facebook, which used them for machine learning inference, but in a breakout session at Google Next 2018 conference this week, Ari Liberman, product manager for Google Compute Engine, the compute portion of Cloud Platform, said that instances backed by Tesla P4 instances would soon be available on Cloud Platform, allowing for companies to use them to chew on data. While the Tesla P4s were pitched by Nvidia as machine learning inference engines, they can also be used for virtual workstations, visualization, and video transcoding workloads and therefore are a good fit for a public cloud that wants to support a variety of workloads on its iron.

The word on the street is that Google plans to talk more fully about its plans for the Tesla P4s sometime later this summer.

Nvidia is, of course, happy to get a shout out from Google, which doesn’t tend to talk much about the specifics of its infrastructure except as it relates to its public cloud. “Our Tesla P4 GPUs offer 40X faster processing of the most complex AI models to enable real-time AI-based services that require extremely low latency, such as understanding speech and live video and handling live translations,” Paresh Kharya, head of product marketing for the Accelerated Computing division at Nvidia, said in a statement. “P4 GPUs deliver inference with just 1.8 milliseconds of latency at batch size 1, and consume just 75 watts of power to accelerate any scale-out server, offering best-in-class energy efficiency.”

We presume that the comparison above is for machine learning inference on a Tesla P4 versus a two socket Xeon server that is commonly deployed at hyperscalers for myriad workloads.

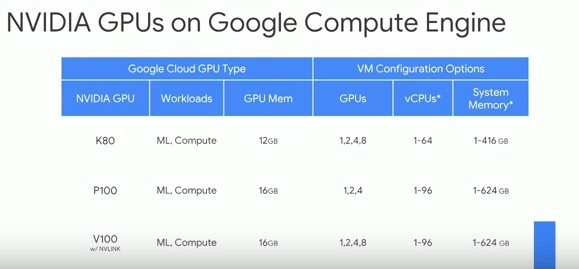

Google, like all of the major cloud providers, has already deployed the top-end Tesla V100 accelerators on Cloud Platform for companies to rent, and these are being pitched as compute engines for running HPC simulation and modeling workloads as well as machine learning training and machine learning inference. We did a monster comparison of all of the Tesla GPU accelerators back in February, and followed up with pricing comparisons on them all in March.

If you want to do just inference, and on the cheap, it is still not clear that the Tesla V100 is the better option compared to the Tesla P4. The HGX-1 hyperscale version of the Tesla V100, which we think runs at 918 MHz across its cores, delivers 50.2 teraops of INT8 performance for inference, but it only runs at 150 watts compared to the 250 watts of the PCI-Express version of the Tesla V100 and 300 watts for the SXM2 version with NVLink interconnect ports. If you do the math, that comes out to 334.9 gigaops per watt, but at an estimated cost of $7,000 per unit, that is $139 per teraops. The Tesla P4 can do 21.8 teraops on INT8, as we explained above, and that only works out to 290.7 gigaops per watt, so it is a little less efficient. But at $2,500 per Tesla P4, that works out to $115 per teraops. We suspect that Nvidia is cutting prices on the Tesla P4s a lot deeper than on the tesla V100s, which are in very high demand and in kinda short supply because of that.

If you companies want to use Tensor Core units for inferencing, which they can do if they modify their datasets and their applications, they could basically double their throughput and cut the cost per teraops by half. Which is why we think that in the long run, the INT8 units are going to be removed from future Tesla GPUs.

But for now, it all comes down to what applications companies have and what ones they will develop for the future. Google can easily slip a Tesla P4 or Tesla V100 or a TPU 3.0 coprocessor behind any one of its services, if it chooses to, which is one of the reasons why Google was always more enthusiastic about building a platform cloud at a higher level of abstraction (remember App Engine?) than a raw infrastructure cloud. But most companies still want to feel like they are doing their own IT and Google has to supply the piece parts so they can assemble their virtual systems on Cloud Platform.

In his presentation at Next 2018, Liberman said that Google wants to be able to give customers a place to run all of their workloads, and in many cases, they might not be using TensorFlow for their machine learning jobs, but some other framework that is not supported on the TPU.

So Google has to do something to attract these customers, and adding Pascal and Volta GPUs aimed at machine learning training or inference – or both – is important to give the company coverage. As the market matures, and frameworks settle on data formats and processing techniques that are reflected in the iron, we think the number of different processing engines on public clouds will shrink. But certainly not now, or any time in the near future.

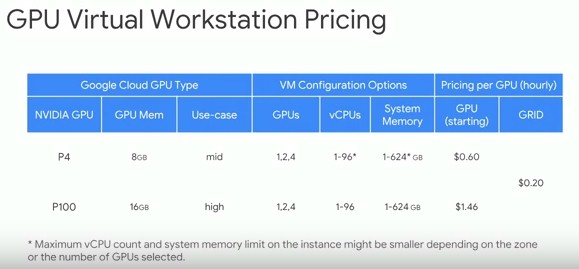

Liberman didn’t talk about using the Tesla P4 GPUs for machine learning very much, but rather focused on allowing them to be attached to any Compute Engine instance to create virtual workstations where the GPUs are used in passthrough mode to get very high performance. (The Tesla P100 is also being made available as a configurable GPU for Compute Engine instances.) It sounds like Google has banks of GPUs in enclosures that hook over a PCI-Express network to compute instances on its public cloud, and that means these same Tesla P4 and P100 instances can – and will – be used for machine learning inference and video encoding jobs, too. This is in addition to Compute Engine instances that have dedicated GPUs for HPC or machine learning. Here is what the entry pricing looks like for the Tesla P4 and P100 GPUs, and how the CPU and GPU compute can be spun up:

It will be interesting to see how Google prices its Tesla P100, V100, and P4 GPU accelerators in dedicated instances and on-the-fly workstation instances (created by pairing CPUs and Tesla P4 or P100 GPUs) against raw machine learning capacity on its home grown TPU 2.0 and 3.0 accelerators for TensorFlow. It is reasonable that Google will, in the long run, offer TPU capacity at a lower price to create a virtuous cycle to increase TensorFlow adoption, which it no doubt hopes will drive further TPU adoption, and around again and again and again. But in the meantime, the Nvidia GPU is still the engine of AI and HPC and it has a price and a service level agreement on Google Cloud Platform, while TPUs do not have either.

Be the first to comment