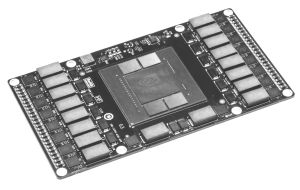

Deep Learning Drives Nvidia’s Tesla Business To New Highs

It is a coincidence, but one laden with meaning, that Nvidia is setting new highs selling graphics processors at the same time that SGI, one of the early innovators in the fields of graphics and supercomputing, is being acquired by Hewlett Packard Enterprise. …