In 2024, there is no shortage of interconnects if you need to stitch tens, hundreds, thousands, or even tens of thousands of accelerators together.

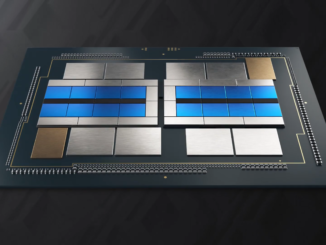

Nvidia has NVLink and InfiniBand. Google’s TPU pods talk to one another using optical circuit switches (OCS). AMD has its Infinity Fabric for die-to-die, chip-to-chip, and soon node-to-node traffic. And then, of course, there’s good old-fashioned Ethernet, which is what Intel is using for Gaudi2 and Gaudi3.

The trick here isn’t building a big enough mesh; rather, it’s fending off the massive performance penalties and bandwidth bottlenecks associated with going off package. None of these interconnects does anything to address the fact that HBM memory, on which all this AI processing depends, is tied in a fixed ratio to the compute.

“This industry is using Nvidia GPUs as the world’s most expensive memory controller,” said Dave Lazovsky, whose company, Celestial AI, just snagged another $175 million in a Series C funding round backed by USIT and a slew of other venture capital heavyweights to commercialize its Photonic Fabric.

Last summer, we looked at Celestial’s Photonic Fabric, which encompasses a portfolio of silicon photonics interconnects, interposers, and chiplets designed to disaggregate AI compute from memory. Just under a year later, the light wranglers say they are working with several hyperscale customers and one big processor manufacturer on integrating the tech into their products. To our disappointment, but certainly not our surprise, Lazovsky isn’t naming names.

But the fact that Celestial counts AMD Ventures as one of its backers, and that AMD senior vice president and product technology architect Sam Naffziger discussed the possibility of co-packaging silicon photonic chiplets the same day the announcement went out, certainly raises some eyebrows. Having said that, just because AMD is funding the photonics startup doesn’t mean that we’ll ever see Celestial’s chiplets in Epyc CPUs or Instinct GPU accelerators.

While Lazovsky couldn’t say with whom Celestial is partnered, he did offer some clues as to how the tech is getting integrated, as well as a sneak peek at an upcoming HBM memory appliance.

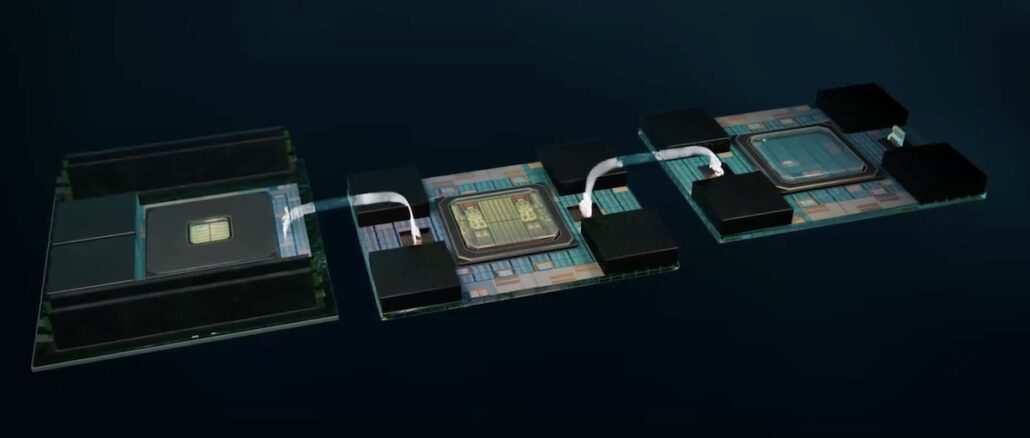

As we discussed in our initial foray into Celestial’s product strategy, the company’s parts fall into three broad categories: chiplets, interposers, and an optical spin on Intel’s EMIB or TSMC’s CoWoS called OMIB.

Unsurprisingly, most of Celestial’s traction has been on chiplets. “What we’re not doing is trying to force our customers into adopting any one specific product implementation. The lowest risk, fastest, least complex way to provide an interface into the Photonic Fabric right now is through chiplets,” Lazovsky tells The Next Platform.

Broadly speaking, these chiplets can be employed in two ways: either to add HBM memory capacity or as a chip-to-chip interconnect, sort of like an optical NVLink or Infinity Fabric.

These chiplets are a little smaller than an HBM stack and provide an opto-electrical interconnect good for 14.4 Tb/sec or 1.8 TB/sec of total bandwidth off chip.
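The two bandwidth figures above are the same number in different units, which is easy to lose track of later in the piece. A minimal sketch of the conversion, assuming decimal units and 8 bits per byte (the function name is ours, for illustration only):

```python
BITS_PER_BYTE = 8


def terabits_to_terabytes(terabits_per_sec):
    """Convert a rate in terabits/sec to terabytes/sec."""
    return terabits_per_sec / BITS_PER_BYTE


# Chiplet bandwidth as quoted in the article.
chiplet_terabits = 14.4
chiplet_terabytes = terabits_to_terabytes(chiplet_terabits)

print(f"{chiplet_terabits} Tb/sec is {chiplet_terabytes:.1f} TB/sec")
```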

With that said, we are told a chiplet could be made to support higher bandwidths. The gen-one tech can support about 1.8 Tb/sec for every square millimeter. Celestial’s second-gen Photonic Fabric, meanwhile, will go from 56 Gb/sec to 112 Gb/sec PAM4 SerDes and will double the number of lanes from four to eight, effectively quadrupling the bandwidth.
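The quadrupling follows directly from doubling both the lane rate and the lane count. The sketch below works that through and, as a rough aside, divides the 14.4 Tb/sec chiplet figure by the gen-one density to get an implied optical I/O area. The article does not say what unit the lanes are counted per, so "lane group" is our placeholder, and the area figure is only an inference from the two quoted numbers, not something Celestial has stated.

```python
def lane_group_gbps(lane_rate_gbps, lanes):
    """Aggregate rate of one group of SerDes lanes, in Gb/sec."""
    return lane_rate_gbps * lanes


# Figures quoted in the article.
gen1_gbps = lane_group_gbps(lane_rate_gbps=56, lanes=4)
gen2_gbps = lane_group_gbps(lane_rate_gbps=112, lanes=8)
scaling = gen2_gbps / gen1_gbps

# Inference only: area implied if the whole 14.4 Tb/sec were delivered
# at the quoted gen-one density of 1.8 Tb/sec per square millimeter.
GEN1_DENSITY_TBPS_PER_MM2 = 1.8
CHIPLET_TBPS = 14.4
implied_area_mm2 = CHIPLET_TBPS / GEN1_DENSITY_TBPS_PER_MM2

print(f"gen one: {gen1_gbps} Gb/sec, gen two: {gen2_gbps} Gb/sec")
print(f"scaling factor: {scaling:.0f}x")
print(f"implied gen-one I/O area: about {implied_area_mm2:.0f} mm^2")
```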

So, 14.4 Tb/sec isn’t a cap; it’s a consequence of what existing chip architectures are equipped to handle. This makes sense, as any additional capacity would otherwise be wasted.

This connectivity means that Celestial can achieve interconnect speeds akin to NVLink, just with fewer steps along the way.

While the chip-to-chip connectivity is relatively self-explanatory – drop a Photonic Fabric chiplet on each package and align the fiber attaches – memory expansion is another animal altogether. While 14.4 Tb/sec is far from slow, it’s still a bottleneck for more than a couple of HBM3 or HBM3e stacks. This means that adding more HBM will only net you capacity past a certain point. Still, two HBM3e stacks in the place of one isn’t nothing.
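To see why the link saturates after roughly two stacks, here is a back-of-the-envelope sketch. The per-stack numbers are our assumptions, not figures from Celestial: a 1,024-bit interface per stack, 6.4 Gb/sec per pin for HBM3, and 9.6 Gb/sec per pin as a representative HBM3e speed (vendors ship a range of speeds).

```python
LINK_TBYTES_PER_SEC = 1.8   # 14.4 Tb/sec chiplet, from the article
HBM_BUS_WIDTH_BITS = 1024   # assumed interface width per stack

# Assumed per-pin data rates in Gb/sec; HBM3e parts vary by vendor.
PIN_RATE_GBPS = {"HBM3": 6.4, "HBM3e": 9.6}


def stack_bandwidth_tbytes(pin_rate_gbps):
    """Peak bandwidth of one HBM stack in TB/sec."""
    return pin_rate_gbps * HBM_BUS_WIDTH_BITS / 8 / 1000


def usable_bandwidth_tbytes(stacks, pin_rate_gbps):
    """Bandwidth visible through the link: stack total, capped by the link."""
    return min(stacks * stack_bandwidth_tbytes(pin_rate_gbps), LINK_TBYTES_PER_SEC)


for name, rate in PIN_RATE_GBPS.items():
    for stacks in (1, 2, 3, 4):
        usable = usable_bandwidth_tbytes(stacks, rate)
        print(f"{name} x{stacks}: {usable:.2f} TB/sec usable")
```

Under these assumptions, a third stack of either type adds capacity but no usable bandwidth, which matches the point made above.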

Celestial has an interesting workaround for this with its memory expansion module. Since the bandwidth is going to be capped at 1.8 TB/sec anyway, the module will only contain two HBM stacks totaling 72 GB. This will be complemented by a set of four DDR5 DIMMs supporting up to 2 TB of additional capacity.

Lazovsky was hesitant to spill all the beans on the product, but did tell us it would use Celestial’s silicon photonics interposer tech as an interface between the HBM, interconnect, and controller logic.

Speaking of the module’s controller, we are told the 5 nanometer switch ASIC effectively turns the HBM into a write-through cache for the DDR5. “It gives you all of the benefits of capacity and cost of DDR and the bandwidth and the 32 pseudo channels of interconnectivity of HBM, which hides latency,” Lazovsky explained.
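For readers unfamiliar with the term, a write-through cache sends every write to the backing store as well as the cache, so evicting a cached line never loses data. The toy model below illustrates that policy only; it is not Celestial’s controller logic. The class name, the LRU eviction choice, and the line-granular addressing are all our assumptions.

```python
from collections import OrderedDict


class WriteThroughCache:
    """Toy write-through cache: a small fast tier in front of a large slow tier."""

    def __init__(self, capacity_lines):
        self.capacity_lines = capacity_lines
        self.fast = OrderedDict()  # stands in for HBM (small, fast)
        self.backing = {}          # stands in for DDR5 (large, slower)
        self.hits = 0
        self.misses = 0

    def _fill(self, addr, value):
        # Insert or refresh a line, evicting the least recently used if full.
        self.fast[addr] = value
        self.fast.move_to_end(addr)
        if len(self.fast) > self.capacity_lines:
            self.fast.popitem(last=False)

    def write(self, addr, value):
        # Write-through: the backing store is updated on every write.
        self.backing[addr] = value
        self._fill(addr, value)

    def read(self, addr):
        if addr in self.fast:
            self.hits += 1
            self.fast.move_to_end(addr)
            return self.fast[addr]
        # Miss: fetch from the slow tier and keep a copy in the fast tier.
        self.misses += 1
        value = self.backing[addr]
        self._fill(addr, value)
        return value


cache = WriteThroughCache(capacity_lines=2)
for addr, value in enumerate(["a", "b", "c"]):
    cache.write(addr, value)

first = cache.read(0)   # line 0 was evicted, so this is a miss served from backing
second = cache.read(0)  # now resident in the fast tier, so this is a hit
print(first, second, cache.hits, cache.misses)
```

Because the slow tier is always current, eviction is just a drop; the trade-off is that every write pays the slow tier’s cost.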

This isn’t that far off from what Intel did with Xeon Max or what Nvidia is able to do with its GH200 Superchips, he added. “It’s basically a supercharged Grace-Hopper without all the cost overhead and is far more efficient.”

How much more efficient? “Our memory transaction energy overhead is about 6.2 picojoules per bit versus about 62.5 going through NVLink, NVSwitch for a remote memory transaction,” Lazovsky claimed, adding that latency isn’t too bad either.
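To put the picojoule figures in more familiar terms, the sketch below computes the energy needed to move one gigabyte at each quoted rate. It takes Lazovsky’s claimed numbers at face value and assumes a decimal gigabyte; the function name is ours.

```python
PICOJOULE = 1e-12  # joules


def transfer_energy_joules(num_bytes, picojoules_per_bit):
    """Energy to move num_bytes at a given per-bit energy cost."""
    return num_bytes * 8 * picojoules_per_bit * PICOJOULE


ONE_GB = 1e9  # bytes, decimal

# Per-bit figures as claimed in the article.
photonic_joules = transfer_energy_joules(ONE_GB, 6.2)
nvlink_joules = transfer_energy_joules(ONE_GB, 62.5)
ratio = nvlink_joules / photonic_joules

print(f"Photonic Fabric: {photonic_joules:.4f} J per GB")
print(f"NVLink/NVSwitch: {nvlink_joules:.4f} J per GB")
print(f"ratio: about {ratio:.1f}x")
```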

“The total round trip latency for these remote memory transactions, including both trips through the Photonic Fabric and the memory read times, is 120 nanoseconds,” he added. “So it’s going to be a little bit more than the roughly 80 nanoseconds of local memory, but it’s faster than going to Grace and reading up a parameter and pulling it over to a Hopper.”
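One way to read the latency figure alongside the bandwidth figure is Little’s law: the data that must be in flight to keep a link busy equals bandwidth times latency. The sketch below applies it to the quoted numbers. The 64-byte transaction size is purely our assumption for illustration; the article does not give one.

```python
def bytes_in_flight(bandwidth_bytes_per_sec, latency_sec):
    """Little's law: data outstanding needed to keep the link full."""
    return bandwidth_bytes_per_sec * latency_sec


LINK_BYTES_PER_SEC = 1.8e12   # 1.8 TB/sec, from the article
REMOTE_LATENCY_SEC = 120e-9   # quoted remote round trip
LOCAL_LATENCY_SEC = 80e-9     # quoted local memory latency
TRANSACTION_BYTES = 64        # assumed transaction size, not from the article

remote_bytes = bytes_in_flight(LINK_BYTES_PER_SEC, REMOTE_LATENCY_SEC)
outstanding_requests = remote_bytes / TRANSACTION_BYTES
latency_ratio = REMOTE_LATENCY_SEC / LOCAL_LATENCY_SEC

print(f"bytes in flight to fill the link: {remote_bytes:,.0f}")
print(f"outstanding {TRANSACTION_BYTES}-byte requests: {outstanding_requests:,.0f}")
print(f"remote vs. local latency: {latency_ratio:.2f}x")
```

That is why concurrency, such as the many HBM pseudo channels Lazovsky mentions, matters for hiding latency: the link only stays full if enough requests are outstanding at once.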

From what we understand, sixteen of these memory modules can be meshed together into a memory switch, and multiple of these appliances can be interfaced using a fiber shuffle.
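A quick tally of what sixteen fully populated modules would add up to, using the per-module figures quoted earlier (two HBM stacks totaling 72 GB plus up to 2 TB of DDR5). This is the maximum under those figures, and it assumes 1 TB = 1,024 GB; actual appliance configurations have not been disclosed.

```python
GB_PER_TB = 1024  # assumption: binary terabytes

HBM_GB_PER_MODULE = 72               # from the article
DDR5_GB_PER_MODULE = 2 * GB_PER_TB   # "up to 2 TB", from the article
MODULES_PER_APPLIANCE = 16           # from the article

module_gb = HBM_GB_PER_MODULE + DDR5_GB_PER_MODULE
appliance_gb = module_gb * MODULES_PER_APPLIANCE
appliance_hbm_gb = HBM_GB_PER_MODULE * MODULES_PER_APPLIANCE
hbm_share = HBM_GB_PER_MODULE / module_gb

print(f"per module: {module_gb} GB")
print(f"per appliance: {appliance_gb} GB ({appliance_gb / GB_PER_TB:.1f} TB)")
print(f"of which HBM: {appliance_hbm_gb} GB ({hbm_share:.1%} of capacity)")
```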

The implication here is that, in addition to a compute, storage, and management network, chips built with Celestial’s interconnects will be able to interface not only with each other, but also with a common pool of memory.

“What that allows you to do is machine learning operations, such as broadcast and reduce, in a very, very efficient manner without the need for a switch,” Lazovsky said.

The challenge Celestial faces is timing. Lazovsky tells us that he expects to begin sampling Photonic Fabric chiplets to customers sometime in the second half of 2025. He then expects it will be at least another year before we see products using the design hit the market, with volume ramping in 2027.

However, Celestial isn’t exactly the only startup pursuing silicon photonics. Ayar Labs, another photonics startup with backing from Intel Capital, has already integrated its photonics interconnects into a prototype accelerator.

Then there’s Lightmatter, which picked up $155 million in a Series C funding round back in December, and is attempting to do something very similar to Celestial with its Passage interposer. At the time, Lightmatter chief executive officer Nick Harris claimed it had customers using Passage to “scale to 300,000 node supercomputers.” Of course, like Lazovsky, Harris wouldn’t tell us who its customers are either.

And there is also Eliyan, which is trying to get rid of interposers altogether with its NuLink PHYs – or enhance the performance and scale of the interposers if you must have them.

Regardless of who comes out on top in this race, it seems it’s only a matter of time before the shift to co-packaged optics and silicon photonic interposers kicks off in earnest.
