There is no shortage of silicon photonics technologies under development, and every few months it seems another startup crops up promising massive bandwidth over longer distances while using less power than copper interconnects.

Celestial AI is the latest contender to enter this space. It has popped back up after more than a year of radio silence with another $100 million of funding in hand and what it claims is a novel silicon photonics interconnect spanning chip-to-chip, package-to-package, and node-to-node connectivity.

To be clear, we have heard similar claims from other silicon photonics startups in the past, though most have been more conservative about the problem they're trying to address. Lightmatter, for instance, is looking to stitch together bandwidth-hungry chips, like switch ASICs, using its Passage optical interposer. Meanwhile, Ayar Labs is tackling off-package optics with its TeraPHY I/O chiplet.

Despite the similarities, Celestial has won over some heavyweight investors, including IAG Capital Partners, Koch Disruptive Technologies (KDT), and Temasek’s Xora Innovation fund. The company has also attracted the interest of Broadcom, which is helping develop prototypes based on Celestial’s designs. CEO Dave Lazovsky tells The Next Platform that those prototypes are about 18 months from shipping to customers.

Weaving The Photonic Fabric

When Celestial first appeared early last year, the company was focused on building an AI accelerator, called Orion, that would employ optical interconnect tech. Since then, the company’s focus has shifted toward licensing its Photonic Fabric to chipmakers, though Lazovsky says Celestial is still working on Orion.

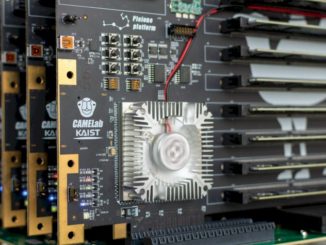

As for the underlying tech, Celestial’s Photonic Fabric combines silicon photonics with advanced CMOS designs developed in collaboration with Broadcom on Taiwan Semiconductor Manufacturing Co.’s 4 nanometer and 5 nanometer processes. Nor is this a “dumb” wire-forwarding interconnect, Lazovsky emphasized. “We actually have routers and switch functionality at either end of the fiber.”

The most advanced form of the interconnect involves stacking third-party ASICs or SoCs atop an optical interposer, or using the company’s optical multi-chip interconnect bridge (OMIB) packaging tech to shuttle data between the chips. To us, this sounds a lot like what Lightmatter has been doing with Passage, which we looked at a while back, but Lazovsky insists that Celestial’s tech is orders of magnitude more efficient and can easily cope with several hundred watts of heat. Whether this is actually the case, we’ll have to wait and see.

For the initial design, Celestial’s Photonic Fabric uses 56 Gb/sec SerDes. With four ports per node and four lanes per port, the company says it can achieve around 1.8 Tb/sec of bandwidth per mm². “If you want to interconnect to a quad pack – four HBM stacks in a module – we can comfortably match the full HBM3 bandwidth,” Lazovsky claims.
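As a rough sanity check on the HBM3 claim, the sketch below multiplies out the raw per-node lane bandwidth, converts the per-stack HBM3 figure cited later in this article (819 GB/sec) into Tb/sec for a four-stack module, and divides by the claimed 1.8 Tb/sec per mm² to estimate the interface area needed. How Celestial maps nodes and ports onto silicon area isn't public, so treat the area estimate as an assumption-laden back-of-envelope figure rather than a spec.

```python
# Back-of-envelope check of Celestial's first-gen Photonic Fabric figures.
# Inputs are the numbers quoted in the article; how they combine (and the
# area estimate in particular) is our assumption, not Celestial's method.

SERDES_GBPS = 56              # per-lane SerDes rate, Gb/sec
LANES_PER_PORT = 4
PORTS_PER_NODE = 4
DENSITY_TBPS_PER_MM2 = 1.8    # claimed bandwidth density

HBM3_STACK_GBYTES_PER_SEC = 819   # peak bandwidth of one HBM3 stack
STACKS = 4                         # a "quad pack" of HBM stacks


def node_bandwidth_gbps() -> int:
    """Raw signaling bandwidth of one node: ports x lanes x lane rate."""
    return PORTS_PER_NODE * LANES_PER_PORT * SERDES_GBPS


def quad_pack_tbps() -> float:
    """Aggregate bandwidth of four HBM3 stacks, converted from GB/s to Tb/s."""
    return STACKS * HBM3_STACK_GBYTES_PER_SEC * 8 / 1000


def area_needed_mm2() -> float:
    """Interface area that would carry the quad-pack bandwidth at the claimed density."""
    return quad_pack_tbps() / DENSITY_TBPS_PER_MM2


if __name__ == "__main__":
    print(f"per-node raw bandwidth: {node_bandwidth_gbps()} Gb/sec")        # 896
    print(f"HBM3 quad-pack bandwidth: {quad_pack_tbps():.1f} Tb/sec")       # 26.2
    print(f"area at 1.8 Tb/sec per mm2: {area_needed_mm2():.1f} mm2")       # 14.6
```

Under these assumptions, something on the order of 15 mm² of optical interface would be enough to carry a full four-stack HBM3 module's bandwidth.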

For its second-gen Photonic Fabric, Celestial is moving to 112 Gb/sec SerDes and doubling the number of lanes per port from four to eight, effectively quadrupling the bandwidth density to 7.2 Tb/sec per mm².
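The "quadrupling" follows from two independent doublings, one in lane rate and one in lane count. The short sketch below makes that arithmetic explicit; it assumes bandwidth density scales proportionally with both and that the area per port doesn't change, which Celestial hasn't confirmed.

```python
# Sketch: how doubling both lane rate and lanes per port scales bandwidth density.
# Figures are Celestial's claims as reported; proportional scaling (no change in
# area per port) is an assumption.

GEN1 = {"serdes_gbps": 56, "lanes_per_port": 4, "density_tbps_mm2": 1.8}
GEN2 = {"serdes_gbps": 112, "lanes_per_port": 8}


def scaling_factor(old: dict, new: dict) -> float:
    """Bandwidth multiplier from the change in lane rate times the change in lane count."""
    rate = new["serdes_gbps"] / old["serdes_gbps"]
    lanes = new["lanes_per_port"] / old["lanes_per_port"]
    return rate * lanes


factor = scaling_factor(GEN1, GEN2)
gen2_density = GEN1["density_tbps_mm2"] * factor
print(f"scaling factor: {factor:.0f}x")                     # prints "scaling factor: 4x"
print(f"gen-2 density: {gen2_density:.1f} Tb/sec per mm2")  # prints "gen-2 density: 7.2 Tb/sec per mm2"
```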

But, as you’d expect, the barrier to entry for this kind of thing is pretty high. Extracting the maximum bandwidth from Celestial’s Photonic Fabric means designing your chips with the company’s optical interposer or OMIB in mind. According to Lazovsky, this essentially entails replacing your existing PHYs with Celestial’s own. That said, the interconnect doesn’t rely on proprietary protocols (though it can work with them); instead, it is designed with Compute Express Link (CXL), Universal Chiplet Interconnect Express (UCIe), PCIe, and JEDEC HBM in mind.

The Photonic Fabric can also be deployed as a chiplet, which by Lazovsky’s own admission “looks very similar to TeraPHY from Ayar Labs,” or as a PCI-Express add-in card. The add-in card is arguably the most pragmatic option, as it doesn’t require chipmakers to rearchitect their chips around Celestial’s interposer or rely on the still-nascent UCIe protocol for chiplet-to-chiplet communication.

The downside of PCI-Express is that it is an awfully large bottleneck. While Celestial’s optics are capable of massive bandwidth, an x16 PCI-Express 5.0 interface maxes out at around 64 GB/sec in each direction. If we had to guess, this option exists mainly as a proof of concept to get customers familiar with the tech.
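Where the "around 64 GB/sec" figure comes from can be shown in a few lines. The sketch below starts from PCIe 5.0's 32 GT/s per-lane rate and 128b/130b line encoding; it deliberately ignores packet and protocol overheads, so real-world throughput would be somewhat lower than either number it prints.

```python
# Sketch: one-direction bandwidth of a PCIe 5.0 x16 link.
# PCIe 5.0 signals at 32 GT/s per lane with 128b/130b encoding. Packet and
# protocol overheads (TLP headers, flow control) are ignored here.

GT_PER_SEC = 32          # PCIe 5.0 per-lane transfer rate
LANES = 16
ENCODING = 128 / 130     # 128b/130b line-code efficiency


def pcie_gbytes_per_sec(gt_per_sec: float, lanes: int, encoding: float) -> float:
    """One-direction bandwidth in GB/s after line-code overhead."""
    usable_gbits = gt_per_sec * lanes * encoding
    return usable_gbits / 8          # bits -> bytes


raw = GT_PER_SEC * LANES / 8
effective = pcie_gbytes_per_sec(GT_PER_SEC, LANES, ENCODING)
print(f"raw: {raw:.0f} GB/sec, after encoding: {effective:.1f} GB/sec")
# prints "raw: 64 GB/sec, after encoding: 63.0 GB/sec"
```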

The chiplet architecture, Lazovsky claims, is capable of far higher bandwidth, but is still bottlenecked by the UCIe interface at around 14.4 Tb/sec. We will note that UCIe has a long way to go before it is ready for prime time, but it sounds like the chiplet can also be made to work with chipmakers’ proprietary fabrics.
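To put the two non-interposer options side by side, the sketch below converts the claimed 14.4 Tb/sec UCIe-bound chiplet figure into GB/sec and compares it with the roughly 64 GB/sec a PCIe 5.0 x16 link offers per direction. The article doesn't say whether 14.4 Tb/sec is per direction or aggregate, so the ratio is an order-of-magnitude comparison, not a like-for-like benchmark.

```python
# Sketch comparing the chiplet and add-in-card deployment options using the
# figures quoted in the article (claims, not measurements). Whether the
# 14.4 Tb/sec figure is per direction is not specified, so the ratio is rough.

UCIE_CHIPLET_TBPS = 14.4     # claimed UCIe-bound chiplet bandwidth
PCIE5_X16_GBYTES = 64        # approx. PCIe 5.0 x16 bandwidth, per direction


def tbps_to_gbytes(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second."""
    return tbps * 1000 / 8


chiplet = tbps_to_gbytes(UCIE_CHIPLET_TBPS)
ratio = chiplet / PCIE5_X16_GBYTES
print(f"chiplet: {chiplet:.0f} GB/sec, ~{ratio:.0f}x PCIe 5.0 x16")
# prints "chiplet: 1800 GB/sec, ~28x PCIe 5.0 x16"
```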

Of course, the challenge facing these kinds of optical interconnects hasn’t changed. Unless you have a pressing need for bandwidth well beyond what’s possible using copper, there are plenty of existing, well-tested technologies for physically stitching together chiplets, TSMC’s CoWoS packaging tech being just one example.

Over longer distances, even between packages, however, optics start to make more sense, especially in bandwidth-sensitive HPC and AI/ML workloads. This is one of the first practical use cases Celestial sees for its Photonic Fabric.

Because the interconnect supports Compute Express Link (CXL), Lazovsky says it could be used to pool HBM3 memory.

The concept is similar to CXL memory pooling, which we’ve discussed at length in the past: multiple hosts connect to a memory appliance the same way they might connect to a shared storage server. The catch is that HBM’s prodigious bandwidth, upwards of 819 GB/sec per HBM3 stack, is why it normally has to sit within a few millimeters of the die.
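The pooling idea itself is simple to model. The toy sketch below has several hosts draw capacity from one shared appliance and hand it back when a job finishes, rather than each owning a fixed slice. It says nothing about how CXL or Celestial's fabric actually implement this; `MemoryPool`, `allocate`, and `release` are hypothetical names chosen for illustration.

```python
# A toy model of memory pooling: hosts draw capacity from one shared appliance
# instead of each owning a fixed amount. Purely illustrative; it does not model
# CXL itself, and the class and method names are hypothetical.

from dataclasses import dataclass, field


@dataclass
class MemoryPool:
    capacity_gb: int
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        """Capacity not currently granted to any host."""
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, gb: int) -> bool:
        """Grant `gb` to `host` if enough capacity remains; return whether it succeeded."""
        if gb <= 0 or gb > self.free_gb:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + gb
        return True

    def release(self, host: str) -> int:
        """Return all of a host's memory to the pool; report how much was freed."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gb=512)
pool.allocate("accel-0", 300)   # a memory-hungry job
pool.allocate("accel-1", 100)
print(pool.free_gb)             # 112
pool.release("accel-0")         # job finishes, capacity goes back to the pool
print(pool.free_gb)             # 412
```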

For those training large language models, this can be a bit of a pain because of the fixed ratio of memory to compute found on accelerators like Nvidia’s H100 or AMD’s MI250X. Getting the right amount of one, say memory, could mean paying for more of the other than you actually need.
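The overprovisioning problem can be made concrete with a small calculation. In the sketch below, a hypothetical job needs far more memory than compute; because memory only comes bundled with accelerators, hitting the capacity target forces the purchase of devices whose compute sits idle. Every number here is a placeholder for illustration, not a vendor spec or a Celestial figure.

```python
# Sketch: buying accelerators with a fixed memory-to-compute ratio to reach a
# memory target. All numbers are hypothetical placeholders, not vendor specs.

import math


def devices_for_memory(target_gb: float, gb_per_device: float) -> int:
    """Devices needed when memory only comes bundled with compute."""
    return math.ceil(target_gb / gb_per_device)


def idle_compute_fraction(devices_bought: int, devices_needed_for_compute: int) -> float:
    """Share of purchased devices whose compute the workload doesn't need."""
    surplus = max(devices_bought - devices_needed_for_compute, 0)
    return surplus / devices_bought


# Hypothetical job: needs 2,000 GB of memory but only 8 devices' worth of compute.
n_mem = devices_for_memory(target_gb=2000, gb_per_device=80)
idle = idle_compute_fraction(n_mem, devices_needed_for_compute=8)
print(n_mem)            # 25
print(f"{idle:.0%}")    # 68%
```

With pooled memory, the same job could in principle pair 8 devices with a shared capacity pool instead, which is the kind of decoupling Celestial is pitching.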

Celestial claims that its Photonic Fabric, when implemented correctly, can achieve sufficient bandwidth to not only support HBM3 at a distance, but eventually pool that memory between multiple accelerators.

“By building optically interconnected HBM modules, it changes the game,” Lazovsky said. “It’s potentially 20 percent the cost for the same memory capacity by taking away the need to scale your compute along with memory.”

So maybe this is the killer app that will not only make optical interconnects ubiquitous but bring composable infrastructure to the mainstream.
