Note: This story augments and corrects information that originally appeared in Half Eos’d: Even Nvidia Can’t Get Enough H100s For Its Supercomputers, which was published on February 15.

So, as it turns out, there are two supercomputers named Eos, and even the people in the press relations department at Nvidia, who were offering some details on an Eos machine apropos of nothing ahead of the GTC 2024 conference that happens in a week and a half but a month away when this was written, did not know this.

Based on the statements we found on the Nvidia site as well as press releases from the past, it sure looked like Eos was one size for running the MLPerf AI benchmarks (10,752 H100s) and was then downgraded to a smaller size (4,608 H100s) to run the High Performance LINPACK benchmark that is used to rank supercomputers. We didn’t call them to verify this because we thought we had it right – why would we think there were two Eos machines? – and because of that we didn’t give the Nvidia PR team a chance to figure out what the hell was going on, either. None of us meant to introduce and extend errors, and all of us want to get the right story out there.

We inferred based on erroneous data, and as we all know, that can cause a hallucination. . . .

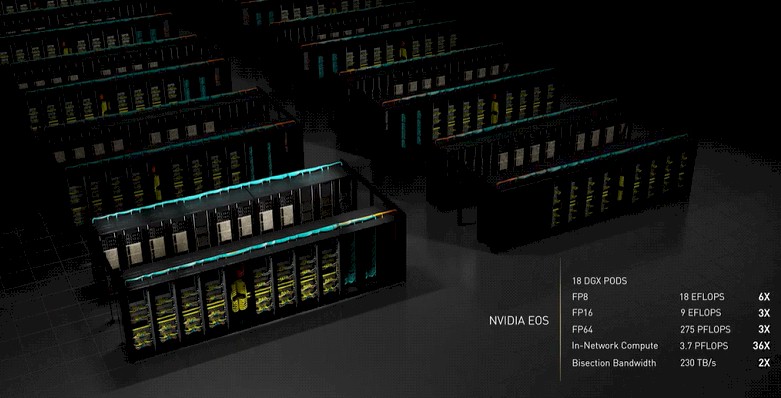

The real Eos, the one that Nvidia was talking about back in March 2022 when it announced the “Hopper” H100 GPU accelerator, has the feeds and speeds that we expected when Nvidia put out this chart back then:

What that real Eos machine does not do is use the NVSwitch memory fabric to interconnect 256 H100 GPUs into a shared memory SuperPOD, which is technically known as the DGX H100 256 SuperPOD but that name was not divulged at the time. That NVSwitch memory fabric was, as far as we were concerned, one of the killer new technologies to come out of Nvidia two years ago alongside the H100 GPU, and we still think that it is odd that this memory fabric – essentially a NUMA fabric across those GPUs so they literally act like a single GPU as far as software is concerned. If the original Eos machine is Half EOS’d in any way, this is still it. Perhaps there are software efficiency or cost issues with this extended NVSwitch fabric that we are unaware of. We think it is noteworthy, as we have said, that no hyperscaler or cloud builder has used this extended NVSwitch fabric to date, although Amazon Web Services is building the “Ceiba” AI supercomputer for Nvidia (and presumably replicating it for itself) using NVSwitch to extend the GPU shared memory across 32 Grace-Hopper hybrid CPU-GPU complexes that Nvidia calls superchips.

To be specific, as the people in Nvidia PR explained to us even before they realized there were two machines: “Eos compute connectivity uses 448 Nvidia Quantum InfiniBand switches in a 3-tier configuration of fully connected/non-blocking fabric that is rail-optimized, allowing for minimum hop distances. This results in the lowest latency for deep learning workloads. NVSwitch is used within the DHX H100, but not for internode communication.”

The second machine bearing the Eos name, as we have all come to find out thanks to our error, is called Eos-DFW, and it is installed at a CoreWeave datacenter in the Dallas-Fort Worth area. This is in and of itself interesting because as far as we know CoreWeave has three datacenter regions, which are named after the major airports nearest to them: US East LGA1 is in Weehawken, New Jersey; US Central ORD1 is in Chicago, Illinois; and US West LAS1 is in Las Vegas, Nevada. Looks like Nvidia got in one the ground floor of a forthcoming CoreWeave datacenter, which makes sense when you realize that Nvidia is an investor in this GPU cloud provider.

But Nvidia is by no means CoreWeave’s biggest provider of cash. In December, Fidelity Management & Research Company, along with additional participation from Investment Management Corporation of Ontario, Jane Street, JPMorgan Asset Management, Nat Friedman and Daniel Gross, Goanna Capital, Zoom Ventures, and others kicked in $642 million to get a minority investment. Nvidia participated in CoreWeave’s $221 million Series B funding in April 2023, and in August last year, Magnetar Capital and Blackstone Group led a $2.3 billion debt financing round with the help of Coatue, DigitalBridge Credit, BlackRock, PIMCO, and The Carlyle Group.

All told, CoreWeave has raised $3.5 billion across eight rounds of funding and debt, which is what it takes to build a GPU cloud. Somewhere around half of the cost of an AI server cluster is for GPUs, and another 25 percent or more is going for InfiniBand networking these days, so CoreWeave is a pretty good customer for Nvidia. So it is no surprise that Nvidia wants to be a good customer for CoreWeave, too. It is all enlightened self-interest.

This Eos-DFW machine is the one that has the 10,752 H100s and is the machine that was used to run the MLPerf tests. It is also a clone of the “Eagle” system that Microsoft used to run its LINPACK benchmarks for the November 2023 edition of the Top500 supercomputer rankings, which as 14,400 H100s and which gave the Eagle system the number three position on the list. Nvidia put the Eos-DFW machine to work doing AI training and inference runs immediately after running the MLPerf tests and did not see the point of running LINPACK on it when it had already done so for the original Eos system.

We get it. But we will point out that by doing that, Nvidia skews the Top500 rankings. The Eos-DFW machine would have a clear fourth rank on the list, and the “real” Top500 list doesn’t reflect this. (And in fact, these two machines, Eagle and Eos-DFW, would be ranked fifth and sixth because there are two machines in China – “Tianhe-3” and “OceanLight” – ahead of the “Frontier” system at Oak Ridge National Laboratory and the “Aurora” system at Argonne National Laboratory.) But inasmuch as Eos-DFW is probably used mostly for AI workloads, perhaps it doesn’t.

Which just goes to show how hard it is to rank so-called “supercomputers” and also how arbitrary it is to rank the Top500 the way that it is currently done. That ranking is not the top five hundred machines we know about, but the top five hundred that ran the test and bothered to submit results. This is how the middle of the list is dominated by hyperscalers, cloud builders, and service providers who submit results for machines that do not do either traditional HPC simulation or modeling or AI training as their day jobs.

We need a new list, but that is not what this story is about.

By the way, we forgot to ask if there were three Nvidia Eos machines. . . . Or four. Or five. Or more. Now, we have to worry about the non-obvious things we didn’t think to ask. <Smile>

A very welcome update on this most puzzling mystery of the missing EOS of DFW (worthy of a Banacek scenario, involving railroad switches, and cranes!). Running Linpack on that Eagle-twin could have boosted Nvidia’s “Vendor Performance Share” quite a bit on the latest Top500, giving it 900 PF/s of total aggregate oomph (Rmax), making it second only to HPE at 2.5 EF/s that includes the AMD-powered Frontier’s 1.2 EF/s ( https://top500.org/statistics/list/ ).

Then again, it was probably wise of Nvidia, strategically, to focus on MLPerf instead, as that’s the field (AI) for which the bulk of sales of these (Ewing-Barnes Dallas Family-feud) systems are expected to occur. The more HPC-targeted swiss-cheese Alps, and Venado rocket-sleigh, will surely undergo the whole suite of serious HPL, HPCG, HPL-MxP, Green500, and Graph500 tests (I think).