The top hyperscalers and clouds are rich enough to build out infrastructure on a global scale and create just about any kind of platform they feel like. They are just that rich, and by using their services at massive scale, all of us collectively pay for the many degrees of freedom that the hyperscalers and biggest clouds enjoy and that most IT organizations do not – and cannot ever – have.

To be specific, when we say rich, we are talking about Amazon Web Services, Microsoft Azure, Google Cloud, Alibaba Cloud, and Tencent Cloud, who cleverly have us footing the bill for their research, development, and production IT budgets.

The clouds run by IBM and Oracle come immediately to mind as a second tier. We think of DigitalOcean, OVHcloud, Linode, and a slew of cloud and hosting services run by the big telco carriers who are also in the cloud game in some fashion as a third tier.

The further down in the cloud tiers, the smaller the R&D budget for unique things.

And so all of these other smaller clouds, which are still large by any measure compared to any individual Global 2000 company that is not a hyperscaler or cloud builder, have to play to their niches. They cannot have a “not invented here” attitude. And they are certainly not rich enough to take on Nvidia’s HPC and AI stacks, which have been in development for a decade and a half and which now include a pair of complete software stacks that accelerate an aggregate of over 3,000 applications.

And that, in a nutshell, is why Oracle is making a big deal about a broad and deep partnership with Nvidia at its CloudWorld 2022 event today. Frankly, the second-tier, niche clouds like Oracle and IBM have no choice but to embrace whatever AI stack is most popular, and they can decide to do or not do HPC as they see fit. But they cannot ignore the opportunity to add AI to the applications and middleware that they host, and they know, like everyone else, that Nvidia by far has the most developed and diverse AI stack. Thus, a partnership between Nvidia and Oracle is the highlight of the CloudWorld event.

An aside: As the dot-com boom was busting in 2001 and Oracle was trying to stir up excitement about its wares, the company launched the Oracle World event. In the wake of its acquisition of Sun Microsystems in 2009 and after the merger a few years later with Sun’s JavaOne event, this became Oracle OpenWorld. Precisely what Oracle meant by “open” in its event name is not clear, but the World According to Larry Ellison certainly has no ambiguities in it. (Ellison is nothing if he is not amusing.) And starting this year, as the company is again shifting its attention, we have CloudWorld.

This latter name change is meant to signify that Oracle is not just fooling around in the cloud anymore.

Oracle had 430,000 customers using its operating systems, middleware, databases, and application suites worldwide in 2018 (the latest statistics we could find) and had over 25,000 downstream partners implementing and certifying its wares. It is the second-largest software company in the world behind Microsoft, and it is building a cloud platform for Oracle customers who don’t want to deal with managing a datacenter anymore but who are not keen on trying to lift and shift their Oracle stacks to a cloud like AWS, Microsoft Azure, or Google Cloud.

Given the importance of the cloud and AI augmentation of applications, we wonder why Oracle has not yet taken a big stake in Nvidia, and perhaps it has and we just don’t know it.

Back in 2010, InfiniBand was such an important part of its Exadata database clustering platform that Oracle took a 10.2 percent stake in Mellanox Technologies, which had a market capitalization of a mere $718 million at the time. Call it a $73 million investment to ensure Oracle had access to InfiniBand chip designs and had guaranteed supply of InfiniBand adapter and switch chips that were integral to the Exadata systems. As Mellanox expanded its business and the stock went up and up, Oracle bled down its holding to a 7.4 percent stake sometime around 2016 (we are not sure when), which was around the time Oracle was engineering its own InfiniBand interconnects. Oracle’s stake in Mellanox dropped to 4.3 percent in 2018, and that stake was still worth $143 million at that time against a market capitalization of close to $3.4 billion for Mellanox. (So, yes, Larry Ellison made money on a supply chain and IP licensing deal. Quelle surprise. . . . ) It is unclear if Oracle still held shares when Nvidia agreed in March 2019 to pay $6.9 billion for Mellanox, a deal that closed in April 2020. If so, that Mellanox investment has certainly paid off for Oracle.
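For those who want to check the math on the Mellanox stake, here is a quick back-of-the-envelope sketch in Python. It uses only the percentages and dollar figures quoted above and assumes, as the text implies, that the value of a stake is simply its percentage times the market capitalization, with no premiums, dilution, or timing differences accounted for.

```python
# Back-of-the-envelope check on Oracle's Mellanox stake, using only the
# figures quoted in the article. Dollar amounts are in millions.
# Assumption: stake value = stake percentage x market capitalization.
stake_2010_pct = 10.2
mellanox_cap_2010 = 718.0

stake_2018_pct = 4.3
stake_2018_value = 143.0

# Cost implied by a 10.2 percent slice of a $718 million company.
implied_investment = mellanox_cap_2010 * stake_2010_pct / 100

# Market capitalization implied by a 4.3 percent stake worth $143 million.
implied_cap_2018 = stake_2018_value / (stake_2018_pct / 100)

print(f"2010 stake cost: ~${implied_investment:.0f} million")
print(f"2018 implied market cap: ~${implied_cap_2018 / 1000:.2f} billion")
```

The 2010 stake works out to roughly $73 million, and the 2018 figures imply a Mellanox market capitalization of about $3.3 billion, in the same ballpark as the “close to $3.4 billion” cited.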

Similarly, Oracle has invested over $400 million in Arm server CPU upstart Ampere Computing because its technology is key to the success of Oracle Cloud Infrastructure, or OCI, which is what Oracle calls its cloud.

Whether or not Oracle is taking a stake in Nvidia to hedge against its costs of licensing or buying Nvidia technology, it is certainly investing in Nvidia technology. At the CloudWorld event, Nvidia co-founder and chief executive officer Jensen Huang and Oracle chief executive officer Safra Catz outlined the plan, and it is fairly simple: Oracle will take it all.

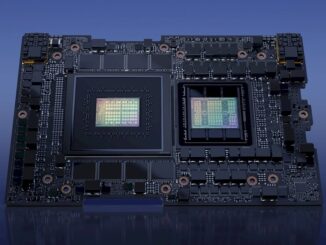

Oracle is adding tens of thousands of Nvidia “Ampere” A100 and “Hopper” H100 GPU accelerators to its infrastructure and is also licensing the complete Nvidia AI Enterprise stack so its database and application customers – and there are a lot of them as you see above – can seamlessly access AI training and inference if they move their applications to OCI. At the moment OCI GPU clusters still top out at 512 GPUs, according to Leo Leung, who is vice president of products and strategy for OCI and who has very long experience in cloud stuff with Oracle, Oxygen Cloud, Scality, and EMC.

To us, it looks like Oracle is adding capacity, meaning more GPU clusters, not scaling out its GPU clusters to have thousands or tens of thousands of GPUs in a single instance for running absurdly large models with hundreds of billions of parameters. Leung says that typical OCI customers are still only wrangling tens of billions of parameters, so the scale OCI is offering is probably sufficient.

Leung was mum about when – or if – the NeMo LLM large language model service that Nvidia just announced at the fall GTC 2022 conference last month might be integrated into OCI, but we reckon that Oracle would rather not have a service running on AWS or Google Cloud or Microsoft Azure (where presumably these cloud LLMs run) linked to services running on OCI. And that means Oracle will eventually have to have enough GPU cluster scale to run the NeMo Megatron 530B model internally. That right there is 10,000 or more GPUs. So Oracle saying it is adding “tens of thousands” more GPUs is, well, a good start.

The two companies also announced that Oracle’s Data Flow implementation of Apache Spark running on OCI would be accelerated by GPUs using Nvidia’s RAPIDS environment, and that this combination of software is in early access. And finally, Oracle is also adopting Nvidia’s Clara framework for AI and HPC applications in the healthcare industry, which is used for medical imaging, genomics, natural language processing, and drug discovery. Oracle picked up an electronic health record (EHR) system when it completed its $28.3 billion acquisition of Cerner in June, and this will presumably be integrated with Clara in some fashion.

Oracle has built 40 cloud regions around the globe, which are located in 22 countries; it has redundant regions in 10 countries, and has 11 of its regions interlinked with Microsoft Azure regions located nearby. The company has nine more planned regions in the works. There are two government regions for the US government plus three more dedicated specifically and solely for the US Department of Defense, and another two regions for the UK government. There are five regions in North America with one more announced, 15 in EMEA with five more announced, four in Latin America with three announced, and nine in Asia/Pacific with no additional ones announced.
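The regional breakdown above only reconciles with the 40-region total if the government regions are counted separately from the geographic ones. Here is a small Python tally that makes that assumption explicit; the grouping into “commercial” and “government” buckets is our own reading of the numbers, not a categorization Oracle provided.

```python
# Tally of OCI regions using the counts quoted in the article.
# Assumption: the geographic counts exclude the dedicated government regions.
commercial = {"North America": 5, "EMEA": 15, "Latin America": 4, "Asia/Pacific": 9}
government = {"US government": 2, "US DoD": 3, "UK government": 2}
announced = {"North America": 1, "EMEA": 5, "Latin America": 3, "Asia/Pacific": 0}

commercial_total = sum(commercial.values())
government_total = sum(government.values())
live_total = commercial_total + government_total
planned_total = sum(announced.values())

print(f"Commercial regions: {commercial_total}")
print(f"Government regions: {government_total}")
print(f"Live total: {live_total}, planned: {planned_total}")
```

Under that assumption, 33 commercial regions plus seven government regions gives the 40 Oracle cites, and the announced regions sum to the nine in the works.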

Separately, Oracle has also announced a white label version of the OCI cloud, called Oracle Alloy, which allows its downstream hardware and software partners to put Oracle iron in their own datacenters and run it on behalf of customers. This is an interesting twist on the AWS Outposts idea, which puts AWS infrastructure in your datacenter while AWS still runs it. The Accentures and Atoses of the world are going to love this option because it gives them the complete system integration control that they crave, and their customers will love it because they long ago stopped caring about integrating IT systems or about what those systems cost to build and maintain.

One last thing: On the call with Nvidia and Oracle, we asked if the companies were working on ways to bring GPU acceleration to the core Oracle relational database that underpins the company and the applications that ride on top of that database.

“I would say that there’s some work happening there – nothing that we can announce currently,” Leung tells The Next Platform. “But I think overall, there’s some opportunity in terms of the overall data space and what we can do there. So no announcements currently, but there is work happening.”
