Server makers Dell, Hewlett Packard Enterprise, and Lenovo, which are the three largest original manufacturers of systems in the world, ranked in that order, are adding to the spectrum of interconnects they offer to their enterprise customers. And when we say that, we really do mean spectrum, and specifically we mean the combination of Spectrum-4 Ethernet switches and BlueField-3 DPUs that Nvidia calls Spectrum-X.

Nvidia has been minting coin selling InfiniBand switching to those building monstrous AI training clusters as well as a fair number of clusters that run HPC simulation and modeling workloads, pushing its networking business above the $10 billion level for the first time in the history of both its Mellanox Technologies acquisition and its Nvidia parent. In the latest quarter, when Nvidia’s networking business broke through that $10 billion annualized revenue run rate, we think $2.14 billion of the quarter’s networking revenue was for InfiniBand and $435 million was for Ethernet/Other. (Other is presumably NVSwitch.) That is a very top heavy ratio, with InfiniBand outselling Ethernet 5:1 and growing at 5X year on year while Ethernet declined by 25.2 percent in Q3 fiscal 2024. (It is probably best to gauge this stuff on an annual basis, and we have incomplete data, but our model will flesh out as the quarters roll along.)

Compare this to the broader datacenter switching market. If InfiniBand switching is driving half of InfiniBand revenues (adapter cards and cables are the other half), then the switch portion is somewhere south of $4 billion a year. For the trailing twelve months through June 2023, Ethernet datacenter switching drove $18.1 billion in sales, according to data from IDC. That is almost a perfectly inverted ratio of 1:5 InfiniBand versus Ethernet.
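The two ratios are easy to check with back-of-the-envelope arithmetic. The sketch below uses only the figures cited in the text; the variable names are ours, and it takes the roughly $4 billion upper bound for InfiniBand switching at face value rather than deriving it.

```python
# Estimated quarterly Nvidia networking revenue cited above (billions of US dollars).
infiniband_quarter = 2.14
ethernet_other_quarter = 0.435

# InfiniBand versus Ethernet/Other inside Nvidia's networking business.
nvidia_ratio = infiniband_quarter / ethernet_other_quarter

# Assumption from the text: InfiniBand switching is at most about $4 billion a year.
infiniband_switch_annual = 4.0
# IDC figure for Ethernet datacenter switching, trailing twelve months.
ethernet_switch_annual = 18.1

# Ethernet versus InfiniBand switching in the broader datacenter market.
market_ratio = ethernet_switch_annual / infiniband_switch_annual

print(f"Nvidia networking, InfiniBand : Ethernet/Other = {nvidia_ratio:.1f} : 1")
print(f"Datacenter switching, Ethernet : InfiniBand = {market_ratio:.1f} : 1")
```

Both ratios come out a little under 5, pointing in opposite directions, which is the inversion described above.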

Admittedly, AI clusters are a kind of HPC, and a particularly intense one at that, but another way of thinking about it is that generative AI training and inference are the first HPC workload that can be universally applied to all organizations. So maybe the networking market share slices between InfiniBand and Ethernet should look more like the “generic” switching used in the datacenter than the distribution of interconnects among HPC systems. Even back in the old days of Mellanox before Nvidia bought it, InfiniBand only drove half of its revenues.

Time will tell and budgets will decide, but Mellanox was positioning itself for both the InfiniBand and Ethernet markets in HPC, hyperscale, and cloud computing long before Nvidia came along with $6.9 billion to buy Mellanox in March 2019. To be fair, since being acquired by Nvidia, Mellanox has picked up the SerDes circuits from the Nvidia team for both Ethernet and InfiniBand devices and has made sure that some of the goodies from InfiniBand that make it suitable for HPC and AI workloads have made it into the current Spectrum-4 Ethernet stack.

And that was always the intent, even before Nvidia had to gussy up its Ethernet portfolio with the Spectrum-X name. That rebranding came as the Ultra Ethernet Consortium, backed by switch ASIC makers Broadcom, HPE, and Cisco Systems and hyperscalers Microsoft and Meta Platforms, emerged to challenge the hegemony of InfiniBand in AI training, and as Broadcom with Jericho3-AI, Cisco Systems with G200, and HPE with Rosetta (used in Slingshot switches) all started gunning with AI-specific switch ASICs for InfiniBand, which is largely controlled by Nvidia. The Spectrum-X marketing name was a stunt, but the Spectrum-X technology is not, and it is deliberately designed to shoot the gap between standard datacenter Ethernet and InfiniBand.

“Spectrum-4 is different from and behaves differently than Spectrum-1, Spectrum-2, and Spectrum-3,” Gilad Shainer, senior vice president of network marketing at Nvidia, tells The Next Platform. “When you build a network for AI, which is a network for distributed computing, then you need to look on the network as an end to end thing. Because there are things that you need to do on the NIC side and there are things that you need to do on the switch side because the network needs the lowest latency possible and the lowest jitter possible to keep down tail latencies. A traditional Ethernet datacenter network needs to have jitter, it needs to drop packets to handle congestion, but an AI network based on Ethernet cannot do this.”

Nvidia contends that the Spectrum-X portfolio can deliver somewhere around 1.6X the performance of traditional datacenter Ethernet running distributed AI workloads, and has said that InfiniBand can deliver another 20 percent performance boost beyond this.

The Spectrum-4 switches come in two flavors. The SN5600 has 64 ports running at 800 Gb/sec, 128 ports running at 400 Gb/sec, or 256 ports running at 200 Gb/sec; those 256 ports can also run at legacy 100 Gb/sec or 50 Gb/sec speeds if need be. There is a single 1 Gb/sec management port. The Spectrum-4 ASIC is rated at 51.2 Tb/sec of aggregate switching capacity and can process 33.3 billion packets per second. The SN5400 is based on a Spectrum-4 ASIC running at half the aggregate bandwidth (25.6 Tb/sec); it has no support for 800 Gb/sec ports and drives only 64 ports at 400 Gb/sec, 128 ports at 200 Gb/sec, or 256 ports at 100 Gb/sec and lower legacy speeds. The SN5400 has two 1 Gb/sec management ports for some reason.
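As a sanity check, each full-speed port configuration listed above multiplies out to the rated aggregate bandwidth of its ASIC. This is a small sketch; the dictionary layout and the function name are ours, not anything from Nvidia.

```python
# Port configurations as listed above: (port count, speed per port in Gb/sec).
CONFIGS = {
    "SN5600": [(64, 800), (128, 400), (256, 200)],
    "SN5400": [(64, 400), (128, 200), (256, 100)],
}

def aggregate_tbps(ports, gbps_per_port):
    """Sum of all port speeds for one configuration, in Tb/sec."""
    return ports * gbps_per_port / 1000

for switch, configs in CONFIGS.items():
    for ports, gbps in configs:
        total = aggregate_tbps(ports, gbps)
        print(f"{switch}: {ports} ports x {gbps} Gb/sec = {total} Tb/sec")
```

Every SN5600 row works out to 51.2 Tb/sec and every SN5400 row to 25.6 Tb/sec, which is why halving the port speed doubles the port count.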

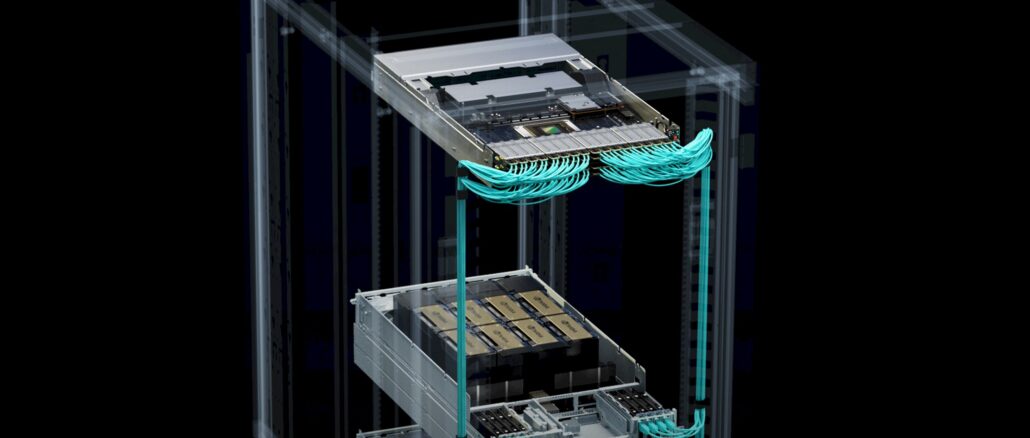

The special sauce with Spectrum-X is the adaptive routing and congestion control for the RoCE protocol, which is the remote direct memory access technique borrowed from InfiniBand. Many argue that RoCE is still nowhere near as good as InfiniBand (and the evidence suggests it is not), but it at least makes Ethernet lower latency than it otherwise is. For adaptive routing, the BlueField-3 DPUs are given the task of reordering out of order Ethernet packets and putting them into server memory over RoCE in the proper order. The in-band telemetry that drives congestion control in the Spectrum-4 switch is augmented by deep learning algorithms running in real time on BlueField-3 DPUs that optimize settings on the infrastructure as users and conditions change.
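To make the reordering idea concrete, here is a minimal sketch of a receive-side reorder buffer. It is not how BlueField-3 implements it, which Nvidia has not detailed here; the per-packet sequence numbers, the function name, and the sample data are all assumptions for illustration. Packets that arrive early are parked until the gap in front of them is filled, and duplicates and loss are ignored.

```python
import heapq

def deliver_in_order(packets, first_seq=0):
    """Yield payloads in sequence order from (seq, payload) pairs that may arrive out of order."""
    pending = []          # min-heap of packets that arrived ahead of their turn
    next_seq = first_seq  # next sequence number that may be delivered
    for seq, payload in packets:
        heapq.heappush(pending, (seq, payload))
        # Drain every parked packet that is now contiguous with what was delivered.
        while pending and pending[0][0] == next_seq:
            yield heapq.heappop(pending)[1]
            next_seq += 1

# Hypothetical arrival order after packets were sprayed across different paths.
arrivals = [(1, "B"), (0, "A"), (3, "D"), (2, "C")]
ordered = list(deliver_in_order(arrivals))
print(ordered)  # ['A', 'B', 'C', 'D']
```

The cost of spraying packets across many paths is exactly this buffering at the endpoint, which is why the work lands on the DPU rather than the switch.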

The one thing that the Spectrum-4 switch does not have, however, is the SHARP in-switch processing capability that is part of the Quantum and Quantum 2 InfiniBand switches and that has also been added to the NVSwitch 3 GPU memory fabric. Which is a bit of a wonder, but maybe that is coming with Spectrum-5. Like InfiniBand, Spectrum-4 supports NCCL, Nvidia’s collective communications library, which provides the MPI-style collective operations commonly used in distributed systems, and NCCL has been tweaked to run well on Spectrum-4 switches and BlueField-3 DPUs. This is one of the ways you get end-to-end performance gains, lower latency, and less jitter than running stock Ethernet.
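For readers who have not met collective operations, the sketch below simulates the textbook ring all-reduce, in which every node ends up holding the element-wise sum of all nodes' data after passing partial sums around a ring. This is an illustration of the kind of collective that libraries like NCCL provide, not NCCL's actual code, and it is not SHARP, which instead performs the reduction inside the switch. The function name and the restriction to one element per chunk are our simplifications.

```python
def ring_allreduce(node_data):
    """Simulate a ring all-reduce (sum) where each node holds a vector of length n = node count."""
    n = len(node_data)
    assert all(len(v) == n for v in node_data), "sketch assumes one chunk per node"
    data = [list(v) for v in node_data]

    # Phase 1, reduce-scatter: after n - 1 steps node i holds the full sum of chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot all sends first so every node transmits its pre-step values.
        sends = [(i, (i - step) % n, data[i][(i - step) % n]) for i in range(n)]
        for i, chunk, value in sends:
            data[(i + 1) % n][chunk] += value

    # Phase 2, all-gather: circulate the finished chunks until every node has all of them.
    for step in range(n - 1):
        sends = [(i, (i + 1 - step) % n, data[i][(i + 1 - step) % n]) for i in range(n)]
        for i, chunk, value in sends:
            data[(i + 1) % n][chunk] = value

    return data

result = ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
print(result)  # every node holds [111, 222, 333]
```

Each node sends and receives 2 × (n − 1) chunks over the network in this scheme, so every step is exposed to latency and jitter in the fabric, which is the traffic that in-switch reduction is meant to cut down.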

We would love to see how InfiniBand stacks up to Spectrum-X, Jericho3-AI, and G200. Somebody, run some comparative benchmarks as part of an AI cluster bid and share with the world, please.

Nvidia itself might be able to do it because it is working with Dell to stand up a 2,000-node GPU cluster built from PowerEdge XE9680 servers equipped with “Hopper” H100 GPUs and BlueField-3 DPUs. The cluster, called Israel-1, is a reference architecture for just such testing and, according to Shainer, will eventually be part of the DGX Cloud, which allows Nvidia customers to fire up workloads on various infrastructure around the globe to test applications.

Dell, HPE, and Lenovo expect to have clusters based on the Spectrum-X reference architecture available in the first quarter of 2024.

Quote: “Somebody, run some comparative benchmarks as part of an AI cluster bid and share with the world, please”

I definitely second that motion!

This could be a job for Argonne, somewhere between ALCF and JLSE, where they get to play with the most wonderful toys ( https://www.alcf.anl.gov/alcf-ai-testbed , https://www.jlse.anl.gov/hardware-under-development/ ).

Maybe a more suitable name is Intelligent Digital Fabric.