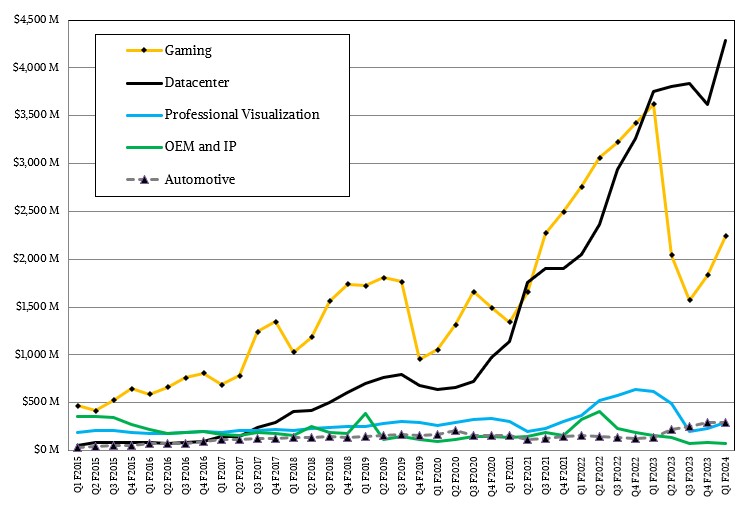

It is no surprise at all that Nvidia’s datacenter business is making money hand over fist right now as generative AI makes machine learning a household word and gives us something akin to a Dot Com Boom in the glass houses of the world. It is also no surprise that its graphics card business is down but recovering a bit as the PC business sorts itself out in a post-pandemic world.

What we found interesting in Nvidia’s call with Wall Street analysts to go over the financial results for the first quarter of fiscal 2024, which ended in April, was the hinting by co-founder and chief executive officer Jensen Huang and chief financial officer Colette Kress that there was some kind of new Spectrum-4 Ethernet switching announcement on the horizon. And to be a little more specific, Huang added that this new networking was coming next week at the Computex trade show in Taipei, Taiwan, and it is looking like it will happen on May 30, just after the Memorial Day holiday. (Thanks for making all of us who live in the United States work on a national holiday. Appreciate that.)

As we write this on Thursday morning, Nvidia shares are trading on Wall Street up 23.5 percent, and the company is about to set the record for the largest one-day gain in market capitalization in history and looks like it will be the first chip company in history with a $1 trillion market cap. This huge and immediate spike is because the company told analysts on Wednesday evening after the market closed that its sales in its fiscal Q2 would be around $11 billion, a sequential rise of around 53 percent give or take and about 50 percent higher than Wall Street was expecting.

The cause? The Generative AI Boom. We think it doesn’t hurt that demand for GPU compute is far exceeding supply, driving up prices not only on the current “Hopper” H100 accelerators sky freaking high (to use a technical term) but also for the prior generation of “Ampere” A100 accelerators and indeed anything that AMD or Intel could make if they had spare capacity beyond the commitments they have on exascale supercomputer buildouts for national labs. And, by the way, they don’t have spare capacity. When a GPU accelerator rivals the cost of a core on an IBM System z mainframe, y’all know that you have struck gold or oil or something very, very good.

And thus, this is a seller’s market, and Nvidia is basically the only seller except on the fringes where the AI startups live. The good news for them is that Nvidia is hinting that the second half of fiscal 2024 will be even stronger than the first half, even with Nvidia increasing supply for its A100 and H100 compute engines and the NVLink and InfiniBand networking that supports them in massive AI training clusters and, for the moment at least for large language models, AI inference clusters.

Let’s talk about the networking announcement, such as we can infer it, and then get into the numbers for Nvidia’s datacenter business, which without question is going to drive the overall business of the GPU and soon-to-be CPU and DPU powerhouse for the foreseeable future.

“For multi-tenant cloud transitioning to support generative AI, our high speed Ethernet platform – with BlueField-3 DPUs and Spectrum-4 Ethernet switching – offers the highest available Ethernet network performance,” Kress claimed on the call with Wall Street. “BlueField-3 is in production and has been adopted by multiple hyperscale and CSP customers, including Microsoft Azure, Oracle Cloud, CoreWeave, Baidu, and others. We look forward to sharing more about our 400 Gb/sec Spectrum-4 accelerated AI networking platform next week at the Computex Conference in Taiwan.”

Nvidia unveiled the Spectrum-4 Ethernet ASIC, with 51.2 Tb/sec of aggregate bandwidth driving 128 ports running at 400 Gb/sec or 64 ports running at 800 Gb/sec, back in April 2022, so it is not a new chip, although it is apparently shipping this quarter.
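The two port configurations are the same aggregate bandwidth carved up two different ways. Here is a minimal sketch of that arithmetic; the function name `port_count` is our own illustrative choice and not anything from Nvidia.

```python
def port_count(aggregate_tbps: float, port_gbps: int) -> int:
    """How many ports of a given speed fit into an ASIC's aggregate bandwidth."""
    aggregate_gbps = round(aggregate_tbps * 1000)  # 51.2 Tb/sec -> 51,200 Gb/sec
    return aggregate_gbps // port_gbps


SPECTRUM4_TBPS = 51.2  # aggregate bandwidth quoted for the Spectrum-4 ASIC

ports_400g = port_count(SPECTRUM4_TBPS, 400)
ports_800g = port_count(SPECTRUM4_TBPS, 800)

print(f"400 Gb/sec ports: {ports_400g}")
print(f"800 Gb/sec ports: {ports_800g}")
```

Either configuration uses up the full 51.2 Tb/sec, which is why the chip offers one or the other rather than both at once.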

We think that Broadcom’s announcement of the “Jericho3-AI” StrataDNX chip for building AI clusters with Ethernet instead of InfiniBand has made Nvidia not only blink, but jump. We also think that the hyperscalers and cloud builders do not want to deploy InfiniBand at massive scale in their clusters because it tops out at somewhere around 40,000 endpoints in a world where these tech titans are deploying 100,000 endpoints in a single datacenter on Ethernet fabrics with a Clos topology and leaf/spine network. We think further that Nvidia, like the standalone Mellanox before it, has been able to charge a premium for InfiniBand given the advantages it has for low latency and high message rates. But as we explained in the Jericho3-AI announcement, these large language models and recommender systems are moving massive amounts of big chunky data and message rates are not as important. And so, the hyperscalers and cloud builders are using these AI training workloads to make Nvidia and Broadcom give them Ethernet switch fabrics that do what they need for AI rather than adapting HPC networks based on InfiniBand for the job.
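To get a feel for what 100,000 endpoints means in a Clos fabric, here is a rough sketch using the textbook capacity formulas for a folded Clos network. The assumptions are ours and are not a description of any hyperscaler's real network: every switch has the same radix, the fabric is non-blocking (no oversubscription), and each endpoint takes exactly one switch port. The function names are illustrative.

```python
def two_tier_endpoints(radix: int) -> int:
    """Max endpoints in a non-blocking two-tier leaf/spine fabric.

    Each leaf uses half its ports for endpoints and half for uplinks,
    and each spine port reaches one leaf, so there are at most `radix` leaves.
    """
    return radix * (radix // 2)


def three_tier_endpoints(radix: int) -> int:
    """Max endpoints in a non-blocking three-tier folded Clos (fat tree)."""
    return radix ** 3 // 4


TARGET_ENDPOINTS = 100_000  # the single-datacenter scale cited above

for radix in (64, 128):  # e.g. an ASIC run as 64 or 128 ports
    two = two_tier_endpoints(radix)
    three = three_tier_endpoints(radix)
    tiers_needed = 2 if two >= TARGET_ENDPOINTS else 3 if three >= TARGET_ENDPOINTS else None
    print(f"radix {radix}: two-tier {two:,}, three-tier {three:,}, tiers needed: {tiers_needed}")
```

Under these idealized assumptions, a two-tier leaf/spine built from 128-port switches falls well short of 100,000 endpoints, and a third tier is needed to reach that scale. Real fabrics usually oversubscribe and mix port speeds, so treat the numbers as a ceiling for intuition rather than a design.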

Nvidia will dance around this, and will argue that our interpretation is not correct – of this we are certain. But the fact is the hyperscalers and cloud builders only install InfiniBand begrudgingly because they have a near-religious zeal for Ethernet. No matter what technical arguments you might make.

Here is how Huang explained the situation and split the hairs ahead of the upcoming Spectrum-4 announcement. We are quoting him in full so as to capture the full context during a time when InfiniBand had a record quarter, is running in excess of $1 billion a year (as we calculated a few weeks ago and as neither Kress nor Huang denied when analysts threw such numbers out), and is set to have a record year, too. The $6.9 billion Mellanox purchase paid for itself a long time ago – and in a way that shelling out $40 billion or more for Arm Holdings would not have, even if it was a nice dream.

Inhale and read this slightly edited quote from Huang. We took out the ums and ahs and occasional repeated phrases, all of which is natural in speaking but which looks weird in print:

“InfiniBand and Ethernet target different applications in a datacenter. They both have their place. InfiniBand had a record quarter. We’re going to have a giant record year. And Nvidia’s Quantum InfiniBand has an exceptional roadmap, it’s going to be really incredible. But the two networks are very different. InfiniBand is designed for an AI factory, if you will. If that datacenter is running a few applications for a few people for a specific use case and it’s doing it continuously and that infrastructure costs you – pick a number – $500 million. The difference between InfiniBand and Ethernet could be 15 percent, 20 percent in overall throughput. And if you spent $500 million in an infrastructure and the difference is 10 percent to 20 percent and it’s a $100 million, InfiniBand is basically free. That’s the reason why people use it. InfiniBand is effectively free. The difference in datacenter throughput is just – it’s too great to ignore and you’re using it for that one application. However, if your datacenter is a cloud datacenter and it’s multi-tenant – it’s a bunch of little jobs and is shared by millions of people, then Ethernet is really the answer. There’s a new segment in the middle, where the cloud is becoming a generative AI cloud. It’s not an AI factory per se, but it’s still a multi-tenant cloud that wants to run generative AI workloads. This new segment is a wonderful opportunity and at Computex – I referred to it at the last GTC – we’re going to announce a major product line for this segment, which is an Ethernet-focused generative AI application type of clouds. But InfiniBand is doing fantastically and we’re doing record numbers quarter-on-quarter year-on-year.”
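The “effectively free” argument is simple arithmetic, and it can be sketched as follows. The only inputs are the numbers Huang picked on the call: a $500 million infrastructure and a throughput difference of 10 percent to 20 percent. The function name is ours, and valuing extra throughput at the same rate as the infrastructure spend is Huang's framing, not an independent cost model.

```python
def value_of_throughput_gain(infrastructure_cost: int, gain_percent: int) -> int:
    """Dollar value of extra throughput, priced at the cluster's own cost."""
    return infrastructure_cost * gain_percent // 100


INFRASTRUCTURE_COST = 500_000_000  # Huang's "pick a number" figure, in dollars

# The throughput differences mentioned in the quote, in percent.
values = {
    pct: value_of_throughput_gain(INFRASTRUCTURE_COST, pct)
    for pct in (10, 15, 20)
}

for pct, value in values.items():
    print(f"{pct} percent more throughput is worth ${value:,}")
```

The $100 million in the quote corresponds to the 20 percent end of the range. The argument only holds if the premium paid for InfiniBand over Ethernet is smaller than these amounts, and Huang did not put a number on that premium.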

See the dancing? And make no mistake, Huang is by far the most eloquent conversationalist and technologist in the IT sector, and if Huang is dancing, it is because some distinction is subtle. And he can’t give the impression that InfiniBand is under any kind of pressure at all.

As we have pointed out many times before, there is a dance that InfiniBand and Ethernet have been doing for two and a half decades. And the long and short of it is that Ethernet steals all good ideas from InfiniBand and implements them less well but good enough to keep InfiniBand at bay and relegated to its HPC and now AI slice of the market.

But here is the thing. While the clouds and hyperscalers have been willing to deploy InfiniBand to do their AI research and create their internal models as well as to allow for the running of HPC and now AI training workloads on the cloud, we do believe that all things being equal, they would prefer Ethernet that looked like InfiniBand. And some of the top people in networking at major clouds have said as much recently and we poked a little fun at them back in February because many of the things they want Ethernet to do InfiniBand has done for a long time – and done well. If we had started making the transition to InfiniBand two decades ago, it would be no big deal. But instead, we are down to one supplier of InfiniBand, perhaps two if you count Cornelis Networks, and three if you count some Chinese HPC labs (we count them as derivative rather than different).

We think there is tremendous pressure to make a leaner, meaner Ethernet that is good at HPC and AI so the networks don’t have to be different inside these hyperscaler and cloud datacenters. And we also think this has important ramifications over the long haul for the InfiniBand market if these pressures result in Ethernet variants from Nvidia and Broadcom that give the same or better performance as InfiniBand on AI training work. Nvidia has to respond to Broadcom, which has been cooking up the Jericho3-AI for more than a year and which Arista Networks was talking up in a general way for more than a year, saying it could take on InfiniBand for AI training workloads.

We look forward to seeing what latent capabilities for AI training might be lurking in the Spectrum-4 ASIC from Nvidia, and we will tell you all about it next week.

With that, let’s spend a little time on the Nvidia numbers, which speak for themselves.

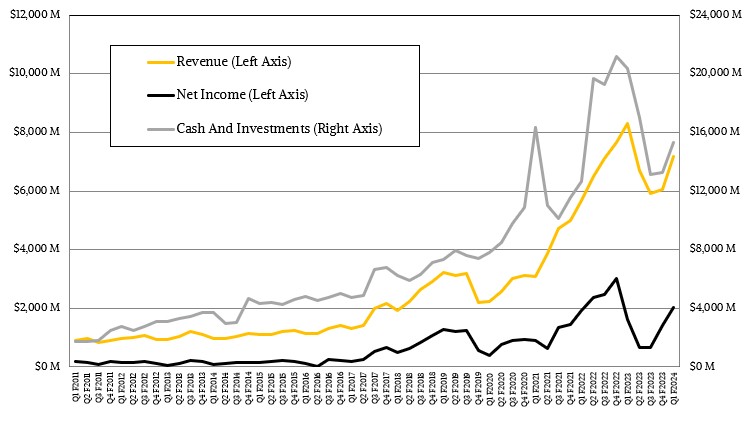

In the quarter, Nvidia’s revenues fell by 13.2 percent to $7.19 billion, but thanks to the product mix shift (and 70 percent gross margins on AI training gear), net income rose by 26.3 percent to $2.04 billion and accounted for 28 percent of revenue.
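The mix shift shows up clearly if you back out the comparison-period figures from the percentage changes. This is a rough sketch using only the rounded numbers quoted above, so the implied values are approximations and not reported results. We are assuming the percentage changes are against the year-ago quarter, and the variable names are our own.

```python
# Figures quoted above, in billions of dollars.
revenue_bn = 7.19
net_income_bn = 2.04

# Changes quoted above, as fractions (assumed to be versus the year-ago quarter).
revenue_change = -0.132
net_income_change = 0.263

# Net income as a share of revenue in the current quarter.
net_margin = net_income_bn / revenue_bn

# Back out the comparison-period figures implied by the percentage changes.
prior_revenue_bn = revenue_bn / (1 + revenue_change)
prior_net_income_bn = net_income_bn / (1 + net_income_change)
prior_net_margin = prior_net_income_bn / prior_revenue_bn

print(f"Net margin now:            {net_margin:.1%}")
print(f"Implied prior revenue:     ${prior_revenue_bn:.2f} billion")
print(f"Implied prior net income:  ${prior_net_income_bn:.2f} billion")
print(f"Implied prior net margin:  {prior_net_margin:.1%}")
```

On these rounded inputs, net income as a share of revenue climbs from a little under 20 percent to a little over 28 percent, even as revenue shrinks.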

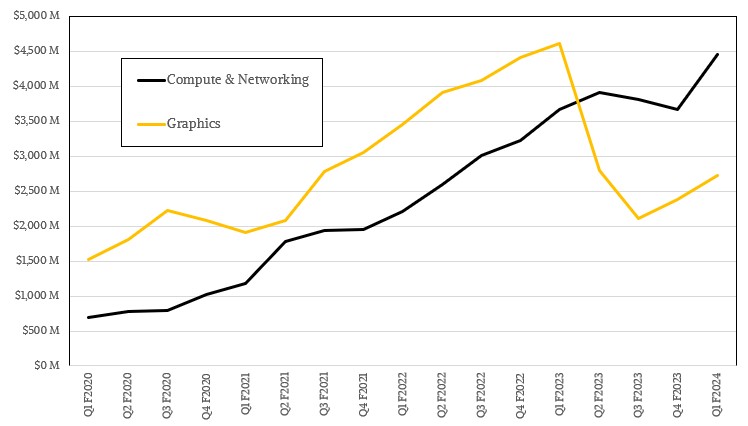

Nvidia’s Compute and Networking group had $4.46 billion in sales, up 21.5 percent, while the Graphics group, which sells GPUs for PCs and workstations, posted a 40.8 percent decline to $2.73 billion.
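As a sanity check, the two groups should add up to total revenue, and the group growth rates should imply the overall revenue decline. Here is a small sketch of that cross-check, using only the rounded figures in this article. The variable names are ours, and the implied comparison-period values are approximations.

```python
# Group revenue in millions of dollars, as quoted above.
compute_networking_m = 4460
graphics_m = 2730

# Changes quoted above, as fractions.
compute_networking_change = 0.215
graphics_change = -0.408

total_m = compute_networking_m + graphics_m
compute_networking_share = compute_networking_m / total_m

# Back out the comparison-period revenue for each group and for the total.
prior_total_m = (
    compute_networking_m / (1 + compute_networking_change)
    + graphics_m / (1 + graphics_change)
)
implied_total_change = total_m / prior_total_m - 1

print(f"Total revenue: ${total_m / 1000:.2f} billion")
print(f"Compute and Networking share: {compute_networking_share:.1%}")
print(f"Implied change in total revenue: {implied_total_change:.1%}")
```

The groups sum to the $7.19 billion total, and the implied decline lands on the same 13.2 percent quoted for overall revenue, so the figures hang together.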

Here is the breakdown by Nvidia divisions:

The Datacenter division, which sells hardware and software into the glass house and is slightly different from Compute and Networking, had sales of $4.28 billion, up 14.2 percent.

Here is the paragraph that made Nvidia stock explode:

“Let me turn to the outlook for the second quarter fiscal 2024,” said Kress on the call. “Total revenue is expected to be $11 billion, plus or minus 2 percent. We expect this sequential growth to largely be driven by datacenter, reflecting a steep increase in demand related to generative AI and large language models. This demand has extended our datacenter visibility out a few quarters and we have procured substantially higher supply for the second half of the year.”
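To put that guidance in context, here is a quick sketch of the range it implies and the sequential growth against the $7.19 billion just reported. The inputs are the figures from the call as quoted in this article. The variable names are ours, and the results are approximate because the inputs are rounded.

```python
GUIDANCE_MIDPOINT_BN = 11.0   # fiscal Q2 revenue guidance, in billions
GUIDANCE_TOLERANCE = 0.02     # "plus or minus 2 percent"
Q1_REVENUE_BN = 7.19          # fiscal Q1 revenue reported above, in billions

guidance_low_bn = GUIDANCE_MIDPOINT_BN * (1 - GUIDANCE_TOLERANCE)
guidance_high_bn = GUIDANCE_MIDPOINT_BN * (1 + GUIDANCE_TOLERANCE)


def sequential_growth(next_quarter_bn: float, this_quarter_bn: float) -> float:
    """Quarter-on-quarter growth as a fraction."""
    return next_quarter_bn / this_quarter_bn - 1


growth_low = sequential_growth(guidance_low_bn, Q1_REVENUE_BN)
growth_mid = sequential_growth(GUIDANCE_MIDPOINT_BN, Q1_REVENUE_BN)
growth_high = sequential_growth(guidance_high_bn, Q1_REVENUE_BN)

print(f"Guidance range: ${guidance_low_bn:.2f} billion to ${guidance_high_bn:.2f} billion")
print(f"Sequential growth: {growth_low:.1%} to {growth_high:.1%} (midpoint {growth_mid:.1%})")
```

Even the low end of the range works out to roughly 50 percent growth in a single quarter.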

Our guess is that the tech titans and other big companies are prepaying to get Nvidia chips and systems, as happened for many vendors during the peak supply chain strain during the coronavirus pandemic, and they have to do capacity planning with Nvidia to ensure they can get what they need. Which means Nvidia knows more concretely how the 2024 fiscal year is going to play out than it might otherwise. And, Nvidia can charge the seller’s market price, which is another multiple on top of that demand.

And in this market, AMD and Intel could sell any GPU they can deliver in volume, despite incompatibilities. And it doesn’t look like Intel can ramp up volumes fast enough to meet demand, and it looks like AMD is trying like hell to do that while at the same time trying to get the Instinct MI300A GPU ready for the “El Capitan” supercomputer at Lawrence Livermore National Laboratory.

57% q/q upside forecast? – unheard of for a 30 year old company. Congratulations Nvidia, it’s been a long slog. Based on the opposite vectors of the stocks today, it looks like the technology leadership baton has been adroitly accepted by the Green team from the Blue team.

No kidding. Sheesh.

What does the Computex Spectrum-4 Ethernet switch announcement boil down to in terms of (1) performance vs. InfiniBand, and whether we are directionally moving away from InfiniBand, and (2) open vs. proprietary implementation, and the scalability of RoCEv2 for open implementations?

https://resources.nvidia.com/en-us-accelerated-networking-resource-library/ethernet-switches-pr?lx=LbHvpR&topic=Networking%20-%20Cloud

I’m working on it…. Patience, Gracehopper. HA!