If not for delays, the long-awaited Aurora supercomputer at Argonne National Lab would likely just be coming online. However, with Intel's Sapphire Rapids processors pushed to 2022, the lab is adding to its fleet of large testbed machines, including the forthcoming Polaris system announced this morning.

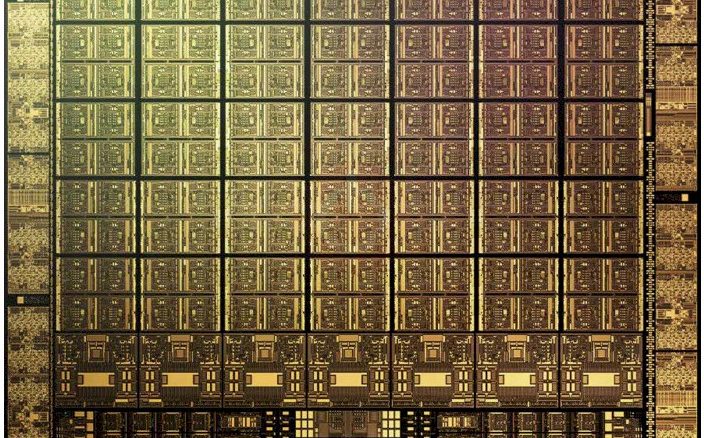

Since Argonne teams can’t test on the Sapphire Rapids CPU and Xe GPU architectures quite yet, they have gone the accelerator route and commissioned HPE to build a 560-node system with four Nvidia A100 GPUs in each node.

The peak theoretical performance of this “little” testbed is 44 petaflops, enough to give the machine a spot in the top ten of the Top500 supercomputer ranking.
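As a rough check, the quoted peak is consistent with counting only the GPUs' double-precision Tensor Core throughput. The sketch below assumes 19.5 teraflops per A100 (Nvidia's published FP64 Tensor Core peak) and ignores any contribution from the host CPUs; the constant names are illustrative, not taken from any Argonne or Nvidia specification.

```python
# Rough sanity check of Polaris's quoted 44-petaflop peak.
# Assumption: the figure counts only GPU FP64 Tensor Core peak
# (19.5 teraflops per A100); host CPU flops are ignored.
NODES = 560
GPUS_PER_NODE = 4
A100_FP64_TENSOR_TFLOPS = 19.5

total_gpus = NODES * GPUS_PER_NODE                          # 2,240 GPUs
peak_pflops = total_gpus * A100_FP64_TENSOR_TFLOPS / 1000   # teraflops -> petaflops

print(f"{total_gpus} GPUs, ~{peak_pflops:.1f} PF peak")
```

The result, about 43.7 petaflops, rounds to the 44-petaflop figure. Using the A100's standard (non-Tensor Core) FP64 rate of 9.7 teraflops instead would give roughly half that.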

Unlike some of its national lab counterparts in the US, Argonne has been less enthusiastic about large GPU deployments, often favoring the CPU-only route. The one recent exception is the 11.7 petaflop-capable Theta supercomputer. The machine was originally an Intel-based HPE/Cray XC40 system, but with CARES Act funding the lab added 24 Nvidia DGX A100 nodes to it (each with eight A100 GPUs and two AMD “Rome” CPUs).

Argonne’s architectural choice for Aurora came well before the current AI/ML emphasis in supercomputing, which has led many labs to bolster their GPU capacity for training and to adopt AI/ML-primed software stacks. Polaris will allow the lab to delve further into hybrid AI/HPC work.

Polaris will allow users to prepare and scale their codes, and ultimately their science, for future exascale systems. Polaris will comprise more than 500 nodes of four GPUs each. The system will be fully integrated with the 200-petabyte file system the Argonne Leadership Computing Facility (ALCF) deployed in 2020, with increased support for data sharing.

Even though Argonne’s GPU emphasis isn’t as strong as it is at other national labs, the lab does have a particular edge in AI. Argonne is home to an AI testbed we’ve been following closely. Machines housed there include a Cerebras CS-1 waferscale AI system, a Graphcore Colossus GC2 machine, a SambaNova Dataflow system, and some Groq hardware (although there is not much clarity on what the lab has or whether it has arrived yet).

Applications running in the AI testbed include COVID research, deep learning for cancer type prediction, and massive astronomy simulations. One might expect work on these AI testbed systems to scale into the new, far larger pre-Aurora testbed.

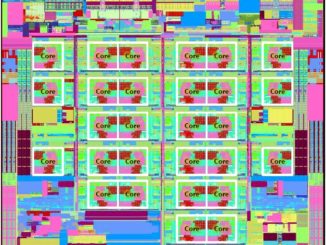

Dr. Kalyan Kumaran is a Senior Computer Scientist and the Director of Technology at Argonne’s Leadership Computing Facility. He emphasized to The Next Platform that this was a separate project and procurement since Argonne teams wanted a testbed system that would be at a larger scale. “We wanted a machine that would allow us to take a step up from current production resources, which are in the 10–13 petaflop range, to something larger and more modern with accelerators. The only direct connection with Aurora is that users will have this system to continue development.”

Kumaran adds that the HPE/Cray Slingshot interconnect is common to both Polaris and the future Aurora system. That, along with shared programming models (OpenMP, MPI, SYCL), will allow development work to carry over between the two.

While it has taken time for Argonne to match the GPU resources of other labs, Kumaran says GPUs allow the lab to support the three pillars of HPC centers: providing traditional HPC simulation capabilities, enabling efficient AI/ML, and advancing data analytics.

The value of GPU acceleration for integrating AI/ML into HPC workflows became clear to some at Argonne once GPUs were added to Theta.

“The difference in computational performance accelerated our work almost instantly,” said Arvind Ramanathan, a computational biologist at Argonne who leads a group of researchers aiming to unravel the fundamental biological mechanisms of the virus that causes COVID-19 while also identifying potential therapeutics to treat the disease. “Our data-intensive workloads — which combine AI, machine learning techniques, and molecular dynamics simulations — require significant brute force for the pace of discovery to proceed at a reasonable rate.”

The Polaris system is already being installed at Argonne and should be available for early access in early 2022, with broader access opening by spring. According to Nvidia’s Dion Harris, core workloads include work on the ATLAS experiment (high-energy physics), fusion energy simulations that incorporate AI, neuroscience, and aerospace applications.

“Polaris is a powerful platform that will allow our users to enter the era of exascale AI,” said ALCF Director Michael E. Papka. “Harnessing the huge number of NVIDIA A100 GPUs will have an immediate impact on our data-intensive and AI HPC workloads, allowing Polaris to tackle some of the world’s most complex scientific problems.”
