There are two different Auroras right now in supercomputing. There is the shape-shifting, legendary, and maybe even mythical “Aurora” and now “Aurora A21” exascale supercomputer for Argonne National Laboratory, which was being built by Intel with “Knights” many-core processors and now, if Intel can get them out the door, with a combination of “Sapphire Rapids” Xeon SP processors and “Ponte Vecchio” Xe GPU accelerators. And then there are the real Aurora vector engine-based systems from supercomputer maker NEC that you have been able to buy for the past four years.

The Czech Hydrometeorological Institute (CHMI), which models and forecasts weather, climate, air quality, and hydrology for the Czech Republic, has just bought a liquid-cooled hybrid supercomputer based on X86 processors and Aurora vector engines to radically improve its simulation capabilities. CHMI is headquartered in the capital city of Prague, with offices notably in the beer haven of Plzeň in western Bohemia. (Doesn’t that sound idyllic?)

Like many smaller meteorological institutions in Europe and some of the bigger ones in Japan and Asia throughout the decades, CHMI has been a longtime user of NEC parallel vector processors, which are akin to the original Cray vector machines from days gone by. And, interestingly, NEC is the last of the vector supercomputer makers that is still in the game, but just the same, it has had to go with a parallel processor-accelerator approach with the Tsubasa SX parallel vector architecture, based on the Aurora accelerators, to bring down costs and boost scalability.

CHMI, whose lovely headquarters is shown in the feature image above, has been on the NEC vector computing ride for a long time. So it is not at all surprising that the meteorological agency would stick with this architecture, however modified it has become. Many customers have moved away from the SX architecture, and those in the United States, like the National Center for Atmospheric Research in 1996, were prevented from buying NEC and Fujitsu supercomputer gear after dumping charges and import tariffs were placed on these two vendors following complaints by Cray. But the vector machines, like those of Cray and Fujitsu and even IBM with the vector-assisted 3090-VF mainframes from years gone by, were all good machines and elegantly designed. Through 2006, the last year for which we have any data, NEC had sold north of 1,000 SX vector supercomputers worldwide. It is probably close to double that now.

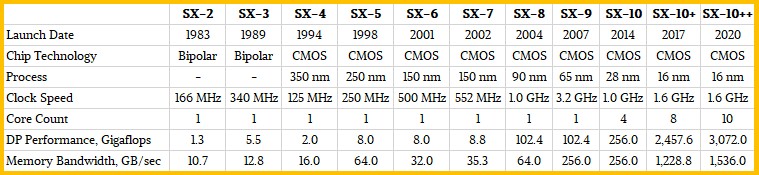

As we reminded everyone when the Aurora vector engines surfaced in the fall of 2016 ahead of the SC16 supercomputing conference, the most famous NEC vector supercomputer was the Earth Simulator system, which was the most powerful machine in the world from 2001 through 2004. It had 5,120 vector engines based on NEC’s SX-6 design and delivered a then-stunning 35.9 teraflops at a cost of $350 million. This machine was upgraded to the ES2 system in 2009, using the SX-9 architecture. The $1.2 billion Project K supercomputer was supposed to have vector partitions made by NEC, but the Great Recession compelled NEC to pull out and leave the whole deal to Fujitsu for the engine and Hitachi for the interconnect. (Fujitsu eventually took over the whole project.)

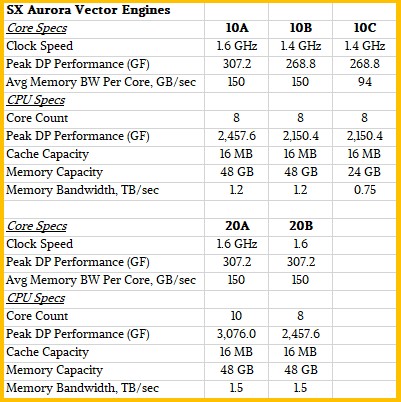

The feeds and speeds of the initial Aurora Vector Engine 10A, 10B, and 10C devices were detailed here, and we did a follow-up deep dive into the architecture a few weeks later there. At the time, the Aurora vector engines could absolutely beat Intel “Skylake” and “Knights Landing” and Nvidia “Volta” V100 GPUs on price and could beat Intel CPUs on double precision flops performance; the initial Aurora chips had 48 GB of HBM2 memory, and could beat the tar out of any of these other devices on memory bandwidth. With the kicker Vector Engine 20A/20B devices, NEC has boosted performance to 3 teraflops, up about 22 percent compared to the prior Vector Engine A series at 2.45 teraflops, and memory bandwidth to 1.5 TB/sec, up 25 percent from the 1.2 TB/sec with the HBM2 stack in the first Aurora chips.
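For those who like to check the math, here is a minimal sketch of the generational uplift, using only the rounded figures quoted above (2.45 and 3 teraflops, 1.2 and 1.5 TB/sec). The function name `uplift_percent` is ours, purely for illustration.

```python
def uplift_percent(old: float, new: float) -> float:
    """Relative increase from old to new, expressed in percent."""
    return (new / old - 1.0) * 100.0


# Rounded peak double precision teraflops quoted in the text.
flops_uplift = uplift_percent(2.45, 3.0)

# HBM2 memory bandwidth in TB/sec quoted in the text.
bandwidth_uplift = uplift_percent(1.2, 1.5)

print(f"Peak FP64 uplift:        {flops_uplift:.1f} percent")
print(f"Memory bandwidth uplift: {bandwidth_uplift:.1f} percent")
```

With the rounded inputs, compute comes out a little over 22 percent and bandwidth at 25 percent, so the ratio of memory bandwidth to flops stays roughly flat across the two generations.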

CHMI ordered a new Tsubasa supercomputer from NEC’s German subsidiary last September and it was installed and operational in December, although the meteorological agency is just talking about it now. The cluster that CHMI has bought has 48 nodes, each with two AMD Epyc 7002 series CPUs and eight of the latest Vector Engine 20B devices in its PCI-Express 4.0 slots, for a total of 384 vector engines. Those 48 nodes have a combined 18 TB of HBM2 memory plus 24 TB of memory on the X86 hosts, plus a 2 PB parallel file system based on NEC’s LxFS-z storage, which is the Japanese company’s implementation of the Lustre open source parallel file system atop Sun Microsystems’ (now Oracle’s) ZFS file system. The compute and storage nodes are interlinked using an Nvidia 200 Gb/sec HDR InfiniBand network, and they are kept from overheating by direct liquid cooling.
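A quick back-of-the-envelope tally of that configuration, sketched under two assumptions that are ours and not NEC’s: that each Vector Engine 20B carries the same 48 GB of HBM2 as the first-generation parts, and that each host has 512 GB of main memory (the figure implied by 24 TB spread over 48 nodes). Capacities use binary units, 1 TB = 1,024 GB.

```python
NODES = 48               # two-socket Epyc hosts, from the text
VE_PER_NODE = 8          # Vector Engine 20B cards per host, from the text
HBM2_GB_PER_VE = 48      # assumption: same capacity as the first Aurora chips
HOST_GB_PER_NODE = 512   # assumption: inferred from 24 TB across 48 nodes

total_ves = NODES * VE_PER_NODE
total_hbm2_tb = total_ves * HBM2_GB_PER_VE / 1024
total_host_tb = NODES * HOST_GB_PER_NODE / 1024

print(f"Vector engines:    {total_ves}")
print(f"Aggregate HBM2:    {total_hbm2_tb:.0f} TB")
print(f"Aggregate host RAM: {total_host_tb:.0f} TB")
```

Under those assumptions the tally gives 384 vector engines, 18 TB of HBM2, and 24 TB of host memory, which lines up with the memory totals quoted for the machine.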

The Czechoslovak Republic was established at the end of World War I in 1918, and shortly thereafter the National Meteorological Institute, the predecessor to the CHMI, was formed. The organization is an expert at air pollution dispersion models, and is also a big contributor, along with France, to the ALADIN numerical weather prediction system, which was in use by 26 countries when CHMI bought a 320-node Xeon E5 cluster from NEC with 7,680 cores and a 100 Gb/sec EDR InfiniBand interconnect to do its weather modeling; this system had 1 PB of LxFS-z storage.

Over the years, CHMI has had a fair number of NEC systems, but they do not often break into the Top500 rankings of systems that run High Performance Linpack and then brag about it (that is not the same thing as saying the top 500 supercomputers in the world for true HPC applications), so they are hard to track down. We can’t find all of them, but we found a few. In 2008, CHMI had a single NEC SX-6/8A-32, which is a 32 vector engine system that is based roughly on the same technology as Earth Simulator, plus a bunch of Sun Microsystems Sun Fire Opteron servers for Oracle databases and other workloads. And in 2016, CHMI had two NEC SX-9 nodes, with 16 vector processors and 1 TB of main memory each, running its simulations, plus some Oracle T5-8 servers running databases. These SX-9s, of course, use the same engines as the ES2 kicker to Earth Simulator.

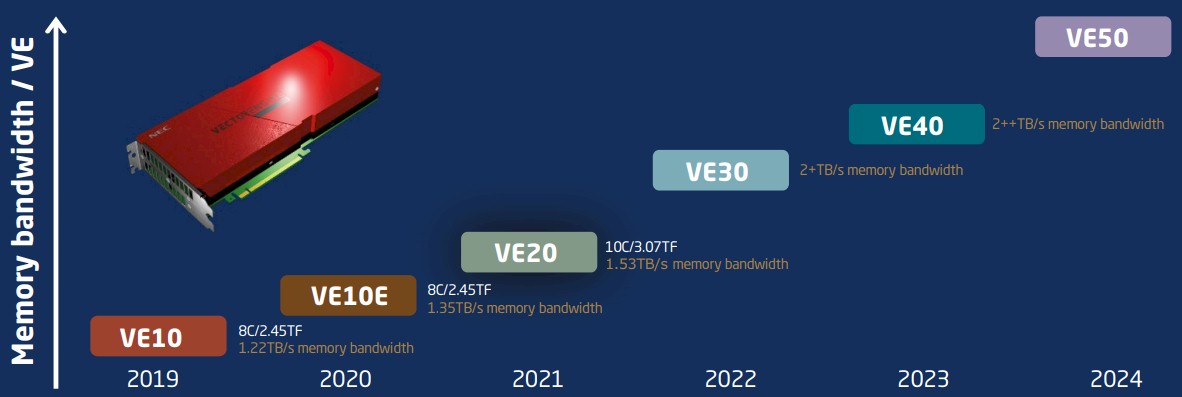

What NEC really needs now is to get an ES3 deal with the Japanese government – but given the success and cost of the “Fugaku” system at RIKEN, with its Arm processors and fat integrated vector engines, this seems unlikely. But stranger things have happened. NEC certainly has a roadmap going out a few years:

The question is how hard NEC will push performance alongside that bandwidth push shown above. Moving to 7 nanometer technologies could allow at least a doubling of performance. We shall see what NEC does next year.
