In late 2018, HPC storage provider Panasas rolled out its re-engineered PanFS parallel file system based on Linux, a move designed to give the company and its technology a capability that has become key at a time when HPC-like workloads and emerging technologies like artificial intelligence (AI) and machine learning continue to spill beyond the confines of research institutions and government laboratories and into the enterprise: portability.

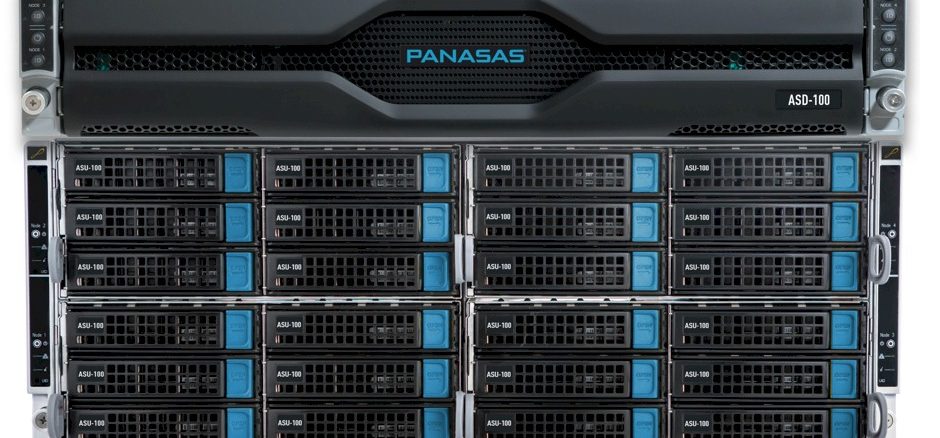

Prior to the introduction of ActiveStor Ultra and PanFS 8 that year, Panasas’ software ran on the vendor’s proprietary ActiveStor line of appliances. The new parallel file system offering was able to run on industry-standard hardware from Panasas – the ActiveStor Ultra was built on commercial off-the-shelf hardware – as well as third parties, enabling the vendor to not only broaden its customer base but also grow its ability to partner with OEMs, ODMs, and the channel, extending its reach in the enterprise space.

Tom Shea was the company’s chief operating officer when Panasas introduced ActiveStor Ultra and PanFS 8. The launch helped position the company to address the rapid changes in an increasingly distributed enterprise IT industry fueled by the rise of AI and similar workloads. Offering parallel file system software on proprietary hardware was not the way to move forward.

“One of the biggest things was we needed to make the software more portable,” Shea, who took over as president and CEO in September 2020, replacing Faye Pairman, tells The Next Platform. “I needed to be able to run it on different hardware of different price points. We had a really great appliance. It was beloved by our customers, but it was a one-trick pony in terms of our own hardware design, and unless we wanted to keep designing bespoke hardware, we couldn’t launch new products. Now we’ve got a Linux-based, very portable product. All of the file system internals have been pulled out of the kernel, so it’s very, very portable. What that means is I can have like the new product we just launched, Ultra, which is doing very well, but its sweet spot is about a petabyte as a starting configuration. Then we can look and we can say, ‘OK, that’s great for some of the customers that are going to scale between one and 20 petabytes, but what do we do about the life science guys that start at 250 terabytes and want to grow from there?’”

With the new portable architecture, organizations can leverage Panasas’ HPC storage software in systems from Supermicro or other hardware makers that Shea says the company is speaking with, though he declined to name them. “We can populate that with a couple of different levels and different types of flash, and we can launch a new product for a different point in the marketplace,” he says. “That’s something that we couldn’t do before. We were usually forced to force that one product onto the whole market and you can’t do that. It’s just too big. It’s too broad.”

That won’t do at a time when a broader range of organizations are inundated with data and wrestling with an array of advanced technologies like AI, data analytics and containers, as well as different file sizes. HPC workloads increasingly are emerging in enterprise datacenters and vendors are following suit with their products. IBM in April rolled out Spectrum Fusion, a container-native software-defined storage (SDS) offering that includes its Spectrum Scale parallel file system and is designed to make it easier for organizations to move data around their distributed environments that include the datacenter, cloud and edge by enabling them to use a single copy of data rather than having to make duplicate copies for each environment.

DDN, which acquired Intel’s Lustre parallel file system and related assets in 2018, reportedly is looking to make them more accessible to enterprises.

For 21-year-old Panasas, creating a portable version of its PanFS software has opened doors that the company is still taking advantage of under Shea’s leadership. Panasas since at least 2015 has been talking about expanding the reach of its technologies beyond HPC and supercomputers, and the need to address enterprise demands is even stronger now. Over the past several months, Shea has been building an executive team with an eye toward partnerships and expanding the reach of Panasas into new verticals, such as financial services, trading and manufacturing.

“Every year that we continue to build our business, we see more and more of what I’m going to call enterprise HPC customers,” he says. “It started off a while ago with companies designing airplanes. We now have a large consumer products companies using our stuff to simulate ordinary consumer products – diapers, believe it or not. You’re getting to the point now where any kind of product development involves simulation and technical computing and HPC. We’re seeing a lot of life science customers. There are these Cryo-EM instruments that are spitting out this really interesting microscopic detail on the interactions between cells and drugs. There’s amazing genomics and microbiome. I mention all those because they all fit a basic pattern that wasn’t the case in the early days of HPC. These are really talented engineers or scientists or people trying to get something done in a very enterprise-like organization that really don’t have an interest in building their own file system or maintaining an open-source file system. What they want is an enterprise-like experience, but they can’t get that from NetApp or Isilon because they need something that’s super high bandwidth and suitable for a big compute cluster.”

Partnerships with system makers and the channel will be key for Panasas moving forward. The Super Micro partnership is public, but Shea says the company is talking with other OEMs about having the software certified to run on their hardware. It gives enterprises options when working with Panasas, the CEO says, adding that the company can deliver the storage “through our own partnership with Super Micro. You can work with the reseller of your choice. You could ultimately buy from somebody other than Super Micro if you’re not comfortable with that. And obviously, there’s a short list of OEMs out there that we’re talking to. That’s really what the partnership piece is about, is that sticking with our strengths. We’re really good at file systems in the software and the hardware is a really important piece of the system. But that’s not our expertise. We’d like to work with partners on that. That’s a main way we change the company.”

The cloud is a puzzle that Panasas still needs to figure out, Shea says. Enterprises are rapidly adopting hybrid cloud and multicloud strategies and most Panasas customers are running compute clusters and storage around the clock, so there is bursting to the cloud in a hybrid-cloud fashion. For organizations with small datasets, embracing the cloud makes sense because it’s not difficult to shift workloads to the cloud and between clouds. However, Panasas users can have HPC-sized storage datasets that are measured in petabytes.

“You need a much more data-centric view of how to do cloud in order for HPC to really take off there,” he says. “You need to minimize the number of times that you’re moving this around on the web. You need to provide tools that can accelerate that movement. We do see some HPC in the cloud. Even now we see the big guys trying. But as we talk about potential partnerships there, we’re looking at this. As you know, HPC in the cloud is going to be a bit of a cloud 2.0, where they have to change the way they work with data to be successful. Our goal is to be part of that phase.”

HPC in the cloud will be inevitable, but it will take longer than many people think, according to the CEO. Big cloud providers like Amazon Web Services and Microsoft Azure can easily spin up compute resources, but HPC storage is a different challenge.

“It’s easy to think of us as storage, but in HPC, the storage layer is almost part of the compute,” Shea says. “The model in Azure or anywhere else right now has been that you’ve got your compute and you’ve got your storage, and the storage isn’t a big deal. It’s just some files. That’s where I see the industry grappling with this. Now we’ve got this storage layer that actually needs to be integrated into the compute in a really, really tight way with amazing bandwidth between the computer and the storage.”

Cloud providers are working to address this issue, but many currently are at the tire-kicking stage, he says. Partnerships with these providers will be critical to Panasas. The vendor isn’t going into the cloud business or jumping into the compute side of the equation. It will lean on AWS, Azure, Google Cloud, and smaller providers to deliver the compute and the GPU capabilities that are increasingly in demand and give enterprises a consistent experience between the datacenter and cloud.
