For the past couple of decades, IBM’s Spectrum Scale – formerly known as General Parallel File System – has had a solid standing as one of the two go-to file systems for HPC.

However, the emergence of modern workloads like artificial intelligence and analytics, the rapid growth of the hybrid cloud, and the shift toward containers for developing software and moving it within hybrid cloud and multicloud environments are helping to fuel the adoption of HPC workloads in the enterprise. That, in turn, is opening up opportunities for technologies like scalable parallel file systems to follow that trend and extend their reach in the IT world.

IBM already has taken steps to make Spectrum Scale more accessible to enterprises and their growing HPC-like workloads. The company has developed Spectrum Scale ECE (Erasure Code Edition), which makes its Spectrum Scale RAID available as software and as a scale-out technology that can be shared across a cluster of servers linked by a high-speed fabric. Its Spectrum Protect software, meanwhile, brings a range of data protection capabilities to the parallel file system.

More recently, rival Hewlett Packard Enterprise announced that it was supporting Spectrum Scale on some of its mainstream enterprise ProLiant servers and Apollo systems, aimed at workloads such as AI, deep learning and big data analytics. HPE sees the IBM storage software as a way to bring parallel file system capabilities to enterprises that are looking for tools to run and manage these increasingly prevalent workloads. Those enterprises are also looking for alternatives to network-attached storage (NAS), which can be challenged in areas like performance and scalability, and to Lustre parallel file systems, which have yet to make the transition to the enterprise, though DDN – which acquired Intel’s Lustre parallel file system and related assets – is reportedly looking to make them more accessible to enterprises.

IBM this week is taking another step in expanding its parallel file system technology beyond HPC and supercomputers. The company is unveiling Spectrum Fusion, a container-native software-defined storage (SDS) offering aimed at enabling organizations to move a single copy of data between core datacenters, the cloud and the edge, rather than having to make duplicate copies for each environment.

“One of the key tenets of this is to expand hybrid cloud,” Eric Herzog, chief marketing officer and vice president of worldwide storage channels for IBM’s Storage Division, tells The Next Platform. “When you think hybrid cloud originally, you think on-prem to cloud. Edge is a huge use case these days. We can span with Spectrum Fusion seamlessly with a single copy of data the edge, core and cloud. Spectrum Fusion will even work with other people’s storage – EMC Isilon, NetApp NFS, any S3-compliant object storage. We can use that without creating a duplicate copy of the data. Part of the value of Spectrum Fusion is traversing core, edge and cloud, particularly for AI and analytic workloads.”

The introduction of Spectrum Fusion is part of IBM’s larger strategy of putting its focus on hybrid clouds and AI, with the centerpiece of its strategy being the $34 billion acquisition of Linux and open-source stalwart Red Hat in 2019 and its various platform components, in particular the OpenShift Kubernetes container management technology and Red Hat Enterprise Linux operating system. IBM also is shedding its managed infrastructure business – creating a new hosting and outsourcing company called Kyndryl, which is set to launch by the end of the year – to make more resources available for its cloud and AI ambitions.

IBM sees the combination of Red Hat’s open software with its own technology, like Watson AI, and its infrastructure hardware and software, such as Spectrum Scale and now Spectrum Fusion, as a winning combination as more data and applications are created, accessed, run, processed and stored outside of traditional on-premises datacenters.

The Spectrum Fusion announcement comes on the first day of Red Hat’s virtual Red Hat Summit event.

While discussing the company’s most recent quarterly financial numbers earlier this month, IBM CEO Arvind Krishna spoke to analysts and journalists about the “transformative power of hybrid cloud and AI,” describing hybrid cloud as a $1 trillion opportunity and noting that fewer than 25 percent of workloads are currently in the cloud.

“We are reshaping our future as a hybrid cloud platform and AI company,” Krishna said, noting that IBM’s strategy is platform-centric based on OpenShift with Linux, containers and Kubernetes at the foundation. “For us, the case for hybrid cloud is clear. Businesses have made massive investments in their IT infrastructure, and are dealing with specific constraints such as compliance, data sovereignty and latency needs in their operations. They need an environment that is not only hybrid but a hybrid platform that is flexible, secure and built from open-source innovation. This gives them a credible path to modernizing legacy systems with advanced cloud services and building cloud-native apps.”

Key to Spectrum Fusion is the ability to access and use data wherever it resides – in the datacenter, the cloud or the edge – with only a single copy. Spectrum Scale has many of the same capabilities, but it lacks features such as backup, restore and discovery, according to IBM’s Herzog.

“We not only can do primary storage, but we can back it up, we can restore it, we can archive it and we could do that not only for the existing data that you put into Spectrum Fusion, but the older data because Spectrum Fusion has a single global namespace and single file system that can traverse core, edge and cloud,” he says. “If there’s an older dataset or data repository there, we can almost absorb them. We also have included in Spectrum Fusion extensive discovery capability, so we can create metadata cataloging and indexing with this discovery technology integrated in the Spectrum Fusion.”

The single data copy capability is designed to strengthen data security and help organizations meet compliance requirements from government regulations such as the EU’s General Data Protection Regulation (GDPR), while the integration with IBM Spectrum Protect provides data protection. An API enables developers to interact with Spectrum Fusion and extends the discovery capability to Spectrum Scale and to Elastic Storage System, which Herzog calls the physical version of Spectrum Scale. Spectrum Fusion also works with IBM’s Cloud Object Storage service and any other S3 object storage, as well as with Dell EMC’s Isilon and NetApp NFS.

“We took elements of our current products – Spectrum Protect Plus is integrated into Fusion or elements of it, Spectrum Scale is in there and so is our Spectrum Discover,” Herzog says. “We container-natived them and then we fused them into one product, Spectrum Fusion. This is not a suite; this is one product [with] single management console.”

Spectrum Fusion will integrate with IBM Cloud Satellite, a hybrid cloud offering that can run IBM Cloud on third-party hardware. Cloud Satellite was introduced in 2020 and became generally available this year. It is part of a push by cloud providers and OEMs alike to offer their capabilities and technologies on both sides of the hybrid cloud fence – on premises and in the cloud. Amazon Web Services is doing this with Outposts, Google Cloud with Anthos and Microsoft Azure with Azure Arc. The integration of Cloud Satellite with Spectrum Fusion will enable organizations to manage cloud services in the datacenter, cloud or edge through a single management plane.

Spectrum Fusion also will integrate with Red Hat’s Advanced Cluster Management, which is aimed at managing multiple OpenShift clusters.

Spectrum Fusion will be released in two consumption models. The first, coming in the third quarter, will be a container-native hyperconverged infrastructure offering: a rack of x86 systems from IBM, along with storage and networking, preloaded with all the software, such as Kubernetes and the Red Hat offerings. Networking will run at 100 GbE, and the storage nodes will be all-flash, with no hard drives.

In early 2022, IBM will release an SDS version of Spectrum Fusion that can run on third-party hardware from the likes of Dell EMC, HPE and Lenovo. That’s similar to what IBM already does with Spectrum Scale and Spectrum Virtualize for Public Cloud.

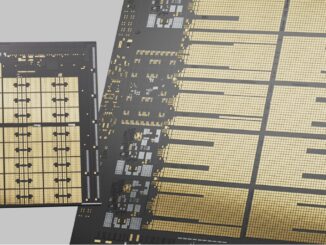

Along with Spectrum Fusion, IBM also is rolling out new and updated Elastic Storage Systems. The ESS 3200, the successor to the ESS 3000, is a 2U unit that offers throughput of up to 80 GB/sec, double that of its predecessor. It offers up to 367 TB of capacity per 2U node and supports up to eight 200 Gb/sec HDR InfiniBand ports or eight 100 Gb/sec Ethernet ports to drive high throughput and low latency.

The ESS 5000 has been upgraded to offer 10 percent more density, up to 15.2 PB. All ESS systems also provide automated containerized deployment capabilities with the latest version of Red Hat’s Ansible automation platform.
