As we well know by now, workloads at supercomputing sites large and small are changing with the introduction of machine learning and more complex applications that require both large and small files.

As noted a few months ago, the major HPC parallel file systems are having a tough time keeping pace with these changes, which means storage architects have had to work harder to juggle a growing number of medium and small files alongside the large ones. Luckily, there are options beyond hard disk. Flash widens those options, but it also adds cost. That is a particular problem for appliance makers, who want to pack in top performance and usability without pricing themselves so high that buyers turn to less fully integrated alternatives.

Few HPC-oriented storage makers know the ebbs and flows of these requirements better than Panasas, a long-standing appliance maker whose ActiveStor line is built on its own PanFS file system. In the last few weeks, that line of appliances was refreshed in both hardware and software to handle mixed workload demands.

As Curtis Anderson, a software architect at Panasas, tells The Next Platform, the mixed workload demands, coupled with the array of capabilities flash provides, make storage decision-making more complicated. On the more straightforward hardware side, his teams have worked to further decouple the hardware from the software and to deliver everything on industry standard hardware, either through Panasas’s own appliances or, in the future, through OEM partners. On the software side, the re-engineering around these challenges is worth describing.

PanFS 8 can now run on any properly configured and qualified storage hardware, which opens up possibilities for Lustre-based shops looking for ways around some of Lustre’s limitations for mixed workloads. It is not that handling such work on Lustre is impossible; it just takes serious tuning and optimization, which means added IT resources to keep it humming as complexity grows. For some HPC centers, it can take a full-time person or more to manage tens of petabytes. Anderson says the goal with PanFS 8 and the new decoupled ActiveStor Ultra (we’ll get to that hardware in a moment) is to make it simple without the tradeoffs and much cheaper in terms of admin costs, something all vendors of expensive NVMe and flash-based appliances keep touting so the up-front costs sting a bit less.

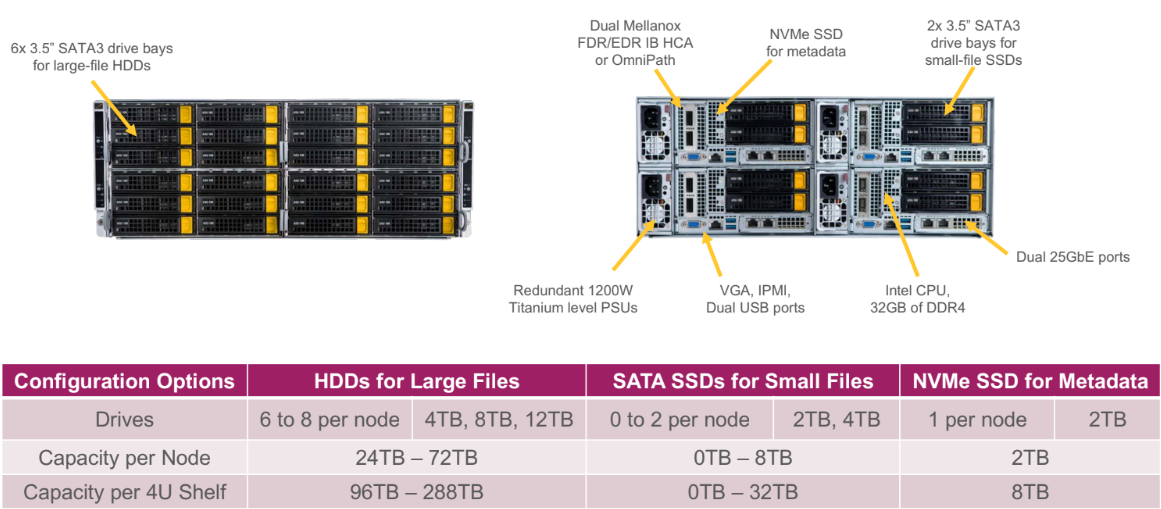

All HPC centers are going to have to start looking at storage costs in a new light. The workloads will demand it, and it’s not just machine learning that is driving the mixed workload problem: other areas such as genomics are also adding complexity and file size diversity. “We do well at large files because that’s the core of our parallel file system. We do well at medium and small files because the way we stripe files across nodes inherently spreads out the hot spots that might otherwise slow things down; everything gets roughly equally loaded,” Anderson explains. The interesting thing is that with NVMe, SATA SSD, and hard drive options, Panasas can put the small files on the SATA SSDs for maximum IOPS, place the large files on the bandwidth-friendly hard drives, and use low-latency NVMe to hold the metadata in a database.
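To make the tiering idea concrete, here is a minimal Python sketch of a size-based placement policy, assuming a simple rule: metadata goes to NVMe, files below a size cutoff go to SATA SSD, and everything else goes to hard disk. The `Tier`, `Item`, and `place` names and the 1 MiB `SMALL_FILE_THRESHOLD` are hypothetical illustrations, not PanFS internals; Panasas has not described its placement logic at this level of detail.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Media classes mentioned in the article."""
    NVME = "nvme"          # low-latency flash, used here for metadata
    SATA_SSD = "sata_ssd"  # high-IOPS flash, used here for small files
    HDD = "hdd"            # high-bandwidth disk, used here for large files


# Hypothetical cutoff chosen for illustration only; not a documented PanFS value.
SMALL_FILE_THRESHOLD = 1 << 20  # 1 MiB


@dataclass
class Item:
    """A hypothetical stored object: a file or a metadata record."""
    name: str
    size_bytes: int
    is_metadata: bool = False


def place(item: Item, threshold: int = SMALL_FILE_THRESHOLD) -> Tier:
    """Pick a tier from the item's kind and size (simple size-based policy)."""
    if item.is_metadata:
        return Tier.NVME
    if item.size_bytes < threshold:
        return Tier.SATA_SSD
    return Tier.HDD


if __name__ == "__main__":
    items = [
        Item("metadata-record", 4096, is_metadata=True),
        Item("sample_0001.jpg", 120_000),
        Item("checkpoint.h5", 50 * (1 << 30)),
    ]
    for it in items:
        print(f"{it.name:>16} -> {place(it).value}")
```

A production system would likely weigh more than size (access patterns, free capacity per tier, rebalancing), so treat this only as an illustration of the size split Anderson describes.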

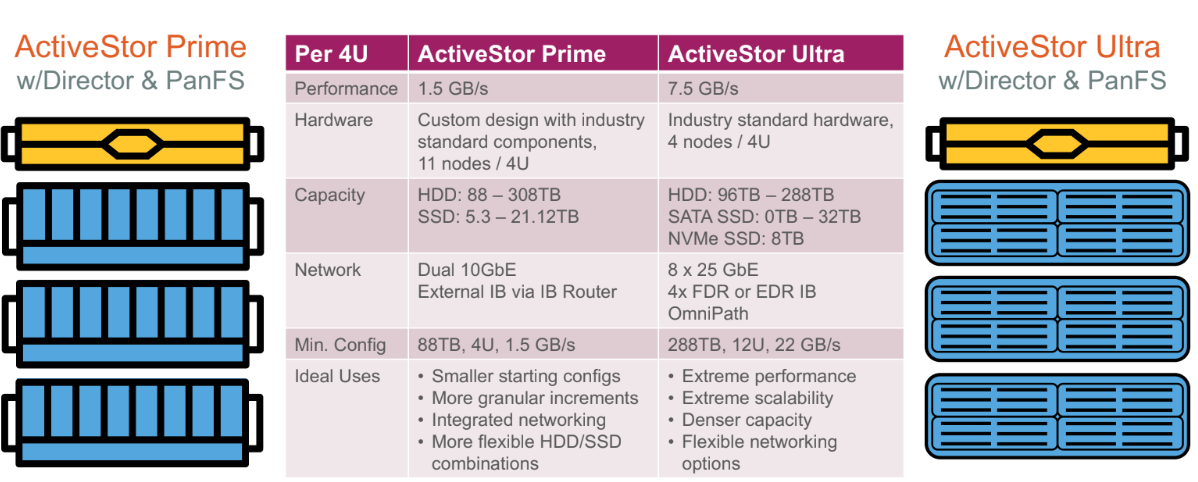

When Panasas re-architected for HPC with manageability, performance, and these increasingly important mixed workloads in mind, Anderson said they focused on three aspects: modularity, flexibility through separating the hardware from the software, and performance. “We have lagged with performance in the past, but with our current ActiveStor offering, we can get the full capability of the underlying hardware and reach our ‘hero number’ of a fully configured setup that provides 75 GB/s per rack.”

The past performance lag Anderson refers to came about because Panasas focused heavily on making its systems manageable and reliable, which comes with its own tradeoffs. The original ActiveStor had a custom hardware design with the director functionality integrated. Last year, Panasas decoupled the director from the enclosure, and now, with Ultra, the new director and the storage are separate and both run on industry standard hardware.

Going from proprietary to portable took some time, which explains why Panasas did not open up to OEMs earlier. “When the platform was first adopted it was treated as a true appliance with a lot of software customization to deal with the specific hardware pieces. It’s taken a lot of straightening and modularization to make this all portable,” Anderson adds.

With the refreshed hardware and PanFS 8, Anderson says Panasas can now further distinguish itself from Lustre and other file systems. “We have consistent performance regardless of complexity; there is no constant retuning. We go from dock to data in one hour versus days or weeks and there is no tuning as workloads change.” He says their largest installation, at 25 petabytes, requires one part-time admin, and one of the admins for a 20 petabyte array spends a quarter of a full-time position on it. These savings are harder to see, but over the long haul, Panasas argues, the real math on HPC storage costs is far more nuanced than the up-front investment.

“Up until now, HPC storage buyers had to make a tradeoff. If they wanted the highest performance levels, they sacrificed manageability and reliability, as that had to become more hands-on and IT resource intensive. On the other side, if you wanted that manageability and reliability, there were some performance sacrifices. Our goal was to make all three possible at the same time without the tradeoffs,” Anderson says, pointing to Panasas’s own history of tradeoffs across its product lines. In his view, everything finally comes together (while, ironically, being more disaggregated than ever) with ActiveStor Ultra.

The next step beyond Ultra will focus on super-low-latency applications with very different requirements from traditional HPC. Think, for instance, of inference, where all-flash will be necessary at scale. Of course, all-flash at that scale comes at an enormous cost that is hard to justify, a problem Panasas is working to solve in the near future.

There is much more about this, and about the general state of HPC storage, in this in-depth interview we recorded recently at the Supercomputing Conference (SC18) in Dallas.
