During the Intel AI Summit earlier this month, where the company demonstrated its initial processors for artificial intelligence training and inference workloads, Naveen Rao, corporate vice president and general manager of the Artificial Intelligence Products Group at Intel, spoke about the rapid pace of evolution in AI, a space that also includes machine learning and deep learning. The Next Platform took an in-depth look at the technical details Rao shared about the products. As noted in that story, Rao explained that the complexity of neural network models, measured by the number of parameters, is growing ten-fold every year, a rate unlike any other technology trend we have ever seen.
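To put a ten-fold yearly rate in perspective, the short Python sketch below simply compounds a parameter count forward by a constant factor each year. The 100-million-parameter starting point and the function name are hypothetical, chosen for illustration rather than taken from Intel, and real model growth will not follow a clean constant factor.

```python
def projected_parameters(initial: float, years: int, growth_per_year: float = 10.0) -> float:
    """Compound a parameter count forward by a fixed yearly growth factor.

    Assumption: growth is a constant multiplicative factor per year
    (10x by default, matching the rate Rao cited).
    """
    return initial * growth_per_year ** years


# Hypothetical starting point: a 100-million-parameter model.
start = 100e6
for year in range(4):
    print(f"year {year}: {projected_parameters(start, year):,.0f} parameters")
```

At that rate, a model grows a thousand-fold in three years, which gives a sense of the pressure on hardware that Rao was describing.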

For Intel and the myriad other tech vendors making inroads into the space, AI, including machine learning and deep learning, is already big business and promises to get bigger. Intel’s AI products are expected to generate more than $3.5 billion in revenue for the chip maker this year, according to Rao. But one of the tricks to continuing the momentum is keeping pace with fast-rising demand for innovation.

In a recent interview with The Next Platform before the AI Summit, two of the key executives overseeing the development of the company’s first-generation Neural Network Processors – NNP-T for training and NNP-I for inference – echoed Rao’s comments when talking about the speed of evolution in the AI space. The market is taking a turn, with more enterprises beginning to embrace the technologies and incorporate them into their business plans, and Intel is aiming to meet accelerating demand not only with its NNPs but also through its Xeon server chips and its low-power Movidius processors, which can help bring AI and deep learning capabilities out to the fast-growing edge.

Gadi Singer and Carey Kloss, vice presidents and general managers of AI architectures for inference and training, respectively, also talked about the challenges the industry faces at a time when model sizes continue to grow and organizations are starting to roll out proofs of concept (POCs) with an eye toward wider deployments. The landscape has changed even since Intel bought startup Nervana Systems three years ago to challenge Nvidia and its GPU accelerators in the AI and deep learning space.

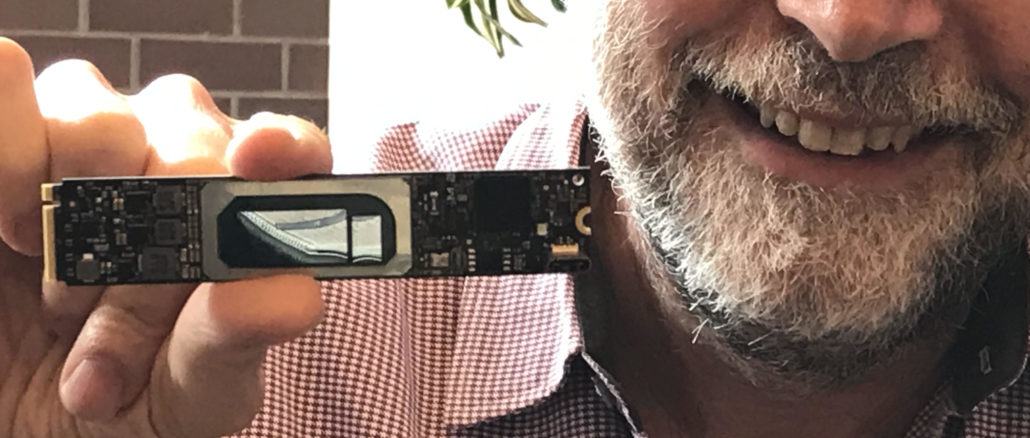

“What we see happening in the transition to now and toward 2020 is what I call the coming of age of deep learning,” Singer, pictured below with an NNP-I chip, tells The Next Platform. “This is where the capabilities have been better understood, where many companies are starting to understand how this might be applicable to their particular line of business. There’s a whole new generation of data scientists and other professionals who understand the field, there’s an environment for developing new algorithms and new topologies for the deep learning frameworks. All those frameworks like TensorFlow and MXNet were not really in existence in 2015. It was all hand-tooled and so on. Now there are environments, there is a large cadre of people who are trained on that, there’s a better understanding of the mapping, there’s a better understanding of the data because it all depends on who is using the data and how to use the data.”

<<Intel Singer chip>>

The last five years were spent digesting what the new technology can do and building the foundation for using it. Now experimentation and deployment are merging, he says. Hyperscale cloud service providers (CSPs) like Google, Facebook, Amazon and others are already using deep learning and AI, and now enterprises are getting into the game. Intel sees an opportunity in 2020 and 2021 to create the capabilities and infrastructure to scale the technologies.

“Now that it has gotten to the stage where companies understand how they can use it for their line of business, it’s going to be about total cost of ownership, ease of use, how to integrate purpose-built acceleration together with more general applications,” Singer says. “In most of those cases, companies have deep expertise in their domain and they’re bringing in deep learning capabilities. It’s not really AI applications as it is AI-enriched applications that are doing whatever it was those companies were doing before but doing it in an AI-enriched way. What Intel is doing, both from the hardware side and the software side, is creating this foundation for scale.”

That includes not only the NNP, Xeon and Movidius products, but also technologies such as Optane DC persistent memory, 3D XPoint non-volatile memory and software, all of which work together and can help drive deep learning into a broader swath of the industry, Kloss says.

“It’s still the early days,” he says. “The big CSPs have deployed deep learning at scale. The rest of the industry is catching up. We’re at the knee of the curve. Over [the] next three years, every company will start deploying deep learning however it serves their purpose. But they’re not there just yet, so what we’re trying to do is to make sure they have the options available and we can help them deploy deep learning in their businesses. More importantly, we offer a broad range of products because as you’re first entering deep learning, you might do some amount of proof-of-concept work. You don’t need a datacenter filled with NNP-Ts for that. You can just use Xeons, or maybe use some NNP-Ts in the cloud. Once you really get your data sorted out and you figure out your models, then maybe you deploy a bunch of NNP-Ts in order to train your models at lower power and lower cost because it’s part of your datacenter.”

It will be a similar approach when deploying inference, Kloss says. That means initially relying on Xeons; once inference becomes an important part of the business and enterprises look to save money and scale their capabilities, they can add NNP-I chips into the mix.

Balance, Scale And Challenges

Intel took a clean-sheet approach to designing the NNPs, and balance and scale were key factors, Kloss says. That includes balancing bandwidth on and off the die with memory bandwidth and the right amount of static RAM (SRAM). Getting that balance right can mean 1.5 to two times better performance.

On the scaling side, training runs no longer happen on a single GPU. Training now runs on a chassis with at least eight GPUs, and after the NNP-T chip had been in Intel’s labs for only a few months, the company was already running eight- and 16-node scaling runs. Intel also incorporated a feature to help future-proof the chip, enabling it to move data straight from the compute on one die to the compute on another die with low latency and high bandwidth, Kloss says.

Such capabilities are going to be critical in this rapidly evolving field, he says. When Nervana launched in 2014, single-chip performance was what mattered, and the focus was on single-digit teraflops on a die. Now there are hundreds of teraflops on a die and even that isn’t enough, according to Kloss. Full chassis are needed to keep up.

“Just five years ago, you were training a neural [network] on single-digit teraflops on a single card and the space has moved so fast that now there are experiments where people are putting a thousand cards together to see how fast they can train something and it still takes an hour to train,” Kloss says. “It’s been an exponential growth. … In general, it’s going to get harder. It’s going to be harder to continue scaling at this kind of pace.”

Looking forward, there are going to be some key challenges Intel and the rest of the industry will have to address as parameter counts increase and enterprise adoption expands, according to Kloss. At the same time, the trend will be toward continuous learning rather than separate inference and training, Singer says. One of the challenges is the size of the die, an area that the Open Compute Project’s OAM (OCP Accelerator Module) specification will help address, Kloss says. There are also growing limitations in SerDes (serializer/deserializer) connectivity and in power consumption.

“You can only fit a die in there that’s a particular size,” he says. “It’s hard to go more than a 70x70mm package – which is enormous, by the way. The thing’s the size of a coaster. You can’t just keep adding die area to this thing. The power budgets are going up; we’re seeing higher power budgets coming down the pike, so instead of adding die area people will start adding power to the chassis. But fitting high-powered parts into the current racks is a problem.”

Regarding high-speed SerDes, Kloss says the circuitry “basically fills the edges of the die, but you can’t go more than two deep or so on SerDes unless it’s a dedicated switch chip. [SerDes] links everything – PCI-Express is made up of SerDes, 100 Gb/sec Ethernet is made up of SerDes. Each lane can only go so fast, and we’re starting to hit the limits of how fast you can go over a reasonable link. So over the next couple of years we’re going to start hitting limits in terms of what the SerDes can do on the cards and how fast they can go. We’re going to hit limits on how big a chip we can create, even if we could technologically go further. And then we’re going to hit power limits in terms of chassis power, rack power.”
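Kloss’s point about lanes can be made concrete with simple arithmetic: a serial link’s usable bandwidth is roughly the number of lanes times the per-lane rate, less any line-encoding overhead. The Python sketch below uses two standard configurations, 100 Gb/sec Ethernet built from four 25 Gb/sec lanes and PCI-Express 3.0 x16 at 8 GT/s per lane with 128b/130b encoding, purely as illustrations. The function name is hypothetical, and the model ignores protocol overhead above the encoding layer.

```python
def link_bandwidth_gbps(lanes: int, per_lane_gbps: float, encoding_efficiency: float = 1.0) -> float:
    """Approximate usable bandwidth of a multi-lane SerDes link, in Gb/sec.

    Assumption: bandwidth scales linearly with lane count, and the only
    overhead modeled is line encoding (e.g. 128b/130b for PCIe 3.0).
    """
    return lanes * per_lane_gbps * encoding_efficiency


# 100 Gb/sec Ethernet as four 25 Gb/sec lanes (one common configuration).
eth = link_bandwidth_gbps(4, 25.0)
# PCI-Express 3.0 x16: 8 GT/s per lane, 128b/130b encoding.
pcie3_x16 = link_bandwidth_gbps(16, 8.0, 128 / 130)
print(f"100GbE: {eth:.0f} Gb/sec, PCIe 3.0 x16: {pcie3_x16:.1f} Gb/sec (~{pcie3_x16 / 8:.2f} GB/sec)")
```

Raising link bandwidth therefore means either adding lanes, which consumes more of the die edge, or pushing each lane faster, which runs into the signaling limits Kloss describes.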

The next designs will have to take all of this into account, Kloss says, adding that “the industry is going to hit these kinds of limitations, [so] it is going to be more and more important for total cost of ownership and for performance-per-chip to optimize for power.”

At the same time, such efficiency is going to have to come in future-proofed products that can address workloads now and those coming down the line, Singer says. That is where Intel’s broad base of capabilities – not only with the NNPs but also with its Xeon CPUs, Movidius chips, software and other technologies – will become important.

“By us creating a broader base and looking at the way to create the right engines but connect them in a way that can support multiple usages, we’re also creating headroom for usages that have not been invented yet but by 2021 might be the most common usages around,” he says. “That’s the pace of this industry.”
