When Intel begins volume shipments of its “Cascade Lake” Xeons, it will mark a turning point in the server space. But not for processors – for memory. The Cascade Lake Xeon SP will be the first chip to support Intel’s Optane DC Persistent Memory, a product that will pioneer a new memory tier occupying the performance and capacity gap between DRAM and SSDs.

Like Intel’s Optane SSDs, Optane DC Persistent Memory Modules (PMM) are built on 3D XPoint, a non-volatile memory technology co-developed by Intel and Micron. Optane PMM is designed to offer performance reasonably close to that of DRAM, along with non-volatility. And since 3D XPoint is about 10 times denser than DRAM, it promises to be a good deal less expensive. As a result, customers should be able to outfit their servers with multiple terabytes of Optane DC memory without breaking the budget.

At least that’s the hope. The value of the Optane DC PMMs to customers will depend on their price-performance for the applications they actually run. At this point, we don’t yet know the cost of any of the modules being offered, which will initially ship in 128 GB, 256 GB and 512 GB capacities. We also have only a rough idea of the performance. According to Intel, 3D XPoint latency is about 10 times higher than that of DRAM and about 1,000 times lower than that of NAND SSDs. But those numbers only hint at the performance once the technology is wrapped inside a DIMM that plugs into a memory bus.

Using internal benchmarking, Intel claims Optane DC can deliver 11 times the write performance and 9 times the read performance for Spark SQL workloads, compared to a DRAM-only system. Third-party users who have received early access to the technology are no doubt doing their own tests. As we reported last August, Google is developing VMs that tap into Optane DC to speed up SAP HANA. Those results would be a good indicator of the product’s potential to accelerate other in-memory databases.

Recently, a team of a dozen researchers at the University of California at San Diego (UCSD) published the results of a study that measured the comparative performance of Optane DC memory using an array of memory- and I/O-sensitive benchmarks.

They exercised the Optane hardware in two modes: as primary main memory, ignoring 3D XPoint’s non-volatile nature, and as an in-memory file store, which takes advantage of its ability to retain its contents when power is switched off. The researchers claimed this study represented “the first in-depth, scholarly, performance review of Intel’s Optane DC PMM, exploring its capabilities as a main memory device, and as persistent, byte-addressable memory exposed to user-space applications.”

The hardware setup was straightforward: a single dual-socket server powered by two 24-core Intel Cascade Lake SP processors running at 2.4 GHz. Main memory consisted of 384 GB of DRAM and 3 TB of Optane DC memory modules (256 GB per DIMM).

Using Optane as straight memory only makes sense for applications that require a larger data footprint than can be supplied economically by DRAM. In this scenario, the DRAM itself is relegated to the role of cache, with the idea that its superior performance will hide the lower bandwidth and higher latency of the Optane modules.
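As a rough way to see why a DRAM cache can hide much of Optane's latency, the sketch below uses the textbook weighted-average model of a two-level memory. It is a simplified illustration, not the study's methodology: the hit rate is a free parameter, and the 10x latency ratio used in the demo is only Intel's approximate figure for the 3D XPoint media quoted earlier, not a measured DIMM latency.

```python
def effective_latency(hit_rate: float, dram_latency: float, optane_latency: float) -> float:
    """Average access latency when DRAM acts as a cache in front of Optane.

    Simplified model (an assumption, not the study's): hits are served at DRAM
    latency, misses at Optane latency. It ignores cache-fill and tag-check costs.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * dram_latency + (1.0 - hit_rate) * optane_latency


if __name__ == "__main__":
    # Latencies in relative units: DRAM = 1, Optane = 10 (Intel's rough media ratio).
    for hit_rate in (0.5, 0.9, 0.99):
        avg = effective_latency(hit_rate, 1.0, 10.0)
        print(f"hit rate {hit_rate:.2f}: {avg:.2f}x DRAM latency")
```

Even at a 90 percent hit rate, this model puts average latency at roughly twice DRAM's rather than ten times; real behavior also depends on access patterns and cache-fill costs the model leaves out.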

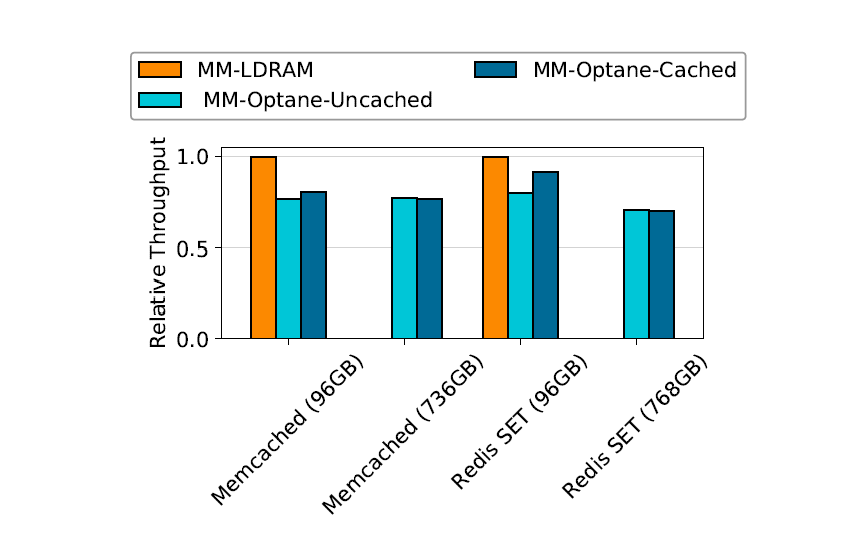

As it turns out, that approach works fairly well, at least for Memcached and Redis, two common in-memory key-value stores. Compared to a DRAM-only setup, throughput decreased by 8.6 percent for Memcached and 19.2 percent for Redis when Optane DC modules were used as main memory, with DRAM as a cache. If caching was disabled, that is, if the DRAM was not used to mask the relative slowness of Optane memory, throughput dropped by 20.1 and 23 percent, respectively. The chart below illustrates the comparative performance differences.
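The reported percentages can be converted into throughput retained relative to the DRAM-only baseline, which makes it easier to see how much the DRAM cache helped each application. This is plain arithmetic on the figures quoted above; the dictionary layout and function names are illustrative and not taken from the study.

```python
from typing import Dict, Tuple

# Throughput drops versus the DRAM-only baseline, in percent, as reported above.
REPORTED_DROPS: Dict[str, Dict[str, float]] = {
    "Memcached": {"dram_cache": 8.6, "no_cache": 20.1},
    "Redis": {"dram_cache": 19.2, "no_cache": 23.0},
}


def retained(drop_pct: float) -> float:
    """Fraction of DRAM-only throughput left after a percentage drop."""
    return 1.0 - drop_pct / 100.0


def summarize(drops: Dict[str, Dict[str, float]]) -> Dict[str, Tuple[float, float, float]]:
    """Per app: (retained with DRAM cache, retained without, points recovered by the cache)."""
    rows = {}
    for app, d in drops.items():
        cached = retained(d["dram_cache"])
        uncached = retained(d["no_cache"])
        rows[app] = (cached, uncached, (cached - uncached) * 100.0)
    return rows


if __name__ == "__main__":
    for app, (cached, uncached, recovered) in summarize(REPORTED_DROPS).items():
        print(f"{app}: {cached:.1%} with cache, {uncached:.1%} without, "
              f"+{recovered:.1f} points from DRAM caching")
```

By this arithmetic, the DRAM cache recovered about 11.5 percentage points of baseline throughput for Memcached but only about 3.8 points for Redis.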

The point of these results is not to demonstrate that DRAM is faster than Optane (everyone already knew that) but rather to determine how close Optane DC can come to conventional memory in performance. At a system level, the larger memory footprint provided by Optane DIMMs would almost certainly yield better overall performance for applications dealing with large datasets, since in a less capacious DRAM environment, data would periodically have to be pulled into main memory from much slower storage devices.

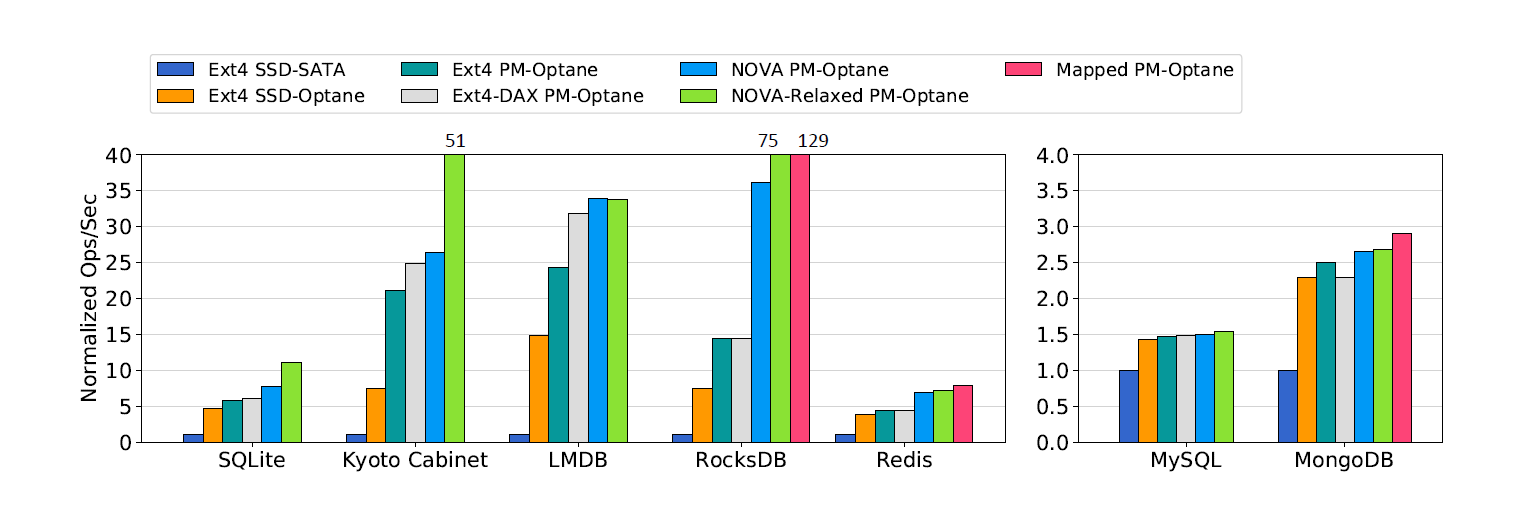

Speaking of which, the researchers also benchmarked how the product performed as persistent storage. In this scenario, the Optane DC modules are treated as a storage block device located in memory, while the DRAM fulfills its conventional role as volatile memory. One of the challenges here is that file systems typically do not support memory-based I/O, so options are somewhat limited.

In this case, the researchers used Ext4 and XFS, two disk-based file systems that conveniently also provide a direct access (DAX) mode that can take advantage of non-volatile memory modules. They also used NOVA, a file system specifically designed for byte-addressable persistent memory. NOVA enjoyed something of a home-field advantage here, since it was developed at UCSD in the Non-Volatile Systems Laboratory, the same place this Optane study was conducted.

For these tests, the researchers compared the relative performance of Optane memory against a SATA SSD and an Optane SSD, as well as across various file system configurations, using seven database applications. As illustrated by the chart below, in most cases, moving from SSD hardware to Optane DC PMMs provided a substantial performance boost. For the file systems optimized for direct memory access, results were more mixed, with NOVA providing the most consistent performance boosts. The NOVA-Relaxed variant (which allows in-place file page writes to improve write performance for applications that do not require data consistency on every write) delivered the best performance of the various file system setups.
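To make the NOVA-Relaxed trade-off concrete, here is a toy model contrasting copy-on-write page updates, a common way to keep file data consistent, with in-place page writes when power fails partway through a write. It is a hypothetical illustration: the class name, the 8-byte pages, and the `crash_after` parameter are invented for this sketch, and it does not reflect NOVA's actual on-media layout or code. It only shows why in-place writes can leave a torn page while copy-on-write does not.

```python
from typing import Dict, Optional

PAGE_SIZE = 8  # tiny pages keep the example readable


class ToyPageStore:
    """Hypothetical model of one file's data pages; not NOVA's real design."""

    def __init__(self, relaxed: bool) -> None:
        self.relaxed = relaxed
        self.pages: Dict[int, bytearray] = {0: bytearray(b"A" * PAGE_SIZE)}  # physical pages
        self.index: Dict[int, int] = {0: 0}  # logical page number -> physical page id
        self._next_id = 1

    def read(self, logical: int) -> bytes:
        return bytes(self.pages[self.index[logical]])

    def write(self, logical: int, data: bytes, crash_after: Optional[int] = None) -> None:
        """Overwrite one logical page; crash_after simulates power loss after N bytes."""
        if len(data) != PAGE_SIZE:
            raise ValueError("writes must cover exactly one page")
        n = PAGE_SIZE if crash_after is None else crash_after
        if self.relaxed:
            # In-place: bytes go straight into the live page, so a crash leaves it torn.
            self.pages[self.index[logical]][:n] = data[:n]
            return
        # Copy-on-write: fill a fresh page first (the old one would be reclaimed later)...
        new_id = self._next_id
        self._next_id += 1
        new_page = bytearray(PAGE_SIZE)
        new_page[:n] = data[:n]
        self.pages[new_id] = new_page
        if crash_after is not None:
            return  # crashed before the switch: readers still see the old page
        # ...then publish it with one index update (a real file system makes this atomic).
        self.index[logical] = new_id


if __name__ == "__main__":
    for relaxed in (False, True):
        store = ToyPageStore(relaxed)
        store.write(0, b"B" * PAGE_SIZE, crash_after=3)
        print("in-place" if relaxed else "copy-on-write", store.read(0))
```

The in-place path does less work per write (no new page, no index update), which is the intuition behind the relaxed mode's speed; the price is that a crash can expose a partially written page.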

However, the best results were obtained by rewriting three of the applications (RocksDB, Redis, and MongoDB) to access memory-mapped Optane DC directly with loads and stores instead of going through the file system. This requires more effort, since the consistency guarantees normally provided by the file system have to be re-implemented in the application itself. But as the researchers pointed out, the potential for performance gains is larger.
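The extra work involved can be sketched roughly: once an application maps persistent memory directly, it must order and flush its own writes so that a crash never exposes a half-written update. This minimal Python sketch assumes a hypothetical single-record region and sets a commit flag only after the payload has been flushed. Python's `mmap.flush()` (an `msync` call on Unix) stands in for the cache-line flush and fence instructions that real persistent-memory code would typically issue through a library such as PMDK's libpmem; the function names and record layout are illustrative and not from the study.

```python
import mmap
import os
import struct
import tempfile
from typing import Optional

# Hypothetical record layout: uint32 payload length, uint8 commit flag, then payload bytes.
HEADER = struct.Struct("<IB")
FLAG_OFFSET = 4
REGION_SIZE = 4096


def create_region(path: str) -> None:
    """Create a zero-filled file standing in for a persistent-memory region."""
    with open(path, "wb") as f:
        f.truncate(REGION_SIZE)


def write_record(path: str, payload: bytes) -> None:
    """Replace the record, flushing in an order that makes partial updates detectable."""
    if HEADER.size + len(payload) > REGION_SIZE:
        raise ValueError("payload does not fit in the region")
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), REGION_SIZE) as mm:
        mm[FLAG_OFFSET] = 0  # 1. invalidate the old record first
        mm.flush()
        struct.pack_into("<I", mm, 0, len(payload))
        mm[HEADER.size:HEADER.size + len(payload)] = payload
        mm.flush()  # 2. persist length and payload
        mm[FLAG_OFFSET] = 1  # 3. only now mark the record as committed
        mm.flush()


def read_record(path: str) -> Optional[bytes]:
    """Return the committed payload, or None if no complete record is present."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), REGION_SIZE, access=mmap.ACCESS_READ) as mm:
        length, committed = HEADER.unpack_from(mm, 0)
        if committed != 1:
            return None
        return mm[HEADER.size:HEADER.size + length]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        region = os.path.join(tmp, "region.bin")
        create_region(region)
        print(read_record(region))
        write_record(region, b"key=value")
        print(read_record(region))
```

A crash between steps 1 and 3 leaves the flag cleared, so a reader sees no record rather than a torn one; production code would usually keep the previous version recoverable as well, for example with a log or a second slot.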

The storage benchmark results clearly demonstrated the superiority of Optane memory over SSDs, even Optane SSDs, which have demonstrated their own advantages. The results also reflect the advantage of optimizing the file system software for a persistent memory environment. In general, greater software optimization yielded higher performance, which makes sense, but the magnitude of those performance increases was inconsistent across applications.

The bottom line is that for applications that require a larger memory footprint than can be supplied by DRAM, Optane DC has clear potential, with cost becoming a deciding factor. The situation is more complicated when using Optane DC as an in-memory storage device replacement, since results appear to be more sensitive to application type and the degree of software optimization that the customer is willing to pursue.

The study, which can be accessed here, is something of a work in progress. The current version omits the basic performance characterization of the Optane DC hardware, which presumably will include specific bandwidth and latency numbers rather than only comparative measurements. The researchers say these results will be added after the Optane DC PMMs become generally available.
