The one thing that AMD’s return to the CPU market and its more aggressive moves in the GPU compute arena, as well as Intel’s plan to create a line of discrete Xe GPUs that can be used as companions to its Xeon processors, have done is push Nvidia and Arm closer together.

Arm is the chip development arm that in 1990 was spun out of British workstation maker Acorn Computers, which created its own Acorn RISC Machine processor, an architecture that, significantly for client computing, was chosen by Apple for its Newton handheld computer project. Over the years, Arm has licensed its eponymous RISC architecture to others and also collected royalties on the devices that they make in exchange for doing a lot of the grunt work in chip design as well as ensuring software compatibility and instruction set purity across its licensees.

This business, among other factors, is how and why Arm has become the largest semiconductor IP peddler in the world, with $1.61 billion in sales in 2018. Arm is everywhere in mobile computing, and this is why Japanese conglomerate SoftBank paid $32 billion for the chip designer three years ago. With anywhere from hundreds of billions to a trillion devices plugged into the Internet at some point in the coming decade, depending on who you ask, and a very large portion of them expected to use the Arm architecture, it seemed like a pretty safe bet that Arm was going to make a lot of money.

Getting Arm’s architecture into servers has been more problematic. The reasons for this are myriad, and we are not going to get into the whole litany. One issue is the very way that Arm licenses its architecture and makes its money, which is great but which has relied on other chip makers, with much shallower pockets and less muscle than Arm, never mind AMD or Intel, to extend it for server platforms with features like threading or memory controllers or peripheral controllers. The software stack took too long to mature, although we are there now with Linux and probably with Windows Server (only Microsoft knows for sure on that last bit). And despite it all, the Arm collective has shown how hard it is to sustain the effort to create a new server chip architecture, with a multiple generation roadmap, that takes on Intel’s hegemony in the datacenter. That is doubly difficult with an ascending AMD that has actually gotten its X86 products and roadmap together with the Epyc family, which launched in 2017 with the “Naples” processors and which has been substantially improved with the “Rome” chips this year.

All of this is background for the real news. And that is that Nvidia, which definitely has a stake in helping Arm server chips be fully functioning peers to X86 and Power processors, is doing something about it. Specifically, the company is making a few important Arm-related announcements at the SC19 supercomputing conference in Denver this week.

The first thing is that Nvidia is making good on the promise it made this summer to make Arm a peer with X86 and Power with regard to the entire Nvidia software stack, including the full breadth of the CUDA programming environment with its software development kit and its libraries for accelerating HPC and AI applications. Ian Buck, vice president and general manager of accelerated computing at Nvidia, tells The Next Platform that most of the libraries for HPC and AI are actually available in the first beta of the Arm distribution of CUDA, although there are still a few that need some work.

As we pointed out this summer, this CUDA-X stack, as it is now called, may have started out as a bunch of accelerated math libraries, but now it comprises tens of millions of lines of code and is on the same order of magnitude in that regard as a basic operating system. So moving that stack and testing all the possible different features in the host is not trivial.

Last month, ahead of the CUDA on Arm launch here at SC19, Nvidia gave it out to a number of key HPC centers that are at the forefront of Arm in HPC, notably RIKEN in Japan, Oak Ridge National Laboratory in the United States, and the University of Bristol in the United Kingdom. They have been working on porting some of their codes to run in accelerated mode on Arm-based systems using the CUDA stack. In fact, of the 630 applications that have been accelerated already using X86 or Power systems as hosts, Buck says that 30 of them have already been ported to use Arm hosts, which is not bad at all considering that it was pre-beta software that the labs were using. This includes GROMACS, LAMMPS, MILC, NAMD, Quantum Espresso, and Relion, just to name a few. The testing of the Arm ports was done in conjunction with key hardware partners that make Arm processors (Marvell, Fujitsu, and Ampere are the ones that matter, with maybe HiSilicon in China, which was not mentioned), with those that make Arm servers (such as Cray, Hewlett Packard Enterprise, and Fujitsu), and with those that make Linux on Arm distributions (with Red Hat, SUSE Linux, and Canonical being the important ones).

“Our experience is that for most of these applications, it is just a matter of doing a recompile of the code on the new host and it runs,” explains Buck. This stands to reason since a lot of the code in a hybrid CPU-GPU system has, by definition, been ported to actually run on the Tesla GPU accelerators in the box. “And as long as they are not using some sort of bespoke library that only exists in the ecosystem out of the control of the X86 platform, it has been working fine. And the performance has been good. We haven’t released performance numbers, but it is comparable to what we [have] seen on Intel Xeon platforms. And that makes sense since so many of these applications get the bulk of their performance from the GPUs anyway, and the ThunderX2, which most of these centers have, is performing well because its memory system is good and its PCI-Express connectivity is good.”
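Buck’s caveat about bespoke libraries amounts to a simple pre-port check: does every library an application links against have a build for the new host architecture? Here is a minimal sketch of that check in Python. The dependency table, library names, and helper functions are all hypothetical and are only there to illustrate the reasoning; this is not an Nvidia tool or an inventory of real libraries.

```python
import platform

# Hypothetical dependency table: which host architectures each library ships for.
# The names and entries are illustrative assumptions, not a real inventory.
DEPENDENCIES = {
    "accelerated_math": {"x86_64", "ppc64le", "aarch64"},
    "vendor_fft": {"x86_64"},  # stands in for an X86-only "bespoke" library
}


def normalize_arch(machine):
    """Map common platform.machine() spellings onto one name per architecture."""
    aliases = {"amd64": "x86_64", "arm64": "aarch64"}
    name = machine.lower()
    return aliases.get(name, name)


def blockers(deps, arch):
    """Return the sorted names of libraries that have no build for `arch`."""
    return sorted(name for name, archs in deps.items() if arch not in archs)


if __name__ == "__main__":
    host = normalize_arch(platform.machine())
    # An empty list suggests a plain recompile on this host is worth trying.
    print(host, blockers(DEPENDENCIES, host))
```

With this made-up table, an Arm host would flag only the X86-only library, which is the one case where a simple recompile would not be enough.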

Although Nvidia did not say this, at some point, this CUDA-X stack on Arm will probably be made available on those Cray Storm CS500 systems that some of the same HPC centers mentioned above are getting, which are equipped with the A64FX Arm processor that Fujitsu has designed for RIKEN’s “Fugaku” exascale system. Cray, of course, announced that partnership with Fujitsu and RIKEN, Oak Ridge, and Bristol ahead of SC19, and said that it was not planning to make the integrated Tofu D interconnect available in the CS500 clusters with the A64FX iron. And that means that the single PCI-Express 4.0 slot on the A64FX processor is going to be contended for by both networking and accelerators, or someone is going to have to create a Tofu D to InfiniBand or Ethernet bridge to accelerate this server chip. A Tofu D to NVLink bridge would be even better. . . . But perhaps this is just a perfect use case for PCI-Express switching with disaggregation of accelerators and network interfaces and dynamic composition with a fabric layer, such as what GigaIO is doing.

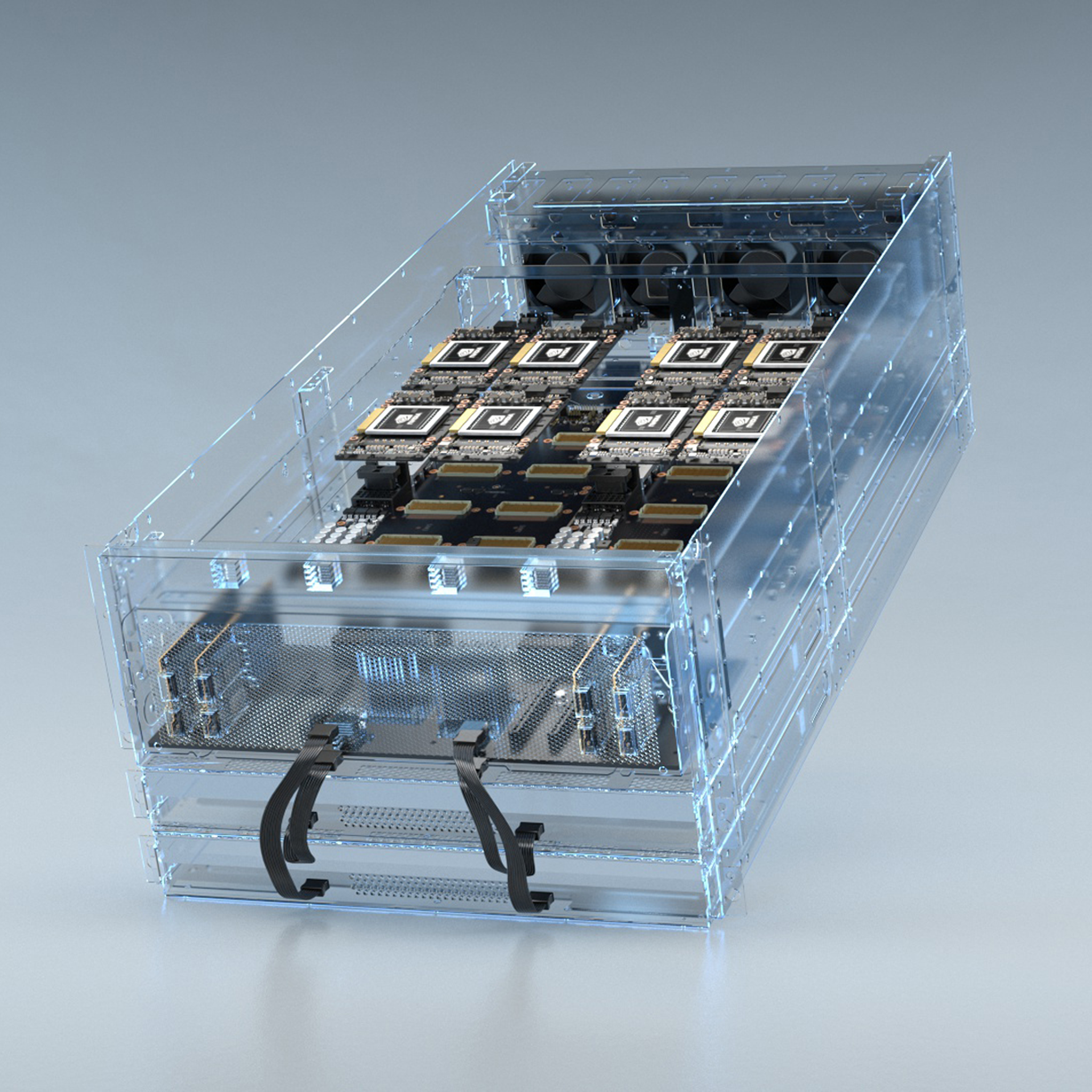

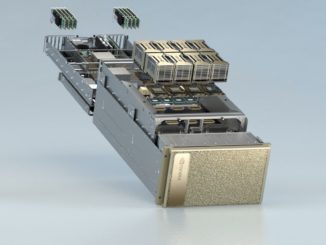

That’s not Nvidia’s concern today, though. What Nvidia does want to do is make it easier for any Arm processor plugged into any server design to plug into a complex of GPU accelerators, and this is being accomplished with a new reference design dubbed EBAC – short for Everything But A CPU – that Nvidia is making available and that is shown below:

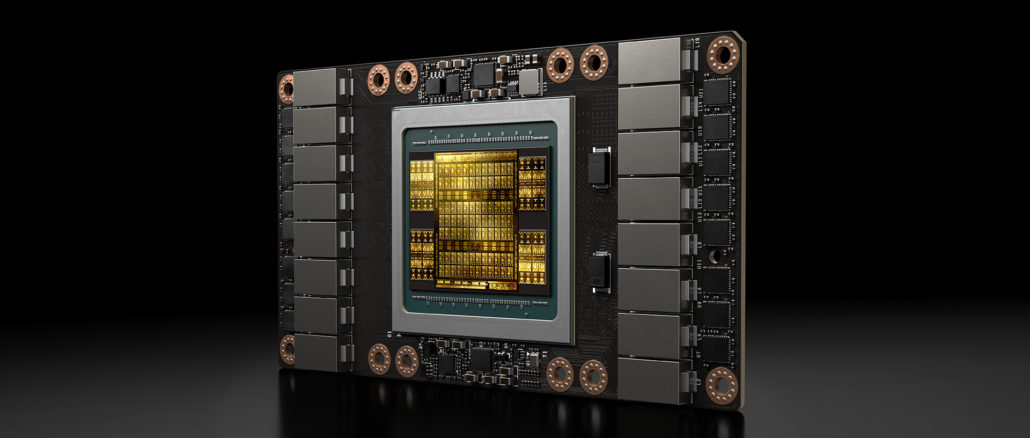

The EBAC design has a modified GPU tray from the hyperscale HGX system design, which includes eight “Volta” Tesla V100 accelerators with 32 GB of HBM2 memory on each. The GPUs are cross-connected by NVLink so they can share data and memory atomics across those links, and the tray of GPUs also has what amounts to an I/O mezzanine card on the front that has four ConnectX-5 network interface cards running at 100 Gb/sec from Mellanox Technologies (which Nvidia is in the process of buying) and four PCI-Express Mini SAS HD connectors that can lash any Arm server to this I/O and GPU compute complex. In the image above, it looks like a quad of two-socket “Mustang” ThunderX2 system boards, in a pair of 1U rack servers, would be ganged up with the Tesla Volta accelerators. Presumably there is a PCI-Express switch chip complex within the EBAC system to link all of this together, even if it is not, strictly speaking, composable.
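For a sense of scale, the aggregate figures for the EBAC tray follow directly from the component counts given above. The sketch below just does that arithmetic in Python. The function and variable names are our own, and the network number is a peak line rate that assumes all four cards run flat out and ignores protocol overhead.

```python
# Component counts and per-device figures as described for the EBAC tray.
GPUS = 8                 # Tesla V100 accelerators
HBM2_PER_GPU_GB = 32     # HBM2 capacity on each V100, in GB
NICS = 4                 # ConnectX-5 network interface cards
NIC_GBITS_PER_SEC = 100  # line rate of each card, in Gb/sec


def ebac_totals(gpus, hbm2_per_gpu_gb, nics, nic_gbits_per_sec):
    """Return aggregate HBM2 capacity and peak network bandwidth for the tray."""
    network_gbits = nics * nic_gbits_per_sec
    return {
        "hbm2_gb": gpus * hbm2_per_gpu_gb,
        "network_gbits_per_sec": network_gbits,
        "network_gbytes_per_sec": network_gbits / 8,  # 8 bits per byte
    }


if __name__ == "__main__":
    print(ebac_totals(GPUS, HBM2_PER_GPU_GB, NICS, NIC_GBITS_PER_SEC))
```

That works out to 256 GB of HBM2 memory across the eight GPUs and 400 Gb/sec, or 50 GB/sec, of peak network bandwidth across the four cards.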

There is probably no reason it could not be made composable, or extended to support an A64FX complex. We shall see. If anyone needs to build composability into its systems, now that we think about it, it is Nvidia.
