The edge is getting a lot of attention these days, given the fast-growing amount of data that is being generated by the proliferation of devices and systems located outside of the traditional core datacenter. It’d be difficult to find an OEM or component maker that isn’t stretching their product portfolios to include the edge and investing a lot of money and effort to establish their presence out there. Hewlett Packard Enterprise President and CEO Antonio Neri last year said his company was investing $4 billion over four years to develop and expand its capabilities in such areas as the internet of things (IoT), artificial intelligence (AI), machine learning and distributed computing, all keys to addressing what he calls the Intelligent Edge.

Despite all the effort and talk around edge computing, how it will play out and what it will eventually look like remains a work in progress. Enabling technologies like 5G networking and the Gen-Z interconnect are still on the horizon, for example. Still, it's clear that AI, machine learning and analytics will have to be coupled with the right amounts of compute and storage. That pairing is needed not only to collect, process and store data as close as possible to the devices creating it, but also to sort through that data and decide what is relevant, what isn't, and what needs to be sent to a datacenter or cloud.

And if it's going to scale, the infrastructure at the edge will have to be built on industry-standard equipment, according to Robert Hormuth, CTO for Dell EMC's Server and Infrastructure Systems unit.

“The big thing about this to make it scale has got to be commercial, off-the-shelf technology,” Hormuth tells The Next Platform. “If we’re not going to adopt standard compute, whether it be in rack-mount servers, tower servers or modular servers, something at that scale, we’re going to just continue to live in the world of embedded computing, where everything is a one-off special unicorn, special rainbow, and you never get scale. Can we do all this with traditional embedded computing? Sure. It’s all a one-off, one-size-fits everything … but it doesn’t get scale, which doesn’t help customers scale. Are we going to see a big wave of kind of standard, traditional computational gear in many MDCs [micro datacenters]? Yes, I think we will end up with some other at-scale standardization or shorty chasses or shorty racks or things that are very close, but they’ve got to be at scale for any of this to work or else we’re making all our customers go out and find unicorns.”

Dell EMC has a host of such standard systems that includes its Networking Virtual Edge Platform 4600, a 1U single-socket system powered by an Intel Xeon D-2100 processor as well as other technologies from the chip maker, such as QuickAssist Technology for security and compression and Intel's Data Plane Development Kit. The company also has PowerEdge XR2 rugged systems for small and harsh environments, the 2U PowerEdge R740 server for such workloads as private clouds and AI, and micro modular datacenters via its Extreme Scale Infrastructure program, all of which leverage Intel chips, Nvidia GPUs and other standard components.

However, while much of the infrastructure equipment will need to be industry-standard and off the shelf, there will be room for specialized technologies, Dell Fellow Jimmy Pike tells The Next Platform. Google, Intel, Nvidia and others, for example, have created or are developing silicon designed for such tasks as machine learning inference, which will play an increasingly important role in analyzing the mountains of data being generated at the edge.

"We like to have one-size-fits-all or have something specialized for everything," Pike says. "The truth of the matter is, it's more along the right-size gear, the right-size equipment with the right capabilities. I would say it's all about edge, it's all about traditional computing, about specialized computing."

Edge computing is part of a back-and-forth that’s been playing out in the tech industry for decades. When IBM’s mainframes were king, computing was a very centralized environment. That changed with the ascent of client/server, when computing became more distributed, and then things started moving back to a centralized model with the rise of the cloud. With IoT and the edge, it’s becoming distributed once again.

“If you look at all of those pendulum swings, it’s always been driven by something,” Hormuth says. “What we’ve come up with is kind of a functional model that has something to do with the cost to compute cycles, the cost of moving the data, the size of the data and data complexity. If you look back in history at every one of those pendulum swings, one of those variables … caused it to swing, and it always swung to something bigger. Client/server was a much bigger market than the terminal mainframe. Terminal mainframe is still not dead, but client/server was bigger, mobile cloud was bigger and IoT/edge is going to be bigger. I would almost argue that with this one, just the size of the data, data complexity and the cost of moving the data is so high that it’s causing it to swing towards the IoT/edge. The cost of compute right now is continuing to come down, Moore’s Law, while it’s struggling, it’s not dead. Then we’ve got other new ways to do compute, so I don’t think it’s the cost of compute that’s causing it to swing this time. It’s just the sheer size, complexity [of the data].”

The upcoming enabling technologies will play a role in how the edge begins to shake out. Telecommunications companies like AT&T and Verizon are laying down networks for 5G, which will deliver faster speeds, more bandwidth, greater capacity and multi-gig capabilities. Gen-Z promises higher-throughput and lower-latency fabrics. Both technologies will be crucial to the advancement of the edge.

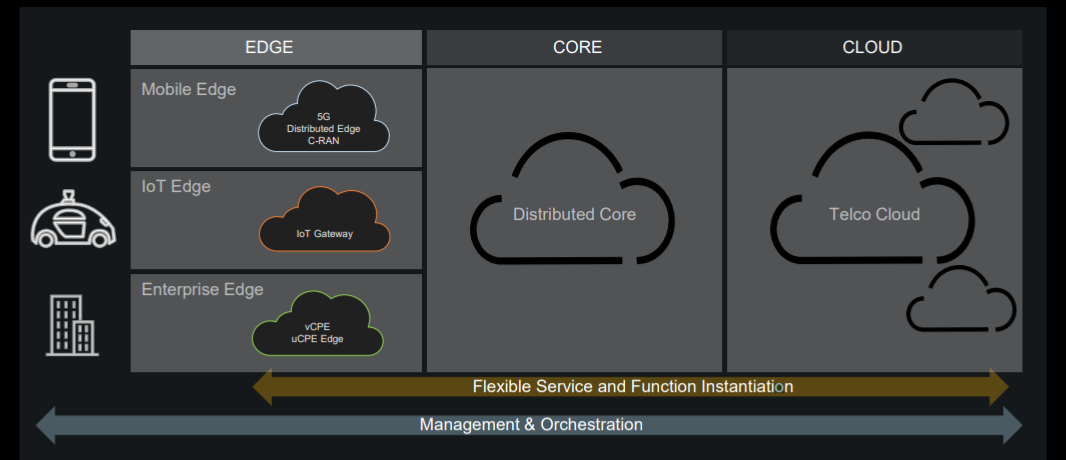

Eventually the edge will evolve to become part of a widely distributed computing environment that also envelopes somewhat traditional datacenters and the cloud. The idea that pushing more compute and storage to the edge or into the cloud will lead to the emptying out of core datacenters – and put a dent into the infrastructure businesses of companies like Dell EMC, HPE, IBM, Lenovo or Cisco—doesn’t make sense, according to Pike. Dell EMC’s vision looks like this:

“There are new ways of doing things that are going to emerge,” he says. “We’ve been pretty open to the idea that multicloud is going to exist. … It’s sort of dangerous to stand up and say, ‘Hey, this one way is the way you do stuff,’ because one size doesn’t fit all. It looks to me like it’s going to get even more diverse as we go forward. It doesn’t look like that the emergence of stuff at the edge reduces the amount of stuff you need either in your on-premises datacenters or in your cloud.”

“It’s just going to be this distributed computing world where some of their stuff is going on in the cloud, some is going to be in a datacenter, some’s going to be in the edge and some is coming from the side of a mountain,” Hormuth says. “Those that figure it out, they’re going to be the ones whose stocks have more pop than others.”

Lines are already beginning to blur, and not just because enterprises are adopting more than one public cloud for their data and applications, with containers and Kubernetes making it easier to manage and move data around these multicloud environments. Public cloud providers are also moving into on-premises datacenters. Through its Outposts initiative, Amazon Web Services is offering AWS equipment for enterprise datacenters. Google Cloud Platform last month rolled out its Google Services Platform to enable customers to run its cloud services and applications on-premises, and Microsoft Azure and IBM Cloud have already made similar moves. VMware's Project Dimension, meanwhile, is a way of bringing VMware Cloud into datacenters.

As the enterprise computing environment becomes more distributed to encompass on-premises datacenters, the cloud and the edge, the need for composable infrastructure will come into play. These are memory-centric infrastructures, enabled by next-generation technologies like Gen-Z and storage-class memory, that essentially create a massive pool of IT resources that can be dynamically deployed to meet workload needs. Both HPE and Dell EMC are pushing composable infrastructure efforts, as are smaller companies like Liqid and TidalScale. Late last year, Juniper Networks bought composable startup HTBase.

“The idea of being able to right-size the resources in a system and have a fully composable system, we have absolutely bought into that,” Pike says. “The idea that you can have resources – especially expensive resources – that aren’t trapped to a single server, that you can repartition, redistribute those and use them on a more fluid basis, that is one of the reasons why we are so into Gen-Z. … That is a big thing that we want to see happen. We believe it is going to be a big deal.”