More than five years ago, Nvidia, driven by its co-founder and CEO, Jensen Huang, turned its considerable focus to developing technologies for the revitalized and burgeoning artificial intelligence space.

Having successfully driven the company’s GPUs into the datacenter as HPC application accelerators to improve the performance of systems while keeping a lid on the power consumption – due in large part to the introduction of CUDA – Huang at the time said the future in HPC and enterprise computing laid in the ability to harness the capabilities of AI, and that the parallel computing capabilities of Nvidia’s GPU would be foundational to that effort. As Moore’s Law continues to slow, the industry needed ways to continue to accelerate performance.

Fast forward to the present, and Nvidia has become a central player in AI and such subsets as machine learning and deep learning, certainly on the training of neural networks and increasingly in inference. The company three years ago jumped into the deep learning arena with product likes Tegra X, a 256-core GPU that brought a terabyte of processing power to deep learning workloads, and the GeForce GTX Titan X for training neural networks, and over the years followed by the Drive PX 2 for Ai in automobiles, the Tesla V100 GPU based on the Volta architecture and powering the company’s hybrid GPU-CPU DGX supercomputers for AI.

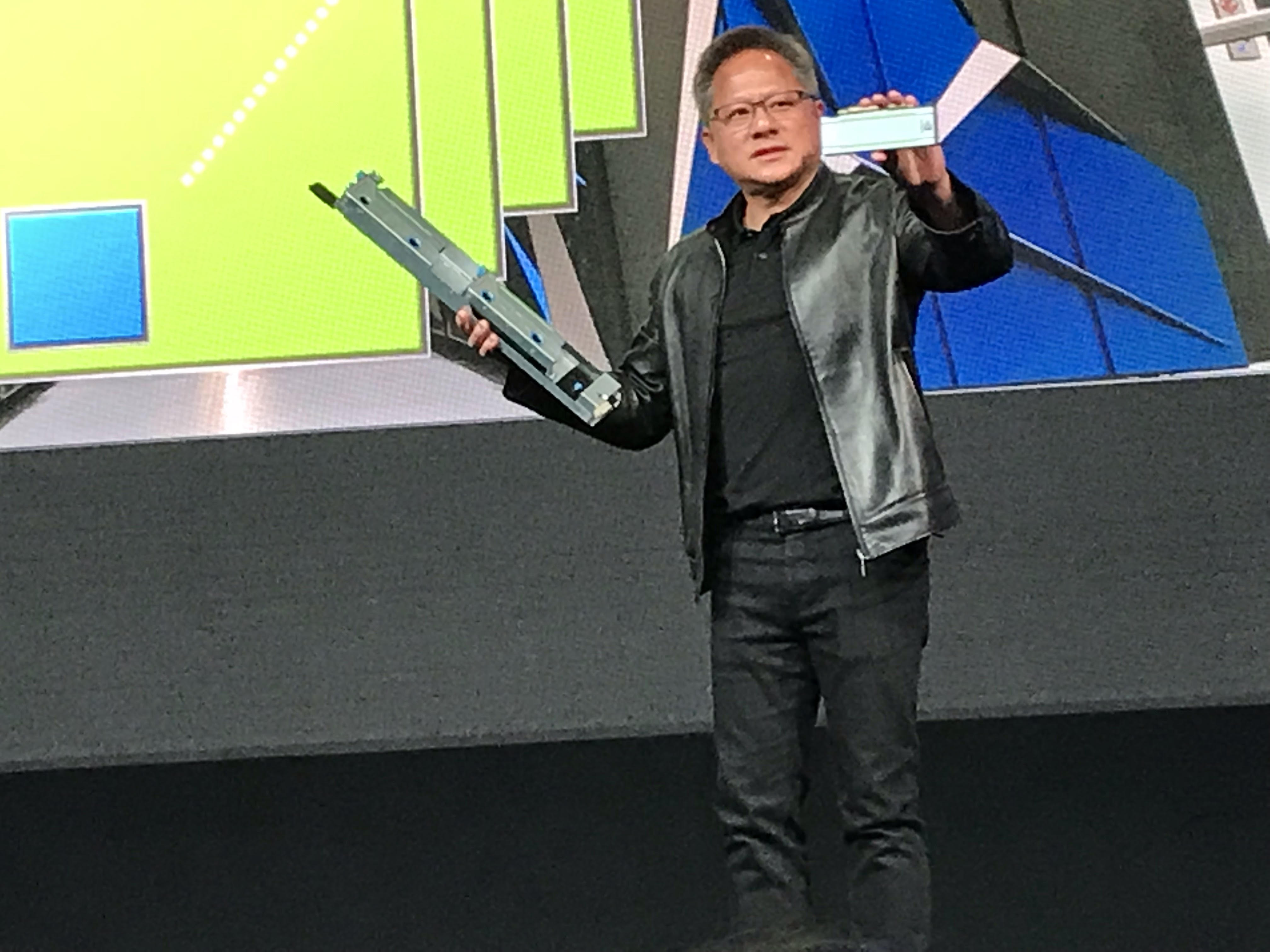

The strategy has been around both hardware and software, from the TensorRT inference software – TensorRT 4 was released in the second quarter – and the Nvidia GPU Cloud (NGC) catalog of integrated containers optimized to run on the company’s GPUs to the Tesla P4 and P40 accelerators that launched two years ago with machine learning inference in mind and, more recently, the T4 Tensor Core GPU that was released a couple of months ago and that Huang displayed during his keynote address this week at the SC18 show in Dallas (below).

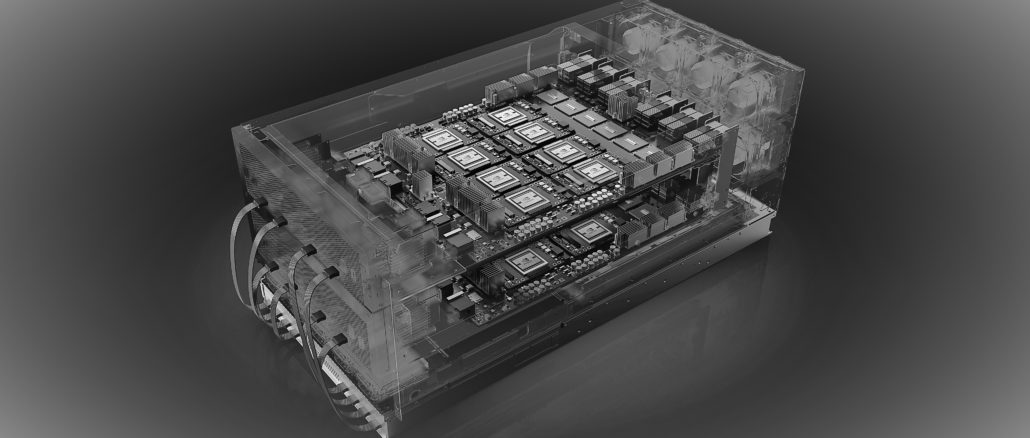

The company is bringing its DGX-2 technology to its HGX-2 server platform so that OEM and ODM partners can integrate it into their own designs. The first of the DGX-2 systems, which each include 16 Tesla V100 GPUs linked through Nvidia’s NVSwitch technology and provide more than 2 petaflops of compute power, will be delivered to a number of institutions, including the Oak Ridge National Laboratory, Sandia National Laboratories, and Brookhaven National Laboratory, and Pacific Northwest National Laboratory.

Huang and other executives have said that AI, machine learning, deep learning and other AI technologies have been good for business. In the second quarter, the company’s datacenter business generated $760 million in revenue, an 83 percent year-over-year increase, driven in large part by demand from hyperscalers whose cloud services are increasingly leveraging AI techniques, according to chief financial officer Collette Kress.

“Our GPUs power real-time services such as search, voice recognition, voice synthesis, translation, recommender engines, fraud detection, and retail applications,” Kress said during a conference call in August. “We also saw growing adoption of our AI and high-performance computing solutions by vertical industries, representing one of the fastest areas of growth in our business.”

During the same call, Huang said that inference in particular would become a key part of the datacenter business.

“There are 30 million servers around the world in the cloud, and there are a whole lot more in enterprises,” Huang said. “I believe that almost every server in the future will be accelerated. And the reason for that is because artificial intelligence and deep learning software and neural net models are going to prediction models, are going to be infused into software everywhere. And acceleration has proven to be the best approach going forward. We’ve been laying the foundations for inferencing for a couple or two, three years. And as we have described at GTCs, inference is really, really complicated. And the reason for that is you have to take the output of these massive, massive networks that are output of the training frameworks and optimize it. This is probably the largest computational graph optimization problem that world has ever seen.”

After years of building out the hardware and software portfolio for AI workloads, Huang came to the SC18 show to talk about how those technologies continue to be embraced by cloud providers and other tech vendors and how accelerated computing continues to grow in an evolving HPC field. He noted that the V100 GPUs are key the Summit supercomputer at the Oak Ridge National Laboratory, which sits atop the Top500 list of the fastest systems in the world – as well the number-two system, Sierra, at the Lawrence Livermore Lab. In addition, 127 of the 500 systems on the list use Nvidia GPUs.

The T4 GPU – based on Nvidia’s Turing architecture – is now being adopted by Google Cloud Platform as well as OEMs like Dell EMC, Lenovo, IBM and Hewlett Packard Enterprise and ODMs in 57 server designs. The multi-precision capabilities in the T4 means the T4 can deliver performance at multiple layers of precision, from 8.1 TFLOPS at FP32 to 260 TOPS of INT4. It can fit into any Open Compute Project hyperscaler server design, and organizations can use a server with two T4 GPUs in place of 54 CPU-only servers for inference workloads. For training, at two-T4 server can replace nine dual-socket CPU-only servers, according to Nvidia.

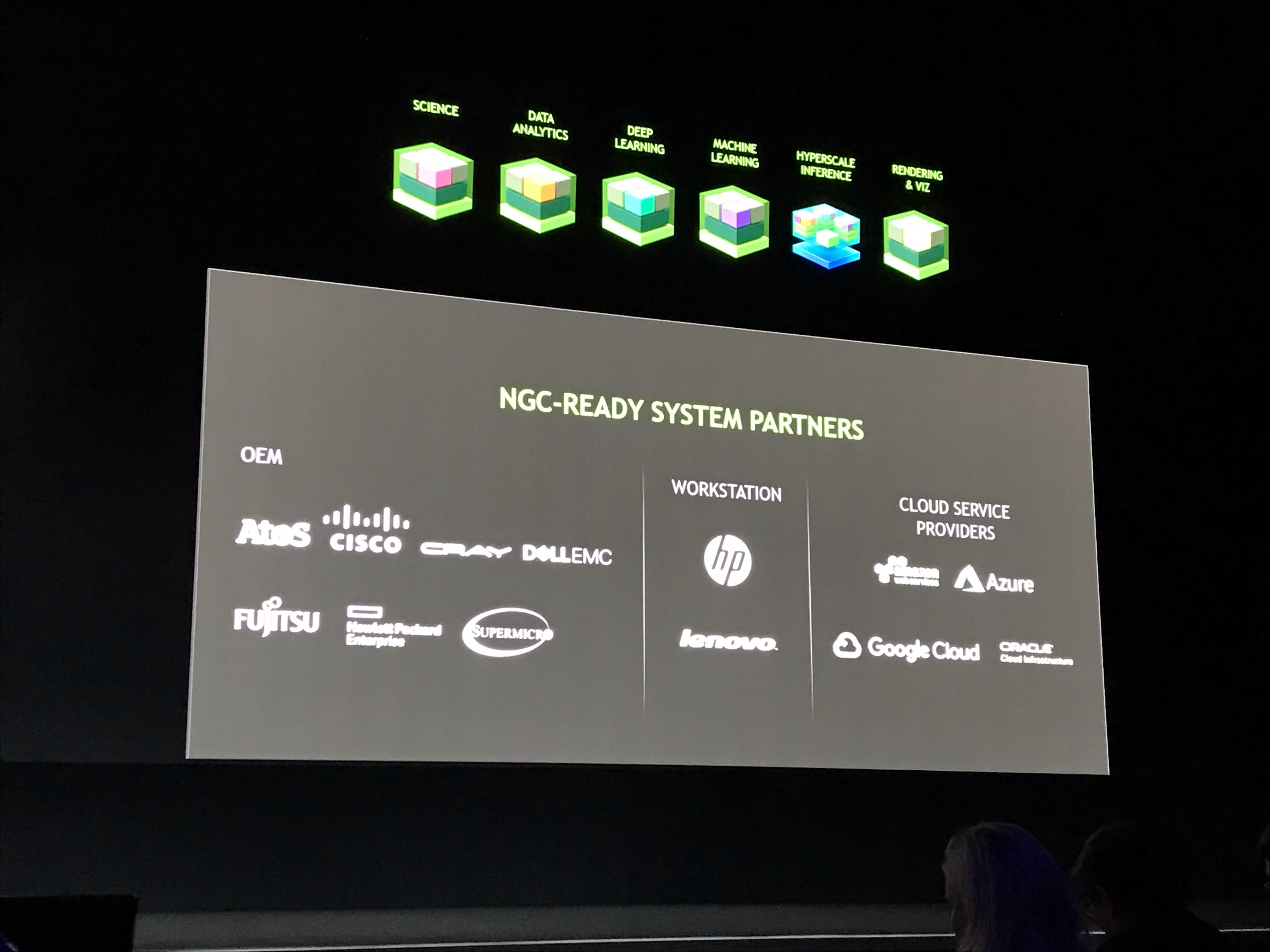

Huang also noted that the company’s NGC container registry has grown the number of frameworks and applications from 18 last year to 41 now, touching on everything from deep learning an HPC to HPC visualization, with new software including ParaView, Rapids, MILC and Matlab. Nvidia also is offer new multi-node HPC and visualization containers to enable organizations to run workloads on large-scale clusters. The five contains support multi-node deployment, making it easier to run large computational workloads on multiple nodes with multiple GPUs per node, and improvement over trying to use message passing interface (MPI) in containers for deployments over multiple servers.

The NGC contains also can be used natively with Singularity’s container technology, and a new NGC-Ready program that will certify systems for NGC. Initial systems include Cisco’s UCS C480ML, Dell EMC’s PowerEdge C4140, HPE’s Apollo 6500, ATOS’s BullSequana X1125 and Supermicro’s SYS-40w9GP-TVRT.

“The HPC industry is fundamentally changing,” Huang said, noting that the architecture was designed to test the laws of physics and simulate the equations of Einstein, Maxwell and Newton “to derive knowledge and to predict outcomes. The future is going to continue to do that, but we have a new tool and this new tool is called machine learning. Machine learning has two different ways of approaching it. One of them requires domain experts to engineer features. Another one, using neural network layers at its lowest level, inferring learning what the critical features are by itself. Each one of them has their limitations and each one of them has their applications, but both them will be incredibly successful. The future of high-performance computing architecture will continue to benefit from scale up, but it will also be scaled out. … In the future, architecture will be scaled up and out, whether it’s high-performance computing or supercomputing.”

The key, though, is the software, and for Nvidia, CUDA is what makes its software coherent and compatible, he said.

“Accelerated computing is not about the chip. You’re ultimately trying to think about the application first, about the researcher first, about the developer first, and to create an entire stack that allows us to overcome what otherwise would be called the end of Moore’s Law. Accelerated computing is about accelerating stacks. When you’re accelerating stacks and you don’t have a coherent architecture, I have no idea how people are going to use it.”

Can you please clarify what does that mean – “…256-core GPU that brought a terabyte of processing power”? Is that teraflop or …?