For years, enterprises have wanted to pool and then carve up the myriad resources of the datacenter so they can run their workloads more efficiently, reduce power consumption, and improve utilization rates. It takes what seems like an endless series of technology advances to move toward this goal. But, ever so slowly, we are getting there.

Virtualization started in servers, flowed into the storage realm, and eventually reached the network. Converged systems mashing up virtual compute and virtual networking soon begat hyperconverged infrastructure, which added virtual storage and has become one of the fastest-growing segments in the datacenter space. These days, “software-defined” has become the adjective of choice for infrastructure.

But that doesn’t mean that we are done. Now, attention has turned to composable infrastructure: the idea of building cloud-like infrastructures where all the datacenter resources are essentially brought together into a single pool from which applications can draw whatever they need to run as quickly and efficiently as possible, and then return those resources to the pool when they are no longer needed. This is not about virtualizing the operating system (whether it be one for a server, a storage array, or a switch) to set it apart from the underlying hardware, but rather about actually composing compute, storage, and networking over a fabric to, in essence, create the hardware on the fly, make it look and feel like malleable bare metal, and, more importantly, break the tight coupling between CPUs and main and auxiliary memories.
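To make the draw-and-return idea concrete, here is a minimal sketch of a shared resource pool from which a job composes a logical node and to which it later returns that node's resources. The `ResourcePool` class, its `compose` and `release` methods, and the resource names are all hypothetical illustrations, not any vendor's API; a real composable system does this through fabric management software rather than a dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical shared pool of disaggregated datacenter resources."""
    free: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Copy so the pool does not mutate the caller's dictionary.
        self.free = dict(self.free)

    def compose(self, request: dict[str, int]) -> dict[str, int]:
        """Carve a logical 'bare metal' node out of the pool, all-or-nothing."""
        short = [k for k, v in request.items() if self.free.get(k, 0) < v]
        if short:
            raise RuntimeError(f"insufficient resources: {sorted(short)}")
        for kind, amount in request.items():
            self.free[kind] -= amount
        return dict(request)

    def release(self, node: dict[str, int]) -> None:
        """Return a composed node's resources to the pool when the job ends."""
        for kind, amount in node.items():
            self.free[kind] = self.free.get(kind, 0) + amount


# Illustrative capacities only.
pool = ResourcePool({"cpu_cores": 64, "dram_gb": 512, "gpus": 4})
node = pool.compose({"cpu_cores": 16, "dram_gb": 128, "gpus": 2})
print(pool.free)  # {'cpu_cores': 48, 'dram_gb': 384, 'gpus': 2}
pool.release(node)
```

The all-or-nothing check in `compose` reflects the idea that a composed node should either get everything it asked for or nothing, so a failed request never strands partially allocated resources.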

All of this matters in an enterprise IT world where servers keep getting faster with the growing use of accelerators like GPUs and field-programmable gate arrays (FPGAs), where already massive amounts of data keep getting larger, and where modern workloads like machine learning and analytics increasingly demand real-time processing. Vendors like Cisco and Hewlett Packard Enterprise have been talking about composable infrastructure for about three years, with HPE building a business around its Synergy platform, which the company says has about 1,400 customers. HPE is adding another tool to its hyperconverged and composable toolboxes with the intended acquisition of network virtualization vendor Plexxi, a deal that was announced this week.

A few smaller startups, including Liqid, TidalScale and HTBase, also have emerged in hopes of finding traction in what promises to be an increasingly competitive market.

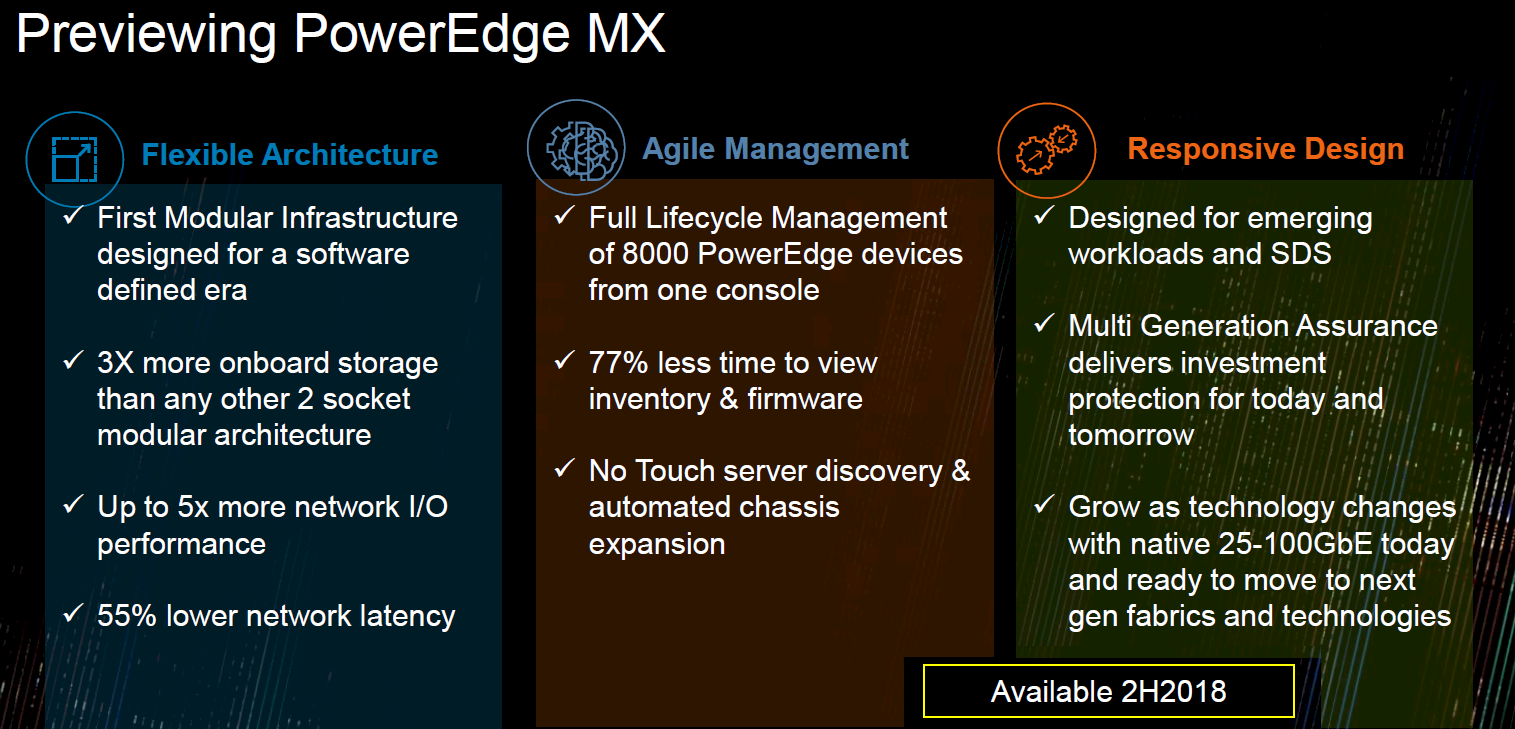

At its Dell Technologies World show earlier this month, much of Dell EMC’s focus was on new storage products, the growing use of NVM-Express to accelerate the performance of flash and other non-volatile memory, and its hyperconverged infrastructure portfolio. However, there was a brief discussion on stage of composable infrastructure and the upcoming PowerEdge MX system, a key part of the company’s growing composable strategy that is due out in the second half of the year. Not many details of the modular system were released (more are coming, the crowd was told), but there were promises of such capabilities as five times more network I/O performance and three times the onboard storage of typical two-socket systems, improved management, and a design that will adapt to new workloads and fabrics.

Dell EMC has not been highly vocal about its composable strategy, but it is something the company has been working on for a while – the PowerEdge MX has been in the works for at least the past couple of years – and it’s a key part of the vendor’s future, according to Robert Hormuth, chief technology officer for server and infrastructure systems at the company. And while there is a level of composability that can be done today, it will take a few more years before true composability is achieved, Hormuth tells The Next Platform.

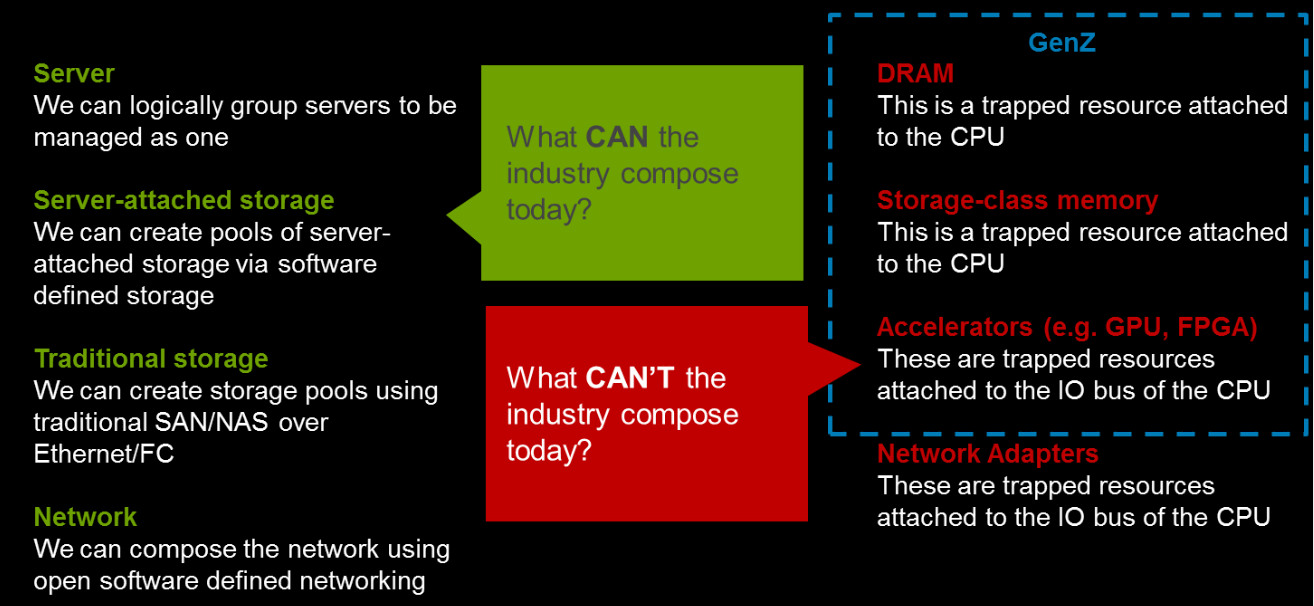

“If you look at today, at Dell EMC, we’re as composable as anybody else,” Hormuth says. “We can compose storage – we’ve got ScaleIO, we’ve got vSAN. We’ve got open networking; we can compose networking. We’ve got virtualization; we can compose servers. The world today is as composable as it can be, but it’s an I/O-composable world, not a memory-composable world. It is an important difference between the two. If you think about the two, the I/O-composable world all lives behind a protocol stack. Whether it be TCP/IP or file/block protocols, it lives behind the I/O part of the memory domain. But we have all these new emerging devices that I like to call ‘microsecond-class devices’ – storage-class memory, FPGAs, GPUs. They live in the sub-microsecond world and you can’t put a protocol stack on them. If you took storage-class memory and put it at the end of a 10 Gb/sec wire, you’ve kind of killed the intrinsic value of it. You took a device that was 800 nanoseconds and now you turned it into a 50 microsecond access. That’s why we move toward this memory-centric and kinetic world where we get to this next level of full composability through the memory domain, because you don’t want to put these devices behind that protocol stack.”
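The arithmetic behind Hormuth’s point can be sketched in a few lines. The 800 ns and 50 µs figures come from the quote. The 4 KiB transfer size is our own assumption, used only to show that raw wire serialization on a 10 Gb/sec link accounts for a small part of the gap. This suggests most of the added latency comes from the protocol stack and round trips, which is consistent with his argument but is not a measurement.

```python
def slowdown(native_ns: float, remote_ns: float) -> float:
    """How many times slower an access becomes once it moves behind a network stack."""
    return remote_ns / native_ns


def serialization_us(payload_bytes: int, link_gbps: float) -> float:
    """Time just to clock a payload onto the wire, ignoring all protocol overhead."""
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e3)  # bits / (Gb/s) expressed in microseconds


SCM_NATIVE_NS = 800        # storage-class memory access, per the quote
SCM_OVER_WIRE_NS = 50_000  # the same access behind a 10 Gb/sec protocol stack, per the quote

print(f"{slowdown(SCM_NATIVE_NS, SCM_OVER_WIRE_NS):.1f}x slower")   # 62.5x slower
print(f"{serialization_us(4096, 10):.2f} us to serialize 4 KiB")    # 3.28 us to serialize 4 KiB
```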

We have talked about the need to break the tight coupling between the CPU and main memory and to allow memory to be configured on the fly to create a fully composable infrastructure. Other vendors also have put a focus on memory to deal with modern workloads. Memory has been at the center of HPE’s efforts behind The Machine, which will include a hugely scalable shared memory pool (with the plan to leverage memristors) and will use a silicon photonics interconnect.

Hormuth talks about the shift from a compute-centric to a memory-centric world, one that takes advantage of such technologies as storage-class memory, NVM-Express, and the emerging Gen-Z interconnect.

“If you think about what we have today, it’s a bunch of nodes and CPUs with memory attached to them and all attached to a network,” Hormuth says. “We’re passing data around enormously and shifting bits around is expensive. We need to shift to where we’re doing more memory-centric, in-memory computing. We’re shifting from the world of the past where you think about big data and we would do batch processing overnight and we would show up at the office and do insurance quotes for the entire United States. But we’re shifting to a world where the data has a time-intrinsic value. You either process the data and get real-time results and a real-time experience delivered to your business or your customer, or you might as well not process it. To make this shift, these workloads that are much more real time than the old batch world that we came from require some different thoughts, different architectures and different flexibility than what we’ve done architecturally for the last 20 years.”

Dell EMC is using the term “kinetic” to describe true composability, saying that it includes not only the modular infrastructure design but goes deeper by extending the idea of composability to individual storage devices and eventually to memory-centric devices like DRAM, storage-class memory, GPUs and FPGAs, which currently are trapped in the server.

The company already has taken steps in that direction. The growing use of NVM-Express in both servers and storage devices, including the newly announced PowerMax storage array (the successor to Dell EMC’s VMAX all-flash line), is one example. Others are the pursuit of storage-class memory (SCM) and NVMe-over-Fabrics, and work on industry standards for management and composability such as the Distributed Management Task Force’s Redfish APIs. In addition, the evolution of the Gen-Z interconnect will be crucial to this memory-centric approach, Hormuth says. Earlier this year, the two-year-old industry consortium, which includes such heavyweights as Dell EMC, HPE, Lenovo, Cray, IBM, AMD, and Arm, released the Gen-Z Core Specification 1.0, enabling server and storage OEMs and ODMs, component makers, networking companies, and others to begin building products based on it. There already are vendors doing PCI-Express disaggregation, but the issue is that PCI-Express devices sit behind the processor’s IOMMU, not its MMU.
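To give a sense of what standards-based composability looks like at the API level, here is a sketch that only builds a JSON request body. It does not talk to any server. It is modeled on the DMTF Redfish Composition Service as we understand it: a client asks for a composed system by posting, to the `/redfish/v1/Systems` collection, a body that links existing `ResourceBlocks`. The block IDs and the system name are hypothetical, and the exact schema and workflow should be checked against the Redfish specification and a given vendor’s implementation.

```python
import json


def specific_composition_request(name: str, block_ids: list[str]) -> str:
    """Build a Redfish-style 'specific composition' request body (sketch only)."""
    body = {
        "Name": name,
        "Links": {
            "ResourceBlocks": [
                # Each entry references a resource block the service has advertised.
                {"@odata.id": f"/redfish/v1/CompositionService/ResourceBlocks/{block_id}"}
                for block_id in block_ids
            ]
        },
    }
    return json.dumps(body, indent=2)


# Hypothetical block IDs; a real service enumerates its own ResourceBlocks.
payload = specific_composition_request("ml-node-1", ["ComputeBlock1", "GPUBlock2"])
print(payload)
```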

“That’s a big difference,” Hormuth says. “If you think about the OS and its ability to put memory in the heap or the memory that the OS can allocate to jobs or applications, all that memory has to live behind the MMU, not the IOMMU. You can go make the PCI-Express DRAM card all day long – you can load a driver, you can use it as a fast cache, DRAM block, storage file, whatever – but you can’t give it to the OS and say, ‘Here, dole this out to the applications,’ because it lives behind the wrong MMU. That’s where Gen-Z helps push us to that memory-centric world, where we can put things in the proper memory space of the processor. It’s a subtle difference, but it’s an important one.”
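The distinction Hormuth draws can be illustrated with a deliberately simplified toy model. Real operating systems discover memory through firmware tables and page-table mappings, not a string field. Memory the processor maps into its own physical address space (behind the MMU) can be handed out as ordinary application memory. A PCI-Express DRAM card (behind the IOMMU) is only reachable through a driver, as a cache or block device. The region names and capacities are hypothetical. The Gen-Z entry stands in for the fabric-attached case he describes, not for a shipping product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryRegion:
    name: str
    size_gb: int
    behind: str  # "mmu": processor memory space; "iommu": PCIe device space (toy labels)


def os_allocatable_gb(regions: list[MemoryRegion]) -> int:
    """Capacity the OS could dole out to applications as ordinary memory."""
    return sum(r.size_gb for r in regions if r.behind == "mmu")


def driver_only_gb(regions: list[MemoryRegion]) -> int:
    """Capacity usable only through a device driver (cache, block device, files)."""
    return sum(r.size_gb for r in regions if r.behind == "iommu")


regions = [
    MemoryRegion("local DIMMs", 384, "mmu"),
    MemoryRegion("PCIe DRAM card", 256, "iommu"),
    MemoryRegion("Gen-Z attached memory (hypothetical)", 512, "mmu"),
]
print(os_allocatable_gb(regions), driver_only_gb(regions))  # 896 256
```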

Another important step is that in the PowerEdge MX, there is no midplane. In a memory-centric, composable world, that means the system is adaptable to expected changes in technology, he says.

“If you look at the evolution of compute and Moore’s Law and the performance CAGR of servers, we’re battling a battle of physics,” the CTO says. “We’ve got all these cores, which need more memory bandwidth, which means more memory channels, which means bigger CPUs, bigger sockets, higher TDPs. We’re at six channels with Intel, we’re at eight with AMD, we’re going to have eight, 10, who knows what in the future, so you need an architecture that can be scalable and future-proof. We looked at where we are and where the memory’s going and made sure we accommodated a design for the future so it’s very future-proof, which also means you’ve got to cool the thing. One attribute of the midplane-less design is that we’re able to have much more effective cooling because we don’t have a big band in the back that we’re trying to shove air through.”
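The link between channel count and bandwidth follows from simple arithmetic: each DDR channel has a 64-bit (8-byte) data bus, so theoretical peak bandwidth is roughly channels × transfer rate × 8 bytes. The sketch below assumes DDR4-2666 for every channel count and uses hypothetical core counts. It shows why adding cores without adding channels shrinks bandwidth per core. These are theoretical peaks, not measured figures for any particular processor.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth per socket in GB/s (64-bit DDR channels assumed)."""
    return channels * mega_transfers * bus_bytes / 1000


DDR4_MTS = 2666  # assumed DDR4-2666 for every configuration below

for channels in (6, 8, 10):
    bw = peak_bandwidth_gbs(channels, DDR4_MTS)
    # Hypothetical core counts, to show per-core bandwidth falling as cores grow.
    per_core = ", ".join(f"{cores} cores: {bw / cores:.2f}" for cores in (16, 32, 64))
    print(f"{channels} channels: {bw:.1f} GB/s peak; GB/s per core -> {per_core}")
```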

In addition, it will be easier to adapt to changing I/O, including Gen-Z. When a change in I/O is made, network administrators won’t have to worry about whether the midplane can handle the width, speed, or signal integrity of the new I/O.

“The industry is where the industry is,” Hormuth explains. “We’re just as composable as anyone else. We all have the same technology. We all have software-defined, we all have SAS switching capabilities. We all have that today. To get to the true kinetic and composability takes an architecture shift. The future state we see in a memory-centric view, we see that you have to add memory tiering. To get memory tiering, we need more architectures that support memory-centric, so you’re going to see things like on-package memory, we’ll probably have to migrate the industry to one DIMM per channel versus two to get the frequency up. We’ll need a fabric like Gen-Z to have storage-class memory.”
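Memory tiering, in its simplest form, means placing the most frequently accessed data in the fastest, smallest tier and letting colder data spill into larger, slower tiers. The sketch below uses a plain greedy, hotness-ordered placement. This is a common textbook approach and not a description of Dell EMC’s design. The tier names follow the tiers mentioned above (on-package memory, DIMMs, storage-class memory over a fabric like Gen-Z), but the capacities and access counts are made-up illustrations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    capacity_pages: int


def place_pages(access_counts: dict[str, int], tiers: list[Tier]) -> dict[str, str]:
    """Greedy tiering: hottest pages fill the fastest tier first, then spill down.

    `tiers` must be ordered fastest first.
    """
    # One slot per page of capacity, fastest tier's slots first.
    slots = [t.name for t in tiers for _ in range(t.capacity_pages)]
    hottest_first = sorted(access_counts, key=access_counts.get, reverse=True)
    if len(hottest_first) > len(slots):
        raise ValueError("working set exceeds total tiered capacity")
    return dict(zip(hottest_first, slots))


# Hypothetical capacities (in pages) and access counts.
tiers = [Tier("on-package", 2), Tier("DIMM", 3), Tier("SCM over Gen-Z", 10)]
counts = {"a": 900, "b": 15, "c": 400, "d": 3, "e": 120, "f": 60}
print(place_pages(counts, tiers))
```

A production tiering system would also migrate pages as their access patterns change. This sketch only computes a one-time placement from a snapshot of access counts.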

Well said. I would point out that the DRAM-DIMM design of the last few decades, and the software assumptions about memory, break as soon as there is HBM or other low-latency, non-RAS/CAS DRAM sitting on the CPU package. From that point on, there is a new class of near memory, then DIMMs, then the shared memory that Gen-Z enables.

At that point, not only might the optimal data center pod architecture become more like the innards of an EMC Symmetrix array of 15 or 20 years ago (many small processors, each with their own private memory, surrounding and sharing a reliable central memory in the rack), but also the optimal design simply dissolves the historic silo boundary between server and storage.

Interesting times!