The center of gravity for data is continuing to shift from core datacenters to other parts well beyond the walls of those facilities. Data and applications are being accessed and created in the cloud and at the network edge, where billions of smartphones and other smart, connected devices that make up the Internet of Things (IoT) reside and do their work.

In this increasingly digital and data-centric world, that is where the action is, where data needs to be collected, stored, analyzed, and acted on, so it’s not surprising that so many of those established tech vendors that have made billions of dollars over the past few decades building systems for datacenters are now pushing those capabilities past the walls and toward the cloud and edge.

At The Next Platform, we’ve been talking for more than a year about the edge and the distributed computing model created by changes in enterprise IT, with more focus being put on branch and remote offices, the cloud and gateways. The amount of data generated in these places far outside the central datacenter will only skyrocket, and enterprises need to analyze that data quickly and efficiently and make business decisions based on it, so sending it all back to the datacenter or cloud for processing makes no operational or financial sense. Increasingly, they want to deal with the data closer to where it’s being generated. Compute, storage and analytic capabilities, as well as newer technologies like artificial intelligence and machine learning, must move out there.

Hewlett Packard Enterprise, Dell EMC and other tech giants are busy planting their flags in this new and fast-growing territory. HPE CEO Antonio Neri said last summer that the company will invest $4 billion through 2022 into growing its capabilities in what he called the “intelligent edge,” and is leaning on his company’s Aruba Networks business, bought for $3 billion in 2015, to help lead the effort. Dell EMC has rolled out gateways running on Intel silicon that can aggregate and analyze edge-generated data and, like HPE, sells highly dense systems that can fit nicely into edge environments. Dell-owned VMware has extended the reach of its NSX network virtualization platform from the datacenter out into the cloud and edge.

Likewise, Cisco Systems has been aggressive in its pursuit of both the multicloud and the edge by expanding the capabilities in such offerings as its HyperFlex hyperconverged infrastructure solution and building out such initiatives as its intent-based networking strategy, all with the goal of making it easier for enterprises to deploy and manage their far-flung environments. The issue is that the idea of a datacenter is changing, according to Daniel McGinniss, senior director of data center marketing at Cisco. It’s evolved beyond being a single place for systems, applications and data all nestled behind walls.

“Just take a look at what this looks like and how the evolution is occurring,” McGinniss tells The Next Platform. “Clearly we have our on-premises datacenter. That’s where we began and certainly [it’s] here to stay. But the whole cloud paradigm has really changed the way companies operate nowadays. Even if it’s an on-prem private cloud, there’s clearly just a new model being born, especially if we look at what we are now calling cloud-native applications. It’s changed and it’s put new expectations on the business.”

With clouds and the edge, “it’s really been about, ‘How do we get the compute and the work closer to the sources of demand?’ We’re actually moving back to this decentralized model. Obviously there’s a new set of pressures or demands being put on the telco environments on how they can create new bandwidth for the exponential requirements that are being created by all of the mobile devices that are connecting to the network.”

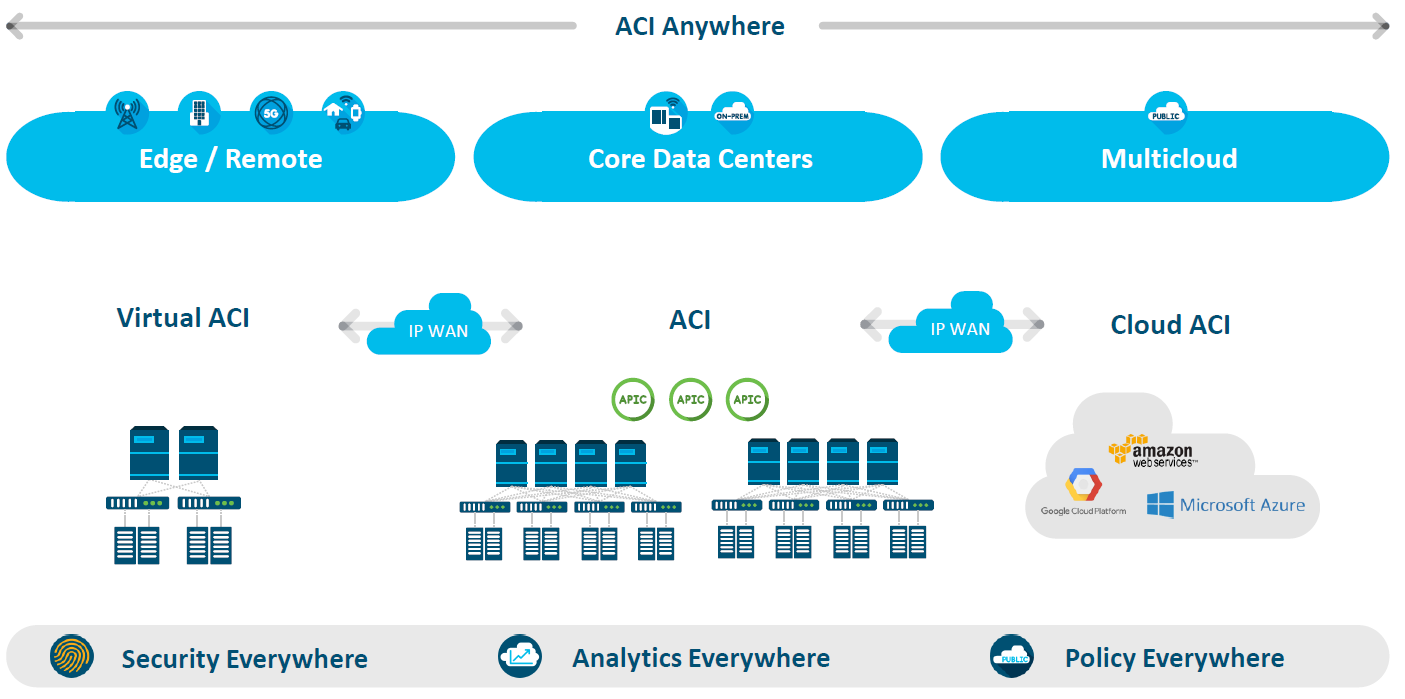

Cisco is building its “datacenter anywhere” strategy with the idea that the datacenter will be defined by where the data is, not where the systems are. That was put into focus at the company’s latest Cisco Live event in Barcelona. It’s where Cisco unveiled the latest iteration of its Application Centric Infrastructure (ACI) offering. When ACI was launched almost six years ago, it was done in response to the growing software-defined networking (SDN) and network-functions virtualization (NFV) trends that were coming to the fore and putting pressure on the long-time networking market leader by decoupling the control plane and various network tasks from the underlying hardware and putting them into software. It’s since evolved into a foundational element of Cisco’s intent-based networking efforts.

Still, McGinniss says, the goal is to have full lifecycle management from day one, including provisioning, deployment, troubleshooting and remediation, and regulatory compliance, and to ensure that the networking infrastructure can adapt to the needs of the applications running on top of it. At the show, Cisco said it is integrating ACI into the infrastructure-as-a-service (IaaS) platforms in Amazon Web Services (AWS) and Microsoft Azure cloud environments. The moves involving the two largest cloud service providers build off a partnership Cisco announced with Google Cloud in late 2017 to build a hybrid cloud platform that combines technologies from both vendors. It also dovetails with the trend toward multicloud environments. Most enterprises that are in the public cloud use at least two providers, with many using three or more.

Cisco is using ACI Multisite orchestrator and Cloud ACI Controllers to extend ACI into AWS and Azure. ACI Multisite orchestrator essentially sees a public cloud region as an ACI site and manages it like it does any other on-premises ACI site. The controllers take ACI policies and make them into cloud-native constructs, enabling consistent policies to stretch across multiple on-premises environments and public cloud instances. Cloud ACI is coming in the first half of this year.
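To make the idea of turning one policy into cloud-native constructs more concrete, here is a minimal sketch in Python. It is not Cisco’s API or data model: the `Contract` class, the `to_security_group_rule` and `render_for_sites` functions, the `sg-` naming and the site names are all hypothetical, and the security-group-style rule is only an assumed stand-in for whatever construct a given cloud actually uses. The point is simply that a single abstract policy is rendered the same way for every site, which is what keeps the policies consistent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Hypothetical abstract policy: which group may talk to which, and how."""
    consumer: str  # group that initiates traffic
    provider: str  # group that receives traffic
    protocol: str
    port: int


def to_security_group_rule(contract: Contract) -> dict:
    """Render the contract as a generic security-group-style ingress rule.

    The rule shape is an assumption for illustration, not a real cloud API.
    """
    return {
        "group": f"sg-{contract.provider}",
        "direction": "ingress",
        "source": f"sg-{contract.consumer}",
        "protocol": contract.protocol,
        "port": contract.port,
    }


def render_for_sites(contract: Contract, cloud_sites: list[str]) -> dict:
    """Apply the same policy to every cloud site so the rules stay consistent."""
    return {site: to_security_group_rule(contract) for site in cloud_sites}


# One policy, defined once, rendered for two hypothetical cloud regions.
web_to_db = Contract(consumer="web", provider="db", protocol="tcp", port=5432)
rules = render_for_sites(web_to_db, ["aws-region-a", "azure-region-b"])
for site, rule in rules.items():
    print(site, rule)
```

Because every site’s rule is derived from the same `Contract`, changing the policy in one place changes it everywhere, which is the property the controllers are described as providing.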

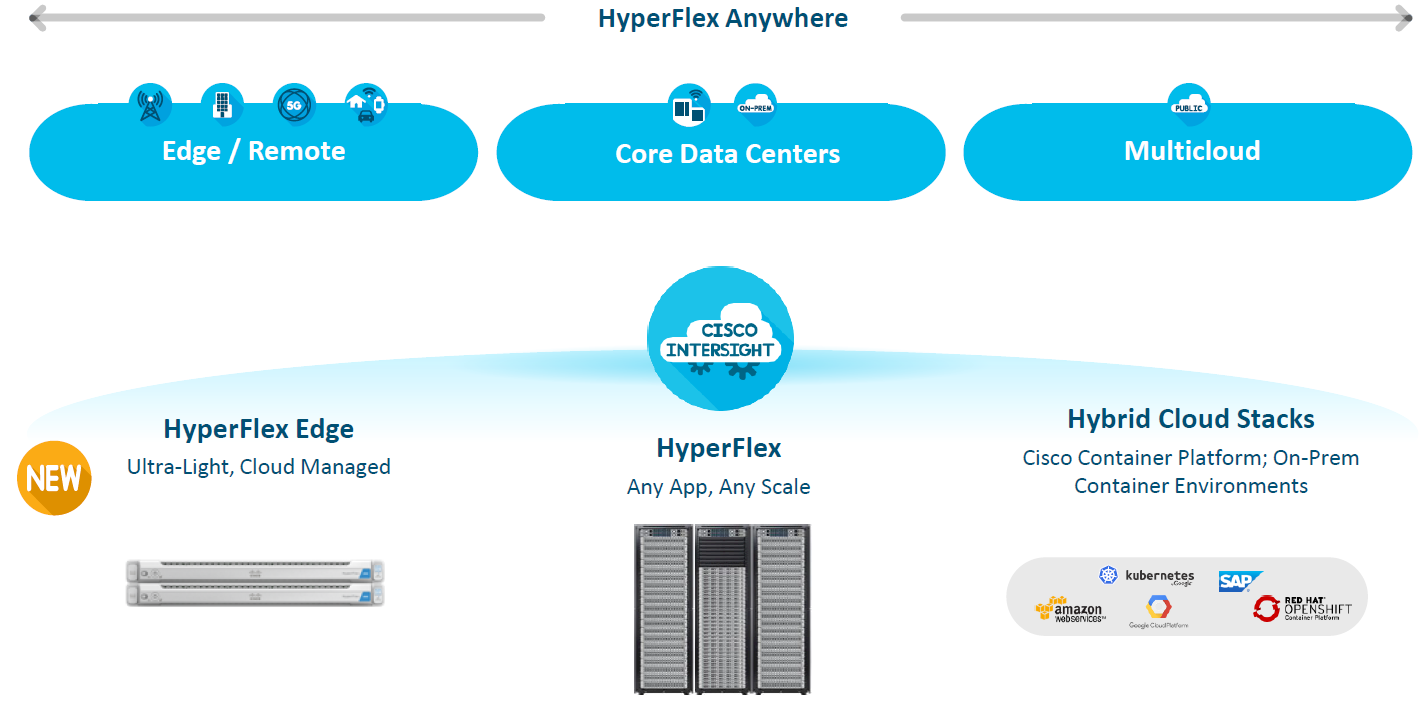

At the same time, the company is pushing HyperFlex to branch and remote offices. HyperFlex, like hyperconverged infrastructures introduced over the years by other vendors, initially was designed to simplify datacenter environments by making compute and storage a single component and addressing such use cases as virtual desktop infrastructures (VDI). Over the years Cisco has expanded its capabilities by growing the applications it can run and addressing multicloud environments including AWS and Google Cloud.

Now the company is using it for the edge. HyperFlex Edge ships directly from the factory to the site and includes connectors to Cisco’s Intersight IT operations management platform to enable automated installation of HyperFlex clusters. The offering includes Intel’s Optane memory and NVM-Express drives as well as the new HyperFlex Acceleration Engine, an optional offload engine PCIe card that is powered by an onboard FPGA. It can offload processing from the CPU to help applications run faster. It also supports container technologies like Kubernetes and Red Hat’s OpenShift.

“We have had remote branch office environments forever in IT, but what we’re seeing now is increased demand for compute and storage within those traditional environments,” Todd Brannon, senior director of data center marketing at Cisco, explains. “Think about retail, where maybe we’re doing video analytics of customer foot traffic to understand dwell time or maybe put offers in front of them in real time. There are all these different examples across traditional types of environments where we’re just seeing digitization and customers that are trying to transform their business. We are going to see more and more data being consumed, generated and analyzed outside that datacenter.”
