There aren’t a whole lot of HPC companies in Japan these days, especially when you consider how prominently the country figures in the global supercomputing community. HPC startups are an even rarer phenomenon there, but one such company, XTREME-D, is trying to make a name for itself in the HPC cloud space.

The four-year-old startup recently unveiled its flagship cloud product, known as XTREME-Stargate, which is touted as enabling “the new era of cloud platform for HPC and AI.” Last week, at the 2019 Stanford HPC Conference, attendees got the opportunity to judge that claim for themselves during a presentation by XTREME-D CEO and founder Naoki Shibata and technical advisor David Barkai.

XTREME-D has developed a set of cloud products for HPC and AI users and has been rolling them out over the past year. Its first offering, XTREME-DNA, is an automated cloud administration tool that enables an HPC cluster to be configured and provisioned in an existing public cloud in just ten minutes. At this point, the choice is limited to Amazon Web Services, with support for Azure and Oracle planned for the second quarter of the year.

Stargate IaaS is the company’s own bare metal service aimed at traditional high performance computing applications, as well as AI and, more generally, high performance data analytics (HPDA). Shibata says the first bare metal cloud is housed in a datacenter in Japan, but the company has plans to expand the service to Singapore and the United States.

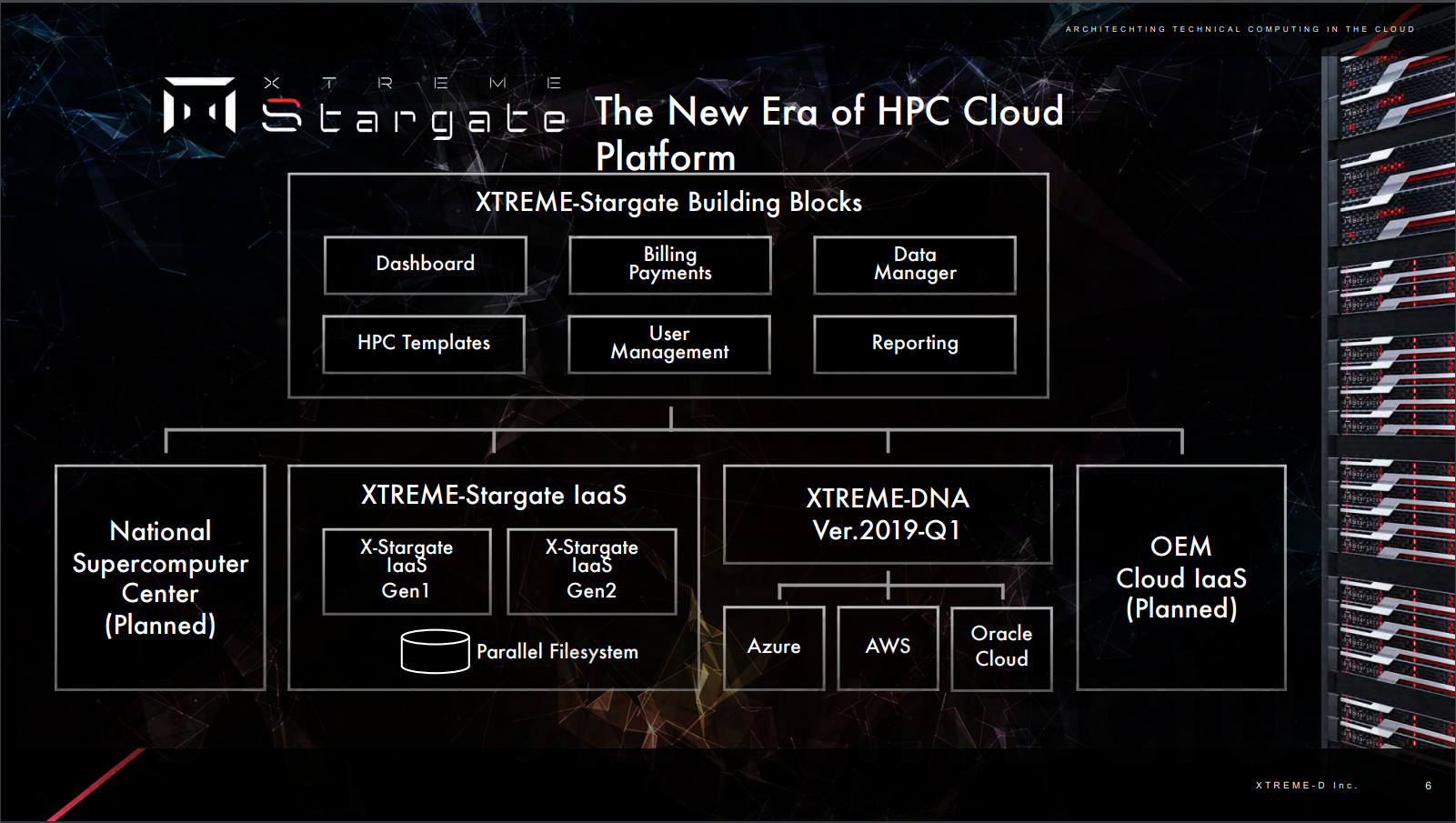

The nomenclature here is a little confusing since both the DNA and Stargate IaaS offerings are based on what they call Stargate building blocks, which are the modular components common to the two services. These components include HPC templates, a dashboard, a billing payments module, a data manager and so on. The block diagram below illustrates more of the detail:

As you can see, the Stargate modules will also be used in a future service that will give customers access to supercomputer centers and in another that will tie into OEM bare metal clouds. Another upcoming product is the Stargate HPC Gateway, an appliance that connects the customer site and the cloud datacenter in order to provide secure data transfers. It acts as a “super head node” for the remote bare metal cluster, but is also compatible with on-premises systems and public clouds.

The key to Stargate IaaS is the HPC template, which encapsulates the type of cluster environment that will be provisioned for a given job. The template specifies the OS, machine type, middleware, and application itself. Shibata says the template is what enables the HPC cluster setup to be performed in just a few minutes.
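
To make the idea concrete, here is a minimal sketch of how the four items a template specifies might be modeled. This is not XTREME-D's actual format: the `HpcTemplate` class, its field names, and the example values are all hypothetical, chosen only to mirror the OS, machine type, middleware, and application mentioned above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class HpcTemplate:
    """Hypothetical stand-in for an HPC template (not XTREME-D's real schema)."""

    os: str
    machine_type: str
    middleware: Tuple[str, ...]
    application: str

    def missing_fields(self) -> List[str]:
        """Return the names of any fields that were left empty."""
        names = ("os", "machine_type", "middleware", "application")
        return [name for name in names if not getattr(self, name)]


# Placeholder values for illustration only; none come from the presentation.
template = HpcTemplate(
    os="example-linux",
    machine_type="example-xeon-node",
    middleware=("example-mpi",),
    application="example-solver",
)

incomplete = HpcTemplate(
    os="",
    machine_type="example-xeon-node",
    middleware=(),
    application="example-solver",
)
```

Because everything needed to stand up the cluster is captured in one immutable record, a provisioning tool could check it for gaps up front (here, `incomplete.missing_fields()` flags the empty entries) rather than discovering them partway through a manual setup.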

Stargate includes a web-based user interface that lets the user choose the appropriate template and cluster instance, and displays the status of all the jobs that are in flight. Shibata says there is also a command line interface, presumably for people who are fond of writing batch scripts. And for those who want to go even lower, an API is available that can be used to perform cluster preparation and job launch programmatically.

Shibata says the main advantage of the Stargate offering is setup speed, and adds that competing IaaS cloud services require manual preparation, provisioning, and performance tuning that can take days or even weeks to accomplish. As Barkai points out, that kind of time commitment sort of defeats the purpose of a service that is supposed to be “on-demand.” In contrast, Stargate users can just click on their template and cluster instance and they’re off and running.

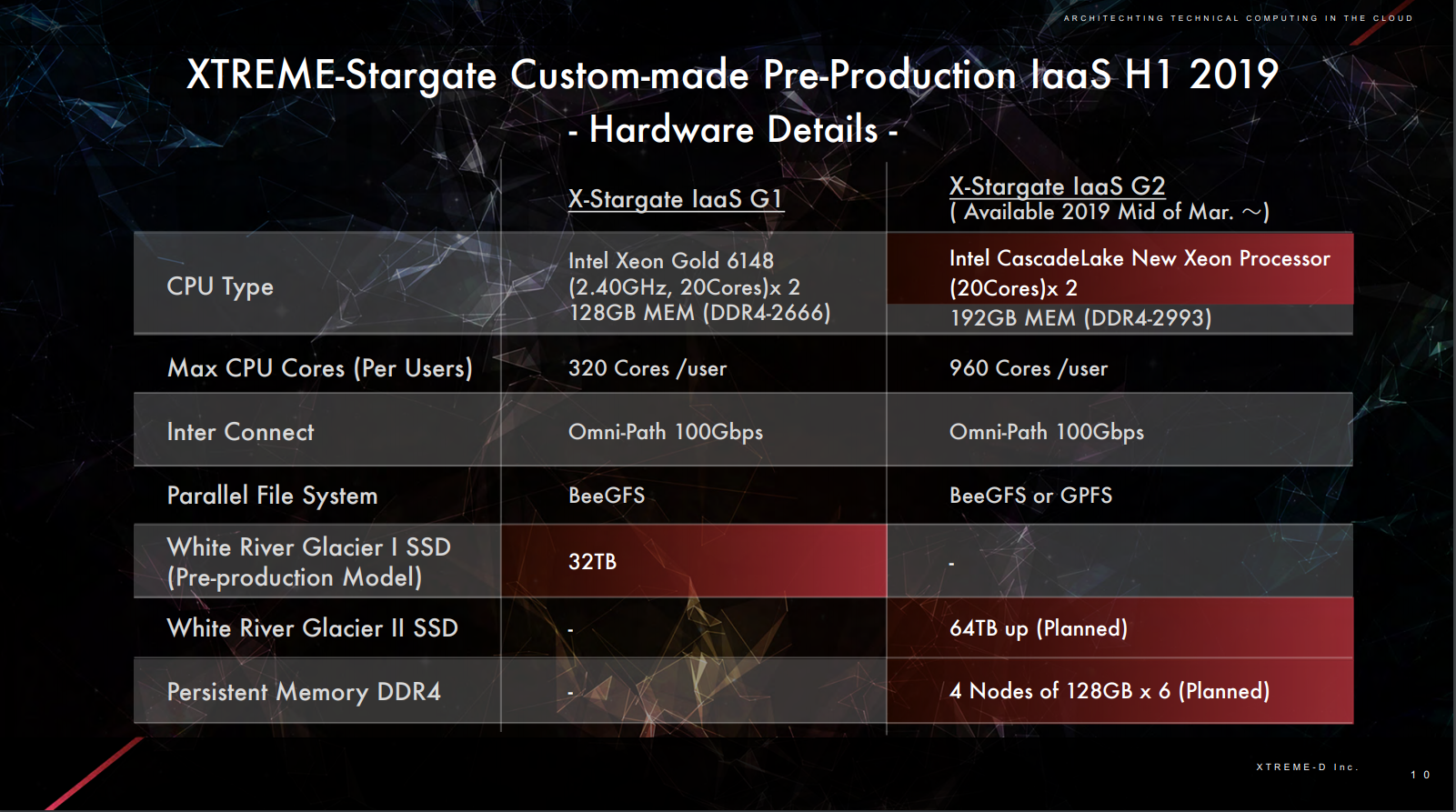

The current IaaS cloud hardware is all Intel, with Xeon CPUs, Optane SSDs, and the Omni-Path interconnect fabric. Intel’s Parallel Studio development tools are included in the package on a monthly subscription basis. No GPUs, FPGAs, or other types of accelerators are currently available, so even though the cloud is being positioned for AI work, the absence of these components is going to limit what can be done here, especially with regard to training neural networks. If these kinds of AI accelerators are desired, XTREME-D customers will have to get access to them via the DNA service and its hookup to the big public clouds.

The initial Stargate servers are outfitted with “Skylake” Xeon SP Gold processors, but the hardware will be upgraded to “Cascade Lake” Xeon SPs somewhere around mid-March. Since these chips include the new Vector Neural Network Instructions (VNNI), users will at least be able to take advantage of the inferencing speedup that comes with this initial instruction set. But like we said, for accelerated training, customers will have to look elsewhere.

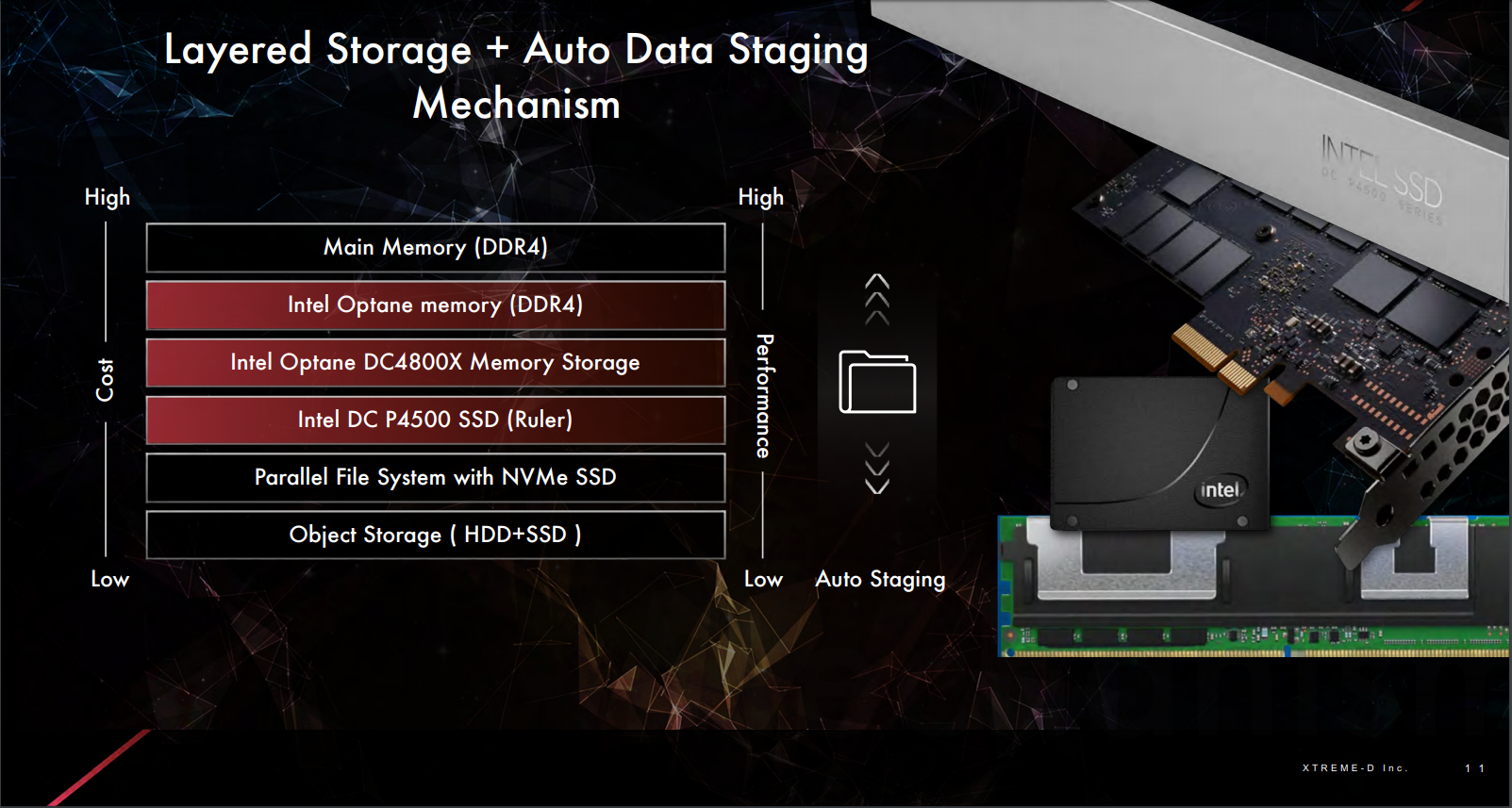

Cascade Lake will also bring with it the ability to access Intel’s 3D XPoint persistent memory modules, and XTREME-D has decided to offer them in concert with the Xeon upgrade. The initial rollout looks to be rather modest though, with plans to equip just four nodes with 768 GB of the new memory.

As you can see, Stargate has only limited elasticity at this point. The initial version tops out at 320 cores per user, which works out to just eight nodes. The upcoming upgrade will triple that to 960 cores.
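
The arithmetic behind those figures is easy to check. The short sketch below derives the implied cores per node from the stated 320-core, eight-node limit, then estimates the node count after the upgrade. The second step rests on an assumption the presentation did not confirm: that the upgraded nodes keep the same per-node core count.

```python
def nodes_needed(cores: int, cores_per_node: int) -> int:
    """Whole nodes required to supply `cores`, rounding up."""
    return -(-cores // cores_per_node)


initial_cores = 320  # per-user limit stated for the initial version
initial_nodes = 8    # node count stated for that limit

# Implied by the two stated figures: 320 / 8 cores per node.
cores_per_node = initial_cores // initial_nodes

# "Triple" the initial limit, per the stated upgrade plan.
upgraded_cores = 3 * initial_cores

# Assumption: the per-node core count is unchanged after the upgrade.
upgraded_nodes = nodes_needed(upgraded_cores, cores_per_node)

print(cores_per_node, upgraded_cores, upgraded_nodes)
```

Under that assumption the upgraded limit corresponds to 24 nodes per user. If the new processors carry more cores per socket, the node count would be lower.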

What it lacks in elasticity though, it makes up for in data management, which is clearly targeted to the kinds of AI and HPDA applications that the company seems most intently focused on. Although all the pieces are not yet in place, the infrastructure will eventually have six memory and storage layers, along with an automatic staging capability that moves the data between layers. The hierarchy is illustrated below.

The complete XTREME-D slide deck can be found here, courtesy of the HPC AI Advisory Council.
