The massive amounts of data being generated in enterprise datacenters and on the public clouds, and the need to quickly access and analyze that data, are putting a strain on the traditional storage and memory architectures that typically inhabit these environments.

For years, tech vendors have talked about the coming data-centric world and the need to adapt system architectures to ensure higher bandwidths and lower latencies. It was the driving force behind Hewlett Packard Enterprise’s development of The Machine, which was introduced in 2014 as a single system that would deliver new levels of performance and whose central feature is a huge pool of memory that could be drawn upon as needed rather than tied up in the system’s processors. And at the center of that are memristors, next-generation non-volatile memory components that had been theoretical until HP Labs researchers proved their existence ten years ago.

Memristors are essentially resistors with memory that can retain their stored data even when power is turned off, which helps with availability. Given their nature, memristors also can improve the scalability of machines. HPE isn’t the only organization working with memristors, as we have outlined here at The Next Platform. And while HPE last year rolled out a research prototype of The Machine with 160 TB of memory, it is armed with DRAM as the company continues to work on practical ways to put memristors to use.

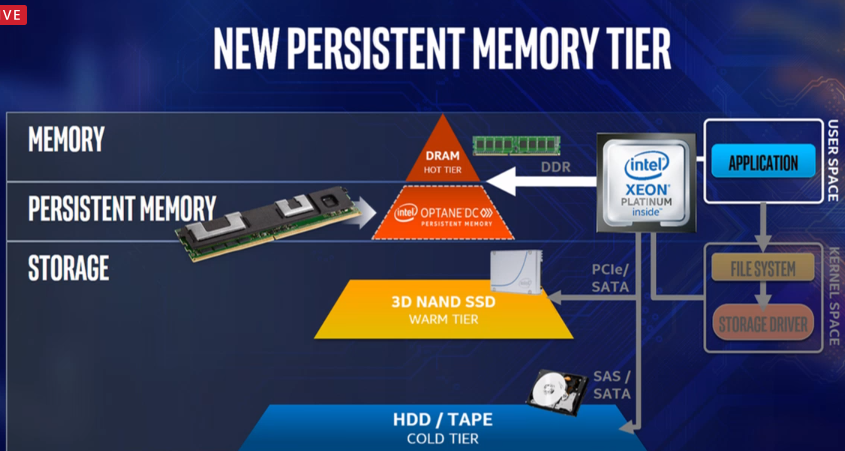

For the past two decades, Intel has expanded beyond PC and server processors and become a platform provider in almost every aspect of the datacenter, and we have known since May 2015 that it was working on what it now calls Optane DC persistent memory, a new non-volatile memory that will fit in the datacenter memory hierarchy between the volatile, high performance DRAM and non-volatile flash and disk. The goal of the persistent memory offering, which keeps the data intact when power is lost, is to drive availability – with reboot times dropping from minutes to seconds – and scalability at costs lower than DRAM.

The current memory-storage hierarchy in the datacenter, with its hot, warm, and cold layers of data access, is increasingly strained at a time when data is growing rapidly, according to Navin Shenoy, executive vice president and general manager of Intel’s fast-growing Data Center Group.

“In a world of ever-increasing amounts of data, this model is completely insufficient,” Shenoy said at Intel’s recent Data-Centric Innovation Summit at the chip maker’s Santa Clara campus. “The traditional DRAM layer is costly, it offers limited capacity for large datasets, and while the software developer community has been very creative in trying to engineer around memory, it’s not sufficient for the types of workload footprints that we see our customers demanding in the future. At the same time, that warm layer and that cold layer have many limitations in the speed of data transfer, the latency of data transfer and, inherently, hard drives and even tape drives still used in data centers limit efficiency in expanded views of how data is evolving.”

Optane DC enables 11X the write performance in Spark SQL over DRAM-only systems, as well as 9X better performance for read transactions and 11X the number of users per system, he said.

In the months leading up to the event, Intel had made strides with Optane DC persistent memory. In late May, the company announced it was making the technology available to developers via the cloud so programmers could test and develop applications for it. To continue to drive developer interest, Intel is offering awards to developers who come up with interesting ideas, proof points and use cases. Last month, Google announced it was working with Intel and SAP to develop virtual machines on the Google Cloud that would support the upcoming release of SAP HANA in-memory workloads with the Optane DC memory. The VMs will be powered by Intel’s upcoming 14 nanometer “Cascade Lake” Xeon processors, which are scheduled to be released later this year. The Optane DC modules were originally expected as the “Apache Pass” 3D XPoint memory DIMMs with the “Purley” server platform, which was expected to debut in late 2016 and was pushed out to July 2017.

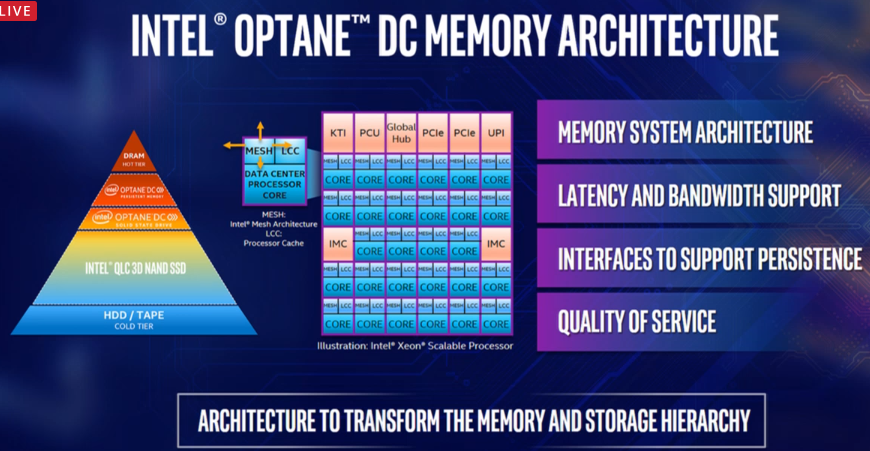

The chips will sport a new integrated memory controller that will enable Optane DC memory. The modules also are DDR4 pin-compatible, so they can be inserted into the current “Skylake” Xeon SP platforms with no modifications, according to Alper Ilkbahar, vice president and general manager of data center memory and storage solutions at Intel.

At the summit, Shenoy said the company is now in production of the Optane DC devices and began shipping the first ones to select customers – including Google – this week. The memory modules are being shipped in 128 GB, 256 GB and 512 GB sizes and include advanced security features, including hardware encryption.

In a nod to the platform approach Intel is taking in the datacenter, Shenoy and Ilkbahar spoke about the amount of innovation that had to go into not only developing the Optane DC modules but also other components and support ecosystems around them. Shenoy called the development of the Optane media itself “a breakthrough in material science and a fundamental technology. We had to revamp the integrated memory controller on the microprocessor. We had to develop the software and enable a big software and hardware ecosystem.”

There was a lot of architectural and design work needed for the CPUs, including the addition of the new memory controller, to optimize them for Optane DC.

“On the module itself, we have a lot of innovation,” Ilkbahar said. “We have media controllers that manage non-volatile memory at unprecedented memory and speed levels. There is no other memory device or storage device out there today that has these types of requirements that have to work at DDR speeds. At the platform level, we had to rearchitect our firmware, BIOS, Common Flash Interface, all of them had to be rearchitected so that we could reintegrate this new memory type within our platform. On top of that, when you look at the software stack that sits on this, there is a lot of innovation that went into that. To enable all the software innovation, we had to first invent a new programming model called Persistent Memory Programming Model. We made this an open standard and created libraries so that software vendors and partners had a very easy time adopting and developing software around this new technology.”
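The core idea behind a persistent memory programming model can be illustrated with a memory-mapped file: the application maps a region into its address space, updates it with ordinary in-place stores rather than read and write calls, and flushes so the data survives. The minimal sketch below uses Python's standard `mmap` module on a regular file as a stand-in; it is not Intel's library code, and the file name, `REGION_SIZE`, and `bump_counter` are hypothetical names chosen for illustration. On real persistent memory the mapping would sit on a DAX-capable file system and a library would handle the flushing.

```python
import mmap
import os
import struct
import tempfile

REGION_SIZE = 4096             # hypothetical region size: one page
COUNTER = struct.Struct("<Q")  # 8-byte little-endian counter stored at offset 0


def bump_counter(path: str) -> int:
    """Map the file, increment the counter in place, flush, and return the new value."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        # Size the backing file on first use; new bytes are zero-filled.
        if os.fstat(fd).st_size < REGION_SIZE:
            os.ftruncate(fd, REGION_SIZE)
        with mmap.mmap(fd, REGION_SIZE) as region:
            # Load and store directly against the mapped bytes.
            (value,) = COUNTER.unpack_from(region, 0)
            COUNTER.pack_into(region, 0, value + 1)
            # Push the update to the backing store before unmapping.
            region.flush()
            return value + 1
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pmem_stand_in.bin")
        first = bump_counter(path)
        second = bump_counter(path)  # a fresh mapping still sees the stored value
        print(first, second)
```

The second call opens a brand-new mapping and still finds the counter where the first call left it, which is the property that lets an application skip rebuilding its in-memory state after a restart.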

Current datacenter applications can work with the Optane DC modules, but to take advantage of all the features, some of the software will have to be rewritten, he said.

“The majority of the software or applications that run in the enterprise datacenter don’t access data directly,” Ilkbahar said. “They use middleware – a data access layer – that could be databases, that could be frameworks, and the applications that thousands and thousands of people write interface with these data access layers through standardized APIs. That’s why a long list of companies who are delivering some of the most popular data access layers are optimizing their code for Optane persistent memory so the applications don’t need to change. The applications will still see the same standard APIs; they can still access the data without any modifications. Just the software below it has moved.”

Shenoy said Intel already has addressed some of the gaps it sees in the memory-storage hierarchy with such products as the 3D XPoint memory developed with Micron and used in Optane SSDs, which he said offer lower latency and higher endurance than traditional SSDs.

Not quite.

“The chips will sport a new integrated memory controller that will enable Optane DC memory. The modules also are DDR4 pin-compatible, so they can be inserted into the current “Skylake” Xeon SP platforms with no modifications, according to Alper Ilkbahar, vice president and general manager of data center memory and storage solutions at Intel.”

Requires pulling Skylake and inserting a Cascade Lake processor. Skylake on Purley before Apache Pass a waste of money?

Mike Bruzzone, Camp Marketing

Not quite.

“The chips will sport a new integrated memory controller that will enable Optane DC memory. The modules also are DDR4 pin-compatible, so they can be inserted into the current “Skylake” Xeon SP platforms with no modifications, according to Alper Ilkbahar, vice president and general manager of data center memory and storage solutions at Intel.”

According to all Intel sources queried at Flash Memory Summit, to upgrade Purley ‘Lewisburg’ C62x chipset boards that by specification support Optane Apache Pass DIMMs, the Skylake processor must be pulled and the platform reintegrated with a Cascade Lake generation processor.

From a platform investment standpoint, what a financial waste Skylake Xeon has been ahead of Cascade Lake, which supports the Optane (and accommodates other NVMe DIMMs) intermediate memory tier. No modifications? Not quite.

Intel will undoubtedly offer a special customer deal for upgrading the Skylake financial waste to Cascade Lake, which also addresses the Meltdown and Spectre side channel attacks with a hard security fix. At a special price?

How convenient it must be for Intel to offer customers Cascade Lake with Optane DIMMs special sales fix, right as AMD moves to ramp Epyc and Rome.

There are two other pertinent questions. First, will Optane DIMMs work in earlier boards specified for Apache Pass? Likely, but it would not be the first time if not. Second, will customers actually upgrade Skylake to Cascade Lake? At any Intel special price?

The technical press, certainly a decade ago, would have been all over Intel for anything similar to this Skylake investment waste. All over Intel for offering a special fix right as AMD attempts to ramp its Epyc server market re-entry.

Why not today?

Mike Bruzzone, Camp Marketing