Databases and datastores are by far the stickiest things in the datacenter. Companies make purchasing decisions that end up lasting for one, two, and sometimes many more decades because it is hard to move off a database or datastore once it is loaded up and feeding dozens to hundreds of applications.

The second stickiest thing in the datacenter is probably the server virtualization hypervisor, although this stickiness is more subtle in its inertia.

The choice of hypervisor depends on the underlying server architecture, of course, but inevitably the management tools that wrap around the hypervisor and its virtual machines end up automating the deployment of systems software (like databases and datastores) and the application software that rides on top of them. Once an enterprise has built all of this automation with VMs running across clusters of systems, it is absolutely loath to change it.

But server virtualization has changed with the advent of the data processing unit, or DPU, and VMware has to change with the times, which is what the “Project Monterey” effort with Nvidia and Intel is all about.

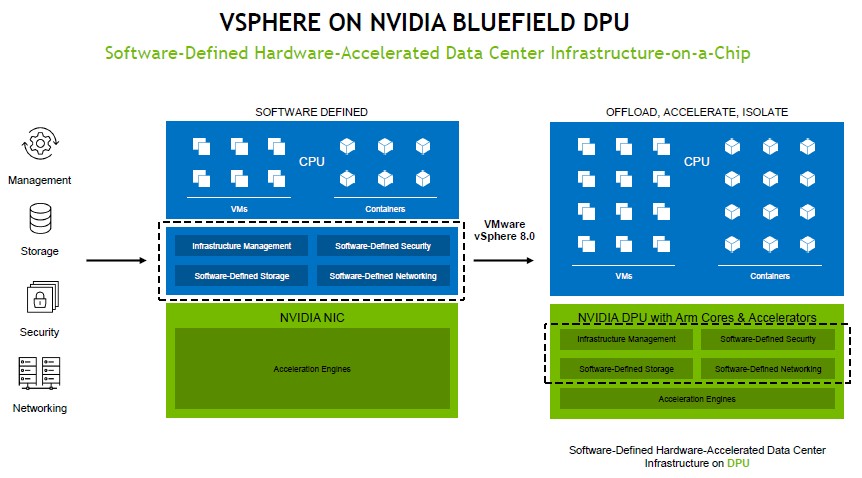

The DPU offload model enhances the security of platforms – particularly network and storage access – while at the same time lowering the overall cost of systems by dumping the network, storage, and security functions that would have been done on the server onto the DPU, thus freeing up CPU cores on the server that would otherwise have been burdened by such work. Like this:

Offload is certainly not a new concept to HPC centers, but the particular kind of offload the DPU is doing is inspired by the “Nitro” family of SmartNICs created by Amazon Web Services, which have evolved into full-blown DPUs with lots of compute of their own. The Nitro cards are central to the AWS cloud, and in many ways, they define the instances that AWS sells.

We believe, as do many, that in the fullness of time all servers will eventually have a DPU to better isolate applications from the control plane of the cluster that provides access to storage, networking, and other functions. DPUs will be absolutely necessary in any multi-tenant environment, but technical and economic benefits will accrue to those using DPUs on even single-node systems.

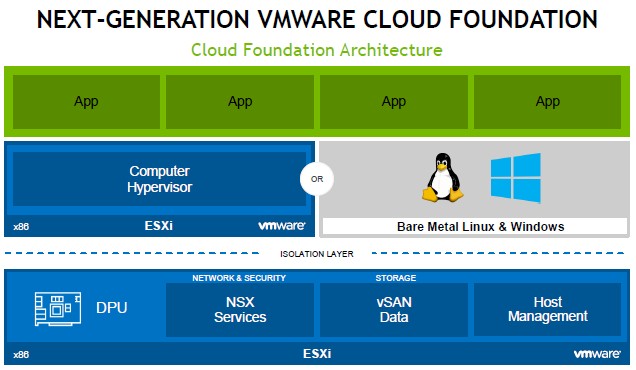

With the launch of the ESXi 8 hypervisor and its related vSphere 8 management tools, Nvidia and VMware have worked to get much of the VMware virtualization stack to run on the Arm-based cores of Nvidia's BlueField-2 DPU line, virtualizing the cores of the X86 systems that the DPU is plugged into. Conceptually, this next generation of VMware’s Cloud Foundation stack looks like this:

With the Nitro DPUs and a homegrown KVM hypervisor (which replaced a custom Xen hypervisor that AWS used for many years), AWS was able to reduce the amount of server virtualization code running on the X86 cores in its server fleet down to nearly zero. Which is the ultimate goal of Project Monterey as well. But as with the early Nitro efforts at AWS, shifting the hypervisor from the CPUs to the DPU took time and several steps, and Kevin Deierling, vice president of marketing for Ethernet switches and DPUs at Nvidia, admits to The Next Platform that this evolution will take time for Nvidia and VMware as well.

“I think it is following that similar pattern, where initially you will see some code running on the X86 and then a significant part being offloaded to the BlueField DPUs,” Deierling explains. “Over time, I think you will see more and more of that being offloaded, accelerated, and isolated to the point where, effectively, it’s a true bare metal server model where nothing is running on the X86. But today, there’s still some software running out there.”

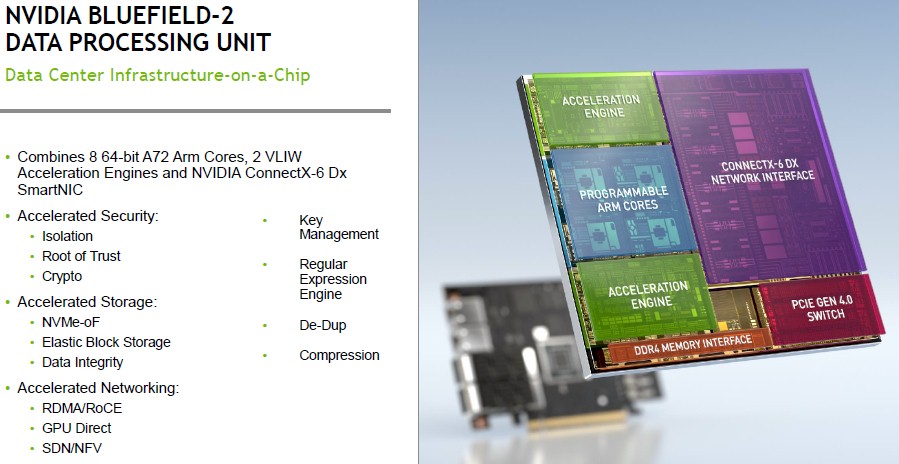

The BlueField-2 DPU includes eight 64-bit Armv8 Cortex-A72 cores for local compute as well as two acceleration engines, a PCI-Express 4.0 switch, a DDR4 memory interface, and a 200 Gb/sec ConnectX-6 Dx network interface controller. That NIC interface can speak 200 Gb/sec Ethernet or 200 Gb/sec InfiniBand, as all Nvidia and prior Mellanox NICs for the past many generations can. That PCI-Express switch is there to provide endpoint and root complex functionality, and we are honestly still trying to sort out what that means.

It is not clear how many cores the vSphere 8 stack is taking to run on a typical X86 server without a DPU or how many cores are cleared up by running parts of the vSphere 8 stack on the BlueField-2 DPU. But Deierling did illustrate the principle by showing the effect of offloading virtualized instances of the NGINX Web and application server from the X86 CPUs to the BlueField-2.

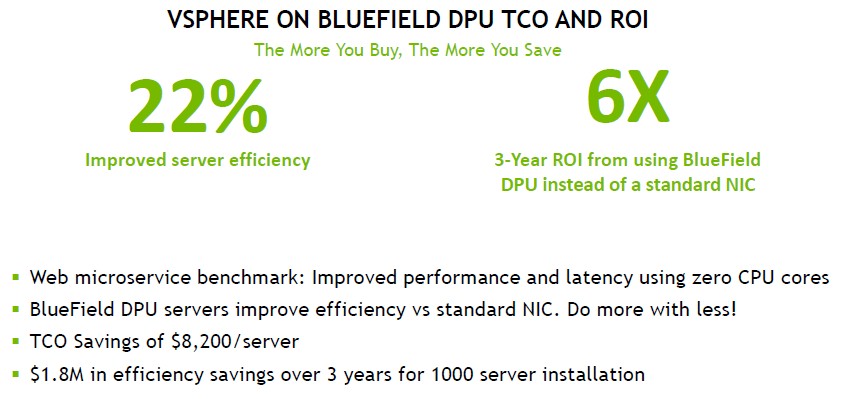

In this case, NGINX was running on a two-socket server with a total of 36 cores, and eight of those cores were running NGINX. Their work could be offloaded to the Arm cores on the BlueField-2 DPU, with various security and networking functions related to the Web server accelerated as well. The performance of NGINX improved and the latency of Web transactions dropped. Here is how Nvidia calculates the return on investment:

Deierling says that using the DPU offered a “near immediate payback” and made the choice of adding a DPU to systems “a no brainer.”

We don’t know what editions of the vSphere 8 stack – Essentials, Standard, Enterprise Plus, and Essentials Plus – are certified to offload functions to the BlueField-2 DPU, and we don’t know what a BlueField-2 DPU costs either. So it is very hard for us to reckon what the ROI of running virtualization on the DPU might bring specifically.

But even if the economics of the DPU were neutral – the cost of the X86 cores freed up was the same as the cost of the BlueField-2 DPU – it still makes sense to break the application plane from the control plane in a server to enhance security and to accelerate storage and networking. And while the benefits of enhanced security and storage and networking acceleration will be hard to quantify, they might even be sufficient for IT organizations to pay a premium for a DPU instead of just using a dumb NIC or a SmartNIC.
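To make that break-even condition concrete, here is a minimal sketch of the arithmetic. The 36-core server and the eight offloaded cores come from the NGINX example above; the per-core cost and the DPU price are placeholders we made up purely for illustration, since neither Nvidia nor VMware has disclosed pricing, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OffloadScenario:
    total_cores: int      # X86 cores in the host server
    offloaded_cores: int  # cores whose work moves to the DPU
    cost_per_core: float  # ASSUMPTION: hypothetical all-in cost of one X86 core
    dpu_cost: float       # ASSUMPTION: hypothetical price of one DPU

    def freed_fraction(self) -> float:
        """Share of host cores handed back to applications."""
        return self.offloaded_cores / self.total_cores

    def freed_core_value(self) -> float:
        """Value of the freed cores, which is also the break-even DPU price."""
        return self.offloaded_cores * self.cost_per_core

    def net_benefit(self) -> float:
        """Positive: the DPU pays for itself. Zero: economically neutral."""
        return self.freed_core_value() - self.dpu_cost


# 36 and 8 are from the NGINX example; both dollar figures are invented.
scenario = OffloadScenario(
    total_cores=36, offloaded_cores=8, cost_per_core=250.0, dpu_cost=2000.0
)
print(f"Cores freed: {scenario.freed_fraction():.1%}")
print(f"Break-even DPU price: {scenario.freed_core_value():.2f}")
print(f"Net benefit: {scenario.net_benefit():.2f}")
```

With these placeholder prices the scenario is exactly neutral, which is the case described above where any security and acceleration gains come on top of a wash on hardware cost. Real numbers would also have to account for software licensing, power, and the performance gains, none of which this sketch models.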

Here is one case in point that Deierling brought up. For many years, hyperscalers and cloud builders did not have security across the east-west traffic between the tens to hundreds of thousands of servers interlinked in their regions, which constitute their services. The DPU was invented in part to address this issue, encrypting data in motion across the network as application microservices chatter. A lot of hyperscalers and cloud builders as well as other service providers, enterprise datacenters, and HPC centers similarly are not protecting data in transit between compute nodes. It has just been too expensive and definitely was not off the shelf.

With Project Monterey, Nvidia and VMware are suggesting that organizations run VMware’s NSX distributed firewall and NSX IDS/IPS software on the BlueField-2 DPU on every server in the fleet. (The latter is an intrusion detection system and intrusion prevention system.) The idea here is that no one on any network can be trusted, outside the main corporate firewall and inside of it, and the best way to secure servers and isolate them when there are issues is to wrap the firewall around each node instead of just around each datacenter.
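The per-node firewall idea can be sketched in a few lines. This is not the NSX API or its rule format; the `Rule` type, the `evaluate` function, and the addresses are all hypothetical, and the sketch only illustrates the principle that every node evaluates its own default-deny policy, even for traffic that originates inside the datacenter.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Sequence


@dataclass(frozen=True)
class Rule:
    source: str     # CIDR block the rule applies to
    dest_port: int  # destination port on this node
    action: str     # "allow" or "deny"


def evaluate(rules: Sequence[Rule], src_ip: str, dest_port: int) -> str:
    """Return the action of the first matching rule; deny if none match."""
    for rule in rules:
        in_source = ip_address(src_ip) in ip_network(rule.source)
        if in_source and dest_port == rule.dest_port:
            return rule.action
    return "deny"  # zero trust: traffic is rejected unless explicitly allowed


# Hypothetical policy for one node: only the 10.0.1.0/24 tier may reach port 443.
node_policy = [Rule(source="10.0.1.0/24", dest_port=443, action="allow")]

print(evaluate(node_policy, "10.0.1.7", 443))  # peer in the allowed tier
print(evaluate(node_policy, "10.0.2.9", 443))  # another internal subnet
print(evaluate(node_policy, "10.0.1.7", 22))   # allowed tier, unlisted port
```

The second and third lookups are rejected even though they come from inside the corporate network, which is the point of wrapping the firewall around each node rather than around the datacenter as a whole.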

The NSX software can make use of the Accelerated Switching and Packet Processing (ASAP2) deep packet inspection technology that is embedded in the Nvidia silicon, which is used to offload packet filtering, packet steering, cryptography, stateful connection tracking, and inspection of Layer 4 through Layer 7 network services to the BlueField-2 hardware.
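Of the functions in that list, stateful connection tracking is probably the easiest to illustrate in software. The sketch below shows the general technique only, assuming a simple five-tuple flow key; it says nothing about how ASAP2 or NSX actually implement it in hardware, and the `FiveTuple` and `ConnectionTracker` names are hypothetical.

```python
from typing import NamedTuple, Set


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str

    def reply(self) -> "FiveTuple":
        """The flow key that a reply packet to this flow would carry."""
        return FiveTuple(
            self.dst_ip, self.dst_port, self.src_ip, self.src_port, self.protocol
        )


class ConnectionTracker:
    """Admits inbound packets only if they answer a recorded outbound flow."""

    def __init__(self) -> None:
        self._established: Set[FiveTuple] = set()

    def record_outbound(self, flow: FiveTuple) -> None:
        self._established.add(flow)

    def allows_inbound(self, flow: FiveTuple) -> bool:
        return flow.reply() in self._established


tracker = ConnectionTracker()
outbound = FiveTuple("10.0.1.7", 40000, "10.0.2.9", 443, "tcp")
tracker.record_outbound(outbound)

print(tracker.allows_inbound(outbound.reply()))  # reply to a known flow
print(tracker.allows_inbound(FiveTuple("10.0.3.3", 5555, "10.0.1.7", 40000, "tcp")))
```

A real tracker would also follow TCP state and expire idle entries; keeping that table and doing the lookup on every packet is exactly the kind of per-packet work that is cheaper in dedicated silicon than on general-purpose X86 cores.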

The first of the server makers out the door with the combined VMware stack and Nvidia BlueField-2 is Dell, which has certified configurations of its PowerEdge R650 and R750 rack servers and its VxRail hyperconverged infrastructure with the Nvidia DPUs and vSphere 8 preinstalled to offload as much work as possible to those DPUs. These systems will be available in November. Pricing is obviously not available now. Hopefully it will be when the systems start shipping so we can figure out the real ROI of DPU offload for server virtualization. The numbers matter here. In a way, the ROI will pay for enhanced security for those who have to justify the added complexity and cost. Those who want the enhanced security at nearly any cost won’t care as much about the DPU ROI. The trick for VMware and Nvidia is to price this low enough that it is indeed a no-brainer.
