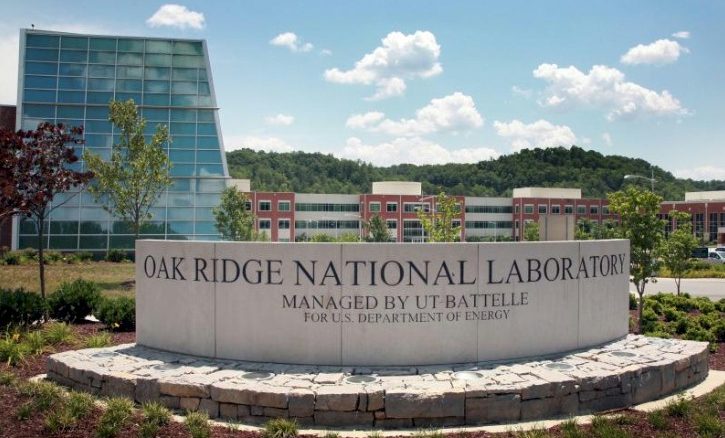

Oak Ridge Trials Arm-GPU Combo On HPC Testbed

The GPU has become a standard platform for accelerating high performance computing workloads, at least for those that have had their code tweaked to support acceleration at all. …

The choice of processors available for high performance computing has been growing for a number of years. …

If we could sum up the near-term future of high performance computing in a single phrase, it would be more of the same and then some. …

Like their US-based counterparts, Google and Amazon, the Chinese Internet giants Baidu and Alibaba rely on GPU acceleration to drive critical parts of their AI-based services. …

Nvidia has for years made artificial intelligence (AI) and its various subsets, such as machine learning and deep learning, a foundation of future growth, and it sees AI as a competitive advantage against rival Intel and a growing crop of smaller chip makers and newcomers looking to gain traction in a rapidly evolving IT environment. …

As GPU-accelerated servers go, Nvidia’s DGX-2 box is hard to beat. …

One thing that AMD’s return to the CPU market and its more aggressive moves in the GPU compute arena, along with Intel’s plan to create a line of discrete Xe GPUs that can be used as companions to its Xeon processors, have done is push Nvidia and Arm closer together. …

The datacenter business at Nvidia hit a rough patch a little less than a year ago, but it is starting to pick up again after a few quarters of declines and may soon be in positive growth territory. …

The image of Nvidia co-founder and CEO Jensen Huang pacing across the stage at a tech conference, talking about his company’s new markets for artificial intelligence and its latest partnerships with high-profile vendors, and looking to the near horizon for Nvidia’s next revenue stream has become a familiar one. …

It would be convenient for everyone – chip makers and those who are running machine learning workloads – if training and inference could be done on the same device. …

All Content Copyright The Next Platform