Lambda Snags $320 Million To Grow Its Rent-A-GPU Cloud

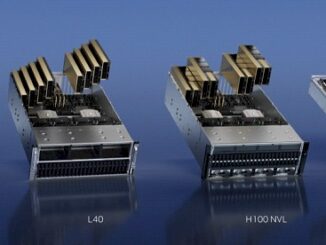

Riding high on the AI hype cycle, Lambda – formerly known as Lambda Labs and well known to readers of The Next Platform – has received a $320 million cash infusion to expand its GPU cloud to support training clusters spanning thousands of Nvidia’s top-specced accelerators. …