The world has gone nuts for generative AI, and it is going to get a whole lot crazier. Like $400 billion a year in GPU hardware spending by 2027 crazier.

If you don’t think this is an enormous amount of spending, consider that the US Department of Defense, which is one of the biggest spenders on Earth, has an $842 billion budget allocated for the 2024 government fiscal year, which ends next September.

Here is some more perspective. Gartner analysts casing the IT market reckon that in 2024 total IT spending, including hardware, software, IT services, and telecom services, will come to $5.13 trillion, up 8.8 percent year on year. Of this, only $235.5 billion is expected to be for datacenter systems – meaning all servers, all storage, and all switching sold across the entire planet. That is up 8.1 percent, largely, we think, thanks to the rapid adoption of AI clusters for generative AI, and despite some pretty serious declines in infrastructure spending for more generic serving in the datacenter.

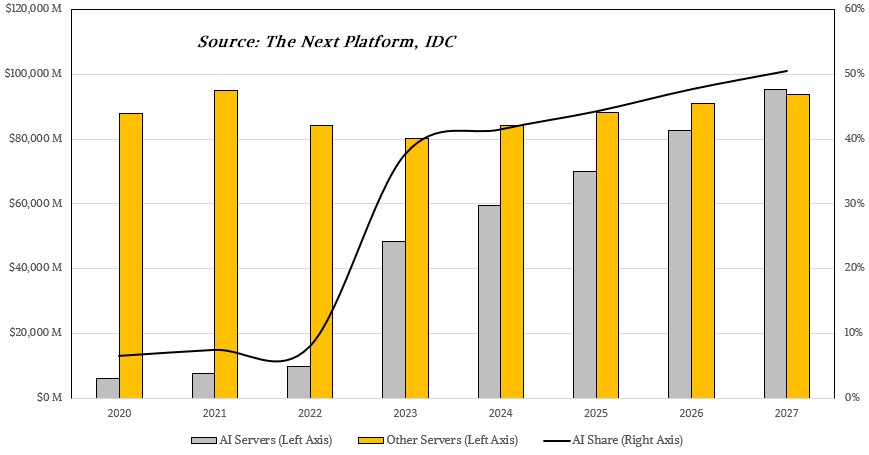
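To put the $400 billion figure in proportion, here is a back-of-the-envelope Python sketch that divides it by the budget and spending figures quoted above. Note that it compares a 2027 forecast against 2024 figures, so the ratios give a sense of scale rather than a like-for-like comparison; the helper name `ratio` is just illustrative.

```python
# Figures as quoted in the text, in billions of US dollars.
ACCEL_TAM_2027 = 400.0      # AMD's datacenter accelerator TAM forecast for 2027
DOD_BUDGET_FY2024 = 842.0   # US Department of Defense budget, FY2024
GARTNER_IT_2024 = 5130.0    # Gartner: total IT spending, 2024
GARTNER_DC_2024 = 235.5     # Gartner: all datacenter systems spending, 2024


def ratio(part: float, whole: float) -> float:
    """Return part as a fraction (or multiple) of whole."""
    return part / whole


print(f"vs DoD budget:          {ratio(ACCEL_TAM_2027, DOD_BUDGET_FY2024):.1%}")
print(f"vs all IT spending:     {ratio(ACCEL_TAM_2027, GARTNER_IT_2024):.1%}")
print(f"vs datacenter systems:  {ratio(ACCEL_TAM_2027, GARTNER_DC_2024):.2f}X")
```

That works out to roughly 47.5 percent of the DoD budget, 7.8 percent of all IT spending, and about 1.70X everything spent on datacenter servers, storage, and switching in 2024.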

We did some spreadsheet work back in October, based on server revenue projections from IDC, to try to figure out how much of that spending would be for AI clusters. That model showed that AI systems represented under $10 billion in system sales in 2022, would kiss $50 billion in 2023 after a tremendous spike, and would continue growing from that point to kiss $100 billion, about 50 percent of server revenues, by 2027. We thought this was crazy enough, to be quite blunt.
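The milestones in that October model imply a growth rate and a total server market size that take only a couple of lines to check. This Python sketch assumes the rounded figures given above ($50 billion in 2023, $100 billion in 2027, and a 50 percent AI share of server revenues in 2027); the `cagr` helper is a hypothetical name for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes start to end over the given years."""
    return (end / start) ** (1 / years) - 1


ai_2023, ai_2027 = 50.0, 100.0   # approximate AI system sales, $ billions
ai_share_2027 = 0.50             # assumed AI share of all server revenue in 2027

growth = cagr(ai_2023, ai_2027, 2027 - 2023)
total_servers_2027 = ai_2027 / ai_share_2027   # implied size of the whole server market

print(f"Implied AI server CAGR, 2023-2027: {growth:.1%}")
print(f"Implied total server revenue, 2027: ${total_servers_2027:.0f} billion")
```

After the 2023 spike, that is roughly 18.9 percent compound annual growth, and a whole server market of around $200 billion in 2027.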

If the prognostications made by AMD chief executive officer Lisa Su at the Advancing AI event in San Jose this week turn out to be true, then it looks like we are all going to have to go back and revise our AI models upwards again. Because Su says that the market for datacenter GPUs has reached critical mass, has undergone a fission explosion, and is now flooding the deuterium and tritium wrappings of a nuclear bomb with neutrons at the same time that tremendous economic pressures are going to create a secondary fusion reaction. (Would that all of this AI could solve the critical problem of generating electricity through fusion, which will be needed to power all of the AI systems that will be installed. It would not only help save the planet in many ways, but also let us reverse so much of the damage that has been done. And we could all make money doing the restoration.)

A year ago, when Su & Team were first hinting about what the MI300 family of GPUs might look like, the company looked at all of the market research out there, as well as its own pipeline, and reckoned that the total addressable market for datacenter AI accelerators was maybe on the order of $30 billion in 2023 and would grow at around 50 percent compound annual growth out to 2027, hitting more than $150 billion by that time.
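A quick compounding check shows how that earlier estimate cleared $150 billion. This sketch assumes the $30 billion base is for 2023 and that the 50 percent rate applies in each of the four years to 2027; the `project` helper is hypothetical.

```python
def project(base: float, rate: float, years: int) -> float:
    """Grow base at a constant compound annual rate for the given number of years."""
    return base * (1 + rate) ** years


# $30 billion in 2023, compounding at 50 percent a year through 2027.
tam_2027 = project(30.0, 0.50, 2027 - 2023)
print(f"Projected 2027 TAM: ${tam_2027:.1f} billion")
```

Since 1.5 to the fourth power is about 5.06, the $30 billion base grows to roughly $151.9 billion, which matches the "more than $150 billion" figure.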

“That felt like a big number,” Su said during her keynote, and we would have agreed, given what we knew then and know now about worldwide revenues for datacenter hardware overall from the likes of IDC and Gartner.

“However, as we look at what has happened over the last twelve months and the rate and pace of adoption that we are seeing across industries, across our customers, across the world, it’s really clear that the demand is just growing much, much faster,” Su continued. “So if you look at now, to enable AI infrastructure – of course, it starts in the cloud but it goes into the enterprise. We believe we will see plenty of AI throughout the embedded markets and into personal computing. We are now expecting that the datacenter accelerator TAM will grow more than 70 percent annually over the next four years to over $400 billion in 2027. So does that sound exciting to us as an industry? I have to say for someone like me who has been in the industry for a while, this pace of innovation is faster than anything I have ever seen before.”
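Working the new forecast backwards is a useful sanity check. Assuming the "next four years" run from 2023 to 2027, and treating "more than 70 percent" and "over $400 billion" as exact values even though both are really lower bounds, this Python sketch solves for the 2023 starting point that the forecast implies. The `implied_base` helper is a hypothetical name.

```python
def implied_base(target: float, rate: float, years: int) -> float:
    """Starting value that reaches target after compounding at rate for the given years."""
    return target / (1 + rate) ** years


# $400 billion in 2027, reached at 70 percent annual growth from 2023.
base_2023 = implied_base(400.0, 0.70, 2027 - 2023)
print(f"Implied 2023 starting TAM: ${base_2023:.1f} billion")
```

Because 1.7 to the fourth power is about 8.35, the forecast implies a 2023 starting point of roughly $47.9 billion, about 1.6X the $30 billion base that AMD was using a year earlier, if both inputs are taken at face value.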

Now, that was just the TAM for the datacenter part of the AI accelerator business – this does not include the TAM for edge and client AI hardware accelerators. AMD did not discuss what the TAM is for that broader silicon market. This number seems impossibly large given that IDC was forecasting only a few months ago that the whole server business would come to a little less than $200 billion by 2027.

If you do the math backwards and use a ratio that the GPU cost of an AI server is around 53 percent of the overall price – somewhere around $200,000 of a $375,000 bill of goods for a machine based on an eight-way Nvidia HGX GPU compute complex – then AI accelerator hardware should only be driving somewhere around $50 billion in GPUs in 2027, if our guesses about the server market projected by IDC and the split between AI and non-AI servers are correct. Clearly, someone needs to revise their estimates of how this will all play out, and we need to know what, precisely, AMD means by “datacenter AI accelerator.” It certainly means GPUs and NNPs, but it might mean a portion of CPU sales, too.
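The backwards math in the paragraph above can be written out as a short Python sketch. It uses the approximate $200,000 GPU cost and $375,000 system price for an eight-way HGX machine, and it assumes the roughly $100 billion in 2027 AI server sales from the October model described earlier; the variable names are illustrative.

```python
gpu_cost = 200_000       # approximate GPU complex cost in an eight-way HGX server, $
system_cost = 375_000    # approximate total bill of goods for that server, $
gpu_share = gpu_cost / system_cost   # fraction of the system price that goes to GPUs

ai_server_revenue_2027 = 100e9       # assumed ~$100 billion in AI server sales in 2027
gpu_spend_2027 = ai_server_revenue_2027 * gpu_share

print(f"GPU share of system price: {gpu_share:.1%}")
print(f"Implied 2027 GPU spend: ${gpu_spend_2027 / 1e9:.0f} billion")
```

The GPU share comes out to about 53.3 percent, and the implied 2027 GPU spending to about $53 billion, which is the "around $50 billion" cited above.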

In any event, what AMD said is that growth is faster than planned and the revenue run rate for accelerators is going to be 9X higher than we would have estimated only a few months ago.

We think this definitely qualifies as crazy. And assuming that the pricing on GPUs and other accelerators comes down as HBM memory prices come down, it means that volumes are going to be truly huge if this all plays out the way Team Su is anticipating. And with this kind of growth, there is plenty of room for competition and profit for many vendors.

I think I’ve got it: 8^b

⟨φ(deuterium)| f(fission) |ψ(tritium)⟩ → neutrinos + ⟨φ(economic)| g(fusion) |ψ(pressure)⟩ = ‖market critical mass‖²

“If you do the math backwards and use a ratio that the GPU cost of an AI server is around 53 percent of the overall price”: that matches my data from May of this year, which I also cited in my Nvidia Q3 channel and financial data. Is anyone else touting this a confirmation or a copy? Only $50 billion in units? Are volumes unreported? mb

No, I did my own math on the cost of a clone of a DGX and what I think the HGX compute complex is.

Tim, then we both came to the same conclusion: 54% cost and 46% margin. I began with a total revenue/total cost assessment for RTX cards using my quarterly channel supply data across the risk, ramp, peak, run-down, and run-end phases, which seems precise for Nvidia and AIB offerings.

I relied on a set card price, that is, MSRP, to determine marginal cost. I do have the change in actual quarterly retail price recorded by PC Builder and HUB, so I could refine this further, in particular on the RDNA II and Ampere 2023 production tails. The RDNA II run-end card sale price confirms AIB card cost, so I had less incentive to do the full model; you can see AMD AIB card cost in the run-end price.

The channel ramp data, measuring the change in quantity, and the production metric over five quarters are 15.5%, 19.6%, 32.8%, 20.4%, and 11.5%, which gives approximately the GPU and/or dGPU plus kit component price for a card at MSRP, revealing MC = MR = P at the last unit of production. That last unit of card production is very near my dGPU component cost per mm² from TSMC to AMD and Nvidia in my channel and financial data, so the dGPU kit price is revealed.

I looked into this amid all the gamer whining about price gouging, and it was clear that entering 2023, AMD and Nvidia moved from 3rd-degree to 1st-degree MSRPs, a move the production chain led all through 2022 by rejecting 3rd-degree MSRPs in favor of application-competitive pricing.

I then took this production aim, albeit for cards (and there are also price supports that lift consumer card margins on premium features), and applied that cost:price/margin framework to systems. I started with one eight-GPU DGX, rejected the traditional Intel CPU 20% value rule, and also rejected the dGPU subsystem at 30%. The more I worked the whole system model, the more it appeared the production chain had agreed on 54% cost and 46% margin. And for cards, add a bit more margin on top-shelf products supporting a premium feature set, which pulls total category margin up and/or subsidizes mid-shelf price reductions.

While I started with cards and applied that framework to systems, it appears to me this was the square deal the production chain demanded: a minimum 46% margin.

mb
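The cost and margin split discussed in these comments is simple to express in code. This Python sketch shows how a 54 percent cost share corresponds to a 46 percent gross margin, and how a selling price is backed out of a unit cost at a target margin. The helper names and the $540 unit cost are hypothetical, for illustration only, and are not data from either commenter.

```python
def price_for_margin(unit_cost: float, gross_margin: float) -> float:
    """Selling price at which gross_margin (a fraction of price) is earned on unit_cost."""
    if not 0 <= gross_margin < 1:
        raise ValueError("gross_margin must be in [0, 1)")
    return unit_cost / (1 - gross_margin)


def gross_margin(price: float, unit_cost: float) -> float:
    """Gross margin as a fraction of the selling price."""
    return (price - unit_cost) / price


cost = 540.0                             # hypothetical unit cost, $
price = price_for_margin(cost, 0.46)     # cost ends up as 54% of price
print(f"price = ${price:.2f}, margin = {gross_margin(price, cost):.0%}")
```

A $540 unit cost at a 46 percent margin implies a $1,000 price, and cost divided by price gives back the 54 percent cost share.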