It is beginning to look like AMD’s Instinct datacenter GPU accelerator business is going to do a lot better in 2024 than many had expected, and better than the company’s initial forecast from October anticipated. But make no mistake: the GenAI boom cannot yet make up for the ongoing slump in server spending, which will likely continue through the first half of 2024.

AMD has not really made much money in datacenter GPUs so far, except for the “Frontier” supercomputer at Oak Ridge National Laboratory and the several chip-off-the-Frontier-block knockoffs based on the “Aldebaran” MI250X series. That work gave AMD a step function in credibility for GPU compute, but here at the beginning of 2024, AMD has made sufficient progress in its HPC and AI software stacks, and has sufficiently competitive designs in the “Antares” MI300 series – including both the MI300A hybrid CPU-GPU device and the MI300X all-GPU device – that it can absolutely wax up its GPU board and surf the GenAI wave right into the largest datacenters of the world.

And that is exactly what we expect AMD to do.

But it is important to not get too excited, or to think that AMD can actually make a dent in the beast that is Nvidia.

Even if AMD is able to crank out Antares chips, secure enough HBM3 memory from Samsung and SK Hynix to rapidly expand its GPU output, and boost its manufacturing and testing capacity to make sure everything that ships works perfectly, AMD’s GPU compute revenues will still be gated by the availability of CoWoS 2.5D packaging from foundry and packaging partner Taiwan Semiconductor Manufacturing Co and by the overall supply of HBM3 memory.

Keep this in mind as we review AMD’s numbers for the fourth quarter of 2023 and look ahead to what 2024 might look like. Let’s dig in.

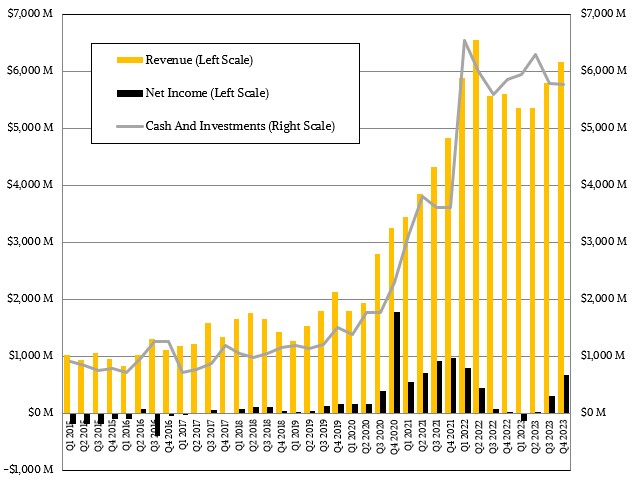

In the final quarter of 2023, AMD had $6.17 billion in revenues, up 10.2 percent year on year and up 6.3 percent sequentially, which was pretty good given the softness in the PC market that AMD still depends upon and the server recession in the datacenter – outside of AI servers of course, which are booming. The company posted $667 million in net income, a very big shift from the mere $21 million it made in the year ago quarter, and that net income represented 10.8 percent of revenues, which is at the low end of a good quarter for the company but certainly nowhere near its peak.
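As a quick sanity check on the arithmetic, here is a minimal Python sketch that recomputes net income as a share of revenue and backs out the year-ago revenue implied by the 10.2 percent growth rate. It uses only the rounded figures quoted above, so the implied Q4 2022 number is an approximation derived from those figures, not AMD’s reported value.

```python
# Q4 2023 figures as quoted in the text, in millions of US dollars.
revenue_q4_2023 = 6_170
net_income_q4_2023 = 667
yoy_growth_pct = 10.2


def share_pct(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value implied by a percentage change."""
    return current / (1 + growth_pct / 100)


net_margin_pct = share_pct(net_income_q4_2023, revenue_q4_2023)
revenue_q4_2022 = implied_prior(revenue_q4_2023, yoy_growth_pct)

print(f"Net margin: {net_margin_pct:.1f}%")
print(f"Implied Q4 2022 revenue: ${revenue_q4_2022:,.0f} million")
```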

The reason profits are not higher is that the devices AMD is making are based on the most advanced TSMC processes and packaging and, in the case of the MI300A hybrid device that is at the heart of the 2 exaflops “El Capitan” supercomputer at Lawrence Livermore National Laboratory, these devices were sold at the cut-throat discounts that the US government can command. (Those discounts are substantially deeper than what even the hyperscalers and cloud builders can get away with, and are probably close to 50 percent off list price.) Even with that, AMD has been able to make some money in the datacenter business.

But, again, nothing extraordinary even if it is on par with some good quarters that AMD turned in during 2022.

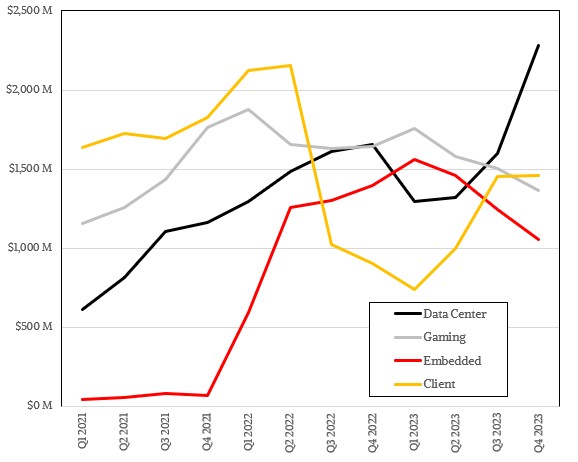

In Q4 2023, AMD’s Data Center group had $2.28 billion in revenues, up 37.9 percent year on year, and operating income growth outpaced that revenue growth (which is what you want to see), rising 50 percent to $666 million. (Round that number up or down please, it’s ominous.) Sequentially, Data Center revenues were up 42.8 percent and operating income was up 117.6 percent (which is also what you want to see in a Q3 to Q4 jump). Operating income as a share of revenue was 29.2 percent, which is nearly 10 points higher than in Q3 and nearly 20 points higher than in Q1 and Q2 of last year, which is fine. But to keep it real, across all of 2022 an average of 30.8 percent of Data Center revenues came down to operating income, and each quarter was pretty close to that average.
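When operating income grows faster than revenue, the operating margin expands. A minimal sketch of that, assuming the rounded growth rates in the paragraph are precise enough to back out the Q4 2022 and Q3 2023 figures (these are derived approximations, not AMD’s reported prior-period numbers):

```python
# Q4 2023 Data Center group figures from the text, in millions of US dollars.
revenue = 2_280
operating_income = 666

# Growth rates quoted in the text, in percent.
revenue_yoy, income_yoy = 37.9, 50.0
revenue_qoq, income_qoq = 42.8, 117.6


def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value implied by a percentage change."""
    return current / (1 + growth_pct / 100)


def op_margin_pct(income: float, sales: float) -> float:
    """Operating income as a percentage of revenue."""
    return 100 * income / sales


margin_q4_2023 = op_margin_pct(operating_income, revenue)
margin_q4_2022 = op_margin_pct(implied_prior(operating_income, income_yoy),
                               implied_prior(revenue, revenue_yoy))
margin_q3_2023 = op_margin_pct(implied_prior(operating_income, income_qoq),
                               implied_prior(revenue, revenue_qoq))

for label, m in [("Q4 2022", margin_q4_2022), ("Q3 2023", margin_q3_2023),
                 ("Q4 2023", margin_q4_2023)]:
    print(f"{label}: {m:.1f}% operating margin")
```

The Q3 to Q4 gap works out to roughly 10 points, consistent with the “nearly 10 points” in the text.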

The cost of advancing CPU and GPU designs and getting them made was relatively high in 2023, and now, hopefully, AMD has mastered things and it can wring some higher profits out of its current products and leverage its expertise on future “Turin” Zen 5 Epyc CPUs coming later this year and future Instinct MI400 GPUs expected next year.

AMD’s Client group is recovering but is still nowhere near the levels seen in late 2021 and early 2022 before the PC market collapsed. Revenues were $1.46 billion, up 61.8 percent, and operating income was a mere $55 million, a swing back to profit from an operating loss of roughly $152 million a year earlier (a naive percent-change calculation reports this as down 136.2 percent). Gaming group revenue took it on the chin, down 16.8 percent to $1.37 billion, and operating income fell in concert, down 15.8 percent to $224 million. The Embedded group, dominated by the Xilinx FPGA product lines, also took a hit, down 24.3 percent year on year to $1.06 billion in sales, with operating income down 34 percent to $461 million.
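A year-on-year change of minus 136.2 percent on a positive current value only makes sense if the year-ago value was negative. A minimal sketch that backs out the year-ago Client operating income implied by that rate and shows why the conventional percent-change formula misreads a move from loss to profit:

```python
def pct_change(current: float, prior: float) -> float:
    """Conventional percentage change. Misleading when prior is negative."""
    return 100 * (current - prior) / prior


def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value implied by a percentage change."""
    return current / (1 + growth_pct / 100)


# Client group operating income in Q4 2023, in millions of US dollars.
client_income_now = 55
# A "down 136.2 percent" change implies a negative (loss) year-ago value.
client_income_prior = implied_prior(client_income_now, -136.2)

print(f"Implied Q4 2022 Client operating income: ${client_income_prior:,.0f} million")
print(f"Naive change: {pct_change(client_income_now, client_income_prior):.1f}%")
print(f"Actual swing: +${client_income_now - client_income_prior:,.0f} million")
```

The dollar swing (about $207 million of improvement) is the more meaningful number whenever the base period is a loss.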

Imagine if AMD had not started to reinvigorate its datacenter CPU business back in 2017 and had not really gotten on track with competitive GPUs back in 2019. . . .

To be absolutely fair, the Data Center group was about 2.5 percent of AMD’s revenue back in 2015 when we started re-tracking AMD as it was talking about its datacenter aspirations. That jumped to around 15 percent of revenues with the ramp of the “Naples” Epyc CPUs in 2018, and then the addition of the “Rome” and “Milan” server CPUs pushed that ratio up to 25 percent, give or take. And with the MI300 launch and the addition of GPUs and the continuing softness of PC and gaming chips, Data Center rose to 37 percent of AMD’s overall revenues in Q4. But we don’t think this is normal. With all of its markets firing on all cylinders, we think it is possible for Data Center to reach more than an $11 billion annual run rate and eventually be about a third of a $34 billion company.
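The share figures here are simple ratios. A minimal sketch comparing the Q4 2023 share, a naive annualized run rate (multiplying a single quarter by four, which is a simplifying assumption for illustration, not a forecast), and the long-run scenario of $11 billion out of $34 billion:

```python
def share_pct(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


# Q4 2023 actuals from the text, in billions of US dollars.
dc_q4_2023, total_q4_2023 = 2.28, 6.17
q4_share = share_pct(dc_q4_2023, total_q4_2023)
dc_run_rate = 4 * dc_q4_2023  # naive annualization of one quarter

# The article's long-run scenario, in billions of US dollars per year.
scenario_share = share_pct(11.0, 34.0)

print(f"Q4 2023 Data Center share: {q4_share:.0f}%")
print(f"Q4 2023 annualized Data Center run rate: ${dc_run_rate:.2f} billion")
print(f"Scenario share: {scenario_share:.0f}%")
```

On this naive basis, Data Center was running at roughly $9.1 billion a year in Q4, so the $11 billion scenario implies further growth, while its share of the company would drop back toward a third as PC and gaming recover.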

While AMD’s Data Center group is doing well considering all of the complexities and costs, it is important to remember that AMD needs all of its groups to do well for the whole company to do well.

What is clear is that the Data Center group will be carrying the other groups here in 2024, and there is no question in our minds that its revenues will grow thanks to the MI300 ramp and that server spending outside of AI will start to pick up in the second half of the year. There are lots of machines out there in the hyperscalers and cloud builders that are five years old, and these will be replaced with shiny new machinery with top end, high bin CPUs because that is the best way to manage a fleet if you keep gear around for longer.

The question we have is whether the hyperscalers and cloud builders are sitting by, waiting for the Turin CPUs to do their buying in the second half as they conserve their cash for Instinct MI300X acquisitions in the first half. That’s what we would do.

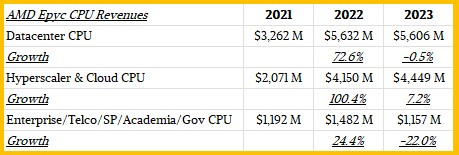

It is unfortunate in a way for the Epyc server CPU line that the GenAI revolution happened just as the “Genoa” Zen 4 generation, including the “Bergamo” and “Siena” variations, was coming to market, because GenAI stalled server upgrades among the hyperscalers, cloud builders, service providers, and large enterprises of the world. Here is how we think Epyc server sales did in the past three years, by industry:

AMD still has work to do to get OEMs to sell more Epyc chips into the enterprise. Whatever Intel is doing here, it is working. Whatever AMD is doing among the hyperscalers and cloud builders to sell Epycs, it is working. We think it is competing on throughput and price, plain and simple, and that enterprises have not yet got the memo. There is a lot of inertia in the enterprise when it comes to server buying, for better or worse.

From a financial standpoint, what matters most in terms of revenue growth for AMD’s Data Center group is how the MI300 series will do this year.

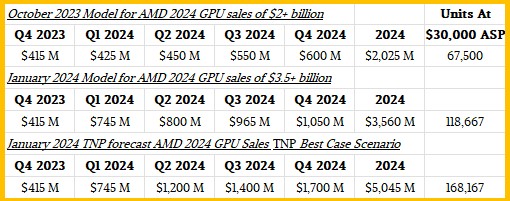

Back in October, AMD chief executive officer Lisa Su told Wall Street that the company expected datacenter GPU sales to be in excess of $2 billion in 2024, and that it expected GPU revenues of $400 million in Q4 2023 thanks to initial MI300 sales for El Capitan and to a few hyperscalers and cloud builders who were getting early access.

During the call going over Q4 2023, Su said that AMD did better than $400 million in datacenter GPU sales, and raised the forecast for 2024 to be above $3.5 billion in revenue. The table below shows our model for the distribution of that revenue on the two forecasts:

We also added in what we think is the probable best case scenario for AMD’s GPU sales, and a whole bunch of us chatting this morning think that $5 billion or more is probably a better guesstimate for what AMD might be able to do in GPU sales in 2024. It is not so much that AMD is low-balling this, it is that demand is higher than expected, people are more ready with their software than expected, and the supply of components is better than expected. These are good kinds of changes, and we think AMD will be even more pleasantly surprised for 2024 than it is now expecting.

With that, Nvidia’s GPU sales, if they stabilize at around $11.5 billion a quarter on average in 2024, will be just shy of an order of magnitude larger than what AMD is doing.
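The “order of magnitude” comparison depends on which AMD number you use. A minimal sketch, taking the roughly $11.5 billion per quarter Nvidia figure as the assumption stated above (not a reported number) and comparing it against the three AMD scenarios discussed earlier:

```python
# The article's assumption: Nvidia GPU sales of about $11.5 billion per quarter.
nvidia_annual = 4 * 11.5  # billions of US dollars

# AMD 2024 datacenter GPU revenue scenarios discussed above, in billions.
amd_scenarios = {
    "October forecast": 2.0,
    "January forecast": 3.5,
    "Best-case guesstimate": 5.0,
}

ratios = {name: nvidia_annual / amd for name, amd in amd_scenarios.items()}
for name, ratio in ratios.items():
    print(f"{name}: Nvidia is {ratio:.1f}x AMD")
```

Only the $5 billion best case lands “just shy” of 10X (about 9.2X); against AMD’s own $3.5 billion forecast, Nvidia would be roughly 13X larger.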

AMD has a long, long way to go to take a significant bite out of Nvidia. But make no mistake: AMD definitely has Nvidia by the ankle, and it is chomping.

Thanks for a well detailed article with supporting evidence and a bold call. Nice to see decisiveness while seeing all sides. I think you nailed THE one reason the stock is only down 2% post-earnings, which were mainly viewed as negative at the headline level. Looking under the surface is always where the truth of the matter lies.

El Capitan is far larger than Frontier and being outfitted with MI300X and MI300A units in large quantities. We already know Meta, Microsoft, Oracle and many more are adding these to their cloud clusters.

“With that, Nvidia’s GPU sales, if they stabilize at around $11.5 billion a quarter on average in 2024”.

Where does this come from? It is expected to be much higher. Nvidia 2023 Q4 DC sales will be $18-20B. Guidance for 2024 is “a substantial increase in supply”, Jensen just said “this year will be huge”.

That’s just the GPUs–no networking, no servers, no cables, no systems software, no support. Just the GPUs.

And if Nvidia sells even more high end GPUs and generates even more revenues, it only proves my point further. AMD is not going to make a huge dent in Nvidia, but it will build a very nice business for itself. Perhaps faster than anyone expects.

re: MI300, I believe the back half of the year is more loaded than your model shows. My take on the con call was that very few units are in customers’ hands, really at qualification levels only, so I’m not sure how you’re arriving at $415M in Q4 23? That’s about 18K units of MI300-level product in Q4, or perhaps on the order of 3x that (50K+) in MI2xx-class product. There is little evidence those exist in the market. On the call, AMD’s comments were closer to “prepared to ramp” as opposed to “shipping production” units. My sense is the 1H of the year will account for maybe 25% of the annual units, with a steeper ramp in the back half, perhaps something like 30% in Q3 and 45% in Q4. This is informed by the forecast decline in Q1 revenue.
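The unit math in this comment can be reproduced directly. A minimal sketch using the commenter’s own figures; applying the 25/30/45 unit split to AMD’s $3.5 billion forecast is a hypothetical simplification that assumes a constant selling price across the year, so unit shares map straight onto revenue shares:

```python
# Commenter's figures: Q4 2023 GPU revenue and estimated MI300-class units.
q4_gpu_revenue = 415e6   # US dollars
mi300_units = 18_000
implied_asp = q4_gpu_revenue / mi300_units  # average revenue per unit

# Commenter's proposed 2024 unit split across periods.
unit_split = {"1H 2024": 0.25, "Q3 2024": 0.30, "Q4 2024": 0.45}

# Hypothetical simplification: a constant selling price, so unit shares
# map directly onto revenue shares of AMD's raised $3.5 billion forecast.
annual_forecast = 3.5e9
revenue_by_period = {p: s * annual_forecast for p, s in unit_split.items()}

print(f"Implied average price: ${implied_asp:,.0f} per unit")
for period, dollars in revenue_by_period.items():
    print(f"{period}: ${dollars / 1e9:.3f} billion")
```

The 18K-unit figure implies an average of a little over $23,000 per MI300-class device, and the back-loaded split would put about $1.6 billion of the $3.5 billion in Q4 2024 alone.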

Overall it was a positive for AMD, and they are optimistic about their opportunity.

The $400M-ish jump in DC revenue was a one-time payment for the El Capitan supercomputer announced back in 2019. That counts as HPC revenue, not AI. All public AMD guidance so far supports only sample quantities being available for evaluation purposes. Volume deliveries aren’t expected until 2024 H2.

I think the GPU portion was more like $275 million, as I explained in the comment to EC.

The Q4 2023 number is pretty solid. They guided to $400 million back in October, and they said they beat that, and I think it was due to higher yields being matched to higher interest. Most of that is El Capitan. The machine costs $500 million, with probably $100 million in non-recurring engineering costs. Another $50 million or so for storage. Another $25 million for networking. We’re down to $325 million for the compute, most of which (but not all) is GPU. We don’t know how many GPUs El Capitan has. My guess is it will be 2.3 exaflops peak, and my guess back in November was for 23,500 MI300A engines. Call it $276.25 million for the GPUs, at a stunningly small $11,755 per GPU. That leaves $138.8 million in MI300 GPUs to come up with. Oh, that would be only 4,625 GPUs at a cost of $30,000 a pop.
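The El Capitan back-of-the-envelope above can be laid out step by step. A minimal sketch in which every input is one of the estimates in this reply; the $415 million total is the Q4 2023 figure cited in the earlier comment, and the GPU fraction of compute spend is derived from the $276.25 million figure rather than stated outright:

```python
# All figures in millions of US dollars; each is an estimate from the reply.
system_cost = 500
nre, storage, networking = 100, 50, 25
compute = system_cost - nre - storage - networking   # spend left for compute

gpu_spend = 276.25                      # GPU portion of compute spend
gpu_fraction = gpu_spend / compute      # share of compute spend that is GPU
mi300a_count = 23_500                   # the November guess at engine count
price_per_gpu = gpu_spend * 1e6 / mi300a_count

q4_gpu_revenue = 415                    # Q4 2023 figure from the earlier comment
remaining = q4_gpu_revenue - gpu_spend  # left for other MI300 customers
other_gpus = remaining * 1e6 / 30_000   # at an assumed $30,000 each

print(f"Compute budget: ${compute} million, {gpu_fraction:.0%} of it GPU")
print(f"Price per MI300A: ${price_per_gpu:,.0f}")
print(f"Remaining: ${remaining:.2f} million, about {other_gpus:,.0f} GPUs at $30,000")
```

Laid out this way, the $276.25 million figure corresponds to 85 percent of the $325 million compute budget going to GPUs.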

Given how this is a lot of HPC usage, you are right that shipped is not the same as revenue recognition. I was looking at how much could ship and what is the revenue on the invoice for that shipment. Things could slip a quarter or two if there are HPC-style acceptances. I think the hyperscaler and cloud builders are probably prepaying for their GPUs, and that should pull some revenue forward in a wash.

I dunno. It’s all conjecture. The point is AMD said $2 billion, raised to $3.5 billion, and many of us think (independently) that it is $5 billion.

MI300 sales have a lot to do with excess demand Nvidia can’t supply. Certainly there will be some purchases to “kick the tires”, so to speak (costing hundreds of millions of dollars per kick), but the H200 will always be preferred to the MI300 for AI. Since AMD’s ramp is slow there is a real question whether people want to commit to production on a platform rather than waiting 6 months for availability of their first choice. And it’s not like buying systems based on AMD will put you 6 months ahead in that case. The AMD systems contain many more unknowns and will take much longer to get set up and running smoothly. I don’t think it’s certain that sales for the MI300 will take off beyond the “kick the tires” sales. It would take a severe shortage in Nvidia chips for it to take off.

“How The “Antares” MI300 GPU Ramp Will Save AMD’s Datacenter Business”

Did you mean the Datacenter Accelerator (Compute/AI/Whatever) business, since Epyc CPUs do not appear to be an issue there? And really, MI300 comes in the MI300A variant, which is a Data Center APU/Exascale APU (CPU cores and GPU “cores” on the module), and the MI300X, which is all GPU on the module.

Well, I meant what I said. The CPUs are in recession. So the GPUs are going to fill in the gap and then some going forward, and very likely offer higher profits at some point, too.

Nvidia’s hardware roadmap is very constrained by its essentially monolithic architecture.

We have seen how this pans out in the DC CPU market – Intel has been swamped by rapidly evolving Epyc.

As the adage goes, DC clients buy roadmaps, not chips. They may be meh about MI300, but feel MI500 will far outcompete its contemporary from a monolithic-constrained Nvidia.

Exactly.

But I wouldn’t count Nvidia out just yet.

“AMD definitely has Nvidia by the ankle, and it is chomping.”

That is a hilarious line. Great start to the morning.

You’re welcome.

How many plays in a football game are stopped by one ankle grab?