The European Union has never been willing to cede the exascale computing race to the United States, Japan, or China.

In recent years, Europe has ramped up its investments in the HPC space through programs such as Horizon 2020, an effort to grow R&D in Europe; EuroHPC, which aims to drive the development of exascale systems; and the Partnership for Advanced Computing in Europe (PRACE), which aims to develop a distributed supercomputing infrastructure that will be accessible to researchers, businesses, and academic institutions throughout the EU. Another effort, the SAGE project, will create a multi-tiered storage platform for data-centric exascale computing that enables data to move easily between the tiers.

Through such projects, the EU aims to become a top HPC player by 2020. That means not only building faster, more powerful supercomputers but also driving the growth of the region’s own high-tech industry, so that European companies can design and build many of the components that go into these systems. That would reduce the EU’s dependence on US companies and bolster its own economies.

There is also a lot of work going on in European countries that aren’t in the EU or, in the case of the United Kingdom, are looking to get out of it. The Piz Daint supercomputer, a Cray system with more than 361,000 Intel Xeon E5-2690 v3 cores, Nvidia Tesla P100 accelerators, and a performance of 19.6 petaflops, is the world’s third-fastest supercomputer, according to the Top500 list, and is housed at the Swiss National Supercomputing Centre (CSCS). Switzerland is not an EU member, either. And as we reported here at The Next Platform earlier this week, Hewlett Packard Enterprise is donating three smaller supercomputer clusters based on its Apollo architecture and powered by Cavium’s Arm-based 32-core ThunderX2 processors to three universities in the United Kingdom: the University of Bristol, the University of Leicester, and the Edinburgh Parallel Computing Centre at the University of Edinburgh.

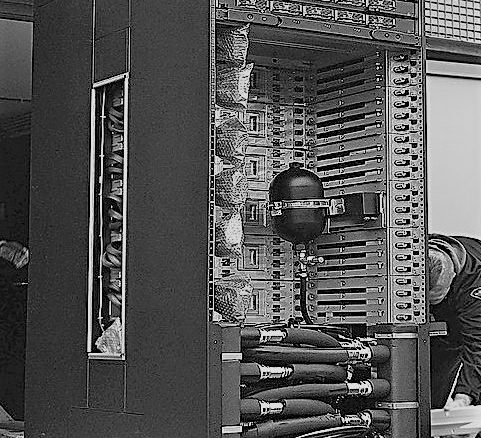

For years, the JuQueen supercomputer (see below), housed at Forschungszentrum Julich (FZJ) in Germany, was the EU’s fastest system. The supercomputer is an IBM BlueGene/Q system based on 16-core Power BQC processors, with a total of 458,752 cores linked by a custom torus interconnect and a performance of more than 5 petaflops. Once among the ten fastest systems in the world, JuQueen is now the 22nd fastest supercomputer on the November 2017 Top500 list. However, FZJ is moving ahead, just like its competition.

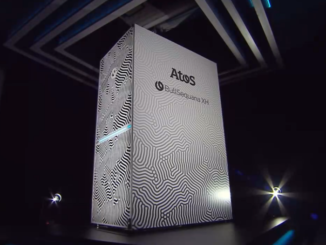

The third and final phase of JuQueen is being shut down, and the system will be replaced by the Julich Wizard for European Leadership Science (JUWELS), a system based on a modular design that is the result of three EU-funded projects: DEEP, DEEP-ER and DEEP-EST. The first phase of the installation was completed this week at Julich and represents a step forward for the EU in its exascale efforts. The 12 petaflops cluster is based on French IT company Atos’ Bull Sequana X1000 system (below) and is made up of 2,550 compute nodes, each powered by two 24-core Intel “Skylake” Xeon SP processors. Most of the nodes hold 96 GB of main memory, but about 9 percent of the compute nodes have twice that capacity, 192 GB, and another 2 percent of the nodes include Nvidia’s Volta GPU accelerators. The compute nodes are linked via a 100 Gb/sec EDR InfiniBand interconnect, and the JUWELS system will use the ParaStation cluster middleware from ParTec, a software company out of Munich.

The Atos architecture also uses hot water cooling, which Julich said will significantly reduce operating costs. “Unlike its predecessor, the new system uses hot water to cool the racks that can be much warmer than the normal ambient temperature,” explained Michael Stephan, a technical expert for the system at Julich. “This means that it can be cooled directly with outside air and without the need to spend additional energy for cooling.”

The use of the Atos Bull Sequana machines and ParTec cluster software illustrates the EU’s efforts to use as much technology from European companies as possible.

According to the EU, work on the modular supercomputer design, called the Modular Supercomputer Architecture, began in 2011 as an effort among 16 European partners in the DEEP project. The eventual goal is to make JUWELS a system with three modules of diverse technologies that can run as a single system. The modules will be linked by a high-speed network, share the same software, and be able to run a broad array of workloads. The first installation, launched this week, is a general-purpose cluster module that will run traditional HPC workloads such as simulations. It will be followed in 2020 by the Extreme Scale Booster module, which will tackle HPC applications that can leverage many-core processors, and then by the Data Analytics Module for high-performance data analytics tasks.

Julich had already tested the modular design with a prototype system called JURECA, which landed at the 29th spot on the Top500 list in November 2017 and was built by Dell and T-Platforms, a supercomputer company in Russia. JURECA is powered by Xeon E5-2680 v3 processors, with a total of 155,150 cores, and was to include Intel’s “Knights Hill” Xeon Phi processors, which the chip maker has now stopped developing. The machine delivers a performance of almost 3.8 petaflops.

Along with the effort around JUWELS, Julich is also expanding the Lenovo-based storage system for its supercomputers, growing it from 20.3 petabytes to 81.6 petabytes. The expanded storage system will be based on Lenovo’s DSS-G technology and will more than double the bandwidth, with access speeds of up to 500 GB/s.
