Like other makers of supercomputers, the Bull unit of French services and system maker Atos is embracing the new processing technologies coming out of Intel. But like a number of other high-end players that are seeking to offer differentiation of some sort, Bull is continuing to innovate, in this case with additional compute and interconnect options, including a homegrown interconnect.

Bull was showing off its shiny new Sequana X1000 machines at the SC15 supercomputing conference in Austin, Texas this week, and Jean-Pierre Panziera, chief technology officer for HPC systems at Bull, walked The Next Platform through the architecture of the systems, the first prototype of which has shipped as the foundation of the Tera 1000 supercomputer being installed at the Commissariat à l’énergie atomique et aux énergies alternatives (CEA). Sales of the commercial Sequana X1000 that is based on the Tera 1000 will be ramping through 2016.

Sequana is the Latin name for the Seine River in Paris, and is also the name of the goddess of abundance, and both are appropriate given that the Sequana system is highly scalable while at the same time being water cooled. The architecture of the machines is flexible by design to accommodate future “Broadwell” Xeon E5 v4 processors and “Knights Landing” Xeon Phi x200 processors (and coprocessors if customers desire them), as well as “Pascal” Tesla GPU accelerators from Nvidia. The Sequana machines support InfiniBand networking with technology that is OEMed from Mellanox Technologies, and will also make use of a homegrown Bull Exascale Interconnect (BXI) that has different properties that warrant customization. Interestingly, Bull has not adopted Intel’s new Omni-Path interconnect for the system, but there is no reason that this could not be added at some future date if there is enough customer demand for it.

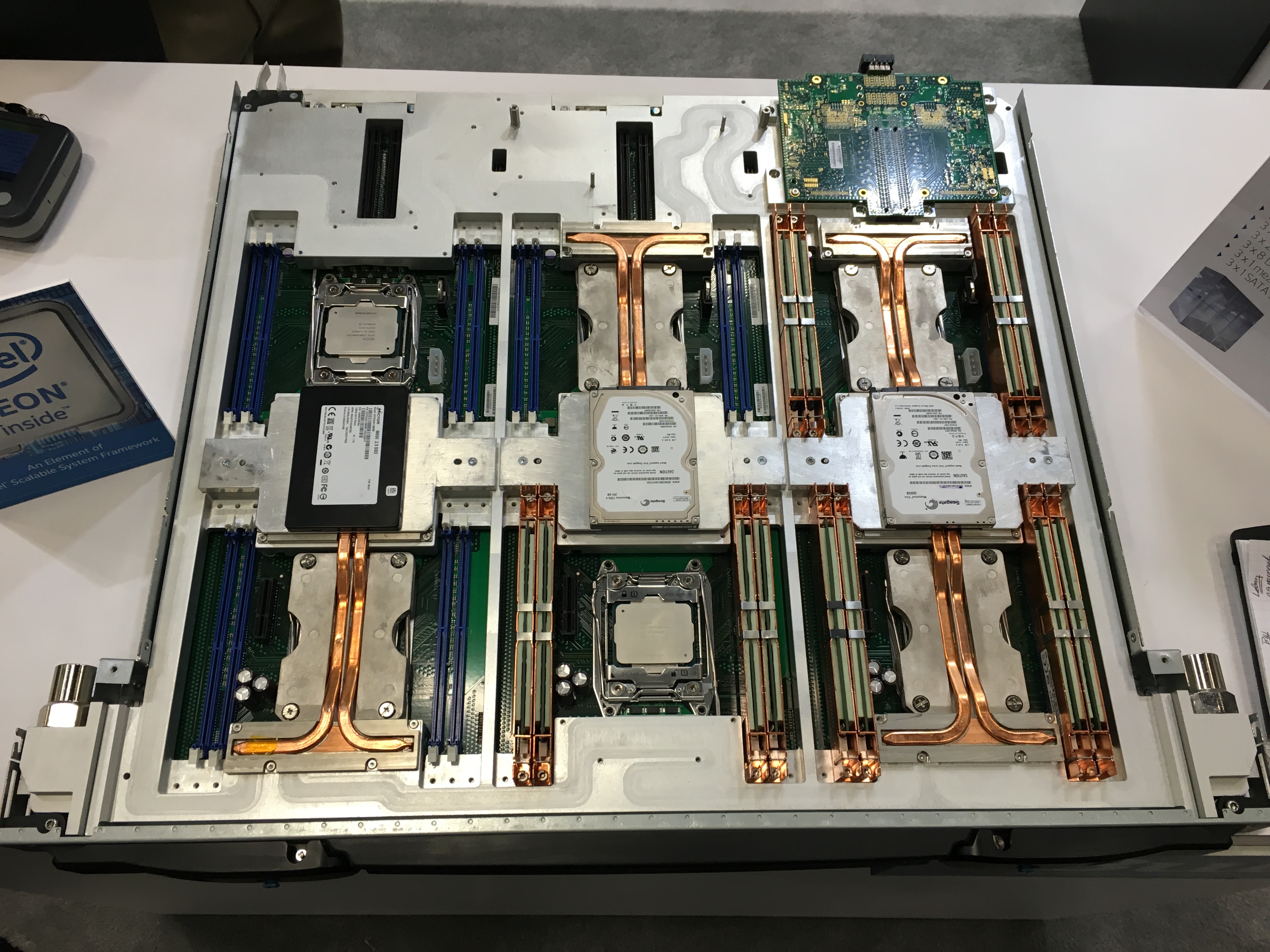

The Sequana system is based on the concept of a cell, a unit of compute, networking, power distribution, and cooling that is intended to be plunked down as a single thing in an HPC center. This cell is comprised of three cabinets: two for compute and one for switching, as shown below:

Because the Sequana system is water cooled, machinery can be packed into the cabinets from front to back. There is no need to worry about air flowing through the system, and in fact, there are no fans at all in the system, which should make a Sequana datacenter whisper quiet and considerably warmer than what a lot of people are used to with cold aisle and hot aisle containment. Each compute cabinet has 48 compute trays, each 1U high and packing three server nodes. There are 24 trays in the front of each cabinet and 24 trays in the back (although the picture above shows them all facing in the same direction from the front); the Sequana cell has two compute cabinets for a total of 96 compute trays, and at three dual-socket server nodes per tray, that works out to 288 nodes per cell. The switch modules are in the center of the Sequana cell and they provide the switches for linking the server nodes in the cell to each other and for linking multiple cells together to comprise a cluster.

The power distribution units in the Sequana system are at the top of the racks and support 208 volts in the United States and 240 volts in Europe, and Panziera says that Bull is not offering higher voltage power into the racks at this time. On some supercomputers and hyperscale systems, 380 volt is an option, which means less stepping down of power and therefore more energy efficiency overall in the datacenter, but it requires modifications to the infrastructure that are not always easy for customers to adopt in existing datacenters. Each compute rack can deliver up to 90 kilowatts of power (that is front and back together) and each switch rack can have up to 12 kilowatts of power, for a total of 192 kilowatts across 288 nodes. The Sequana X1000 system can also be equipped with what Bull calls Ultra Capacity Modules, which are capacitor banks that hold enough juice to keep the cell running for up to 300 milliseconds in the event of a power brownout.
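The node and power totals quoted for a cell follow directly from the per-cabinet figures. The short Python sketch below simply redoes that arithmetic; the constant and function names are illustrative, and the per-node figure is a rated ceiling derived from the quoted maximums (switching included), not a measured draw.

```python
# Cell layout figures as quoted in the article; names are illustrative.
COMPUTE_CABINETS_PER_CELL = 2
TRAYS_PER_CABINET = 48      # 24 in the front, 24 in the back
NODES_PER_TRAY = 3
COMPUTE_CABINET_KW = 90     # maximum per compute cabinet, front and back together
SWITCH_CABINET_KW = 12      # maximum for the switch cabinet


def cell_nodes():
    """Number of server nodes in one Sequana cell."""
    return COMPUTE_CABINETS_PER_CELL * TRAYS_PER_CABINET * NODES_PER_TRAY


def cell_power_kw():
    """Maximum rated power for one cell, compute plus switching."""
    return COMPUTE_CABINETS_PER_CELL * COMPUTE_CABINET_KW + SWITCH_CABINET_KW


def kw_per_node():
    """Rated power ceiling per node, with switching amortized across nodes."""
    return cell_power_kw() / cell_nodes()


print(cell_nodes(), "nodes,", cell_power_kw(), "kW,",
      round(kw_per_node(), 3), "kW per node")
```

That puts the rated budget at roughly two thirds of a kilowatt per dual-socket node.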

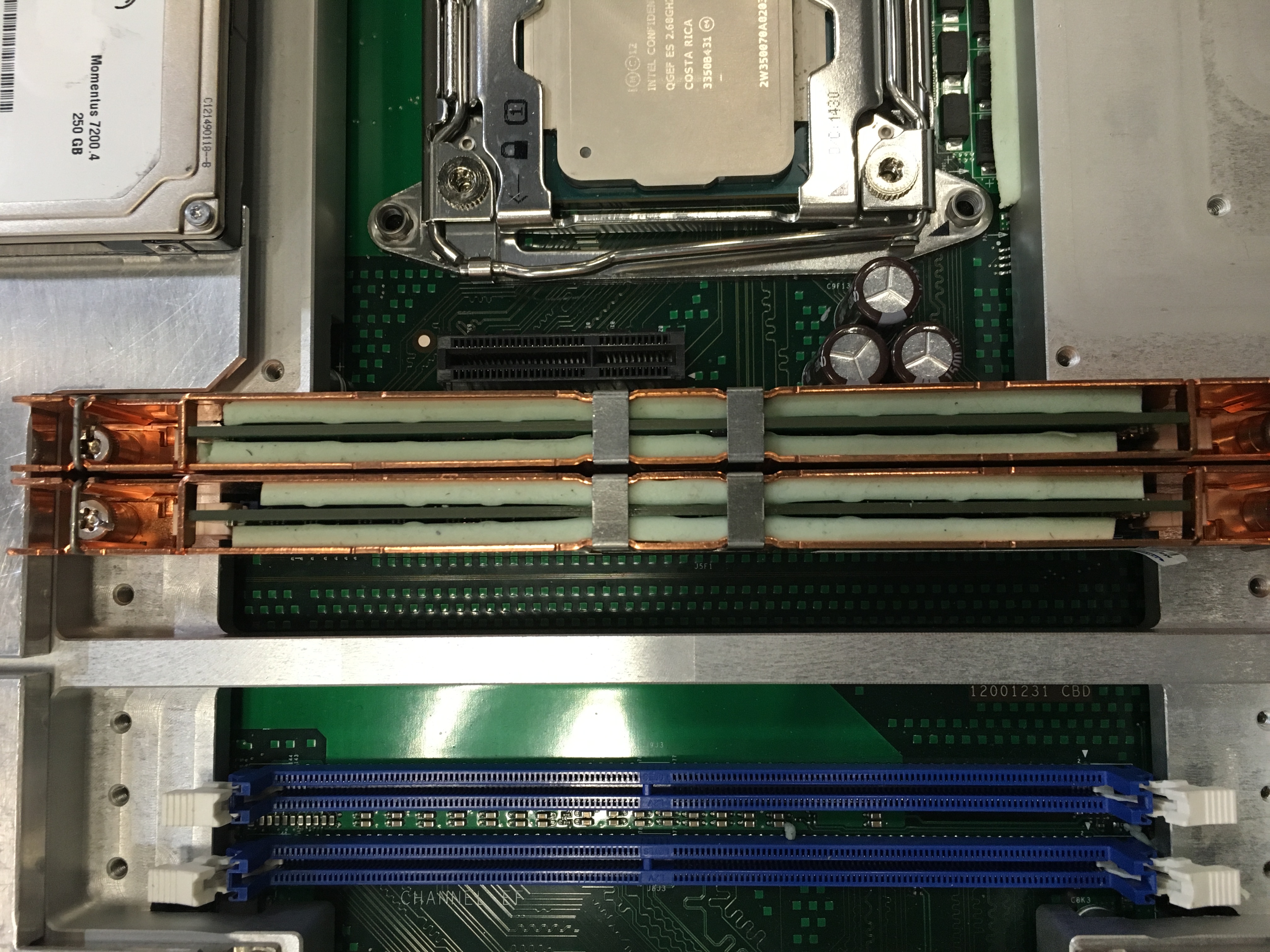

The two dozen Sequana compute trays sit underneath the power distribution units in the rack, and below that are the pumps and heat exchangers that draw heat off of the elements of the system and transfer it to the facility water cooling, eliminating the need for hot and cold aisle containment and air conditioning for the system. This direct cooling method uses water blocks on key elements of the system, such as the processors and main memory. Bull has come up with a thermal transfer foam that it wraps around standard DDR4 memory sticks that allows them to be directly cooled. This is much less expensive than making memory chips with water blocks for cooling. Here’s what that looks like on a Xeon E5 node:

Panziera tells The Next Platform that the heat exchanger can accept intake water at anywhere from 3 degrees to 40 degrees Celsius (which is anywhere from 37 degrees to 104 degrees Fahrenheit). So basically, you can drop it anywhere except perhaps the hottest desert. The input water temperature for the Sequana compute trays is around 45 degrees Celsius (113 degrees Fahrenheit) and comes out of the tray at around 55 degrees Celsius (131 degrees Fahrenheit). So even at the high end of the heat exchanger's intake range, the output water from the compute tray is considerably hotter than the warm water in the heat exchanger, and that is what the laws of thermodynamics demand for cooling to work.
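As a quick check on the temperatures quoted above, the following Python sketch converts them to Fahrenheit and computes how much hotter the tray outlet water is than the warmest facility water the heat exchanger accepts. The variable names are illustrative and the temperatures are the approximate figures from the text.

```python
def c_to_f(celsius):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Approximate figures quoted in the article.
facility_intake_c = (3, 40)       # range the heat exchanger accepts
tray_in_c, tray_out_c = 45, 55    # water entering and leaving a compute tray

for label, temp_c in [("facility min", facility_intake_c[0]),
                      ("facility max", facility_intake_c[1]),
                      ("tray inlet", tray_in_c),
                      ("tray outlet", tray_out_c)]:
    print(f"{label}: {temp_c} C = {c_to_f(temp_c):.1f} F")

# Heat only flows from hot to cold, so the tray loop must be hotter
# than the facility water even in the worst case.
worst_case_margin_c = tray_out_c - max(facility_intake_c)
print("worst-case margin:", worst_case_margin_c, "C")
```

Even with 40 degree facility water, the tray outlet is 15 degrees Celsius hotter, which is what lets the heat exchanger move heat out of the system.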

Panziera can’t talk about the pricing on the Sequana X1000 compute nodes and other components, but what he did say is that throughout the system, the cost of providing the water cooling infrastructure (including the water blocks on the components and the heat exchangers and pumps) is equivalent to the cost of adding components like fans throughout the system and powering them. It is a net wash as far as manufacturing and operations go. But for the customer, the efficiency gains result in less power consumed for compute, switching, and cooling and therefore less money spent for the lot. In the end, what matters is how efficient the system is at turning the electricity into utilized computational cycles. With cool doors and hot aisle containment, Panziera says that an HPC datacenter can get a power usage effectiveness (PUE) of somewhere between 1.35 and 1.5. (This is a ratio of the total power consumed divided by the total power used by the compute infrastructure.) With the Sequana design, Panziera says that Bull will be able to push that PUE down to about 1.02. This seems absurd, but this is not the first time we have heard numbers down around 1.05. Hyperscalers do it, but they do it with much less dense machinery, air cooling, and high voltage power distribution. They could move to water cooling, but they can always build a bigger datacenter and get the electric company to bring in more power. Government and academic research centers and large enterprises often cannot do that for their datacenters, so that is why systems like the Sequana X1000 from Bull and the Apollo 8000 from Hewlett Packard Enterprise resort to water cooling to cram more compute into a smaller space and also improve the PUE of the datacenter without having to rebuild it.
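To put those PUE figures in perspective, here is a minimal Python sketch of the facility overhead each one implies. It assumes, purely for illustration, an IT load equal to one fully loaded 192 kilowatt Sequana cell; the function name is hypothetical and real loads will differ.

```python
def overhead_kw(it_load_kw, pue):
    """Power spent on cooling and distribution, given PUE = total / IT load."""
    return it_load_kw * (pue - 1.0)


# Assumption for illustration: one cell drawing its full rated 192 kW.
IT_LOAD_KW = 192.0

for label, pue in [("air cooled, low end", 1.35),
                   ("air cooled, high end", 1.5),
                   ("Sequana target", 1.02)]:
    print(f"{label}: PUE {pue} -> {overhead_kw(IT_LOAD_KW, pue):.2f} kW overhead")
```

Under that assumption, the overhead drops from somewhere between 67 and 96 kilowatts per cell to a bit under 4 kilowatts.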

Bull will be delivering three different compute options and two different networking options in the Sequana line, and we think it will expand these options as other technologies mature. The compute nodes and Layer 1 and Layer 2 switching nodes are linked to each other using a backplane called Octopus, which means that the Sequana cell is for all intents and purposes a giant blade server (or similar to the BladeFrame architecture from Egenera if you remember them from the enterprise space).

The Sequana X1110 blade is based on a two-socket node using the future Broadwell Xeon E5 v4 processor that Intel is widely expected to launch in the February to March timeframe next year. Current Haswell Xeon E5 v3 processors are also supported in the system, and the initial configuration sold to CEA is using Haswell nodes rather than waiting for Broadwell nodes. Three of these two-socket cards fit onto the X1110 blade, and each node has two PCI-Express 3.0 x16 slots and mezzanine cards that plug into them to provide links into the fabric. Each node has eight DDR4 memory slots (four per socket), and with a 32 GB stick the practical upper memory limit is 256 GB per two-socket node, which is more than enough for the vast majority of HPC workloads.

Interestingly, Panziera also said that half of the cost of a compute tray is the CPUs, not the memory as many expect. HPC shops do tend to run light on the memory configurations, with 128 GB being normal and 256 GB on the rise, up from 32 GB and 64 GB a few years back. Many enterprise systems are set up with anywhere from 512 GB to 1 TB these days, thanks in large part to virtualization.

Here is what the Sequana X1110 server tray looks like:

The X1110 nodes have an optional 2.5-inch solid state flash drive that mounts onto the chassis, and this can be used both for local operating system storage and for saving checkpoint data when a long-running calculation pauses to take a snapshot of its state. By saving this checkpoint data to the flash drive, checkpointing can happen faster because it doesn’t flood the network with traffic, says Panziera. There is a PCI-Express switch in the compute trays that links the nodes to the storage, and each node can address any of the three drives in the enclosure.

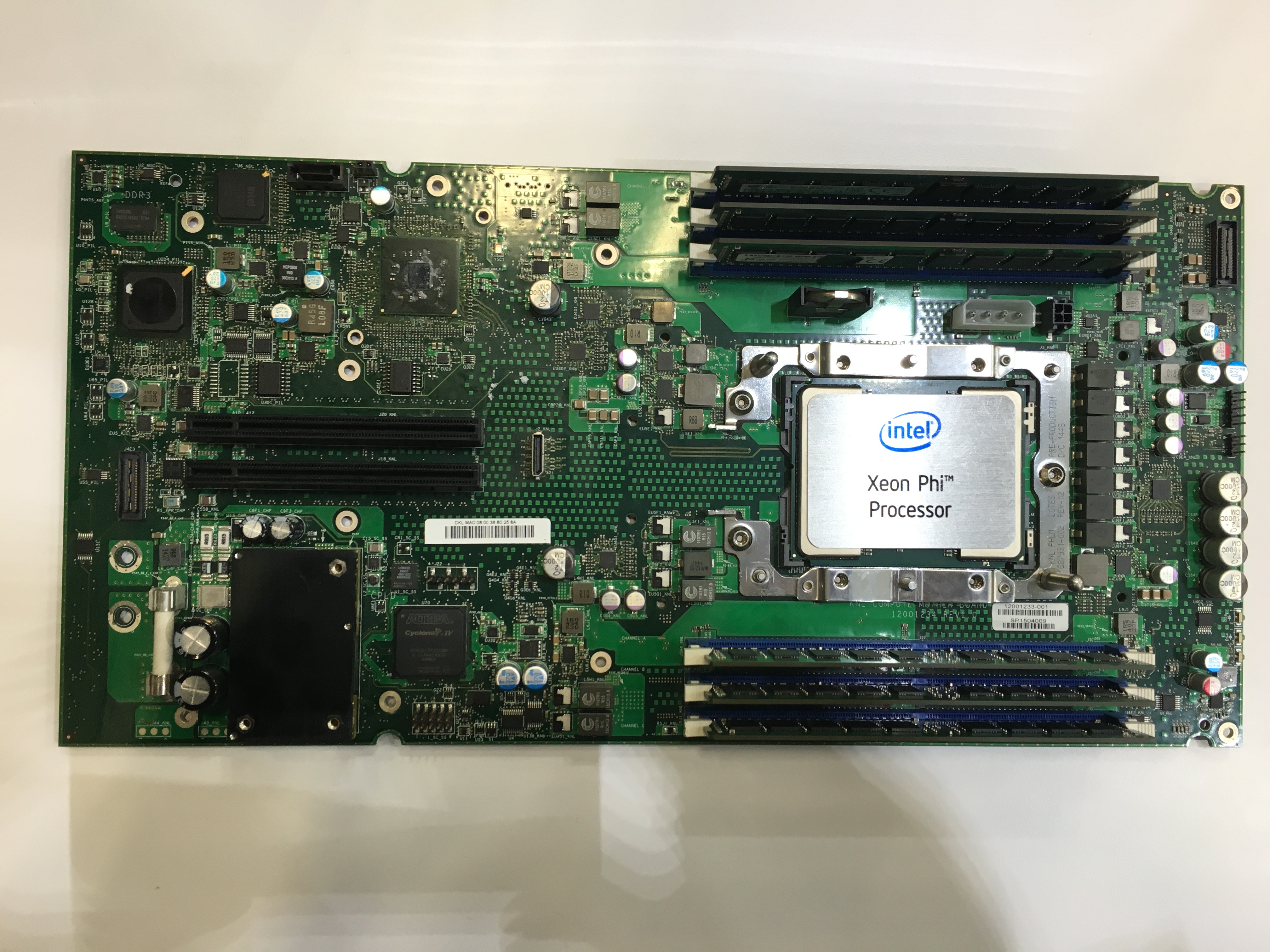

The X1110 tray above shows three Xeon nodes, but customers will have other options. For instance, Bull will ship next year a Sequana X1210 blade that has three bootable Knights Landing Xeon Phi processors, each of them linking to each other, to each node in a cell, and all nodes across cells in the cluster using either InfiniBand or BXI interconnects. Here is what the Bull Knights Landing node looks like:

This is the Knights Landing node that is shipping to CEA in its prototype form now. It has two PCI-Express 3.0 x16 slots for connectivity to the outside world and six DDR4 memory slots, for a maximum capacity of 384 GB assuming the use of 64 GB memory sticks, which is highly unlikely for most HPC shops. Using 32 GB sticks, the Knights Landing chip tops out at 192 GB, which is probably a more likely configuration. Three of these nodes will go into a Sequana X1210 blade.
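The memory ceilings quoted for the Xeon and Knights Landing nodes are just slot count times stick size. The Python sketch below tabulates them, along with the per-tray total at three nodes per tray; the function names are illustrative.

```python
NODES_PER_TRAY = 3


def node_memory_gb(dimm_slots, dimm_gb):
    """Maximum DDR4 capacity of one node with every slot populated."""
    return dimm_slots * dimm_gb


def tray_memory_gb(dimm_slots, dimm_gb):
    """Maximum DDR4 capacity of one three-node tray."""
    return NODES_PER_TRAY * node_memory_gb(dimm_slots, dimm_gb)


configs = {
    "X1110 Xeon node, 8 x 32 GB": (8, 32),
    "X1210 Knights Landing node, 6 x 64 GB": (6, 64),
    "X1210 Knights Landing node, 6 x 32 GB": (6, 32),
}

for label, (slots, size_gb) in configs.items():
    print(f"{label}: {node_memory_gb(slots, size_gb)} GB per node, "
          f"{tray_memory_gb(slots, size_gb)} GB per tray")
```

These figures count DDR4 slots only; any on-package memory on the Knights Landing chip is not included.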

Bull is not allowing for Xeon and Xeon Phi processors to mix within a server tray, but obviously customers can mix Xeon and Xeon Phi processors within a Sequana cell and across the system.

So how much number-crunching oomph does a Sequana cell pack? Using Knights Landing processors, which will be rated at around 3 teraflops in a top-end configuration, that works out to 864 teraflops per cell (it looks to be about three standard racks wide and almost as deep as two racks). Using top-end 22 core Broadwell Xeon E5 chips, assuming they run at about 2.3 GHz as the current Haswell Xeon E5s do with the 18-core part, will yield about 810 gigaflops per socket and about 1.62 teraflops per node. So at 288 nodes, you have 467 teraflops or so. With half as many processors, Xeon Phi yields twice as many flops. But with the “Skylake” Xeon E5 v5 chips due in 2017, the flops will more or less double and the Xeon will catch up with Knights Landing. About 2018 or so in the Aurora supercomputer, Knights Hill will come out with about 3 teraflops per node (and maybe a lot fewer cores per node), we think, but the nodes will have a lot more memory and memory bandwidth and we think some clever uses of DRAM, MCDRAM, and 3D XPoint memory that allows those processors to run at a lot higher utilization.
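The peak numbers above can be reproduced with a few lines of Python. This is a sketch under one stated assumption: a Xeon core retires 16 double precision flops per clock (two AVX2 fused multiply-add units), which is the value that matches the roughly 810 gigaflops per socket quoted. The function names are illustrative.

```python
NODES_PER_CELL = 288


def xeon_socket_gflops(cores, ghz, flops_per_cycle=16):
    """Peak double precision gigaflops for one socket.

    flops_per_cycle=16 is an assumption (two AVX2 FMA units per core).
    """
    return cores * ghz * flops_per_cycle


def cell_tflops(node_tflops, nodes=NODES_PER_CELL):
    """Peak teraflops for a cell filled with identical nodes."""
    return node_tflops * nodes


broadwell_socket_gf = xeon_socket_gflops(cores=22, ghz=2.3)
broadwell_node_tf = 2 * broadwell_socket_gf / 1000   # two sockets per node
xeon_cell_tf = cell_tflops(broadwell_node_tf)
knl_cell_tf = cell_tflops(3.0)                       # one 3 teraflops chip per node

print(f"Broadwell socket: {broadwell_socket_gf:.1f} gigaflops")
print(f"Broadwell node:   {broadwell_node_tf:.2f} teraflops")
print(f"Xeon cell:        {xeon_cell_tf:.0f} teraflops")
print(f"KNL cell:         {knl_cell_tf:.0f} teraflops")
print(f"KNL / Xeon:       {knl_cell_tf / xeon_cell_tf:.2f}x")
```

Without intermediate rounding the Xeon cell lands at about 466 teraflops; the 467 figure comes from rounding the node to 1.62 teraflops first. The Knights Landing advantage works out to a little under 1.9X, so "twice as many flops" is a round number.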

Customers will eventually be able to mix and match Xeon CPUs and Nvidia Tesla GPU coprocessors inside of a Sequana system. The plan, says Panziera, is to have one two-socket Xeon E5 node and two nodes with a total of four “Pascal” GP100 Tesla GPUs. The PCI-Express switch would link the Xeon E5s to the GPUs while Nvidia’s NVLink interconnect would lash the four Pascal GPUs together so they could share data using 20 GB/sec links. (PCI-Express 3.0 x16 links deliver 16 GB/sec peak bandwidth.) With NVLink 1 coming in 2016, the GPUs can share data, but with NVLink 2 and the “Volta” GV100 GPUs coming in 2017, the GPUs will have a coherent memory space across the high bandwidth memory on those GPUs.

InfiniBand And BXI, But No Omni-Path

The Sequana design does not currently make use of the Omni-Path interconnect, whether it is delivered through a PCI-Express adapter card or through the integrated dual-port network interface that Intel will be offering on the Knights Landing-F variant of the processor. Panziera says that one reason is that many of the functions that are done by Omni-Path are done on the compute node processors rather than offloaded to the network interfaces or switches, and that this is why Bull is not doing this right now. Eating up compute cycles is not such a big deal for the Xeon E5 processors, which run at very high clock speeds, but it becomes an issue, he explains, on the many-cored and lower-clocked Xeon Phi processors. (We think the 72 core Xeon Phi will run at somewhere between 1.1 GHz and 1.3 GHz, which is about half to a third slower than stock Xeon processors.) That extra compute time for networking functions running on the server will add latencies, Panziera says.

Having said all that, there would obviously be a way to add Omni-Path to the Sequana nodes at some point using adapter cards, hooking them in the Octopus backplane. It is less clear how the integrated Omni-Path would be extended to the backplane, but it should be possible. Bull could certainly license the Omni-Path ASICs for both the switch and adapters to make its own mezzanine cards and switches, as many companies are doing. This is all just a matter of engineering.

For the moment, Bull is coming out with 100 Gb/sec EDR InfiniBand for the Sequana X1000 machines, and to be precise, Bull is building its own EDR InfiniBand switch using the Switch-IB 2 ASIC from Mellanox and Mellanox is making a special version of its ConnectX-4 adapter card to be used as the mezzanine on the X1110 and X1210 blades. The InfiniBand EDR setup in the Sequana cell has a dozen 36-port Layer 1 switches for linking the 288 nodes to each other inside of the cell and another dozen 36-port Layer 2 switches for linking the cell out to other cells in the system.
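The article gives the switch counts but not the port split, so the following Python sketch is a back-of-the-envelope estimate rather than Bull's published topology. It assumes one fabric link per node, nodes spread evenly across the Layer 1 switches, and every remaining Layer 1 port used as an uplink; all three are assumptions, and the names are illustrative.

```python
NODES_PER_CELL = 288
L1_SWITCHES = 12
PORTS_PER_SWITCH = 36

# Assumption: one link per node, spread evenly over the Layer 1 switches.
down_ports_per_l1 = NODES_PER_CELL // L1_SWITCHES

# Assumption: every port not facing a node is an uplink to Layer 2.
up_ports_per_l1 = PORTS_PER_SWITCH - down_ports_per_l1

oversubscription = down_ports_per_l1 / up_ports_per_l1
total_uplinks = L1_SWITCHES * up_ports_per_l1

print(f"{down_ports_per_l1} node ports and {up_ports_per_l1} uplinks per Layer 1 switch")
print(f"oversubscription {oversubscription:.0f}:1, {total_uplinks} uplinks per cell")
```

Under those assumptions each Layer 1 switch would serve 24 nodes with 12 uplinks. If the nodes use both of their PCI-Express slots for fabric links, or if Bull wires the ports differently, the split changes.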

The BXI network that Bull has created for the Tera 1000 system for CEA is a bit of a different beast, but Panziera says that it offers bandwidth that is comparable to 100 Gb/sec InfiniBand or Ethernet. The BXI interconnect implements the Portals protocol that has been under development at Sandia National Laboratories for the past 25 years. Various Sandia machines have used different implementations of Portals, notably the Paragon parallel RISC system built by Intel in 1991, the ASCI Red parallel X86 system built by Intel in 1994, and the ASCI “Red Storm” XT system built by Cray. The important thing, says Panziera, is that BXI offloads all communication overhead for the interconnect to the NICs and switches, leaving all of the CPU capacity available for doing compute rather than managing network overhead. The BXI interconnect switches and adapters have the same form factors as the InfiniBand variants, but it looks like BXI scales a lot further, supporting up to 64,000 nodes and up to 16 million threads. The BXI interconnect is prototyping at CEA now and will be shown off at the ISC16 conference in Frankfurt, Germany next June and then put into production shortly after that.

By the way, customers will not have to buy a Sequana just to use the BXI interconnect. Bull will be offering PCI-Express 3.0 x16 adapter cards and fixed port switches so anyone can buy them.

TERA SEQUANA: THE FUTURE EXAFLOP SUPERCOMPUTER OF THE CEA

To date, the ATOS & BULL consortium is building 2 supercomputers:

– The TERA 1000, which is under construction on CEA premises to take over from the TERA 100 for the simulation of French nuclear tests. With its 25 petaflops, the TERA 1000 will be the largest petaflop-class supercomputer in France. It will replace the TERA 100, which apparently turned out not to be powerful enough for nuclear test simulation. 25 petaflops = 25 million billion calculations per second, which is no small thing. As a reminder, the TERA 100 has a performance of 1 petaflops, or 1 million billion calculations per second. The TERA 1000 will be 25 times larger and more powerful than the TERA 100.

– The TERA SEQUANA will be the exaflop-class supercomputer of France, which intends to stay in the race for exaflop supercomputers, 7 of which are under construction worldwide (2 for the USA, 1 for Russia, 1 for China (TIANHE 3), 1 for France (TERA SEQUANA), 1 for Japan, and 1 for the Netherlands, which plans to couple an exaflop supercomputer to a radio telescope, making it 1,000 times faster and 1,000 times more precise). SEQUANA will also be installed on CEA premises for simulations on behalf of defense, but also for science, which will take a leap forward thanks to the TERA SEQUANA, which will be the pride of the French. 1 exaflop = 1 billion billion calculations per second.

– By 2050, 3 zettaflop-class supercomputers will be built worldwide. The USA and CHINA will each build their own zettaflop supercomputer, and the other countries of the world (France, Europe, Russia, Japan, Argentina, Brazil, and Iran) will pool their funds to build the SZI (Supercalculateur Zettaflopique International, or International Zettaflop Supercomputer); it remains to be seen where this hypercomputer will be built (France, Russia, Japan, Brazil, or Argentina). 1 zettaflop = 1,000 billion billion calculations per second, and the price of the SZI will be on the order of 2,000 to 3,000 billion euros. If ATOS & BULL build the SZI, its name will be the TERA ZETTA. To be continued in a future ZETTAFLOP SUPERCOMPUTER column. To date I have written many blocks of text on zettaflop supercomputers; to read them, type (ZETTAFLOPIQUE) into the Google search engine and you will reach my 70 publications, or else visit the Facebook wall of david mocchetti, where I keep a science journal along the lines of Sciences & Avenir, the difference being that David's Facebook journal is free.

Alain Mocchetti

Engineer in Mechanical Construction and Automation

University graduate, Bac+5 (1985)

UFR Sciences de Metz

alainmocchetti@isfr.fr

alainmocchetti@gmail.com

@AlainMocchetti