Emerging “Universal” FPGA, GPU Platform for Deep Learning

In the last couple of years, we have written and heard about the usefulness of GPUs for deep learning training as well as, to a lesser extent, custom ASICs and FPGAs. …

With the International Supercomputing 2016 conference fast approaching, the HPC community is champing at the bit to share insights on the latest technologies and techniques to make simulation and modeling applications scale further and run faster. …

If you are trying to figure out what impact the new “Pascal” family of GPUs is going to have on the business at Nvidia, just take a gander at the recent financial results for the datacenter division of the company. …

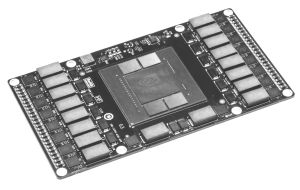

Nvidia made a lot of big bets to bring its “Pascal” GP100 GPU to market and its first implementation of the GPU is aimed at its Tesla P100 accelerator for radically improving the performance of massively parallel workloads like scientific simulations and machine learning algorithms. …

Deep learning could not have developed at the rapid pace it has over the last few years without companion work that has happened on the hardware side in high performance computing. …

As a former research scientist at Google, Ian Goodfellow has had a direct hand in some of the more complex, promising frameworks set to power the future of deep learning in coming years. …

It is hard to find a more hyperbolic keynote title than “A New Computing Model,” but given the recent explosion in capabilities in both hardware and algorithms that have pushed deep learning to the fore, the keynote by Nvidia’s CEO at this morning’s GPU Technology Conference kickoff appears to be right on target. …

With machine learning taking off among hyperscalers and others who have massive amounts of data to chew on to better serve their customers, and with traditional simulation and modeling applications scaling better across multiple GPUs, all server makers are in an arms race to see how many GPUs they can cram into their servers to make bigger chunks of compute available to applications. …

While GPUs are commonly used to accelerate the massively parallel compute jobs behind simulations, media rendering, and machine learning algorithms, the next wave of growth could come from databases, thereby upsetting the balance of power in yet another part of the datacenter infrastructure. …

When it comes to leveraging existing Hadoop infrastructure to extend what is possible with large volumes of data and various applications, Yahoo is in a unique position: it has the data and, just as important, it has a long history with Hadoop, MapReduce, and other key tools in the open source big data stack, with seasoned experts close at hand. …

All Content Copyright The Next Platform