When it comes to leveraging existing Hadoop infrastructure to extend what is possible with large volumes of data and various applications, Yahoo is in a unique position: it has the data and, just as important, a long history with Hadoop, MapReduce, and other key tools in the open source big data stack, all close at hand and staffed by seasoned experts.

With roughly 36,000 Hadoop nodes and 600 petabytes of storage across 19 clusters running MapReduce, Tez, Spark, and more, it stands to reason that the teams behind fresh AI and machine learning initiatives would have little interest in building out new clusters for training and execution of deep learning and more advanced machine learning frameworks.

All the work on Hadoop at Yahoo has borne some interesting fruit. Today, the company lifted the lid on something that manages to touch every major trend we’ve been following in a single string of news (Spark, Hadoop, deep learning, machine learning, RDMA, Caffe, GPU computing) and, beyond that, offers a rather unique approach to bringing all of those technologies onto a single cluster.

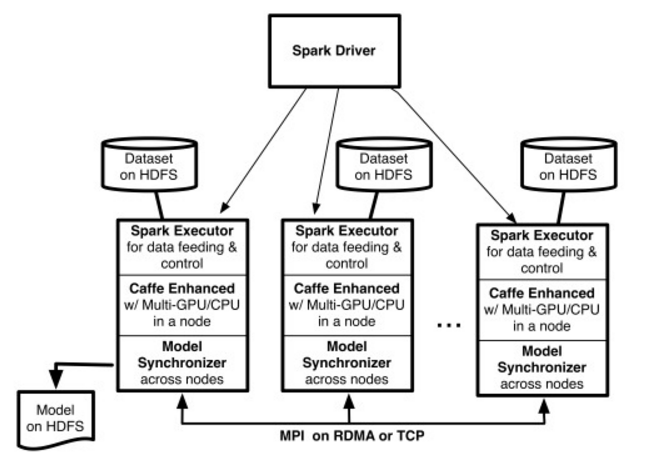

As it turns out, Spark has served as a prime springboard for Yahoo’s efforts to branch out its deep learning capabilities. The architecture and set of open source tools the company announced blend the best of Spark and the deep learning framework Caffe. The effort, called CaffeOnSpark, offers open source distributed deep learning for Hadoop and Spark clusters. It removes the need for separate clusters for different parts of the deep learning workflow while allowing other applications to run alongside such efforts.

What is also noteworthy, at least in terms of the infrastructure angle, is how they’re doing it. Instead of using separate training and execution clusters for deep learning, teams at Yahoo, led by VP of Architecture Andy Feng, have meshed the best of all worlds (GPU computing, Caffe, Hadoop, Spark, and networking approaches including RDMA) to create what looks like a Frankenstein of high performance computing and standard “big data” architecture.

Of course, under the hood, it’s anything but Frankenstein, as Feng tells The Next Platform. With the Yarn scheduler at the helm, Infiniband connections, and a custom-developed approach to MPI over RDMA or TCP, teams are able to extract performance throughout the entire deep learning and machine learning workflow, as well as get speedups on their existing Spark jobs. Further, Yahoo went full-bore with GPUs, choosing the latest Nvidia Tesla K80 coprocessors (those used on the highest-end supercomputers) over the Titan X, which is what others building deep learning training clusters often choose (although Nvidia is keen on making the deep learning community aware of its specialized accelerators, the M40 and M4).

The Tesla K80s (four per node) and some purpose-built GPU servers sit in the same core Hadoop cluster, with memory shared via a pool across the Infiniband connection. The Yarn scheduler has a feature called node labels (which is the subject of a great deal of current development) that lets it target workloads or applications and understand where they need to go. Accordingly, if a user has a deep learning workload, it can be sent to the GPU nodes for processing; if it’s a CPU-only one, it will be shuttled off to the CPU nodes. CaffeOnSpark itself can be launched as one would launch any job on a regular cluster, which is why it is a fit for the architecture above as well as for running on the Amazon cloud, for example, with GPU instances.
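The label-driven placement described above can be illustrated with a toy model. This is only a sketch of the idea, not Yarn's API or Yahoo's configuration: the `Node`, `Job`, and `place` names and the `"gpu"` label string are all hypothetical, and a real scheduler also weighs queues, capacity, and data locality.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    labels: frozenset = frozenset()  # hypothetical labels, e.g. {"gpu"}
    busy: bool = False


@dataclass
class Job:
    name: str
    required_label: Optional[str] = None  # None means a CPU-only job


def place(job: Job, nodes: List[Node]) -> Optional[Node]:
    """Return the first free node that matches the job's label, or None."""
    for node in nodes:
        if node.busy:
            continue
        if job.required_label is None:
            # Assumption: CPU-only jobs are kept off the scarce GPU nodes.
            if "gpu" in node.labels:
                continue
        elif job.required_label not in node.labels:
            continue
        node.busy = True
        return node
    return None


nodes = [Node("cpu-1"), Node("gpu-1", frozenset({"gpu"}))]
training = place(Job("train-model", required_label="gpu"), nodes)
etl = place(Job("nightly-etl"), nodes)
```

In this toy setup the training job lands on `gpu-1` and the CPU-only job on `cpu-1`; a second GPU job would get `None` until the GPU node frees up, which is roughly where a queue would come in.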

“Open sourcing a framework like this to let users use Caffe on top of Hadoop and Spark is important because that’s where most companies’ data is stored today. The idea was to allow data processing on the same processing, as well as make use of deep learning on that same cluster without modeling results or moving data back and forth. From our view, this caters to a gap—the thing that was missing in Hadoop and Spark,” says Sumeet Singh, Senior Director of Product Management for the cloud and big data teams at Yahoo.

As one might imagine, not all of the nodes are outfitted with the high-end Tesla GPU cards. “We were trying to balance the rack density in terms of how much compute we should switch through the top of rack switch. Traditional racks we have can support 40 servers; we took some CPU servers out and put GPU servers in them and did that across multiple racks and connected those with Infiniband in addition to the top of rack switch for the Hadoop servers, which are connected to 10GbE,” Singh says. The outcome is a pool of compute resources made up of either traditional Hadoop CPU nodes or GPU nodes. Because the GPUs are expensive, they are reserved for deep learning workloads; dedicated queues have been set up for them, and Yarn manages those queues.

The part of this that’s difficult to get one’s head around is that the training and inference portions of a deep learning workload are happening on the same cluster. At every other company we’ve talked to that is doing this, those portions run on separate clusters (one with GPUs, another usually straight CPU). As Singh and Feng describe, Yarn is smart enough to understand the workflow’s need to take advantage of the GPUs for training and can then, without having to move data off the cluster, route the results over to the CPU-only machine learning frameworks for classification and other analysis. “That’s the beauty of this,” says Feng, “the data never leaves the cluster.”
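A toy model makes the "data never leaves the cluster" point concrete. Everything in this sketch is hypothetical: the `SharedCluster` class, the storage paths, and the nearest-mean "model" are stand-ins for illustration, not CaffeOnSpark or Hadoop APIs. It only shows that two stages assigned to different resource pools can read and write the same storage.

```python
from dataclasses import dataclass, field


@dataclass
class SharedCluster:
    storage: dict = field(default_factory=dict)  # stand-in for cluster storage
    log: list = field(default_factory=list)      # (stage, pool) in run order

    def run(self, stage, pool, inputs, output, fn):
        """Run fn on inputs read from shared storage; write result back."""
        args = [self.storage[path] for path in inputs]
        self.storage[output] = fn(*args)
        self.log.append((stage, pool))


def train(examples):
    # Toy "training": the mean feature value per label.
    sums = {}
    for value, label in examples:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + value, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}


def classify(model, values):
    # Toy "inference": pick the label whose mean is nearest to each value.
    return [min(model, key=lambda lbl: abs(model[lbl] - v)) for v in values]


cluster = SharedCluster()
cluster.storage["/data/train"] = [(0.1, "cat"), (0.3, "cat"),
                                  (0.9, "dog"), (1.1, "dog")]
cluster.storage["/data/new"] = [0.2, 1.0]

# Training is assigned to the GPU pool, classification to the CPU pool;
# both stages read from and write to the same storage.
cluster.run("train", "gpu", ["/data/train"], "/models/m1", train)
cluster.run("classify", "cpu", ["/models/m1", "/data/new"],
            "/out/labels", classify)
```

The model written by the GPU-pool stage is read in place by the CPU-pool stage, with no export or import step between two separate clusters.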

As a side note, one of our goals here at The Next Platform is to track how the formerly disparate areas of hyperscale, high performance computing, and large-scale enterprises are sharing, trickling down, and riffing on technologies between one another. What this represents, beyond the Yahoo context, is a blend of all of these in tandem. GPU computing and MPI on RDMA/Infiniband come directly from HPC; Hadoop and Spark were designed to power real-world enterprise and hyperscale operations. Together, with some optimizations, Yahoo has built a solid example of what can happen when emerging areas like deep learning are looped into those merging trends.

Nice article. While Yahoo is headed in the direction of a converged infrastructure, I am surprised Yahoo is using Infiniband and not RDMA over Converged Ethernet (RoCE), which is quite popular among cloud vendors such as Microsoft.