HPC Pioneers Pave The Way For A Flood Of Arm Supercomputers

Over the past few years, the Arm architecture has made steady gains, particularly among the hyperscalers and cloud builders. …

As Moore’s law continues to slow, delivering more powerful HPC and AI clusters means building larger, more power-hungry facilities. …

The most exciting thing about the Top500 rankings of supercomputers that come out each June and November is not who is on the top of the list. …

When you host the workhorse supercomputers of the National Science Foundation, you strive to provide the best possible solutions for your scientists. …

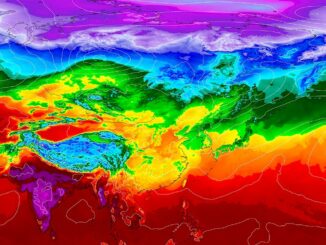

At SC23 in November, the Association for Computing Machinery (ACM) will give out its first-ever ACM Gordon Bell Prize for Climate Modelling at a ceremony in Denver. …

The Association for Computing Machinery has just put out the finalists for the Gordon Bell Prize award that will be given out at the SC23 supercomputing conference in Denver, and as you might expect, some of the biggest iron assembled in the world is driving the advanced applications that have their eyes on the prize. …

We are still plowing through the many, many presentations from the Hot Interconnects, Hot Chips, Google Cloud Next, and Meta Networking @ Scale conferences that all happened recently and at essentially the same time. …

If Nvidia and AMD are licking their lips thinking about all of the GPUs they can sell to the hyperscalers and cloud builders to support their huge aspirations in generative AI – particularly when it comes to the OpenAI GPT large language model that is the centerpiece of all of the company’s future software and services – they had better think again. …

The question is no longer whether or not the “El Capitan” supercomputer that has been in the process of being installed at Lawrence Livermore National Laboratory for the past week – with photographic evidence to prove it – will be the most powerful system in the world. …

If you want to get the attention of server makers and compute engine providers and especially if you are going to be building GPU-laden clusters with shiny new gear to drive AI training and possibly AI inference for large language models and recommendation engines, the first thing you need is $1 billion. …

All Content Copyright The Next Platform