At SC23 in November, the Association for Computing Machinery (ACM) will give out its first-ever ACM Gordon Bell Prize for Climate Modelling at a ceremony in Denver.

The new award, which will run for the next decade, aims to recognize climate scientists and software engineers for their work in tackling climate change, according to the ACM. But for us and others gathered at SC, it’s much nerdier than that. And guess what? We will not need to use the words “AI” or “machine learning” once. You’re welcome.

The award also focuses on innovative use of parallel computing to improve climate modeling and our understanding of Earth’s climate. Finalists for the award are chosen based on their influence in climate modeling and societal impact, with an emphasis on the use of high-performance computing.

This year’s top three contenders are using the most powerful systems on the planet: Frontier at ORNL, Fugaku in Japan, and the Sunway machine in China. It’s really a showcase of just what exascale means on the ground.

Here are some details about the three finalists, the systems they’re scaling, and more importantly, how they’re wrangling software at exascale:

The Simple Cloud-Resolving E3SM Atmosphere Model Running on the Frontier Exascale System

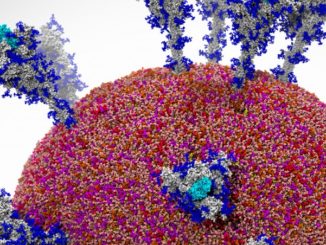

The Energy Exascale Earth System Model (E3SM) project is working on a new atmosphere model called SCREAM (Simple Cloud-Resolving E3SM Atmosphere Model) that can run on GPUs. This new model is special because it can show details of the Earth’s atmosphere at a very small scale, with grid cells as small as 3 km across. This is much smaller than older models, which have grid cells that are 25 to 100 km across. The smaller grid allows SCREAM to show extreme weather events and details about clouds, mountains, and coasts more accurately, helping scientists better understand climate change.
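To get a feel for what the jump from 25 to 100 km cells down to 3 km cells means computationally, here is a back-of-the-envelope sketch in Python. It assumes uniform square cells tiling a sphere with a mean radius of 6,371 km, which is not how SCREAM's actual grid is laid out; the function name `approx_column_count` is ours, for illustration only.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def approx_column_count(grid_spacing_km: float) -> int:
    """Rough number of horizontal grid columns needed to cover the globe.

    Assumes uniform square cells, which real climate grids only approximate.
    """
    surface_area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area_km2 / grid_spacing_km ** 2)


for spacing in (100.0, 25.0, 3.0):
    print(f"{spacing:>5.0f} km grid: ~{approx_column_count(spacing):,} columns")
```

Under these assumptions a 3 km grid needs on the order of 57 million columns, roughly 70 times more than a 25 km grid (25²/3² ≈ 69), and that is before counting vertical levels or the shorter time step a finer grid usually requires.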

The SCREAM model is designed to take advantage of new developments in high-performance computing, particularly GPUs, which can do a lot of calculations at the same time. The model went through its first test by running a 40-day simulation of winter conditions, and it did well. Looking ahead, the team is planning to complete a C++ version of SCREAM by 2022 and is also working on a smaller version that focuses on just a part of the Earth. This smaller model will be easier and cheaper to run, making it good for testing new ideas. They’re also adding features to help the model better understand how particles in the air interact with clouds.

Establishing a Modeling System in 3-km Horizontal Resolution for Global Atmospheric Circulation Triggered by Submarine Volcanic Eruptions with 400 Billion Smoothed Particle Hydrodynamics

Note: This project is detailed more extensively here.

In the age of global warming and rapid city growth, getting accurate weather and air pollution forecasts is more important than ever. Traditional weather models sometimes get it wrong, especially when trying to predict extreme weather or pollution levels. The iAMAS system aims to solve this by using a new, more detailed approach and running on a powerful Sunway supercomputer. It looks at the atmosphere in small chunks just a few kilometers wide, and it even includes the impact of particles in the air, known as aerosols. This makes the model more accurate and allows for a 5-day global weather forecast with fine details, thanks to the use of 39 million processor cores and advanced computing techniques that speed up the simulation and reduce data handling time.

This iAMAS model uses several cutting-edge computing strategies to run more efficiently. It employs fine-grained optimization and manual adjustments to the code to speed up calculations by three to thirteen times. The model successfully performed a detailed global weather forecast, the fastest ever done with this level of detail and including aerosols. Early results are promising, showing that higher resolution and aerosol data can significantly improve forecasts. Still, more tests are needed to fully understand the model’s strengths and weaknesses, especially regarding its high-resolution data and how aerosols impact the weather. These results are especially important for areas where aerosols, both natural and human-made, have a big impact on weather, like in Asia.

Big Data Assimilation: Real-time 30-second-refresh Heavy Rain Forecast Using Fugaku During Tokyo Olympics and Paralympics

Japan’s Big Data Assimilation (BDA) project, which started in 2013 and concluded in 2019, created an advanced weather prediction system that updates every 30 seconds and has a resolution of 100 meters. The system uses phased array weather radar (PAWR) to gather detailed data on cloud formation and wind speed. The project initially used Japan’s K supercomputer to crunch the numbers in less than 30 seconds once all the necessary data was ready. A follow-up project, running from April 2019 to March 2022, further developed the system for real-time operation. During two periods in 2020 and 2021, the second of which covered the Tokyo Olympic and Paralympic games, the updated system used the Fugaku supercomputer to improve its predictions.

The new system demonstrated its capabilities in real-time during the Tokyo Olympic and Paralympic games in 2021. With Fugaku’s enormous computing power, the project team was able to increase the “ensemble size,” which makes the predictions more reliable, from 50 to 1,000 using a technique known as the local ensemble transform Kalman filter (LETKF). This marks a significant advancement in weather prediction technology, offering more precise and timely forecasts that can be crucial for event planning and disaster preparedness. Future work will likely focus on leveraging the insights gained from this 8.5-year project to improve weather prediction even further.
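For readers curious about what an ensemble transform Kalman filter actually does with those ensemble members, here is a minimal sketch in Python with NumPy. It is a global ETKF with a linear observation operator and no localization, so it is a simplification of LETKF and not the BDA team's code. The function name `etkf_analysis` and its arguments are our own illustrative choices.

```python
import numpy as np


def etkf_analysis(ensemble, obs, obs_operator, obs_cov):
    """One ensemble transform Kalman filter analysis step (no localization).

    ensemble:     (n_state, k) background ensemble, one member per column
    obs:          (m,) observation vector
    obs_operator: (m, n_state) linear observation operator (assumed linear)
    obs_cov:      (m, m) symmetric observation error covariance
    Returns the (n_state, k) analysis ensemble.
    """
    k = ensemble.shape[1]
    mean_b = ensemble.mean(axis=1, keepdims=True)
    pert_b = ensemble - mean_b                       # background perturbations
    obs_pert = obs_operator @ pert_b                 # perturbations in obs space
    innovation = obs - (obs_operator @ mean_b).ravel()

    # c = Yb^T R^-1, using the symmetry of R
    c = np.linalg.solve(obs_cov, obs_pert).T

    # Eigen-decomposition of (k-1) I + Yb^T R^-1 Yb, a k-by-k symmetric matrix
    evals, evecs = np.linalg.eigh((k - 1) * np.eye(k) + c @ obs_pert)
    pa_tilde = evecs @ np.diag(1.0 / evals) @ evecs.T

    # Weights for the analysis mean and the symmetric square-root transform
    w_mean = pa_tilde @ c @ innovation
    w_pert = evecs @ np.diag(np.sqrt((k - 1) / evals)) @ evecs.T

    return mean_b + pert_b @ (w_pert + w_mean[:, None])


# Toy usage: a scalar state, 50 members, one direct observation of 2.0
rng = np.random.default_rng(0)
background = rng.normal(0.0, 1.0, size=(1, 50))
analysis = etkf_analysis(background, np.array([2.0]),
                         np.array([[1.0]]), np.array([[1.0]]))
print(background.mean(), analysis.mean())
```

All of the heavy linear algebra happens in the k-by-k ensemble space, so cost grows with ensemble size. In LETKF, this update is repeated independently at each grid point using only nearby observations, which is what lets it spread across a very large machine.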

Despite the variability in these projects, there are some common themes beyond the obvious. Both Japan’s Big Data Assimilation (BDA) project and the iAMAS system represent breakthroughs in high-resolution, real-time weather and climate modeling through the use of large-scale supercomputing. Japan’s BDA system uses phased array weather radar (PAWR) to feed real-time data into a weather model that updates every 30 seconds with 100-meter resolution, leveraging the K and Fugaku supercomputers for computational power. Similarly, iAMAS uses a multi-dimension-parallelism structure and other optimization techniques to run detailed global weather forecasts at a 3-km resolution on the Sunway supercomputer platform, utilizing a staggering 39 million processor cores.

Notably, both systems significantly reduce computational and input/output (I/O) costs and times, and they demonstrate the power of high-performance computing in tackling complex atmospheric simulations, including the integration of aerosol effects in iAMAS.

Both the BDA and iAMAS systems underscore the enormous potential in utilizing cutting-edge computing to address urgent global issues like climate change and extreme weather prediction.

The Gordon Bell Prize, therefore, not only incentivizes innovation in this field but also brings focus to the increasingly vital role that high-performance computing plays in grappling with complex, real-world problems.

Global climate change, on earth, is one of these important phenomena that we should seek to better understand and control (to the extent possible), because of its expected outsized impacts on the quality and quantity of our lives. I’ve told folks at past conferences that it could be as significant as if the laws of physics were changing, over time … (read on?)

If the gravitational constant were changing, or if Newton’s 1st law changed, we’d have to re-design and re-build a very substantial fraction of our infrastructure, at vast expense (we might have to make all chairs and tables stronger too, for example). Similarly, as long-term statistics of rainfall, wind, and temperature change over time, due to climate change, we become faced with having to re-design and rebuild our water supply and drainage infrastructures, those various buildings designed to withstand the “old” climate “sweet-spot” (e.g. for wind loads), HVAC systems, and agricultural production systems (among others), at vast expense. Not to mention folks forced to migrate because their land has turned either into an uninhabitable dust-bowling desert (west coasts), or a submarine habitat for aquatic species (east coasts).

I’m glad that GBP has a special new category focused on this challenge and am partial to continuum-based approaches, running on well-characterized machines for that (okay, some creativity could be nice too …). I’d love to see SCREAM running on Fugaku (the current HPCG leader)!

I wonder how well Mixed-Precision (MxP) compute and dataflow mapping could work to further improve performance and “tear down this wall” (of memory access) on these climate modeling challenges?

Interestingly (maybe) Europeans are putting together a Swiss (cheese!) version of the Venado rocket sleigh, in the Alps (GH200, but bigger, and maybe operational earlier) to replace Piz Daint (see TNP’s 04/12/21 “Nvidia Enters The Arms Race”) and do high-resolution weather and climate modeling (from 09/04/23: https://www.cscs.ch/science/earth-env-science/2023/researchers-develop-high-resolution-models-for-weather-and-climate-research ).

Could be a “high-wire” act to follow (e.g. at SC23, and then for GBP at SC24)!